
The PRISMA 2020 statement: an updated guideline for reporting systematic reviews


- Matthew J Page, senior research fellow (1),

- Joanne E McKenzie, associate professor (1),

- Patrick M Bossuyt, professor (2),

- Isabelle Boutron, professor (3),

- Tammy C Hoffmann, professor (4),

- Cynthia D Mulrow, professor (5),

- Larissa Shamseer, doctoral student (6),

- Jennifer M Tetzlaff, research product specialist (7),

- Elie A Akl, professor (8),

- Sue E Brennan, senior research fellow (1),

- Roger Chou, professor (9),

- Julie Glanville, associate director (10),

- Jeremy M Grimshaw, professor (11),

- Asbjørn Hróbjartsson, professor (12),

- Manoj M Lalu, associate scientist and assistant professor (13),

- Tianjing Li, associate professor (14),

- Elizabeth W Loder, professor (15),

- Evan Mayo-Wilson, associate professor (16),

- Steve McDonald, senior research fellow (1),

- Luke A McGuinness, research associate (17),

- Lesley A Stewart, professor and director (18),

- James Thomas, professor (19),

- Andrea C Tricco, scientist and associate professor (20),

- Vivian A Welch, associate professor (21),

- Penny Whiting, associate professor (17),

- David Moher, director and professor (22)

- 1 School of Public Health and Preventive Medicine, Monash University, Melbourne, Australia

- 2 Department of Clinical Epidemiology, Biostatistics and Bioinformatics, Amsterdam University Medical Centres, University of Amsterdam, Amsterdam, Netherlands

- 3 Université de Paris, Centre of Epidemiology and Statistics (CRESS), Inserm, F 75004 Paris, France

- 4 Institute for Evidence-Based Healthcare, Faculty of Health Sciences and Medicine, Bond University, Gold Coast, Australia

- 5 University of Texas Health Science Center at San Antonio, San Antonio, Texas, USA; Annals of Internal Medicine

- 6 Knowledge Translation Program, Li Ka Shing Knowledge Institute, Toronto, Canada; School of Epidemiology and Public Health, Faculty of Medicine, University of Ottawa, Ottawa, Canada

- 7 Evidence Partners, Ottawa, Canada

- 8 Clinical Research Institute, American University of Beirut, Beirut, Lebanon; Department of Health Research Methods, Evidence, and Impact, McMaster University, Hamilton, Ontario, Canada

- 9 Department of Medical Informatics and Clinical Epidemiology, Oregon Health & Science University, Portland, Oregon, USA

- 10 York Health Economics Consortium (YHEC Ltd), University of York, York, UK

- 11 Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, Canada; School of Epidemiology and Public Health, University of Ottawa, Ottawa, Canada; Department of Medicine, University of Ottawa, Ottawa, Canada

- 12 Centre for Evidence-Based Medicine Odense (CEBMO) and Cochrane Denmark, Department of Clinical Research, University of Southern Denmark, Odense, Denmark; Open Patient data Exploratory Network (OPEN), Odense University Hospital, Odense, Denmark

- 13 Department of Anesthesiology and Pain Medicine, The Ottawa Hospital, Ottawa, Canada; Clinical Epidemiology Program, Blueprint Translational Research Group, Ottawa Hospital Research Institute, Ottawa, Canada; Regenerative Medicine Program, Ottawa Hospital Research Institute, Ottawa, Canada

- 14 Department of Ophthalmology, School of Medicine, University of Colorado Denver, Denver, Colorado, USA; Department of Epidemiology, Johns Hopkins Bloomberg School of Public Health, Baltimore, Maryland, USA

- 15 Division of Headache, Department of Neurology, Brigham and Women's Hospital, Harvard Medical School, Boston, Massachusetts, USA; Head of Research, The BMJ, London, UK

- 16 Department of Epidemiology and Biostatistics, Indiana University School of Public Health-Bloomington, Bloomington, Indiana, USA

- 17 Population Health Sciences, Bristol Medical School, University of Bristol, Bristol, UK

- 18 Centre for Reviews and Dissemination, University of York, York, UK

- 19 EPPI-Centre, UCL Social Research Institute, University College London, London, UK

- 20 Li Ka Shing Knowledge Institute of St. Michael's Hospital, Unity Health Toronto, Toronto, Canada; Epidemiology Division of the Dalla Lana School of Public Health and the Institute of Health Management, Policy, and Evaluation, University of Toronto, Toronto, Canada; Queen's Collaboration for Health Care Quality Joanna Briggs Institute Centre of Excellence, Queen's University, Kingston, Canada

- 21 Methods Centre, Bruyère Research Institute, Ottawa, Ontario, Canada; School of Epidemiology and Public Health, Faculty of Medicine, University of Ottawa, Ottawa, Canada

- 22 Centre for Journalology, Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, Canada; School of Epidemiology and Public Health, Faculty of Medicine, University of Ottawa, Ottawa, Canada

- Correspondence to: M J Page matthew.page{at}monash.edu

- Accepted 4 January 2021

The Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) statement, published in 2009, was designed to help systematic reviewers transparently report why the review was done, what the authors did, and what they found. Over the past decade, advances in systematic review methodology and terminology have necessitated an update to the guideline. The PRISMA 2020 statement replaces the 2009 statement and includes new reporting guidance that reflects advances in methods to identify, select, appraise, and synthesise studies. The structure and presentation of the items have been modified to facilitate implementation. In this article, we present the PRISMA 2020 27-item checklist, an expanded checklist that details reporting recommendations for each item, the PRISMA 2020 abstract checklist, and the revised flow diagrams for original and updated reviews.

Systematic reviews serve many critical roles. They can provide syntheses of the state of knowledge in a field, from which future research priorities can be identified; they can address questions that otherwise could not be answered by individual studies; they can identify problems in primary research that should be rectified in future studies; and they can generate or evaluate theories about how or why phenomena occur. Systematic reviews therefore generate various types of knowledge for different users of reviews (such as patients, healthcare providers, researchers, and policy makers). 1 2 To ensure a systematic review is valuable to users, authors should prepare a transparent, complete, and accurate account of why the review was done, what they did (such as how studies were identified and selected) and what they found (such as characteristics of contributing studies and results of meta-analyses). Up-to-date reporting guidance helps authors achieve this. 3

The Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) statement published in 2009 (hereafter referred to as PRISMA 2009) 4 5 6 7 8 9 10 is a reporting guideline designed to address poor reporting of systematic reviews. 11 The PRISMA 2009 statement comprised a checklist of 27 items recommended for reporting in systematic reviews and an “explanation and elaboration” paper 12 13 14 15 16 providing additional reporting guidance for each item, along with exemplars of reporting. The recommendations have been widely endorsed and adopted, as evidenced by the statement's co-publication in multiple journals, citation in over 60 000 reports (Scopus, August 2020), endorsement from almost 200 journals and systematic review organisations, and adoption in various disciplines. Evidence from observational studies suggests that use of the PRISMA 2009 statement is associated with more complete reporting of systematic reviews, 17 18 19 20 although more could be done to improve adherence to the guideline. 21

Many innovations in the conduct of systematic reviews have occurred since publication of the PRISMA 2009 statement. For example, technological advances have enabled the use of natural language processing and machine learning to identify relevant evidence, 22 23 24 methods have been proposed to synthesise and present findings when meta-analysis is not possible or appropriate, 25 26 27 and new methods have been developed to assess the risk of bias in results of included studies. 28 29 Evidence on sources of bias in systematic reviews has accrued, culminating in the development of new tools to appraise the conduct of systematic reviews. 30 31 Terminology used to describe particular review processes has also evolved, as in the shift from assessing “quality” to assessing “certainty” in the body of evidence. 32 In addition, the publishing landscape has transformed, with multiple avenues now available for registering and disseminating systematic review protocols, 33 34 disseminating reports of systematic reviews, and sharing data and materials, such as preprint servers and publicly accessible repositories. Capturing these advances in the reporting of systematic reviews necessitated an update to the PRISMA 2009 statement.

Summary points

To ensure a systematic review is valuable to users, authors should prepare a transparent, complete, and accurate account of why the review was done, what they did, and what they found

The PRISMA 2020 statement provides updated reporting guidance for systematic reviews that reflects advances in methods to identify, select, appraise, and synthesise studies

The PRISMA 2020 statement consists of a 27-item checklist, an expanded checklist that details reporting recommendations for each item, the PRISMA 2020 abstract checklist, and revised flow diagrams for original and updated reviews

We anticipate that the PRISMA 2020 statement will benefit authors, editors, and peer reviewers of systematic reviews, and different users of reviews, including guideline developers, policy makers, healthcare providers, patients, and other stakeholders

Development of PRISMA 2020

A complete description of the methods used to develop PRISMA 2020 is available elsewhere. 35 We identified PRISMA 2009 items that were often reported incompletely by examining the results of studies investigating the transparency of reporting of published reviews. 17 21 36 37 We identified possible modifications to the PRISMA 2009 statement by reviewing 60 documents providing reporting guidance for systematic reviews (including reporting guidelines, handbooks, tools, and meta-research studies). 38 These reviews of the literature were used to inform the content of a survey presenting possible modifications to the 27 items in PRISMA 2009 and possible additional items. Respondents were asked whether they believed we should keep each PRISMA 2009 item as is, modify it, or remove it, and whether we should add each additional item. Systematic review methodologists and journal editors were invited to complete the online survey (110 of 220 invited responded). We discussed proposed content and wording of the PRISMA 2020 statement, as informed by the review and survey results, at a 21-member, two-day, in-person meeting in September 2018 in Edinburgh, Scotland. Throughout 2019 and 2020, we circulated an initial draft and five revisions of the checklist and explanation and elaboration paper to co-authors for feedback. In April 2020, we invited 22 systematic reviewers who had expressed interest in providing feedback on the PRISMA 2020 checklist to share their views (via an online survey) on the layout and terminology used in a preliminary version of the checklist. Feedback was received from 15 individuals and considered by the first author, and any revisions deemed necessary were incorporated before the final version was approved and endorsed by all co-authors.

The PRISMA 2020 statement

Scope of the guideline

The PRISMA 2020 statement has been designed primarily for systematic reviews of studies that evaluate the effects of health interventions, irrespective of the design of the included studies. However, the checklist items are applicable to reports of systematic reviews evaluating other interventions (such as social or educational interventions), and many items are applicable to systematic reviews with objectives other than evaluating interventions (such as evaluating aetiology, prevalence, or prognosis). PRISMA 2020 is intended for use in systematic reviews that include synthesis (such as pairwise meta-analysis or other statistical synthesis methods) or do not include synthesis (for example, because only one eligible study is identified). The PRISMA 2020 items are relevant for mixed-methods systematic reviews (which include quantitative and qualitative studies), but reporting guidelines addressing the presentation and synthesis of qualitative data should also be consulted. 39 40 PRISMA 2020 can be used for original systematic reviews, updated systematic reviews, or continually updated (“living”) systematic reviews. However, for updated and living systematic reviews, there may be some additional considerations that need to be addressed. Where there is relevant content from other reporting guidelines, we reference these guidelines within the items in the explanation and elaboration paper 41 (such as PRISMA-Search 42 in items 6 and 7, Synthesis without meta-analysis (SWiM) reporting guideline 27 in item 13d). Box 1 includes a glossary of terms used throughout the PRISMA 2020 statement.

Glossary of terms

Systematic review —A review that uses explicit, systematic methods to collate and synthesise findings of studies that address a clearly formulated question 43

Statistical synthesis —The combination of quantitative results of two or more studies. This encompasses meta-analysis of effect estimates (described below) and other methods, such as combining P values, calculating the range and distribution of observed effects, and vote counting based on the direction of effect (see McKenzie and Brennan 25 for a description of each method)

Meta-analysis of effect estimates —A statistical technique used to synthesise results when study effect estimates and their variances are available, yielding a quantitative summary of results 25

Outcome —An event or measurement collected for participants in a study (such as quality of life, mortality)

Result —The combination of a point estimate (such as a mean difference, risk ratio, or proportion) and a measure of its precision (such as a confidence/credible interval) for a particular outcome

Report —A document (paper or electronic) supplying information about a particular study. It could be a journal article, preprint, conference abstract, study register entry, clinical study report, dissertation, unpublished manuscript, government report, or any other document providing relevant information

Record —The title or abstract (or both) of a report indexed in a database or website (such as a title or abstract for an article indexed in Medline). Records that refer to the same report (such as the same journal article) are “duplicates”; however, records that refer to reports that are merely similar (such as a similar abstract submitted to two different conferences) should be considered unique.

Study —An investigation, such as a clinical trial, that includes a defined group of participants and one or more interventions and outcomes. A “study” might have multiple reports. For example, reports could include the protocol, statistical analysis plan, baseline characteristics, results for the primary outcome, results for harms, results for secondary outcomes, and results for additional mediator and moderator analyses
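To make the glossary's definition of a meta-analysis of effect estimates concrete, the following is a minimal sketch of one such technique: common-effect (fixed-effect) inverse-variance pooling. It assumes each study supplies an effect estimate on an additive scale (for example, a mean difference or a log risk ratio) together with its within-study variance. The function name `inverse_variance_pool` is hypothetical. PRISMA 2020 does not prescribe this or any other synthesis method, and random-effects models are often used instead.

```python
import math
from typing import Sequence, Tuple


def inverse_variance_pool(
    estimates: Sequence[float], variances: Sequence[float]
) -> Tuple[float, float, Tuple[float, float]]:
    """Common-effect inverse-variance meta-analysis (illustrative sketch only).

    estimates: per-study effect estimates on an additive scale
               (e.g. mean differences or log risk ratios).
    variances: the corresponding within-study variances.
    Returns (pooled estimate, standard error, 95% confidence interval).
    """
    if not estimates or len(estimates) != len(variances):
        raise ValueError("need at least one study and one variance per estimate")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")

    # Each study is weighted by the inverse of its variance, so more
    # precise studies contribute more to the summary.
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_weight

    # Standard error of the pooled estimate under the common-effect model.
    se = math.sqrt(1.0 / total_weight)

    # Normal-approximation 95% interval (1.96 is the 97.5th centile of N(0, 1)).
    z = 1.959963984540054
    return pooled, se, (pooled - z * se, pooled + z * se)


if __name__ == "__main__":
    # Two hypothetical studies with equal precision: the pooled value is their mean.
    pooled, se, ci = inverse_variance_pool([0.2, 0.4], [0.04, 0.04])
    print(f"pooled={pooled:.3f} se={se:.3f} 95% CI=({ci[0]:.3f}, {ci[1]:.3f})")
```

The pooled point estimate and its confidence interval together form a "result" in the sense defined above.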

PRISMA 2020 is not intended to guide systematic review conduct, for which comprehensive resources are available. 43 44 45 46 However, familiarity with PRISMA 2020 is useful when planning and conducting systematic reviews to ensure that all recommended information is captured. PRISMA 2020 should not be used to assess the conduct or methodological quality of systematic reviews; other tools exist for this purpose. 30 31 Furthermore, PRISMA 2020 is not intended to inform the reporting of systematic review protocols, for which a separate statement is available (PRISMA for Protocols (PRISMA-P) 2015 statement 47 48). Finally, extensions to the PRISMA 2009 statement have been developed to guide reporting of network meta-analyses, 49 meta-analyses of individual participant data, 50 systematic reviews of harms, 51 systematic reviews of diagnostic test accuracy studies, 52 and scoping reviews 53; for these types of reviews we recommend authors report their review in accordance with the recommendations in PRISMA 2020 along with the guidance specific to the extension.

How to use PRISMA 2020

The PRISMA 2020 statement (including the checklists, explanation and elaboration, and flow diagram) replaces the PRISMA 2009 statement, which should no longer be used. Box 2 summarises noteworthy changes from the PRISMA 2009 statement. The PRISMA 2020 checklist includes seven sections with 27 items, some of which include sub-items (table 1). A checklist for journal and conference abstracts for systematic reviews is included in PRISMA 2020. This abstract checklist is an update of the 2013 PRISMA for Abstracts statement, 54 reflecting new and modified content in PRISMA 2020 (table 2). A template PRISMA flow diagram is provided, which can be modified depending on whether the systematic review is original or updated (fig 1).

Noteworthy changes to the PRISMA 2009 statement

Inclusion of the abstract reporting checklist within PRISMA 2020 (see item #2 and table 2).

Movement of the ‘Protocol and registration’ item from the start of the Methods section of the checklist to a new Other section, with addition of a sub-item recommending authors describe amendments to information provided at registration or in the protocol (see item #24a-24c).

Modification of the ‘Search’ item to recommend authors present full search strategies for all databases, registers and websites searched, not just at least one database (see item #7).

Modification of the ‘Study selection’ item in the Methods section to emphasise the reporting of how many reviewers screened each record and each report retrieved, whether they worked independently, and if applicable, details of automation tools used in the process (see item #8).

Addition of a sub-item to the ‘Data items’ item recommending authors report how outcomes were defined, which results were sought, and methods for selecting a subset of results from included studies (see item #10a).

Splitting of the ‘Synthesis of results’ item in the Methods section into six sub-items recommending authors describe: the processes used to decide which studies were eligible for each synthesis; any methods required to prepare the data for synthesis; any methods used to tabulate or visually display results of individual studies and syntheses; any methods used to synthesise results; any methods used to explore possible causes of heterogeneity among study results (such as subgroup analysis, meta-regression); and any sensitivity analyses used to assess robustness of the synthesised results (see item #13a-13f).

Addition of a sub-item to the ‘Study selection’ item in the Results section recommending authors cite studies that might appear to meet the inclusion criteria, but which were excluded, and explain why they were excluded (see item #16b).

Splitting of the ‘Synthesis of results’ item in the Results section into four sub-items recommending authors: briefly summarise the characteristics and risk of bias among studies contributing to the synthesis; present results of all statistical syntheses conducted; present results of any investigations of possible causes of heterogeneity among study results; and present results of any sensitivity analyses (see item #20a-20d).

Addition of new items recommending authors report methods for and results of an assessment of certainty (or confidence) in the body of evidence for an outcome (see items #15 and #22).

Addition of a new item recommending authors declare any competing interests (see item #26).

Addition of a new item recommending authors indicate whether data, analytic code and other materials used in the review are publicly available and if so, where they can be found (see item #27).

Table 1: PRISMA 2020 item checklist


Table 2: PRISMA 2020 for Abstracts checklist*

Fig 1: PRISMA 2020 flow diagram template for systematic reviews. The new design is adapted from flow diagrams proposed by Boers, 55 Mayo-Wilson et al. 56 and Stovold et al. 57 The boxes in grey should only be completed if applicable; otherwise they should be removed from the flow diagram. Note that a “report” could be a journal article, preprint, conference abstract, study register entry, clinical study report, dissertation, unpublished manuscript, government report or any other document providing relevant information.
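Several counts in the flow diagram follow from others by subtraction, and checking that arithmetic before drawing the diagram can catch transcription errors. The following is a minimal sketch for a new review that searched only databases and registers. It is not part of PRISMA 2020. The class and field names (`FlowCounts`, `records_identified`, and so on) are hypothetical labels that loosely follow the template's boxes. The sketch deliberately does not compute the number of studies included: a study can have several reports, so that number has to be counted separately.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class FlowCounts:
    """Hypothetical input counts for a new review (databases and registers only)."""
    records_identified: int
    duplicates_removed: int
    removed_by_automation: int   # records marked ineligible by automation tools
    removed_other_reasons: int
    records_excluded: int        # excluded at title/abstract screening
    reports_sought: int          # entered directly: records and reports need not map 1:1
    reports_not_retrieved: int
    reports_excluded: int        # excluded after full-text assessment

    def derived(self) -> Dict[str, int]:
        """Compute the counts that follow by subtraction and check their consistency."""
        removed_before_screening = (
            self.duplicates_removed
            + self.removed_by_automation
            + self.removed_other_reasons
        )
        out = {
            "records_screened": self.records_identified - removed_before_screening,
            "reports_assessed": self.reports_sought - self.reports_not_retrieved,
        }
        out["reports_included"] = out["reports_assessed"] - self.reports_excluded

        # A negative count means the inputs cannot all be right.
        for name, n in out.items():
            if n < 0:
                raise ValueError(f"{name} is negative ({n}); check the input counts")
        if self.records_excluded > out["records_screened"]:
            raise ValueError("more records excluded than screened")
        return out


if __name__ == "__main__":
    # Made-up numbers for illustration only.
    counts = FlowCounts(1200, 300, 50, 0, 760, 90, 5, 60)
    print(counts.derived())
```

Reports sought for retrieval are entered directly rather than derived, because one screened record does not always correspond to exactly one report.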


We recommend authors refer to PRISMA 2020 early in the writing process, because prospective consideration of the items may help to ensure that all the items are addressed. To help keep track of which items have been reported, the PRISMA statement website (http://www.prisma-statement.org/) includes fillable templates of the checklists to download and complete (also available in the data supplement on bmj.com). We have also created a web application that allows users to complete the checklist via a user-friendly interface 58 (available at https://prisma.shinyapps.io/checklist/ and adapted from the Transparency Checklist app 59). The completed checklist can be exported to Word or PDF. Editable templates of the flow diagram can also be downloaded from the PRISMA statement website.

We have prepared an updated explanation and elaboration paper, in which we explain why reporting of each item is recommended and present bullet points that detail the reporting recommendations (which we refer to as elements). 41 The bullet-point structure is new to PRISMA 2020 and has been adopted to facilitate implementation of the guidance. 60 61 An expanded checklist, which comprises an abridged version of the elements presented in the explanation and elaboration paper, with references and some examples removed, is available in the data supplement on bmj.com. Consulting the explanation and elaboration paper is recommended if further clarity or information is required.

Journals and publishers might impose word and section limits, and limits on the number of tables and figures allowed in the main report. In such cases, if the relevant information for some items already appears in a publicly accessible review protocol, referring to the protocol may suffice. Alternatively, placing detailed descriptions of the methods used or additional results (such as for less critical outcomes) in supplementary files is recommended. Ideally, supplementary files should be deposited in a general-purpose or institutional open-access repository that provides free and permanent access to the material (such as Open Science Framework, Dryad, figshare). A reference or link to the additional information should be included in the main report. Finally, although PRISMA 2020 provides a template for where information might be located, the suggested location should not be seen as prescriptive; the guiding principle is to ensure the information is reported.

Use of PRISMA 2020 has the potential to benefit many stakeholders. Complete reporting allows readers to assess the appropriateness of the methods, and therefore the trustworthiness of the findings. Presenting and summarising characteristics of studies contributing to a synthesis allows healthcare providers and policy makers to evaluate the applicability of the findings to their setting. Describing the certainty in the body of evidence for an outcome and the implications of findings should help policy makers, managers, and other decision makers formulate appropriate recommendations for practice or policy. Complete reporting of all PRISMA 2020 items also facilitates replication and review updates, as well as inclusion of systematic reviews in overviews (of systematic reviews) and guidelines, so teams can leverage work that is already done and decrease research waste. 36 62 63

We updated the PRISMA 2009 statement by adapting the EQUATOR Network’s guidance for developing health research reporting guidelines. 64 We evaluated the reporting completeness of published systematic reviews, 17 21 36 37 reviewed the items included in other documents providing guidance for systematic reviews, 38 surveyed systematic review methodologists and journal editors for their views on how to revise the original PRISMA statement, 35 discussed the findings at an in-person meeting, and prepared this document through an iterative process. Our recommendations are informed by the reviews and survey conducted before the in-person meeting, theoretical considerations about which items facilitate replication and help users assess the risk of bias and applicability of systematic reviews, and co-authors’ experience with authoring and using systematic reviews.

Various strategies to increase the use of reporting guidelines and improve reporting have been proposed. They include educators introducing reporting guidelines into graduate curricula to promote good reporting habits of early career scientists 65; journal editors and regulators endorsing use of reporting guidelines 18; peer reviewers evaluating adherence to reporting guidelines 61 66; journals requiring authors to indicate where in their manuscript they have adhered to each reporting item 67; and authors using online writing tools that prompt complete reporting at the writing stage. 60 Multi-pronged interventions, which combine more than one of these strategies, may be more effective (such as completion of checklists coupled with editorial checks). 68 However, of 31 interventions proposed to increase adherence to reporting guidelines, the effects of only 11 have been evaluated, mostly in observational studies at high risk of bias due to confounding. 69 It is therefore unclear which strategies should be used. Future research might explore barriers and facilitators to the use of PRISMA 2020 by authors, editors, and peer reviewers, design interventions that address the identified barriers, and evaluate those interventions using randomised trials. To inform possible revisions to the guideline, it would also be valuable to conduct think-aloud studies 70 to understand how systematic reviewers interpret the items, and reliability studies to identify items that are interpreted inconsistently.

We encourage readers to submit evidence that informs any of the recommendations in PRISMA 2020 (via the PRISMA statement website: http://www.prisma-statement.org/). To enhance accessibility of PRISMA 2020, several translations of the guideline are under way (see available translations at the PRISMA statement website). We encourage journal editors and publishers to raise awareness of PRISMA 2020 (for example, by referring to it in journal “Instructions to authors”), endorse its use, advise editors and peer reviewers to evaluate submitted systematic reviews against the PRISMA 2020 checklists, and change journal policies to accommodate the new reporting recommendations. We recommend existing PRISMA extensions 47 49 50 51 52 53 71 72 be updated to reflect PRISMA 2020 and advise developers of new PRISMA extensions to use PRISMA 2020 as the foundation document.

We anticipate that the PRISMA 2020 statement will benefit authors, editors, and peer reviewers of systematic reviews, and different users of reviews, including guideline developers, policy makers, healthcare providers, patients, and other stakeholders. Ultimately, we hope that uptake of the guideline will lead to more transparent, complete, and accurate reporting of systematic reviews, thus facilitating evidence based decision making.

Acknowledgments

We dedicate this paper to the late Douglas G Altman and Alessandro Liberati, whose contributions were fundamental to the development and implementation of the original PRISMA statement.

We thank the following contributors who completed the survey to inform discussions at the development meeting: Xavier Armoiry, Edoardo Aromataris, Ana Patricia Ayala, Ethan M Balk, Virginia Barbour, Elaine Beller, Jesse A Berlin, Lisa Bero, Zhao-Xiang Bian, Jean Joel Bigna, Ferrán Catalá-López, Anna Chaimani, Mike Clarke, Tammy Clifford, Ioana A Cristea, Miranda Cumpston, Sofia Dias, Corinna Dressler, Ivan D Florez, Joel J Gagnier, Chantelle Garritty, Long Ge, Davina Ghersi, Sean Grant, Gordon Guyatt, Neal R Haddaway, Julian PT Higgins, Sally Hopewell, Brian Hutton, Jamie J Kirkham, Jos Kleijnen, Julia Koricheva, Joey SW Kwong, Toby J Lasserson, Julia H Littell, Yoon K Loke, Malcolm R Macleod, Chris G Maher, Ana Marušic, Dimitris Mavridis, Jessie McGowan, Matthew DF McInnes, Philippa Middleton, Karel G Moons, Zachary Munn, Jane Noyes, Barbara Nußbaumer-Streit, Donald L Patrick, Tatiana Pereira-Cenci, Ba’ Pham, Bob Phillips, Dawid Pieper, Michelle Pollock, Daniel S Quintana, Drummond Rennie, Melissa L Rethlefsen, Hannah R Rothstein, Maroeska M Rovers, Rebecca Ryan, Georgia Salanti, Ian J Saldanha, Margaret Sampson, Nancy Santesso, Rafael Sarkis-Onofre, Jelena Savović, Christopher H Schmid, Kenneth F Schulz, Guido Schwarzer, Beverley J Shea, Paul G Shekelle, Farhad Shokraneh, Mark Simmonds, Nicole Skoetz, Sharon E Straus, Anneliese Synnot, Emily E Tanner-Smith, Brett D Thombs, Hilary Thomson, Alexander Tsertsvadze, Peter Tugwell, Tari Turner, Lesley Uttley, Jeffrey C Valentine, Matt Vassar, Areti Angeliki Veroniki, Meera Viswanathan, Cole Wayant, Paul Whaley, and Kehu Yang. We thank the following contributors who provided feedback on a preliminary version of the PRISMA 2020 checklist: Jo Abbott, Fionn Büttner, Patricia Correia-Santos, Victoria Freeman, Emily A Hennessy, Rakibul Islam, Amalia (Emily) Karahalios, Kasper Krommes, Andreas Lundh, Dafne Port Nascimento, Davina Robson, Catherine Schenck-Yglesias, Mary M Scott, Sarah Tanveer and Pavel Zhelnov. 
We thank Abigail H Goben, Melissa L Rethlefsen, Tanja Rombey, Anna Scott, and Farhad Shokraneh for their helpful comments on the preprints of the PRISMA 2020 papers. We thank Edoardo Aromataris, Stephanie Chang, Toby Lasserson and David Schriger for their helpful peer review comments on the PRISMA 2020 papers.

Contributors: JEM and DM are joint senior authors. MJP, JEM, PMB, IB, TCH, CDM, LS, and DM conceived this paper and designed the literature review and survey conducted to inform the guideline content. MJP conducted the literature review, administered the survey and analysed the data for both. MJP prepared all materials for the development meeting. MJP and JEM presented proposals at the development meeting. All authors except for TCH, JMT, EAA, SEB, and LAM attended the development meeting. MJP and JEM took and consolidated notes from the development meeting. MJP and JEM led the drafting and editing of the article. JEM, PMB, IB, TCH, LS, JMT, EAA, SEB, RC, JG, AH, TL, EMW, SM, LAM, LAS, JT, ACT, PW, and DM drafted particular sections of the article. All authors were involved in revising the article critically for important intellectual content. All authors approved the final version of the article. MJP is the guarantor of this work. The corresponding author attests that all listed authors meet authorship criteria and that no others meeting the criteria have been omitted.

Funding: There was no direct funding for this research. MJP is supported by an Australian Research Council Discovery Early Career Researcher Award (DE200101618) and was previously supported by an Australian National Health and Medical Research Council (NHMRC) Early Career Fellowship (1088535) during the conduct of this research. JEM is supported by an Australian NHMRC Career Development Fellowship (1143429). TCH is supported by an Australian NHMRC Senior Research Fellowship (1154607). JMT is supported by Evidence Partners Inc. JMG is supported by a Tier 1 Canada Research Chair in Health Knowledge Transfer and Uptake. MML is supported by The Ottawa Hospital Anaesthesia Alternate Funds Association and a Faculty of Medicine Junior Research Chair. TL is supported by funding from the National Eye Institute (UG1EY020522), National Institutes of Health, United States. LAM is supported by a National Institute for Health Research Doctoral Research Fellowship (DRF-2018-11-ST2-048). ACT is supported by a Tier 2 Canada Research Chair in Knowledge Synthesis. DM is supported in part by a University Research Chair, University of Ottawa. The funders had no role in considering the study design or in the collection, analysis, interpretation of data, writing of the report, or decision to submit the article for publication.

Competing interests: All authors have completed the ICMJE uniform disclosure form at http://www.icmje.org/conflicts-of-interest/ and declare: EL is head of research for the BMJ; MJP is an editorial board member for PLOS Medicine; ACT is an associate editor and MJP, TL, EMW, and DM are editorial board members for the Journal of Clinical Epidemiology; DM and LAS were editors in chief, LS, JMT, and ACT are associate editors, and JG is an editorial board member for Systematic Reviews. None of these authors were involved in the peer review process or decision to publish. TCH has received personal fees from Elsevier outside the submitted work. EMW has received personal fees from the American Journal for Public Health, for which he is the editor for systematic reviews. VW is editor in chief of the Campbell Collaboration, which produces systematic reviews, and co-convenor of the Campbell and Cochrane equity methods group. DM is chair of the EQUATOR Network, IB is adjunct director of the French EQUATOR Centre and TCH is co-director of the Australasian EQUATOR Centre, which advocates for the use of reporting guidelines to improve the quality of reporting in research articles. JMT received salary from Evidence Partners, creator of DistillerSR software for systematic reviews; Evidence Partners was not involved in the design or outcomes of the statement, and the views expressed solely represent those of the author.

Provenance and peer review: Not commissioned; externally peer reviewed.

Patient and public involvement: Patients and the public were not involved in this methodological research. We plan to disseminate the research widely, including to community participants in evidence synthesis organisations.

This is an Open Access article distributed in accordance with the terms of the Creative Commons Attribution (CC BY 4.0) license, which permits others to distribute, remix, adapt and build upon this work, for commercial use, provided the original work is properly cited. See: http://creativecommons.org/licenses/by/4.0/ .

REVIEW article

Emotions in self-regulated learning: a critical literature review and meta-analysis.

- 1 Department of Education and Human Services, Lehigh University, Bethlehem, PA, United States

- 2 Department of Educational and Counselling Psychology, McGill University, Montreal, QC, Canada

- 3 Department of Community and Population Health, Lehigh University, Bethlehem, PA, United States

Emotion has been recognized as an important component in the framework of self-regulated learning (SRL) over the past decade. Researchers explore emotions and SRL at two levels: emotions are studied as traits or states, whereas SRL is deemed to function at two levels, Person and Task × Person. However, limited research exists on the complex relationships between emotions and SRL at these two levels, and theoretical inquiries and empirical evidence about the role of emotions in SRL remain somewhat fragmented. This review aims to illustrate the role of both trait and state emotions in SRL at the Person and Task × Person levels. Moreover, we conducted a meta-analysis synthesizing 23 empirical studies published between 2009 and 2020 to seek evidence about the role of emotions in SRL. Based on the review and the meta-analysis, we propose an integrated theoretical framework of emotions in SRL. We also propose several directions for future research, including collecting multimodal multichannel data to capture emotions and SRL. This paper lays a foundation for a comprehensive understanding of the role of emotions in SRL and raises important questions for future investigation.

1. Introduction

Students experience a variety of emotions, which can be either beneficial or detrimental to their learning processes and performance. Positive emotions have a considerable impact on students’ academic achievement and can ultimately lead to success in the academic domain ( Pekrun et al., 2009 ). In contrast, negative emotions may impede students’ learning processes. For example, negative emotions (e.g., anger, anxiety, and boredom) have been found to be negatively associated with students’ motivation, learning strategies, and cognitive resources ( Pekrun et al., 2002 ). Given the rapid growth of research on emotions in education, emotions have been incorporated into various educational theories, especially theoretical frameworks of self-regulated learning (SRL).

SRL refers to the thoughts, feelings, and behaviors that learners plan and adjust to attain learning goals ( Zimmerman, 2000 ). SRL theories account for the cognitive, metacognitive, motivational, and emotional processes and strategies that characterize learners’ efforts to build sophisticated mental models during learning ( Pintrich, 2000 ; Winne and Perry, 2000 ). Although theorists emphasize different aspects of SRL, most include emotions as one component of SRL ( Boekaerts, 1996 ; Efklides, 2011 ). Emotions are generally considered contributing factors that enhance or undermine the use of superficial or deep learning strategies in SRL. A growing number of empirical studies support the significance of emotions in SRL by examining the effects of emotions on SRL strategies (e.g., Pekrun et al., 2010 ). However, both theoretical inquiries and empirical evidence about the role of emotions in SRL remain fragmented, and the field still needs a comprehensive framework that explains the complex connections between emotions and SRL. This paper addresses two research questions: (1) Which theories explain the complex relationships between academic emotions and SRL? (2) What empirical evidence exists to support the relationships between academic emotions and SRL? Our goal is to synthesize current theoretical frameworks and empirical evidence in order to propose a model that underpins the link between academic emotions and SRL in individualized learning environments.

2. Academic emotions: What are they?

Academic emotion is an important dimension of self-regulated learning that researchers should consider when focusing on within-individual factors influencing learning ( Ben-Eliyahu, 2019 ). Academic emotions are no longer seen as mere disruptions that learners should avoid or suppress ( Shuman and Scherer, 2014 ). Depending on the specific emotion and situation, academic emotions can be beneficial or harmful, pleasant or unpleasant, and activating or deactivating.

2.1. Taxonomy of emotions

Researchers generally categorize emotions according to their object focus (the stimulus of the emotion), valence (positive or negative), and degree of activation (activating or deactivating). Based on object focus, emotions in learning contexts can be distinguished as achievement emotions, epistemic emotions, topic emotions, and social emotions ( Pekrun and Linnenbrink-Garcia, 2012 ). This review focuses on individual emotions, i.e., achievement and epistemic emotions that occur in individualized learning environments, rather than social emotions that arise in group learning environments.

Achievement emotions pertain to achievement activities or outcomes that are typically judged by competence-based standards; they include anxiety, enjoyment, hope, pride, relief, anger, shame, hopelessness, and boredom ( Pekrun, 2006 ). Epistemic emotions are triggered by the knowledge and knowledge-generating qualities of cognitive tasks and activities ( Trevors et al., 2016 ; Pekrun et al., 2017 ). For instance, when personal knowledge conflicts with external knowledge, a situation known as cognitive incongruity, emotions may be activated by the epistemic nature of the task ( Muis et al., 2015a ). Such cognitive incongruity may cause surprise, curiosity, enjoyment, confusion, anxiety, frustration, or boredom. Achievement and epistemic emotions overlap ( Pekrun and Stephens, 2012 ). For example, a student’s enjoyment can be an achievement emotion if it focuses on personal success or an epistemic emotion if it stems from cognitive incongruity. Both types of emotions are pervasive across learning situations and have significant influences on learning ( Sinatra et al., 2015 ). To evaluate these emotions in SRL, we must also consider the levels and organization of emotions, as Rosenberg (1998) suggested.
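Section 2.1 classifies emotions by valence and activation; for the achievement emotions listed above, these two dimensions can be encoded as a small lookup table. The sketch below is an illustration under stated assumptions: the class and function names are hypothetical, and the placement of each emotion follows the widely cited valence × activation grid of Pekrun's control-value theory rather than any coding reported in this review. Epistemic emotions are left out because their valence varies across learners and situations.

```python
from dataclasses import dataclass
from enum import Enum


class Valence(Enum):
    POSITIVE = "positive"
    NEGATIVE = "negative"


class Activation(Enum):
    ACTIVATING = "activating"
    DEACTIVATING = "deactivating"


@dataclass(frozen=True)
class AchievementEmotion:
    name: str
    valence: Valence
    activation: Activation


# Achievement emotions named in Section 2.1, placed in Pekrun's valence x activation
# grid (illustrative encoding; not data coded in the review).
ACHIEVEMENT_EMOTIONS = [
    AchievementEmotion("enjoyment", Valence.POSITIVE, Activation.ACTIVATING),
    AchievementEmotion("hope", Valence.POSITIVE, Activation.ACTIVATING),
    AchievementEmotion("pride", Valence.POSITIVE, Activation.ACTIVATING),
    AchievementEmotion("relief", Valence.POSITIVE, Activation.DEACTIVATING),
    AchievementEmotion("anger", Valence.NEGATIVE, Activation.ACTIVATING),
    AchievementEmotion("anxiety", Valence.NEGATIVE, Activation.ACTIVATING),
    AchievementEmotion("shame", Valence.NEGATIVE, Activation.ACTIVATING),
    AchievementEmotion("hopelessness", Valence.NEGATIVE, Activation.DEACTIVATING),
    AchievementEmotion("boredom", Valence.NEGATIVE, Activation.DEACTIVATING),
]


def emotions_in_cell(valence: Valence, activation: Activation) -> list[str]:
    """Return the names of the emotions that fall into one cell of the grid."""
    return [e.name for e in ACHIEVEMENT_EMOTIONS
            if e.valence is valence and e.activation is activation]
```

For example, `emotions_in_cell(Valence.NEGATIVE, Activation.DEACTIVATING)` returns `['hopelessness', 'boredom']`. Using enums instead of free-text labels keeps the two dimensions separate, so each cell of the grid can be queried independently.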

2.2. Trait emotions and state emotions

According to Rosenberg’s (1998) seminal work, emotions can be distinguished as traits and states. Trait emotions reflect a relatively general and stable way of responding to the world, whereas state emotions are episodic, experiential, and contextual and can be influenced by situational cues ( Goetz et al., 2015 ). This distinction also applies to educational contexts, where trait-like academic emotions can be differentiated from state-like ones ( Pekrun et al., 2002 ). Trait-like emotions are typical emotional experiences pertaining to a specific course, an exam, or a class, whereas state-like emotions are momentary emotional experiences within a single episode of academic life ( Ahmed et al., 2013 ). The differences between trait and state emotions can be traced back to the factors that influence them. Trait emotions are derived from memory and are influenced by students’ subjective beliefs and semantic knowledge ( Robinson and Clore, 2002 ). For example, students who lack knowledge of a specific situation may report more emotions than those with sufficient or similar knowledge. In contrast, memory plays a less significant role in state emotions ( Bieg et al., 2013 ); the intra-individual variance of state emotions is influenced more by students’ interactions with the learning content and environment within a single learning episode. These distinctions are therefore essential for understanding the inconsistent self-reports that often arise when people are asked to report the feelings they generally experience in a course versus those they are currently experiencing ( Robinson and Clore, 2002 ). They can also help us better understand the role of emotions in SRL.

3. SRL: Two levels of development

SRL researchers characterize self-regulated learners according to their theoretical orientations. Winne (1997) first distinguished between SRL as an aptitude and SRL as an event. An aptitude is a relatively enduring attribute of a person, aggregated across multiple learning activities. For example, a student who reports a habit of memorizing everything can be predicted to be more inclined to use memorization strategies; however, this does not mean the student will use memorization in every SRL event. An event is a transient, continuous learning state with a clear starting point and endpoint; completing a task and finishing an exam are both examples of event-like SRL. Greene and Azevedo (2009) further identified 35 event-like SRL processes at the micro level, e.g., re-reading, reviewing notes, and hypothesizing. Moreover, Efklides (2011) articulated the difference between Person level SRL, represented by personal characteristics, and Task × Person level SRL, guided by the monitoring features of task processing. In sum, there are two levels of SRL: Person level SRL (or aptitude-like SRL) and Task × Person level SRL (or event-like SRL). The underlying premise is that different learning contexts, including the nature of tasks and the structure of subjects, can influence how learners regulate their learning ( Poitras and Lajoie, 2013 ). Both levels of SRL should therefore be acknowledged when reviewing the theoretical and empirical evidence on the role of emotions in SRL.

4. What do SRL models say about emotions in SRL?

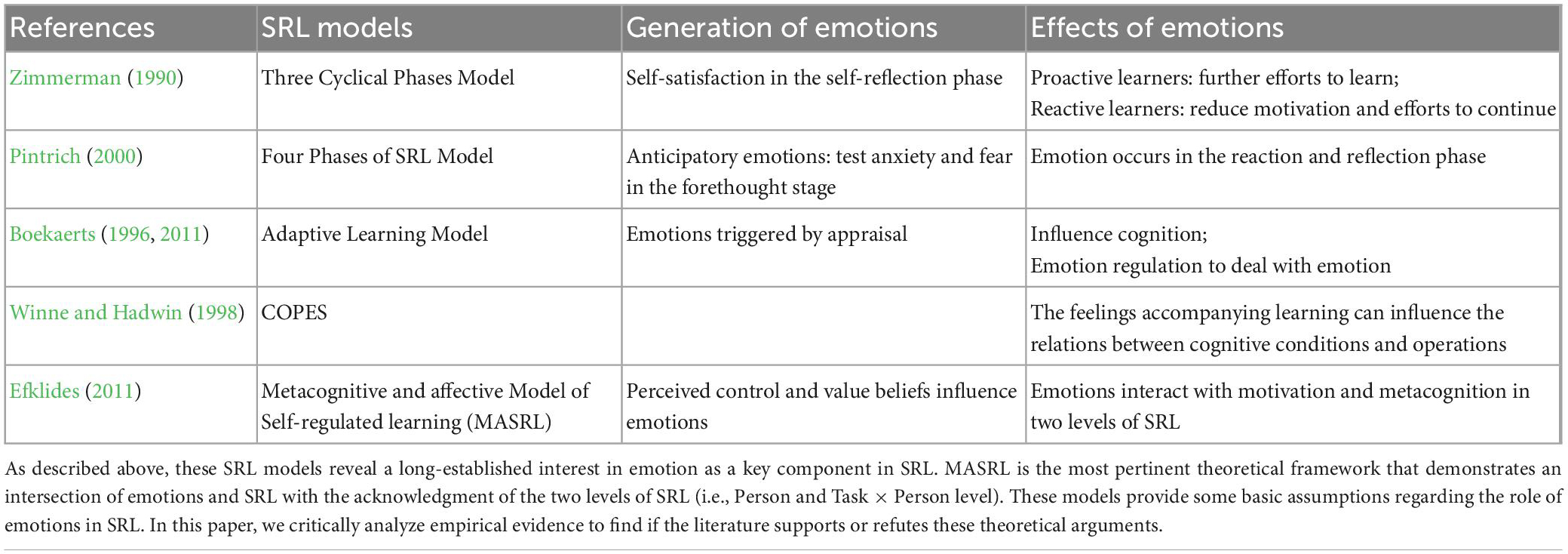

Views on the role of emotions in SRL have changed over time as conceptualizations of SRL and SRL models have developed. However, emotions have consistently been viewed as an important dimension of SRL ( Lajoie, 2008 ), and SRL models paved the way for understanding their role. To address our first research question regarding emotions and SRL theories, we reviewed five SRL models identified by Panadero (2017), who argued that all have a consolidated theoretical and empirical foundation. All five are seminal and well recognized in the literature. Järvelä and Hadwin’s (2013) socially shared regulated learning model was not included, as this paper focuses on individualized learning. Table 1 presents our review of these five SRL models, focusing on which emotions are generated and how emotions affect SRL.

Table 1. The role of emotions in self-regulated learning (SRL) models.

Based on the social cognitive paradigm, Zimmerman (1990) acknowledged the existence of emotions and their role in SRL. He described self-satisfaction as a combination of emotions ranging from elation to depression, but did not specify which emotions fall under the umbrella of self-satisfaction. Pintrich (2000) extended Zimmerman’s (1990) model and discussed emotions in the context of test anxiety. In Pintrich’s (2000) model, task or contextual features were proposed as factors that might activate test anxiety, and emotion regulation strategies were used to manage it. This model recognized both the generation and the effects of emotions; however, it identified only test anxiety, failing to address other emotions that might affect learning. Boekaerts (1996, 2011) gradually shifted her theory from cognition and motivation to emotion and emotion regulation ( Panadero, 2017 ). In her dual-processing model, emotions result from the dual processing of appraisals of the learning situation ( Boekaerts, 2011 ). If the learning situation is appraised as congruent with personal goals, positive emotions toward the task are triggered; if it is appraised as threatening well-being because of task difficulty or insufficient support, negative emotions are triggered. The dual-processing model highlighted the importance of emotions in SRL but did not specify the types of emotions or their outcomes in SRL. Winne and Hadwin (1998) emphasized how conditions, operations, products, evaluations, and standards (COPES) could influence the four phases of SRL tasks (i.e., task definition, goals and planning, studying tactics, and adaptations). Emotion was not explicitly addressed in this model ( Panadero, 2017 ), although its discussion of motivational factors could be an allusion to emotions, and learning-related feelings may influence the relationship between cognitive conditions and actual operations.

In contrast, the metacognitive and affective model of self-regulated learning (MASRL) provides insight into the interactions of metacognition, motivation, and affect in SRL. This model places more emphasis on affect and refers explicitly to the two levels of SRL: a Person level of SRL functioning and a Task × Person level of SRL events in task processing ( Efklides, 2011 ). We discuss below how this model describes the relationship between emotions and SRL at these two levels.

At the Person level, decisions about which SRL strategies to use are based on stable personal characteristics and habitual representations of situational demands ( Efklides et al., 2018 ). Emotions at this level are relatively stable characteristics of the individual, namely trait emotions. Efklides (2011) describes three extreme scenarios of how emotions may interact with SRL at the Person level. In the first, positive scenario, learners predict success, use appropriate SRL strategies, and experience positive emotions. In the second, negative scenario, learners predict failure, use inappropriate SRL strategies, and experience negative emotions. In the third scenario, learners underestimate or overestimate their competence, so their emotional reactions and effort expenditure do not match learning outcomes. For example, a student who underestimates their mathematics skills may feel anxious and spend more effort learning math, resulting in successful outcomes. By contrast, a student who overestimates their competence may experience positive emotions, exert insufficient effort, and have unsuccessful outcomes. These estimated efforts and emotions, stored at a general level, provide cues for subsequent specific tasks ( Efklides, 2006 ).

In a specific task, SRL takes the form of dynamic events at the Task × Person level. According to this model, task features (e.g., complexity) are objective and independent of a specific learning context, but they intersect with the person’s attributes and must be considered jointly. The MASRL model proposes three phases of SRL that align with Zimmerman’s (2000) SRL phases (i.e., forethought, performance, and self-reflection). The forethought phase may involve two types of cognitive processes. The first is automatic and unconscious and arises when dealing with familiar, fluent, and effortless tasks ( Efklides, 2011 ). When processing is automatic, emotions are neutral or moderately positive, without conscious control processes or increased physiological activity ( Carver and Scheier, 1998 ). The second type is analytic and can be triggered by the task’s structure, novelty, and complexity ( Alter et al., 2007 ); negative emotions may then appear with increased arousal ( Efklides, 2011 ). Emotions such as surprise and curiosity may also be generated, depending on the uncertainty and cognitive interruption that occur during this phase ( Bar-Anan et al., 2009 ). In the performance phase, negative or positive emotions may change according to the fluency of processing and the rate of progress ( Ainley et al., 2005 ). When the task is completed and outcomes are produced in the self-reflection phase, positive or negative emotions accompanying the task outcomes are triggered or enhanced.

5. Academic emotions and SRL: What does the empirical evidence tell us?

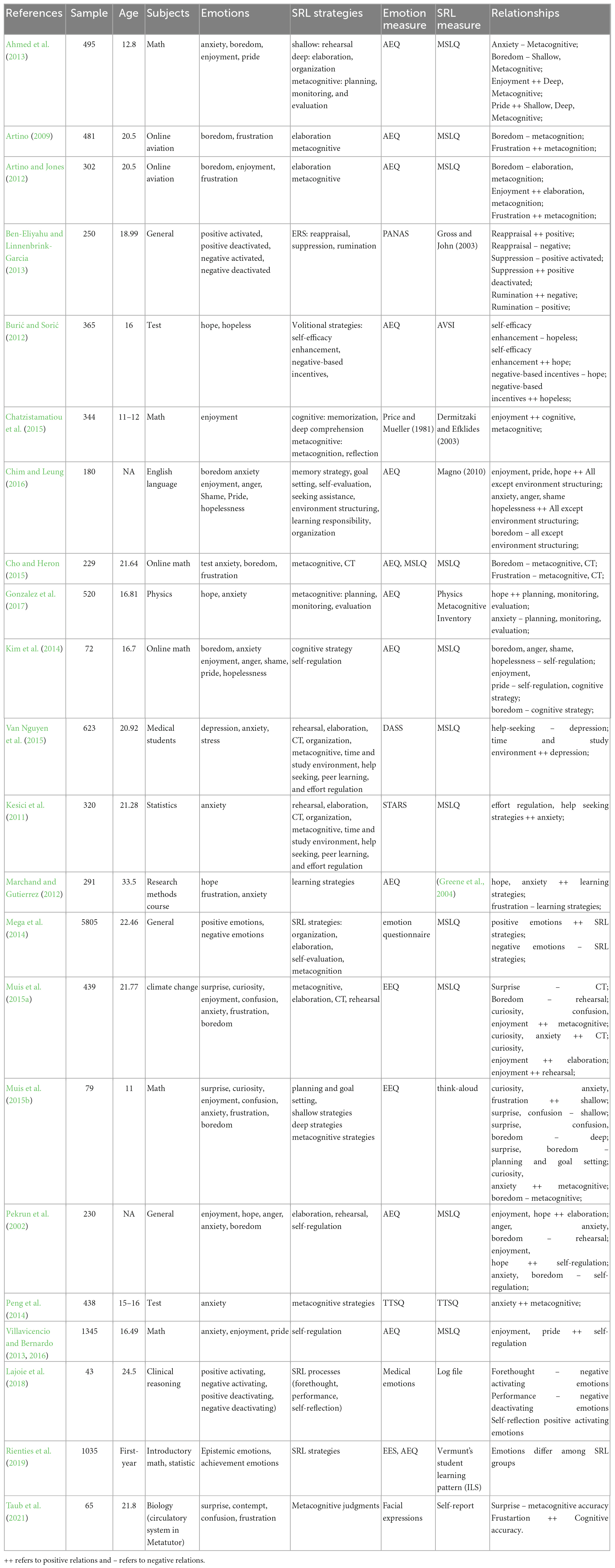

To address the second research question (i.e., empirical evidence regarding the role of emotions in SRL), we conducted a comprehensive literature search of the PsycINFO, ERIC, and Web of Science databases. The search syntax was (“self-regulated learning” OR “self-regulation” OR “metacognition”) AND (“emotion” OR “affective” OR “anxiety” OR “positive emotions” OR “negative emotions”). The search retrieved 205 articles. We then applied five inclusion criteria to screen the articles: (1) the study was published in English; (2) the study measured specific self-regulated learning strategies or processes; (3) the study measured discrete emotions; (4) the study was conducted in a specific learning setting, including an exam, a task, a course, or a specific training program; and (5) the study reported the correlation between specific SRL strategies/processes and discrete emotions. Only 23 studies met all five criteria and were included.
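The search string and the five inclusion criteria above amount to a Boolean query followed by a conjunctive filter. The sketch below rebuilds the reported query string and applies the criteria to screening records. The `Record` fields and the function names are hypothetical; in the review, each criterion was a reviewer judgment, not a precomputed flag.

```python
from dataclasses import dataclass

# Term groups taken from the reported search syntax.
SRL_TERMS = ["self-regulated learning", "self-regulation", "metacognition"]
EMOTION_TERMS = ["emotion", "affective", "anxiety", "positive emotions", "negative emotions"]


def or_block(terms: list[str]) -> str:
    """Quote each term, join the terms with OR, and wrap them in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


SEARCH_QUERY = f"{or_block(SRL_TERMS)} AND {or_block(EMOTION_TERMS)}"


@dataclass
class Record:
    """Hypothetical screening record; field names are illustrative only."""
    title: str
    language: str
    measures_specific_srl: bool            # criterion 2
    measures_discrete_emotions: bool       # criterion 3
    specific_learning_setting: bool        # criterion 4: exam, task, course, or training
    reports_srl_emotion_correlation: bool  # criterion 5


def meets_criteria(r: Record) -> bool:
    """A record is included only if it satisfies all five criteria."""
    return (
        r.language == "English"            # criterion 1
        and r.measures_specific_srl
        and r.measures_discrete_emotions
        and r.specific_learning_setting
        and r.reports_srl_emotion_correlation
    )


def screen(records: list[Record]) -> list[Record]:
    """Keep the records that pass every inclusion criterion."""
    return [r for r in records if meets_criteria(r)]
```

Printing `SEARCH_QUERY` reproduces the search syntax given in the text, with straight quotes. Because the criteria are combined with `and`, failing any single criterion excludes a record.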

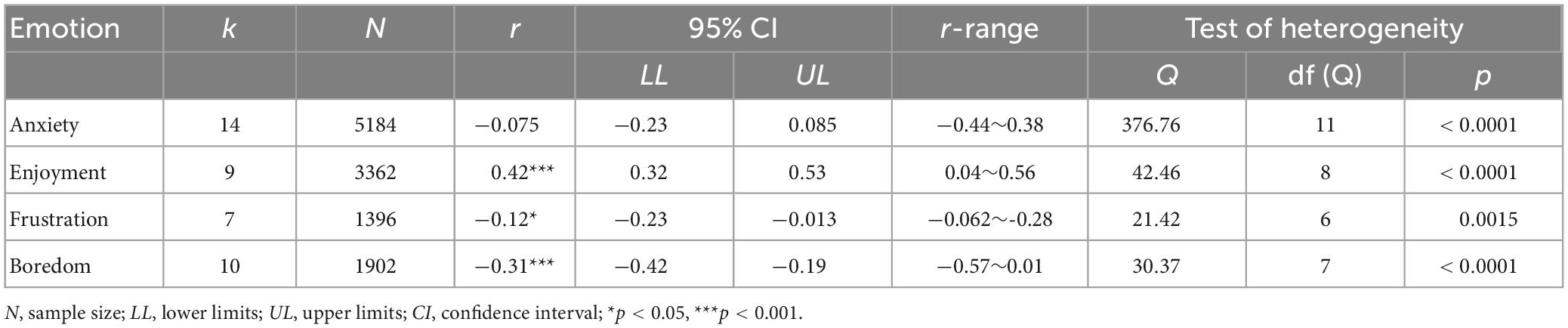

As Appendix A shows, these 23 empirical studies examined the relationship between emotions and SRL strategies. Our summary of the included studies ( Appendix A ) indicates that anxiety, enjoyment, frustration, and boredom are the most frequently examined academic emotions, and that metacognitive strategies are the most frequently examined SRL component. We therefore coded these five variables (the four emotions and metacognitive strategies) to synthesize the correlations between the four academic emotions and metacognitive strategies. Specifically, 14 studies focused on anxiety, 11 on enjoyment, 7 on frustration, and 10 on boredom. These numbers were considered sufficient based on the rule of thumb that reliable estimation in small-sample meta-analysis requires a minimum of five independent studies ( Fisher and Tipton, 2015 ).

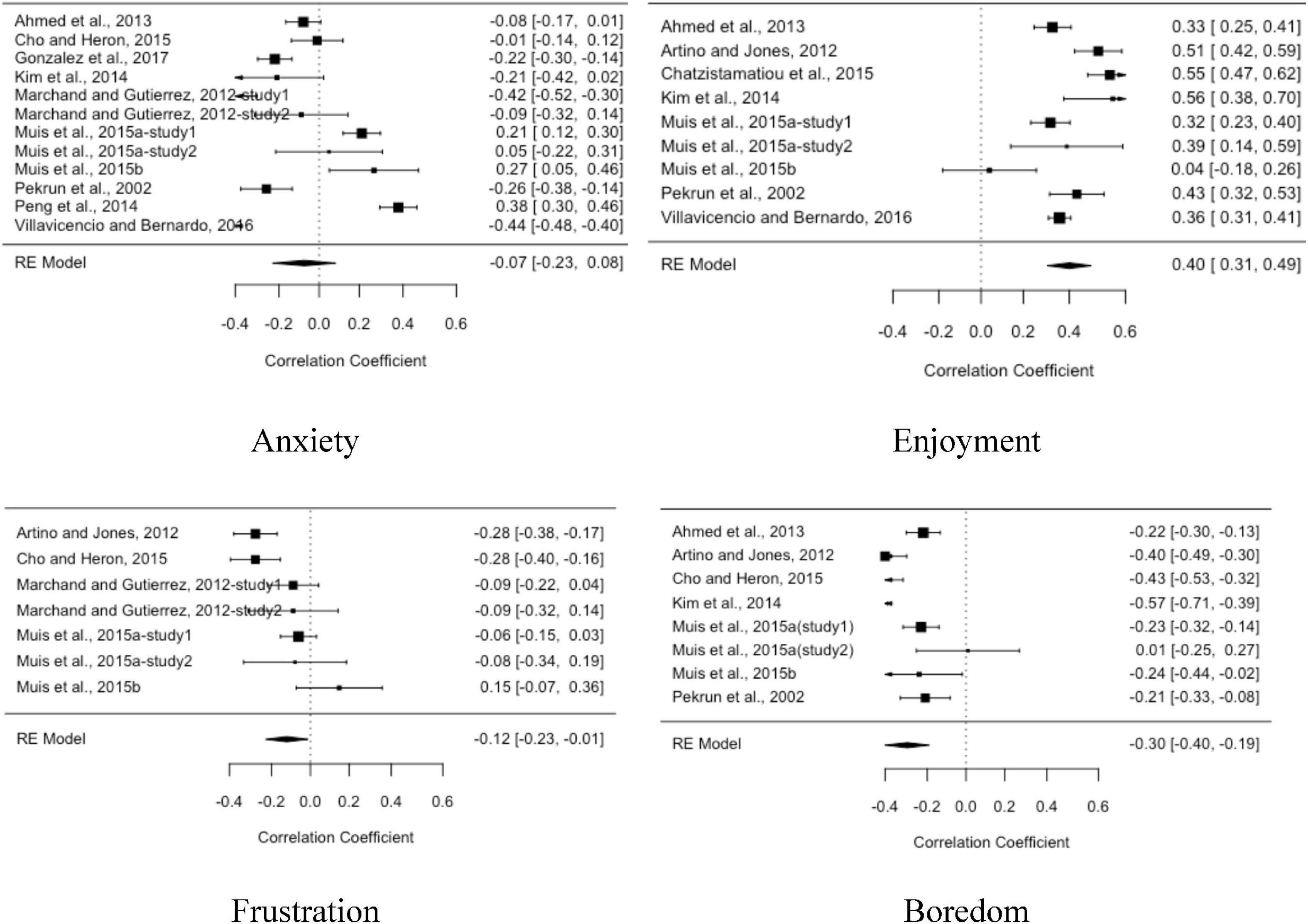

We adopted a random-effects model ( Hedges and Vevea, 1998 ) because the included studies differed in methodological characteristics. Among positive emotions, enjoyment was positively related to metacognitive strategies ( r = 0.42) (see Table 2 ). As the forest plot for enjoyment in Figure 1 shows, the relationship between enjoyment and metacognitive strategies was positive in all studies. In addition to enjoyment, pride also positively predicted cognitive and metacognitive strategies ( Ahmed et al., 2013 ).
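The sketch below shows how correlations like those in Table 2 can be pooled under a random-effects model. Each r is transformed to Fisher's z, between-study variance (tau²) is estimated with the DerSimonian-Laird method of moments, and the pooled z is back-transformed to r. The choice of estimator is an assumption: the text cites Hedges and Vevea (1998) but does not state the estimator or software. The `min_studies=5` guard mirrors the Fisher and Tipton (2015) rule of thumb mentioned above. The function name and inputs are hypothetical placeholders and contain no data from the 23 studies.

```python
import math
from dataclasses import dataclass


@dataclass
class PooledResult:
    r: float        # pooled correlation, back-transformed from Fisher's z
    ci_low: float   # lower bound of the 95% confidence interval for r
    ci_high: float  # upper bound of the 95% confidence interval for r
    tau2: float     # between-study variance on the Fisher-z scale
    i2: float       # I^2 heterogeneity as a proportion in [0, 1]


def random_effects_pool(rs: list[float], ns: list[int], min_studies: int = 5) -> PooledResult:
    """Pool study correlations with a random-effects model on the Fisher-z scale.

    tau^2 uses the DerSimonian-Laird estimator (an assumption, not necessarily
    the estimator used in the review).
    """
    k = len(rs)
    if k != len(ns):
        raise ValueError("rs and ns must have the same length")
    if k < max(2, min_studies):
        raise ValueError(f"need at least {max(2, min_studies)} studies, got {k}")
    if any(abs(r) >= 1 for r in rs) or any(n <= 3 for n in ns):
        raise ValueError("need |r| < 1 and n > 3 in every study")

    z = [math.atanh(r) for r in rs]  # Fisher's z transform of each r
    v = [1.0 / (n - 3) for n in ns]  # sampling variance of each z
    w = [1.0 / vi for vi in v]       # inverse-variance (fixed-effect) weights

    sum_w = sum(w)
    z_fixed = sum(wi * zi for wi, zi in zip(w, z)) / sum_w
    q = sum(wi * (zi - z_fixed) ** 2 for wi, zi in zip(w, z))  # Cochran's Q
    df = k - 1
    c = sum_w - sum(wi * wi for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)    # DerSimonian-Laird estimate, truncated at 0

    w_re = [1.0 / (vi + tau2) for vi in v]  # random-effects weights
    z_re = sum(wi * zi for wi, zi in zip(w_re, z)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0

    return PooledResult(
        r=math.tanh(z_re),
        ci_low=math.tanh(z_re - 1.96 * se),
        ci_high=math.tanh(z_re + 1.96 * se),
        tau2=tau2,
        i2=i2,
    )
```

For instance, `random_effects_pool([0.3] * 5, [50] * 5)` gives a pooled r of 0.3 (up to floating-point rounding) with `tau2 == 0`, because identical studies carry no between-study heterogeneity. Calling it with only four studies raises `ValueError`.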

Table 2. Meta-analysis results.

Figure 1. Forest plots for anxiety, enjoyment, frustration, and boredom.

In terms of negative emotions, anxiety and frustration were generally weakly and negatively related to metacognitive strategies ( r = −0.075 and r = −0.12, respectively). However, findings were mixed across studies. For instance, Muis et al. (2015a) and Peng et al. (2014) found positive relationships between anxiety and metacognitive strategies, and frustration was found to be both positively and negatively related to metacognitive strategies ( Artino and Stephens, 2009 ; Artino and Jones, 2012 ; Marchand and Gutierrez, 2012 ; Cho and Heron, 2015 ). Boredom was negatively related to metacognitive strategies in most studies ( r = −0.31). Surprise, curiosity, and confusion are the epistemic emotions whose valence is least consistent: learners sometimes experience them as pleasant and sometimes as unpleasant ( Noordewier and Breugelmans, 2013 ). Curiosity and confusion can predict SRL either positively or negatively, depending on the depth of strategy use ( Muis et al., 2015b ). In other words, surprise, curiosity, and confusion can have different effects on shallow processing, deep processing, cognitive, and metacognitive strategies.

The empirical studies mainly provide evidence about the relationship between trait emotions and SRL strategies. Specifically, most of them examined how academic emotions affect SRL strategies at the Person level (see Appendix A ). This emphasis arises partly because researchers initially conceptualized SRL as a relatively stable individual inclination, which led to trait-like measures of SRL strategies that have dominated the literature ( Boekaerts and Cascallar, 2006 ). Methodological and ethical issues in collecting online data also restrict the exploration of emotions and SRL as states and events ( Schutz and Davis, 2000 ).

To sum up, previous SRL models and empirical studies are not sufficient to reveal the underlying mechanisms of emotions in SRL. One reason is that existing SRL models place unequal emphasis on emotions and SRL. Notably, the MASRL model took a crucial step toward a better understanding of emotions in SRL by emphasizing both the static and dynamic characteristics of emotions at the two levels of SRL. Nevertheless, it does not explain how emotions are generated or how the complex interplay of emotions and SRL influences learning outcomes. In terms of empirical evidence, previous studies have addressed only the relationships among emotions, SRL strategies, and learning outcomes. Many questions remain unanswered, for instance: (a) What academic emotions are generated in the SRL process? (b) What are the effects of different emotions in SRL? (c) How do emotions change across the stages of SRL? (d) What are the relationships between emotions and SRL at the Task × Person level? An integrated framework is needed to better illustrate the role of emotions in SRL. To substantially advance this field, we contend that such a framework should address the generation and effects of emotions in SRL and explain the reciprocal relationships between emotions and SRL. Furthermore, it should consider both levels of SRL (i.e., Person and Task × Person) to show how trait and state emotions unfold in different SRL phases, e.g., forethought, performance, and self-reflection.

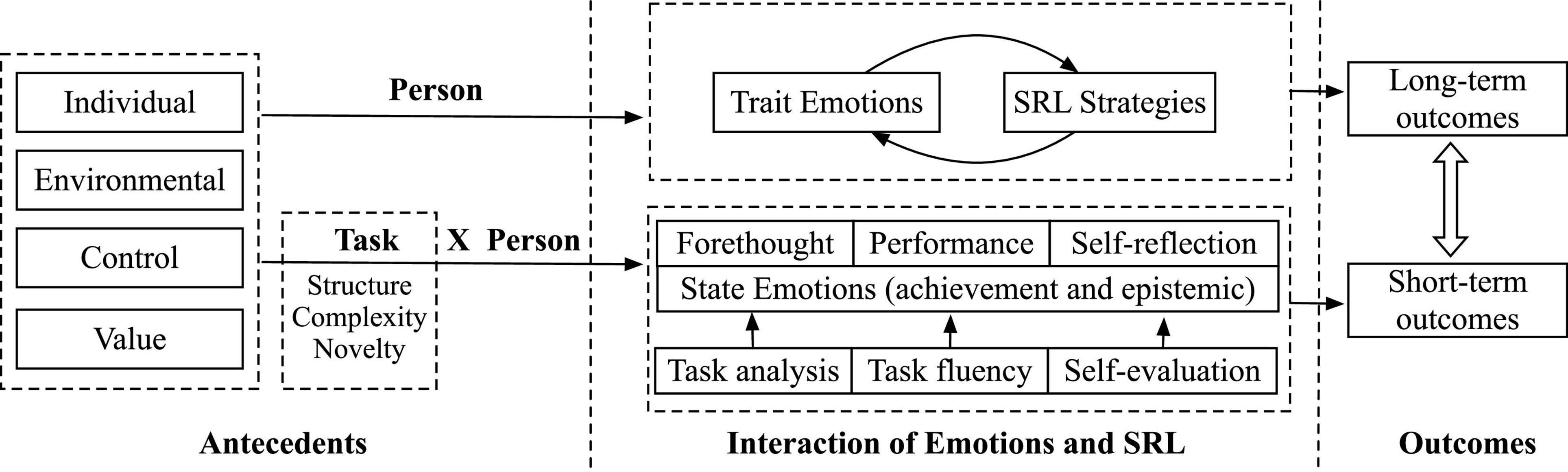

6. Toward an integrated framework for understanding emotions in SRL

In this study, we propose an integrated framework of emotions in SRL (ESRL) ( Figure 2 ). The ESRL framework builds on previous conceptualizations of emotions and SRL and retains the important contributions of earlier SRL models. As shown in Figure 2 , the framework focuses on the generation and effects of emotions in SRL at two levels (i.e., Person and Task × Person). At its center are two propositions: at the Person level, SRL is an aptitude influenced by trait emotions; at the Task × Person level, SRL is an event within a specific task, during which dynamic state emotions unfold across different phases.

Figure 2. The role of emotions in self-regulated learning (ESRL).

6.1. Antecedents of academic emotions

Individual characteristics, environmental factors, control appraisals, and value appraisals are the antecedents of academic emotions at both the Person and Task × Person levels. According to control-value theory (CVT), individual antecedents include individual differences such as gender and achievement goals ( Pekrun and Perry, 2014 ). Environmental antecedents (e.g., autonomy support and feedback) are factors that characterize general learning environments. Either trait or state emotions can be triggered, depending on the specificity of these antecedents. When control appraisals are conceptualized as the general perception of a learning situation, such as attending online courses ( You and Kang, 2014 ) or a math course ( Villavicencio and Bernardo, 2013 ), they can predict how students generally feel (trait emotions) in similar situations. In contrast, when control appraisals are conceptualized as the perception of a specific learning task, such as solving a math problem ( Muis et al., 2015b ), they can influence students’ emotions during the problem-solving process (i.e., state emotions). From the perspective of CVT, generalized control-value beliefs can be linked to trait emotions, and they can also influence momentary appraisals and state emotions ( Pekrun and Perry, 2014 ).

Furthermore, much of the literature in educational psychology has focused on the effects of task features (i.e., task novelty, complexity, and structure) on the occurrence of emotions, especially epistemic emotions ( D’Mello and Graesser, 2012 ; Muis et al., 2018 ). These task features are objective and independent of a specific learning context but intersect with the person’s attributes and must be considered jointly ( Efklides, 2011 ). Foster and Keane (2015) focused on how novel or unique information may trigger surprise if the individual perceives it as unexpected. D’Mello et al. (2014) proposed that complexity is a crucial antecedent of confusion during learning. Silvia (2010) argued that task complexity also predicts whether curiosity or confusion follows surprise at novelty. In addition to curiosity and confusion, boredom and anxiety can result from task novelty, complexity, and structure. For example, a generally highly competent student may still feel anxious when solving a difficult math question (task complexity), and a student who usually feels bored in a face-to-face math class may be curious about a novel math question presented in an innovative way (task novelty and structure). All of these emotions arise from appraisals of uncertainty stemming from task novelty, complexity, or structure ( Ellsworth and Scherer, 2003 ), and the cognitive disequilibrium underlying this uncertainty plays a critical role in triggering dynamic epistemic emotions ( D’Mello and Graesser, 2012 ). In contrast to state emotions, which arise from dynamic appraisals of ever-changing task attributes, trait emotions interact with SRL in a relatively stable way.

6.2. Interaction between emotions and SRL at the Person level

Trait emotions have reciprocal relationships with SRL strategies. Trait emotions are a decontextualized and stable way of reporting feelings ( Goetz et al., 2016 ), while SRL strategies encompass all the components of SRL, namely cognitive, metacognitive, emotional, and motivational strategies ( Warr and Downing, 2000 ; Ferla et al., 2009 ). In the interaction between emotions and SRL, trait emotions are presented as an emotional loop or cycle that monitors the strategies or efforts exerted in SRL ( Efklides et al., 2018 ; Ben-Eliyahu, 2019 ). On the one hand, trait emotions may affect how students prioritize SRL strategies, and results from empirical studies support this proposition. Positive emotions (e.g., enjoyment, pride) are positively related to students’ use of cognitive and metacognitive strategies ( Pekrun et al., 2002 ; Artino and Stephens, 2009 ; Ahmed et al., 2013 ; Villavicencio and Bernardo, 2013 , 2016 ; Mega et al., 2014 ; Chatzistamatiou et al., 2015 ; Chim and Leung, 2016 ). Villavicencio and Bernardo (2013 , 2016) examined the relationship between academic emotions, self-regulation, and achievement in a math course and found that both enjoyment and pride were positively correlated with self-regulation. In terms of negative emotions, boredom, frustration, and anxiety were generally negatively associated with SRL strategies ( Pekrun et al., 2002 ; Kim et al., 2014 ; Mega et al., 2014 ; Peng et al., 2014 ; Gonzalez et al., 2017 ). On the other hand, researchers found that SRL strategies also influenced students’ trait emotions. For example, Ben-Eliyahu and Linnenbrink-Garcia (2013) examined how self-regulated emotion strategies influenced students’ emotions in academic courses. Results suggested that these strategies were employed differently depending on course preference, which in turn influenced students’ emotions in the course.
Furthermore, students who rely heavily on ineffective strategies show prolonged frustration, boredom, and confusion ( D’Mello and Graesser, 2012 ; Sabourin and Lester, 2014 ; Azevedo et al., 2017 ). The cyclical effects between emotions and SRL strategies generate long-term effects on student learning outcomes, including persistence ( Drake et al., 2014 ), procrastination ( Rakes and Dunn, 2010 ), and academic achievement ( Peng et al., 2014 ; Gonzalez et al., 2017 ).

6.3. State emotions function at the Task × Person level

Achievement emotions and epistemic emotions are the dominant emotions triggered in a specific task ( Pekrun and Stephens, 2012 ), and these emotions dynamically influence the three phases of the SRL cycle ( Efklides, 2011 ). Research supports the view that emotions change dynamically throughout SRL processes. Anticipatory feelings arise at the beginning of a learning activity (i.e., forethought), even though feelings may be more salient in the self-reflection phase of SRL ( Usher and Schunk, 2018 ). Within the SRL cycle, individuals experience emotions in proportion to the challenges they face ( Usher and Schunk, 2018 ). The structure, complexity, and novelty of a task reflect these challenges and act as catalysts of academic emotions ( Muis et al., 2018 ). In other words, task analysis in the forethought phase predicts the initial emotions students may feel: it is reasonable to anticipate confusion when facing unfamiliar structures, anxiety when facing complexity, and curiosity when facing novelty. Beyond the forethought phase, task fluency in the performance phase contributes to discrete emotions. In two studies by Winkielman and Cacioppo (2001), participants showed more positive affect in response to higher processing fluency. Fulmer and Tulis (2013) measured students’ fluency and emotions multiple times during a reading task, and a latent growth curve analysis showed that positive emotions declined as reading fluency decreased. Finally, self-evaluation in the self-reflection phase can also change emotions ( Efklides et al., 2018 ). Learners judge their learning situations by comparing them with performance standards set by themselves and others ( Usher and Schunk, 2018 ). Because these standards differ, students may experience different emotions even when their performance is similar.
A low-performing student may experience more happiness and even pride in the self-reflection phase if they believe they have outperformed their own expectations. As discussed above, emotions are dynamic and change throughout the three SRL phases; they can influence the effort individuals put toward a task or create an obstacle to further progress. Feelings of happiness and pride may lead to renewed effort, while anxiety and frustration may lead to task avoidance or withdrawal ( Usher and Schunk, 2018 ). Consequently, the short-term learning outcomes of this SRL event, including achievement and learning gains, will be affected.

6.4. Interaction between the two levels of SRL

Self-regulated learning (SRL) is a lifelong learning process in which students need to plan for each session, each semester, and each training period ( Efklides et al., 2018 ). The short-term learning outcome of one session determines whether students will persist with learning or abandon their attempt in the next session. Repeated engagement or disengagement with similar tasks provides consistent information about self-efficacy in a task domain and updates the domain-specific self-concept ( Efklides, 2011 ). Indeed, Metallidou and Efklides (2001) found that self-ratings of confidence and personal estimates of mathematical performance predicted competence at the Person level. It is thus possible that short-term perseverance or withdrawal of effort transfers into long-term outcomes that remain relatively stable over time. In one direction, from the Task × Person level to the Person level, the short-term outcomes of a specific SRL event will gradually influence long-term SRL outcomes. In the other direction, long-term learning outcomes will be transformed into more stable individual characteristics that affect SRL at the Task × Person level. These individual characteristics can include prior knowledge, motivation, and self-efficacy, which affect how students appraise a specific task. Efklides and Tsiora (2002) conducted a longitudinal study of the relationship between SRL at the Task × Person level and the Person level and found that self-concept at the Person level influenced SRL at the Task × Person level. Therefore, from the perspective of lifelong learning, we assume a long-term interaction between the two levels of SRL, even though empirical evidence to support this argument is sparse.

7. Future directions that build upon the integrative framework

Future research should progress beyond studying the relations between emotions and SRL solely at the Person level. As proposed in our framework, dynamic relationships between emotion and SRL also exist at the Task × Person level. Therefore, it is crucial for future research to examine how emotions unfold in different phases of SRL using advanced methodologies. For example, the high sampling rates of physiological and behavioral measures make it possible to capture the components of SRL at fine granularity. Further research examining emotions and SRL at the Task × Person level could provide insights into the dynamics of SRL, which would in turn inform instruction and scaffolding for SRL.

Another fruitful area of research is longitudinal work that examines the long-term interplay between the Task × Person and Person levels of SRL. For example, if students are trained in task analysis skills at the beginning of a specific task, will this training influence their general SRL strategies? Do students transfer the strategies across different contexts and gradually develop them into trait-like personal inclinations? If so, what factors can promote or inhibit this kind of influence? Do trait emotions influence state emotions? Do achievements and motivations associated with a specific task accumulate to influence students’ general persistence and procrastination in learning? Answering these types of questions can provide educators and researchers with tools for designing SRL training programs that affect learners in the long run.

The third area in need of investigation is how the structure, difficulty, and novelty of a task relate to control-value appraisals and collectively influence academic emotions and SRL. Task difficulty has arguably been well explored in task analysis; however, task structure and novelty need further exploration. For example, an exam that starts with easy questions and one that starts with difficult questions may trigger different emotions and SRL patterns. Thus, a better understanding of the influence of task structure and novelty would contribute to the design of tasks that are beneficial for SRL.

Additionally, empirical studies are needed to verify the antecedents of emotions across the three SRL phases. As discussed in our ESRL model, task analysis in the forethought phase, task fluency in the performance phase, and self-evaluation in the self-reflection phase trigger the occurrence of academic emotions and changes in them; these possibilities remain to be tested empirically. More importantly, researchers can investigate real-time modeling and visualization of the factors influencing emotions so that corresponding strategies or interventions can be incorporated to optimize the whole learning process. For example, it would be useful to provide instructors with a dashboard that displays how students’ task fluency and academic emotions evolve over time. In doing so, teachers could provide students with effective emotional or instructional support in real time.

It is also important to highlight the need for multimodal multichannel data in future SRL research. Researchers currently use four types of methodological approaches to studying SRL: (a) self-report measures (e.g., self-report questionnaires, structured diaries, think-aloud/emote-aloud protocols, interviews); (b) behavioral measures (e.g., facial expressions, body posture, eye tracking); (c) physiological measures; and (d) computer trace log files. Each of these four types of measures has its strengths and weaknesses. For example, self-report is well suited to examining SRL at the Person level; however, many self-report measures are static and cannot capture the dynamic changes of SRL at the Task × Person level. In contrast, computer log files are powerful for tracking SRL at the Task × Person level, but researchers have to overcome the challenge of making reliable inferences from trace data.

In response to the strengths and weaknesses of current methodologies, we argue that researchers need to use multimodal multichannel data when examining the relationship between emotions and SRL at both levels. Self-report measures can serve as the main trait measures at the Person level, whereas physiological measures and trace data can provide situational measures at the Task × Person level. Furthermore, when adopting multichannel data to examine the relationships proposed in the ESRL model, researchers must pay attention to challenges in data analysis and data interpretation. Alignment is the major challenge of analyzing multiple data streams, especially when the starting times differ or the sampling rate varies across devices and methods. For example, physiological sensors need to be attached before the actual data collection begins. Consequently, if both are used to measure SRL events, the physiological time stamps start earlier than those in the computer log files. Similarly, eye trackers capture data continuously at 60–120 Hz, whereas electrodermal activity (EDA) is recorded at 8–20 Hz, so the channels must be transformed onto a common time base before they can be analyzed together. In terms of data interpretation, problems arise when researchers cannot make consistent predictions about what specific indices of a method reflect. For example, fixation duration has been interpreted as reflecting both cognitive engagement and emotional arousal. Such mixed interpretations can lead to misleading findings in the literature.
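The alignment problem described above can be illustrated with a short sketch. The Python below is a minimal, hypothetical example and not a procedure proposed in this article. It assumes that each channel is stored as a list of (timestamp, value) pairs in seconds, that the offset between each device clock and a shared session clock is known (for instance, from a synchronization marker), and that linear interpolation onto a common grid is acceptable. The function names `align_streams` and `resample_linear` are illustrative, and the toy rates (2 Hz and 1 Hz) and values stand in for the 60–120 Hz eye-tracking and 8–20 Hz EDA rates mentioned above; they are not real recordings.

```python
import math
from bisect import bisect_right
from typing import Dict, List, Optional, Tuple

# (timestamp in seconds, value); timestamps must be strictly increasing
Sample = Tuple[float, float]


def resample_linear(stream: List[Sample], grid: List[float]) -> List[float]:
    """Linearly interpolate a time-sorted stream onto the time points in `grid`."""
    times = [t for t, _ in stream]
    values = [v for _, v in stream]
    out: List[float] = []
    for g in grid:
        i = bisect_right(times, g)  # first index whose timestamp is > g
        if i == len(times):
            # Only valid if g falls exactly on the last sample
            if g != times[-1]:
                raise ValueError(f"grid point {g} is after the stream ends")
            out.append(values[-1])
            continue
        if i == 0:
            raise ValueError(f"grid point {g} is before the stream starts")
        t0, t1 = times[i - 1], times[i]
        v0, v1 = values[i - 1], values[i]
        w = (g - t0) / (t1 - t0)  # fractional position between the two samples
        out.append(v0 + w * (v1 - v0))
    return out


def align_streams(
    streams: Dict[str, List[Sample]],
    rate_hz: float,
    offsets: Optional[Dict[str, float]] = None,
) -> Tuple[List[float], Dict[str, List[float]]]:
    """Shift each stream onto a shared clock (hypothetical known offsets), then
    resample all streams to `rate_hz` over the window where every stream has data."""
    offsets = offsets or {}
    shifted = {
        name: [(t + offsets.get(name, 0.0), v) for t, v in samples]
        for name, samples in streams.items()
    }
    # Keep only the overlapping window, e.g. drop physiological samples
    # recorded before the learning task (and its log file) began.
    start = max(s[0][0] for s in shifted.values())
    end = min(s[-1][0] for s in shifted.values())
    if end < start:
        raise ValueError("streams do not overlap in time")
    # Build the grid from integer steps to avoid accumulating floating-point drift
    n = math.floor((end - start) * rate_hz + 1e-9) + 1
    grid = [min(start + k / rate_hz, end) for k in range(n)]
    return grid, {name: resample_linear(s, grid) for name, s in shifted.items()}


# Toy example: a 2 Hz "eye" channel and a 1 Hz "eda" channel whose device
# clock starts 0.5 s later than the shared session clock.
eye = [(0.0, 0.0), (0.5, 1.0), (1.0, 2.0), (1.5, 3.0), (2.0, 4.0)]
eda = [(0.0, 10.0), (1.0, 12.0), (2.0, 14.0)]
grid, aligned = align_streams({"eye": eye, "eda": eda}, rate_hz=2.0, offsets={"eda": 0.5})
print(grid)            # [0.5, 1.0, 1.5, 2.0]
print(aligned["eda"])  # [10.0, 11.0, 12.0, 13.0]
```

Trimming to the overlapping window addresses the different start times, and resampling addresses the different sampling rates. Linear interpolation is only one simple option; when downsampling a fast channel such as eye tracking, averaging the samples within each window is often preferable, because point interpolation ignores most of the high-rate data.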

8. Conclusion

There has been remarkable progress in the theoretical development of SRL toward a holistic understanding of learning and problem-solving processes that emphasizes academic emotions. Although empirical studies remain limited, contemporary literature clearly suggests complex relationships between academic emotions and SRL. However, this field of study is still scattered and fragmented, given the many ambiguities and debates about the nature of the two constructs (i.e., academic emotions and SRL). In this study, we argued that emotions can be studied as either traits or states and that SRL functions at both the Person and Task × Person levels. By reviewing predominant SRL models and analyzing relevant empirical studies, we proposed an integrative framework to explain the role of trait and state emotions in SRL. Specifically, the proposed framework illustrates what the antecedents of emotions are and how they influence academic emotions and, consequently, SRL and learning outcomes. Moreover, the framework explains how trait emotions influence SRL strategies at the Person level and how state emotions unfold in different phases of SRL at the Task × Person level. We also discussed future research directions that build upon our framework and that could advance this field of study considerably. We acknowledge that there is still a long way to go to pinpoint the complex interplay between emotions and SRL. The proposed framework lays a foundation for developing a comprehensive understanding of the role of emotions in SRL and for asking important questions in future investigations.

Author contributions

JZ: conceptualization, investigation, and writing–original draft. SLa: writing–reviewing and editing, supervision, and funding acquisition. SLi: writing–reviewing and editing. All authors contributed to the article and approved the submitted version.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note