Data Annotation Tutorial: Definition, Tools, Datasets

Data is an integral part of all machine learning and deep learning algorithms.

It is what drives these complex and sophisticated algorithms to deliver state-of-the-art performance.

If you want to build truly reliable AI models, you must provide the algorithms with data that is properly structured and labeled.

And that's where the process of data annotation comes into play.

You need to annotate data so that the machine learning systems can use it to learn how to perform given tasks.

Data annotation is simple, but it might not be easy 😉 Luckily, we are about to walk you through this process and share our best practices that will save you plenty of time (and trouble!).

Here’s what we’ll cover:

- What is data annotation?

- Types of data annotations

- Automated data annotation vs. human annotation

- V7 data annotation tutorial


Essentially, data annotation comes down to labeling the area or region of interest. This type of annotation is found specifically in images and videos. On the other hand, annotating text data largely involves adding relevant information, such as metadata, and assigning the text to a certain class.

In machine learning, the task of data annotation usually falls into the category of supervised learning, where the learning algorithm associates input with the corresponding output, and optimizes itself to reduce errors.

Here are various types of data annotation and their characteristics.

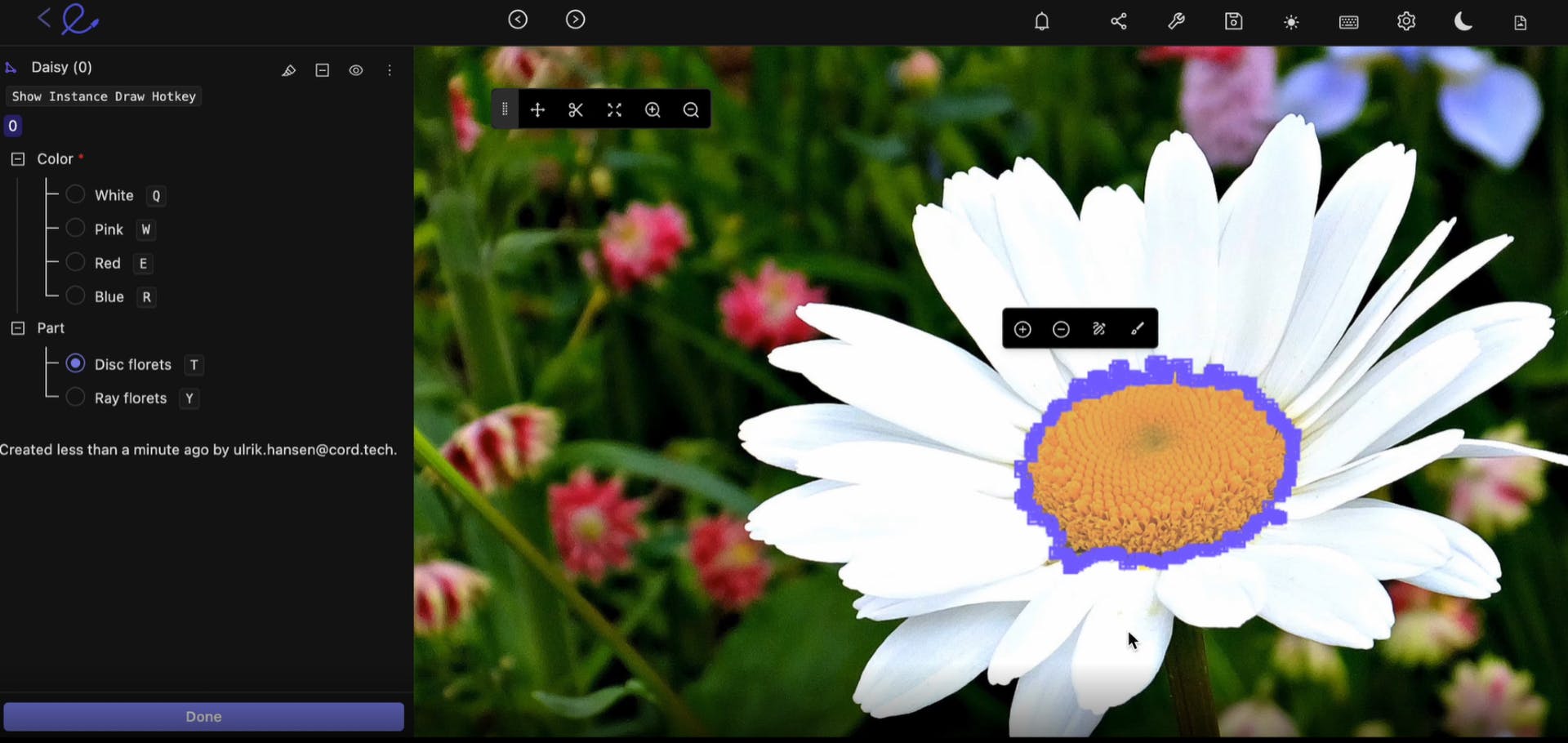

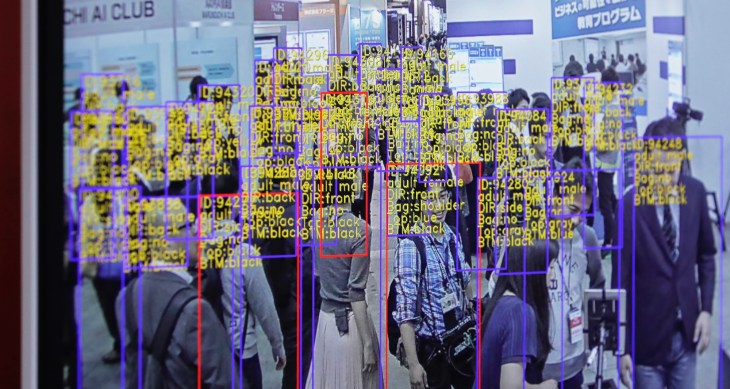

Image annotation

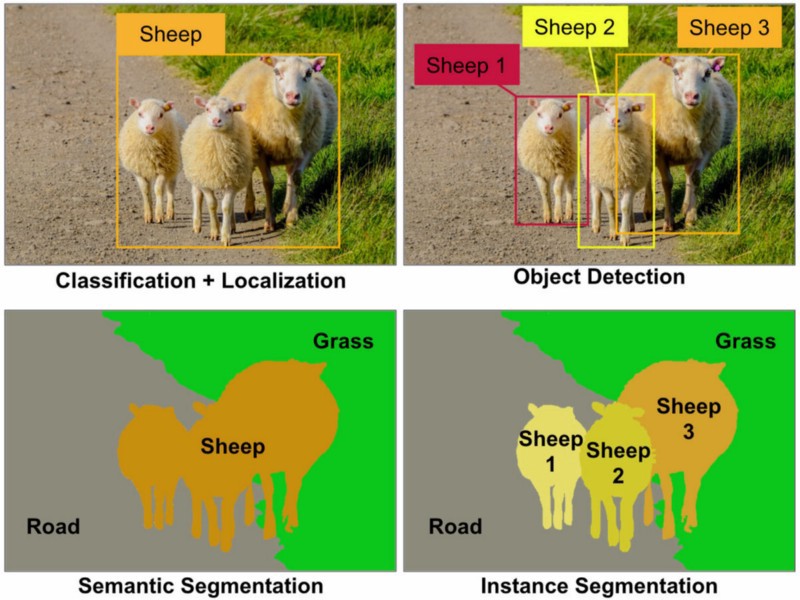

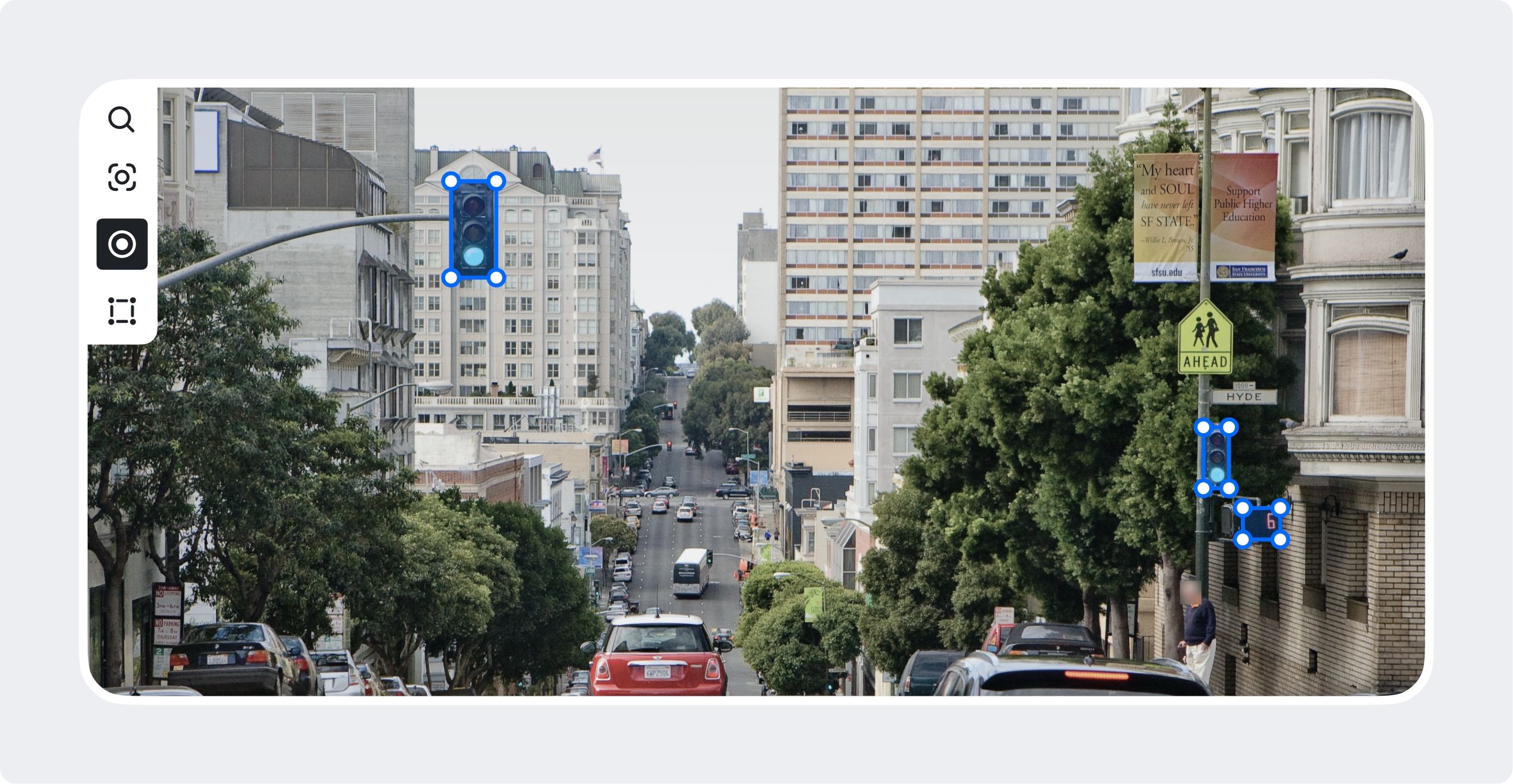

Image annotation is the task of annotating an image with labels. It ensures that a machine learning algorithm recognizes an annotated area as a distinct object or class in a given image.

It involves creating bounding boxes (for object detection) and segmentation masks (for semantic and instance segmentation) to differentiate the objects of different classes. In V7, you can also annotate the image using tools such as keypoints, 3D cuboids, polylines, keypoint skeletons, and a brush.

💡 Pro tip: Check out 13 Best Image Annotation Tools to find the annotation tool that suits your needs.

Image annotation is often used to create training datasets for the learning algorithms.

Those datasets are then used to build AI-enabled systems like self-driving cars, skin cancer detection tools, or drones that assess the damage and inspect industrial equipment.

💡 Pro tip: Check out AI in Healthcare and AI in Insurance to learn more about AI applications in those industries.

Now, let’s explore and understand the different types of image annotation methods.

- Bounding box

The bounding box involves drawing a rectangle around a certain object in a given image. The edges of bounding boxes ought to touch the outermost pixels of the labeled object.

Otherwise, the gaps will create IoU (Intersection over Union) discrepancies and your model might not perform at its optimum level.
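
To make the IoU point concrete, here is a minimal sketch of how Intersection over Union can be computed for two axis-aligned boxes. The `(x_min, y_min, x_max, y_max)` box format and the example coordinates are assumptions chosen for illustration, not a V7 format.

```python
from typing import Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle (if any).
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# A tight box versus the same object labeled with a 10-pixel gap on each side.
tight = (10, 10, 110, 60)
loose = (0, 0, 120, 70)
print(f"IoU of loose vs. tight box: {iou(tight, loose):.2f}")
```

Even a small gap around the object lowers the IoU noticeably, which is why loosely drawn boxes can hurt both training and evaluation.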

💡 Pro tip: Read Annotating With Bounding Boxes: Quality Best Practices to learn more.

The 3D cuboid annotation is similar to bounding box annotation, but in addition to drawing a 2D box around the object, the user has to take into account the depth factor as well. It can be used to annotate objects on flat planes that need to be navigated, such as cars or planes, or objects that require robotic grasping.

You can annotate with cuboids to train the following model types:

- Object Detection

- 3D Cuboid Estimation

- 6DoF Pose Estimation

Creating a 3D cuboid in V7 is quite easy, as V7's cuboid tool automatically connects the bounding boxes you create by adding a spatial depth. Here's the image of a plane annotated using cuboids.

While creating a 3D cuboid or a bounding box, you might notice that other objects get unintentionally included in the annotated region. This situation is far from ideal, as the machine learning model might get confused and, as a result, misclassify those objects.

Luckily, there's a way to avoid this situation, and that's where polygons come in handy. What makes them so effective is their ability to create a mask around the desired object at a pixel level.

V7 offers two ways in which you can create pixel-perfect polygon masks.

a) Polygon tool

You can pick the tool and simply start drawing a line made of individual points around the object in the image. The line doesn't need to be perfect, as once the starting and ending points are connected around the object, V7 will automatically create anchor points that can be adjusted for the desired accuracy.

Once you've created your polygon masks, you can add a label to the annotated object.
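
As a rough illustration of why a polygon can be tighter than a box, the sketch below computes a polygon's area with the shoelace formula and compares it with the area of its bounding box. The point list is a made-up example, and the helper names are hypothetical rather than part of any V7 API.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_area(points: List[Point]) -> float:
    """Area of a simple (non self-intersecting) polygon via the shoelace formula."""
    total = 0.0
    for i, (x1, y1) in enumerate(points):
        x2, y2 = points[(i + 1) % len(points)]  # wrap around to close the polygon
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def polygon_bbox(points: List[Point]) -> Tuple[float, float, float, float]:
    """Smallest axis-aligned box (x_min, y_min, x_max, y_max) containing the polygon."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))


# Hypothetical triangular object outlined with three anchor points.
triangle = [(0, 0), (4, 0), (0, 3)]
x_min, y_min, x_max, y_max = polygon_bbox(triangle)
box_area = (x_max - x_min) * (y_max - y_min)
fill_ratio = polygon_area(triangle) / box_area
print(f"The polygon covers {fill_ratio:.0%} of its bounding box")
```

In this example half of the bounding box is background, which is exactly the kind of unintended content a polygon mask leaves out.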

b) Auto-annotation tool

V7's auto-annotate tool is an alternative to manual polygon annotation that allows you to create polygon and pixel-wise masks 10x faster.

💡 Pro tip: Ready to train your models? Have a look at Mean Average Precision (mAP) Explained: Everything You Need to Know.

Keypoint tool

Keypoint annotation is another method to annotate an object by a series or collection of points.

This type of method is very useful in hand gesture detection, facial landmark detection, and motion tracking. Keypoints can be used alone, or in combination to form a point map that defines the pose of an object.

Keypoint skeleton tool

V7 also offers a keypoint skeleton tool: a network of keypoints connected by vectors, used specifically for pose estimation.

It is used to define the 2D or 3D pose of a multi-limbed object. Keypoint skeletons have a defined set of points that can be moved to adapt to an object’s appearance.

You can use keypoint annotation to train a machine learning model to mimic human pose and then extrapolate their functionality for task-specific applications, for example, AI-enabled robots.
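
One way to picture a keypoint skeleton is as a set of named points plus the edges that connect them. The sketch below is an assumed, simplified 2D data structure for illustration only; the class, field, and joint names are hypothetical and do not reflect V7's export format.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float]


@dataclass
class Skeleton:
    """A fixed set of named 2D keypoints connected by edges."""
    keypoints: Dict[str, Point]
    edges: List[Tuple[str, str]]

    def __post_init__(self) -> None:
        # Every edge must connect two keypoints that actually exist.
        for a, b in self.edges:
            if a not in self.keypoints or b not in self.keypoints:
                raise ValueError(f"edge ({a}, {b}) references an unknown keypoint")

    def move(self, name: str, x: float, y: float) -> None:
        """Reposition one keypoint; the set of points and edges stays the same."""
        if name not in self.keypoints:
            raise KeyError(name)
        self.keypoints[name] = (x, y)

    def segments(self) -> List[Tuple[Point, Point]]:
        """Line segments to draw, one per edge."""
        return [(self.keypoints[a], self.keypoints[b]) for a, b in self.edges]


# Hypothetical three-joint arm, adjusted to fit a new pose.
arm = Skeleton(
    keypoints={"shoulder": (0, 0), "elbow": (1, 1), "wrist": (2, 1)},
    edges=[("shoulder", "elbow"), ("elbow", "wrist")],
)
arm.move("wrist", 2, 2)
print(arm.segments())
```

Keeping the edge list fixed while only the coordinates change mirrors the idea that a skeleton has a defined set of points that are moved to fit each object.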

See how you can annotate your image and video data using the keypoint skeleton in V7.

💡 Pro tip: Check out 27+ Most Popular Computer Vision Applications and Use Cases.

Polyline tool

The polyline tool allows the user to create a sequence of joined lines.

You can use this tool by clicking around the object of interest to create a point. Each point will create a line by joining the current point with the previous one. It can be used to annotate roads, lane markings, traffic signs, etc.

Semantic segmentation

Semantic segmentation is the task of grouping together similar parts or pixels of the object in a given image. Annotating data using this method allows the machine learning algorithm to learn and understand a specific feature, and it can help it to classify anomalies.
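
A semantic segmentation label can be thought of as a grid with one class ID per pixel. The tiny mask and class names below are invented for illustration; real masks are the size of the image and are usually stored as arrays or image files.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical class IDs for a toy chest-scan example.
CLASSES: Dict[int, str] = {0: "background", 1: "lung", 2: "heart"}

# A 4x5 "image" where each entry is the class ID of that pixel.
mask: List[List[int]] = [
    [0, 0, 1, 1, 0],
    [0, 1, 1, 2, 0],
    [0, 1, 2, 2, 0],
    [0, 0, 0, 0, 0],
]


def pixel_counts(mask: List[List[int]], classes: Dict[int, str]) -> Dict[str, int]:
    """Count how many pixels were assigned to each class."""
    counts = Counter(class_id for row in mask for class_id in row)
    return {classes[class_id]: n for class_id, n in sorted(counts.items())}


print(pixel_counts(mask, CLASSES))
```

Per-class pixel counts like these are a quick sanity check on a segmentation dataset, for example to spot classes that are barely represented.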

Semantic segmentation is very useful in the medical field, where radiologists use it to annotate X-Ray, MRI, and CT scans to identify the region of interest. Here's an example of a chest X-Ray annotation.

If you are looking for medical data, check out our list of healthcare datasets and see how you can annotate medical imaging data using V7.

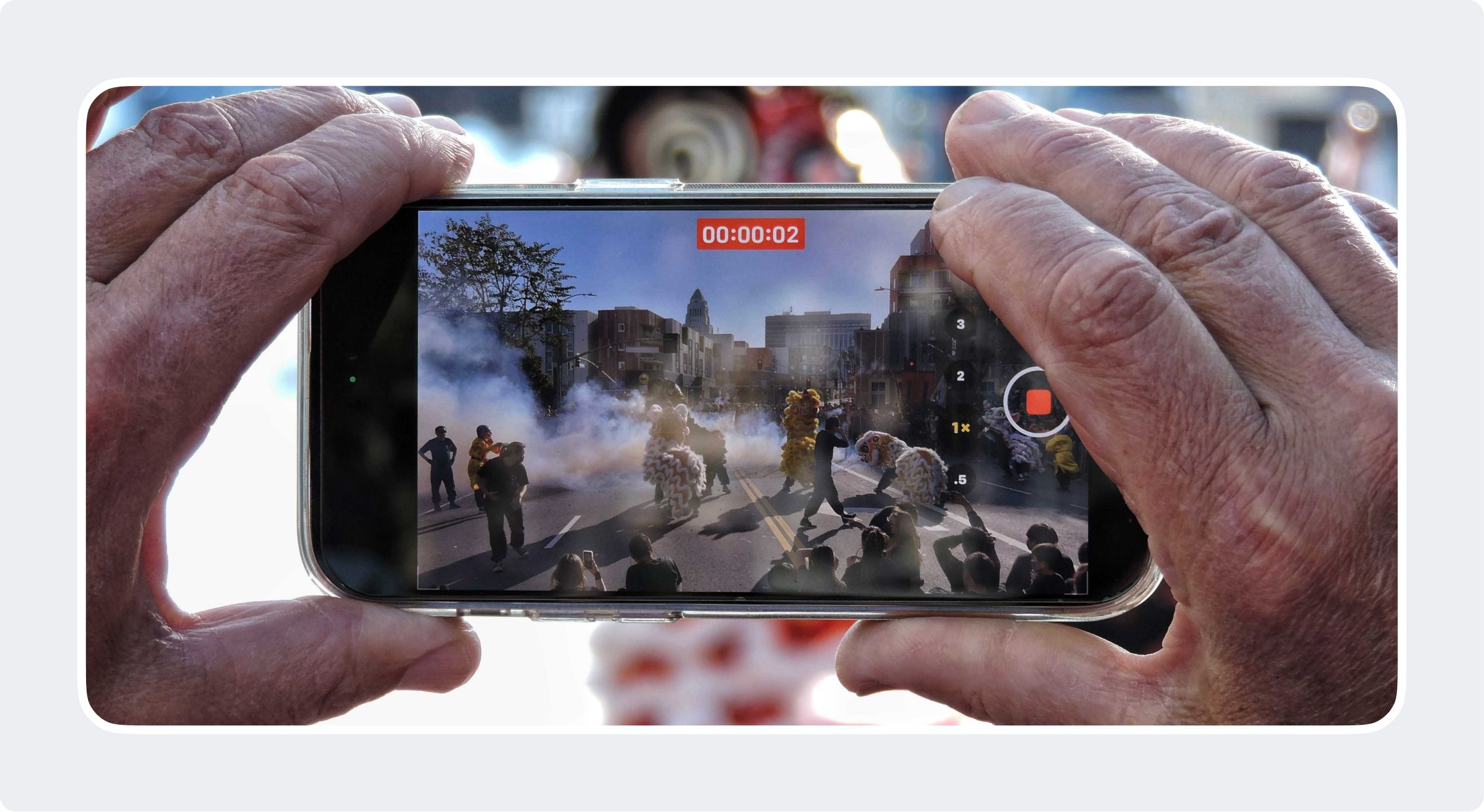

Video annotation

Similar to image annotation, video annotation is the task of labeling sections or clips in the video to classify, detect or identify desired objects frame by frame.

Video annotation uses the same techniques as image annotation like bounding boxes or semantic segmentation, but on a frame-by-frame basis. It is an essential technique for computer vision tasks such as localization and object tracking.

Here's how V7 handles video annotation.

Tackle any video format frame by frame. Use AI models to label sequences. Interpolate any annotation.
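
Interpolation means you label an object on a few keyframes and let the tool fill in the frames between them. The sketch below shows plain linear interpolation of a bounding box between two keyframes; it is an assumption about the general technique, not a description of how V7 implements it, and the function name and box format are hypothetical.

```python
from typing import Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def interpolate_box(box_a: Box, box_b: Box, frame_a: int, frame_b: int, frame: int) -> Box:
    """Linearly interpolate a box for `frame`, given keyframes at frame_a < frame_b."""
    if frame_a >= frame_b or not frame_a <= frame <= frame_b:
        raise ValueError("need frame_a < frame_b and frame_a <= frame <= frame_b")
    t = (frame - frame_a) / (frame_b - frame_a)  # 0.0 at frame_a, 1.0 at frame_b
    return tuple(a + t * (b - a) for a, b in zip(box_a, box_b))


# The annotator draws the box on frames 0 and 10; frame 5 is filled in automatically.
start_box = (0, 0, 10, 10)
end_box = (20, 10, 30, 20)
middle_box = interpolate_box(start_box, end_box, frame_a=0, frame_b=10, frame=5)
print(middle_box)
```

Linear interpolation works well for smooth motion; when an object changes direction or speed, adding another keyframe at that point keeps the in-between boxes accurate.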

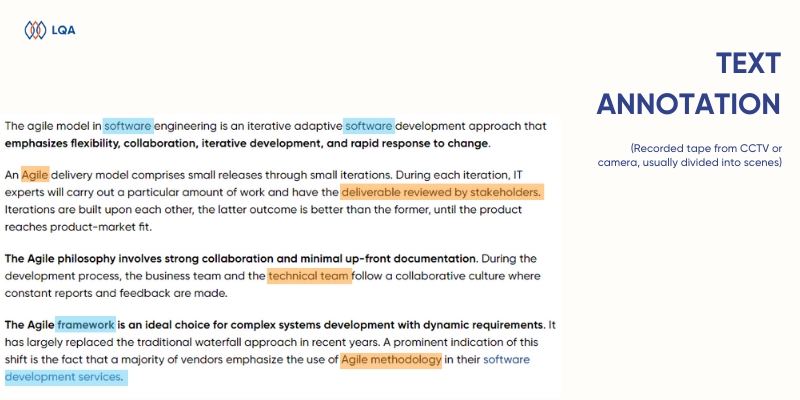

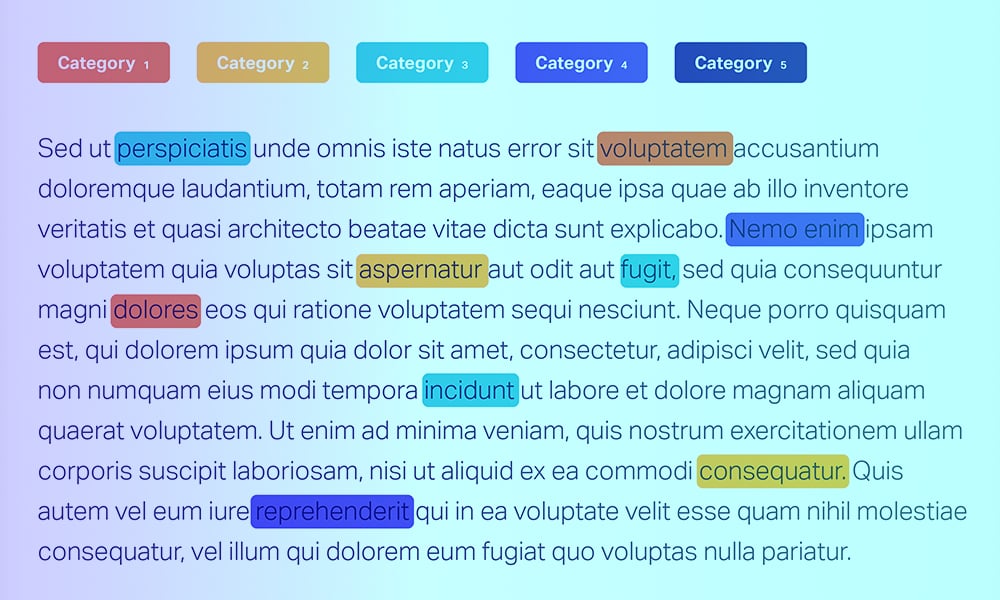

Text annotation

Data annotation is also essential in tasks related to Natural Language Processing (NLP).

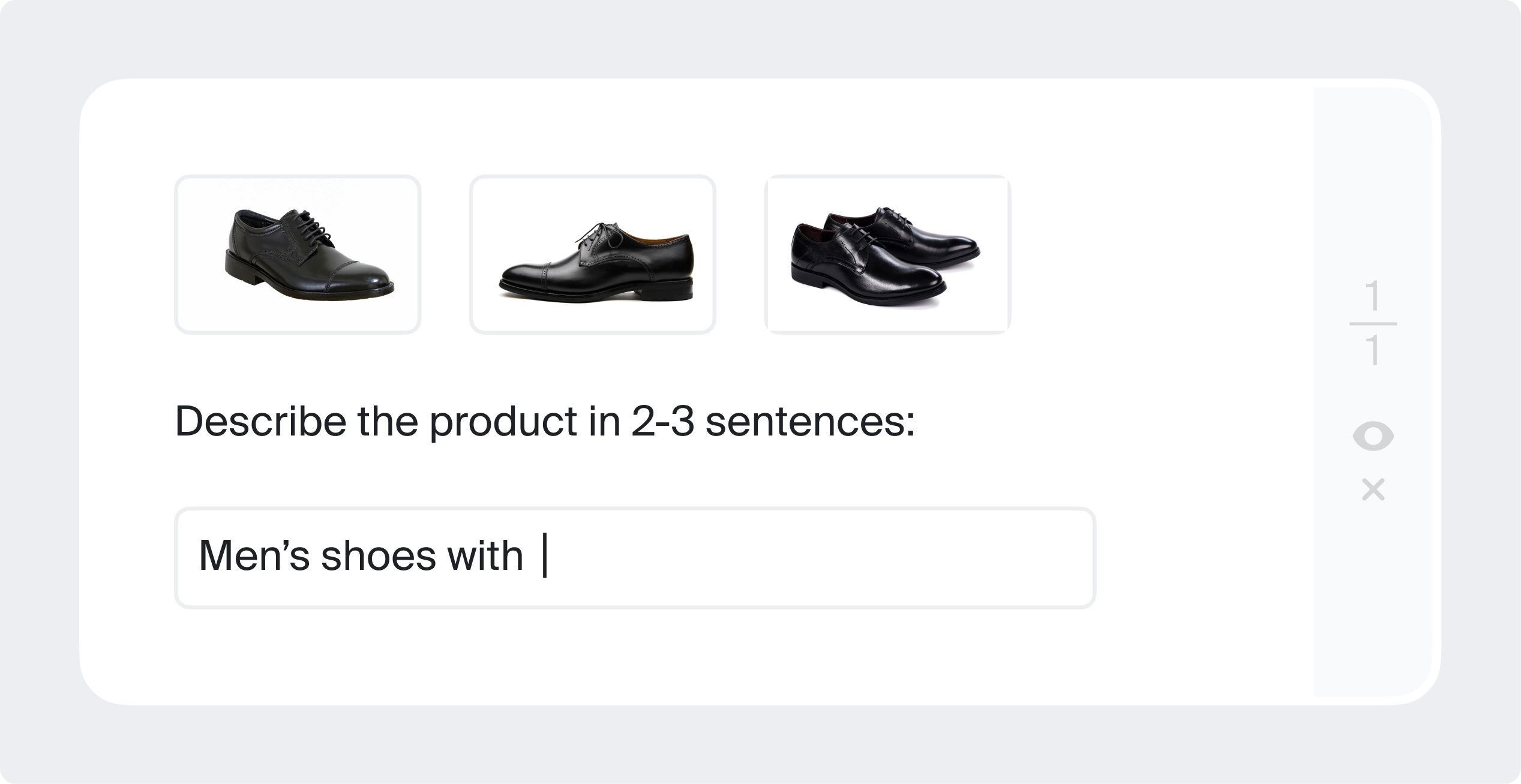

Text annotation refers to adding relevant information about the language data by adding labels or metadata. To get a more intuitive understanding of text annotation let's consider two examples.

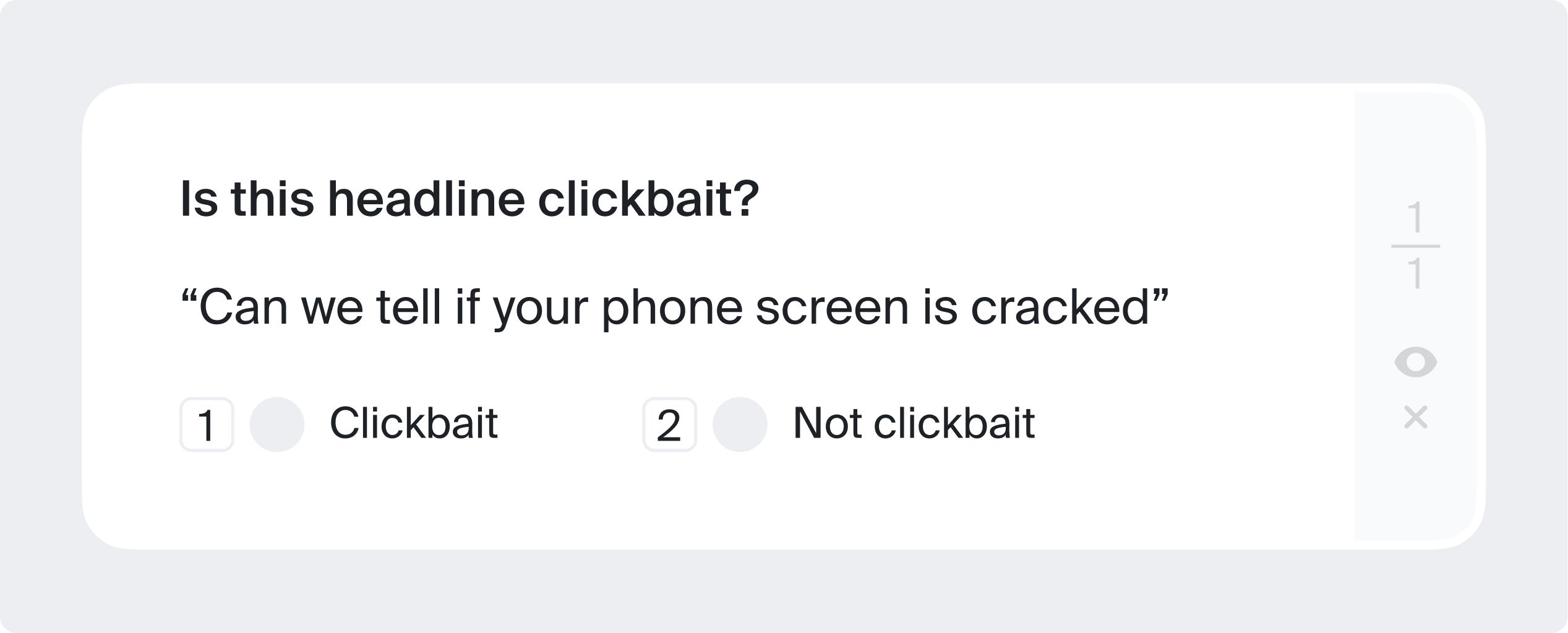

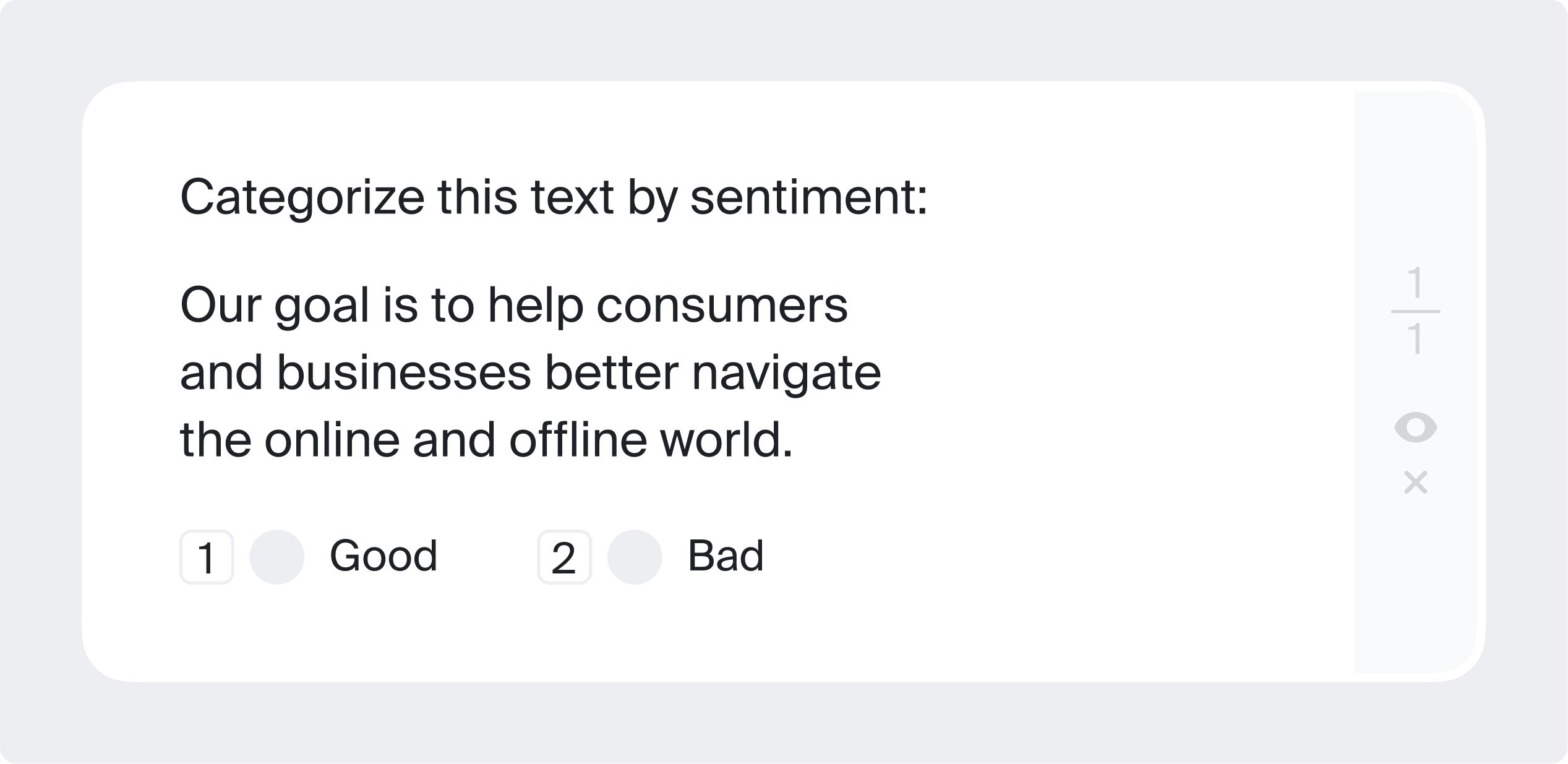

1. Assigning Labels

Adding labels means assigning a sentence with a word that describes its type. It can be described with sentiments, technicality, etc. For example, one can assign a label such as “happy” to this sentence “I am pleased with this product, it is great”.

2. Adding metadata

Similarly, in this sentence “I’d like to order a pizza tonight”, one can add relevant information for the learning algorithm, so that it can prioritize and focus on certain words. For instance, one can add information like “I’d like to order a pizza (food_item) tonight (time)”.
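
In practice, both kinds of text annotation are often stored alongside the raw text: a single label for the whole sentence, or a list of character spans with a tag each. The sketch below uses an assumed, simplified record layout; the field names and the `tag` helper are hypothetical.

```python
from typing import Dict, List


def tag(text: str, phrase: str, label: str) -> Dict[str, object]:
    """Return a span annotation for the first occurrence of `phrase` in `text`."""
    start = text.find(phrase)
    if start == -1:
        raise ValueError(f"{phrase!r} not found in text")
    return {"start": start, "end": start + len(phrase), "label": label}


# 1. Assigning a label to a whole sentence.
labeled_sentence = {
    "text": "I am pleased with this product, it is great",
    "label": "happy",
}

# 2. Adding metadata to individual words via character spans.
text = "I'd like to order a pizza tonight"
annotations: List[Dict[str, object]] = [
    tag(text, "pizza", "food_item"),
    tag(text, "tonight", "time"),
]

for span in annotations:
    print(text[span["start"]:span["end"]], "->", span["label"])
```

Storing offsets rather than modified text keeps the original sentence intact, so several annotation layers can refer to the same text.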

Now, let’s briefly explore various types of text annotations.

Sentiment Annotation

Sentiment annotation means assigning labels that represent human emotions such as sad, happy, angry, positive, negative, neutral, etc. Sentiment annotation finds application in any task related to sentiment analysis (e.g. in retail to measure customer satisfaction based on facial expressions).

Intent Annotation

The intent annotation also assigns labels to the sentences, but it focuses on the intent or desire behind the sentence. For instance, in a customer service scenario, a message like “I need to talk to Sam” can route the call to Sam alone, or a message like “I have a concern about the credit card” can route the call to the team dealing with credit card issues.

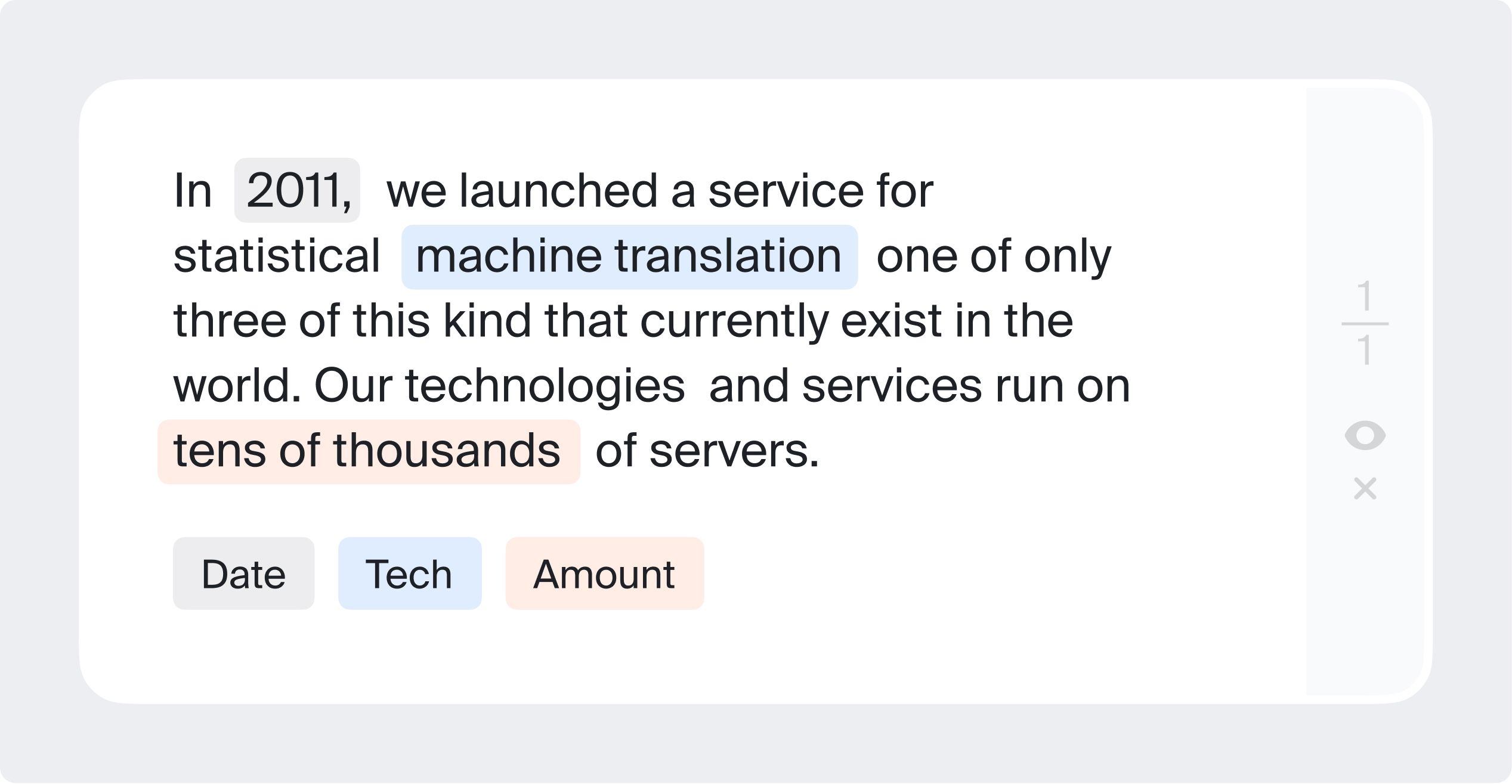

Named Entity Annotation (NER)

Named entity recognition (NER) aims to detect and classify predefined named entities or special expressions in a sentence.

It is used to search for words based on their meaning, such as the names of people, locations, etc. NER is useful in extracting information along with classifying and categorizing them.

Semantic annotation

Semantic annotation adds metadata, additional information, or tags to text that involves concepts and entities, such as people, places, or topics, as we saw earlier.

Automated data annotation vs. human annotation

As the hours pass by, human annotators get tired and less focused, which often leads to poor performance and errors. Data annotation is a task that demands utter focus and skilled personnel, and manual annotation makes the process both time-consuming and expensive.

That's why leading ML teams bet on automated data labeling.

Here's how it works—

Once the annotation task is specified, a trained machine learning model can be applied to a set of unlabeled data. The model will then be able to predict the appropriate labels for the new and unseen dataset.

Here's how you can create an automated workflow in V7.

However, in cases where the model fails to label correctly, humans can intervene, review, and correct the mislabelled data. The corrected and reviewed data can be then used to train the labeling model once again.
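
The review loop described above can be sketched as a simple routing step: predictions the model is confident about are accepted automatically, and the rest go to a human reviewer. The confidence threshold, the tuple layout, and the function name are assumptions made for illustration.

```python
from typing import List, Tuple

Prediction = Tuple[str, str, float]  # (item_id, predicted_label, confidence)


def route_predictions(
    predictions: List[Prediction], threshold: float = 0.9
) -> Tuple[List[Tuple[str, str]], List[Tuple[str, str]]]:
    """Split model predictions into auto-accepted labels and items for human review."""
    auto_accepted, needs_review = [], []
    for item_id, label, confidence in predictions:
        target = auto_accepted if confidence >= threshold else needs_review
        target.append((item_id, label))
    return auto_accepted, needs_review


# Hypothetical model output for three unlabeled images.
predictions = [
    ("img_001", "cat", 0.98),
    ("img_002", "dog", 0.55),
    ("img_003", "cat", 0.91),
]
auto_accepted, needs_review = route_predictions(predictions)
print("auto-accepted:", auto_accepted)
print("needs review:", needs_review)
```

Items corrected by reviewers can then be added back to the training set, which is how the labeling model improves over successive rounds.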

Automated data labeling can save you tons of money and time, but it can lack accuracy. In contrast, human annotation can be much more costly, but it tends to be more accurate.

Finally, let me show you how you can take your data annotation to another level with V7 and start building robust computer vision models today.

To get started, go ahead and sign up for your 14-day free trial.

Once you are logged in, here's what to do next.

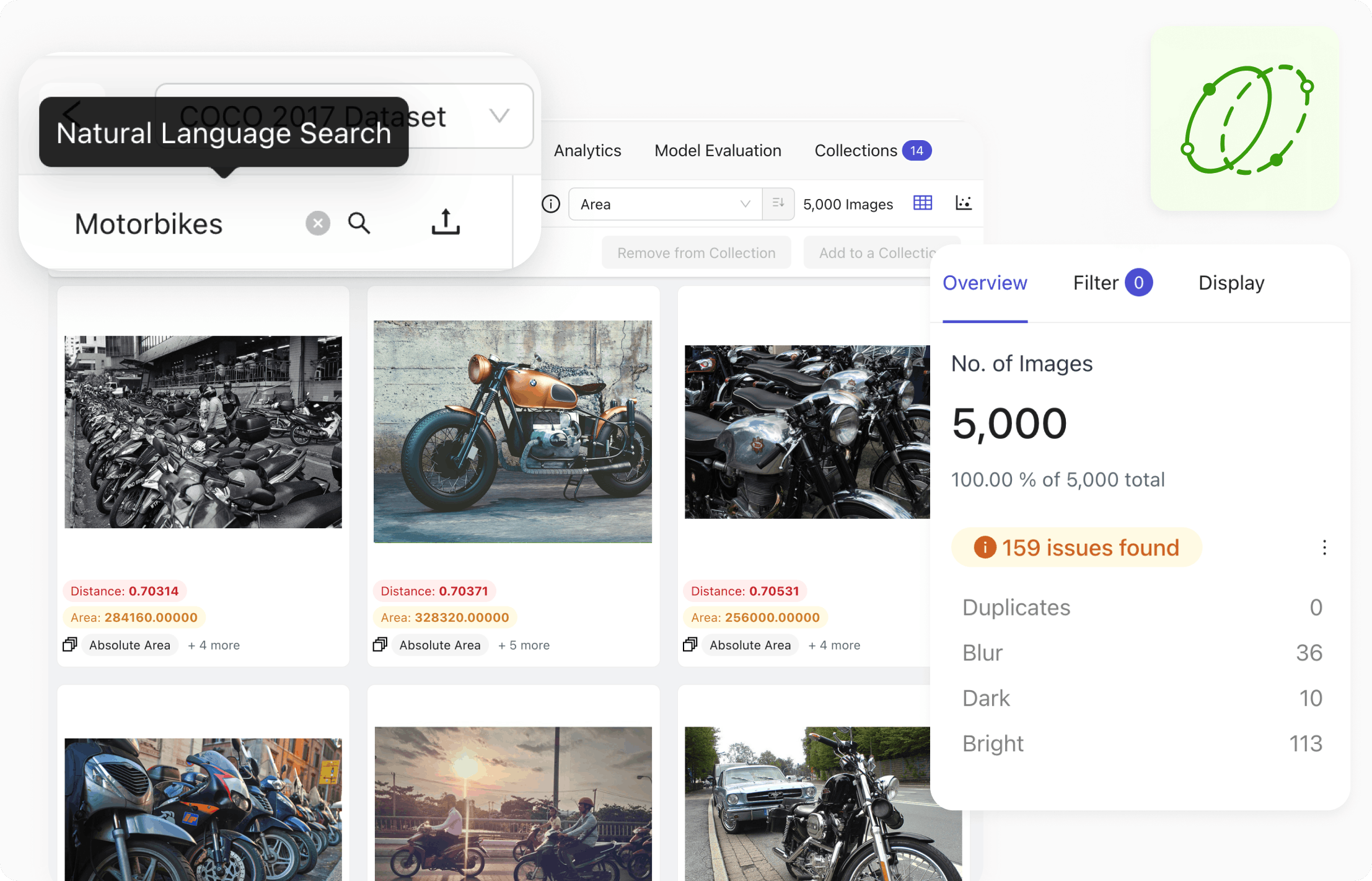

1. Collect and prepare training data

First and foremost, you need to collect the data you want to work with. Make sure that you access quality data to avoid issues with training your models.

Feel free to check out public datasets that you can find here:

- 65+ Best Free Datasets for Machine Learning

- 20+ Open Source Computer Vision Datasets

Once the data is downloaded, separate training data from the testing data. Also, make sure that your training data is varied, as it will enable the learning algorithm to extract rich information and avoid overfitting and underfitting.
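
Separating training data from testing data can be as simple as shuffling the file list once and cutting it in two. This is a minimal sketch; the 80/20 ratio, the fixed seed, and the file names are assumptions, and real projects often also stratify by class.

```python
import random
from typing import List, Sequence, Tuple


def train_test_split(
    items: Sequence[str], test_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[str], List[str]]:
    """Shuffle items reproducibly and split them into (train, test) lists."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical file names standing in for a downloaded dataset.
files = [f"image_{i:03d}.jpg" for i in range(10)]
train_files, test_files = train_test_split(files)
print(len(train_files), "training files,", len(test_files), "test files")
```

Fixing the seed matters because the test set should stay the same across experiments; otherwise results from different runs are not comparable.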

2. Upload data to V7

Once the data is ready, you can upload it in bulk. Here's how:

1. Go to the Datasets tab in V7's dashboard, and click on “+ New Dataset”.

2. Give a name to the dataset that you want to upload.

It's worth mentioning that V7 offers three ways of uploading data to their server.

One is the conventional method of dragging and dropping the desired photos or folder to the interface. Another one is uploading by browsing in your local system. And the third one is by using the command line (CLI SDK) to directly upload the desired folder into the server.

Once the data has been uploaded, you can add your classes. This is especially helpful if you are outsourcing your data annotation or collaborating with a team, as it allows you to create an annotation checklist and guidelines.

If you are annotating yourself, you can skip this part and add classes on the go later on in the "Classes" section or directly from the annotated image.

💡 Pro tip: Not sure what kind of model you want to build? Check out 15+ Top Computer Vision Project Ideas for Beginners.

3. Decide on the annotation type

If you have followed the steps above and decided to “Add New Class”, then you will have to add the class name and choose the annotation type for the class or the label that you want to add.

As mentioned before, V7 offers a wide variety of annotation tools, including:

- Auto-annotation

- Keypoint skeleton

Once you have added the name of your class, the system will save it for the whole dataset.

Image annotation experience in V7 is very smooth.

In fact, don't believe just me—here's what one of our users said in his G2 review:

V7 gives fast and intelligent auto-annotation experience. It's easy to use. UI is really interactive.

Apart from a wide range of available annotation tools, V7 also comes equipped with advanced dataset management features that will help you organize and manage your data from one place.

And let's not forget about V7's Neural Networks that allow you to train instance segmentation, image classification, and text recognition models.

Unlike other annotation tools, V7 allows you to annotate your data as a video rather than individual images.

You can upload your videos in any format, add and interpolate your annotations, create keyframes and sub annotations, and export your data in a few clicks!

Uploading and annotating videos is as simple as annotating images.

V7 offers a frame-by-frame annotation method where you can create a bounding box or semantic segmentation mask on a per-frame basis.

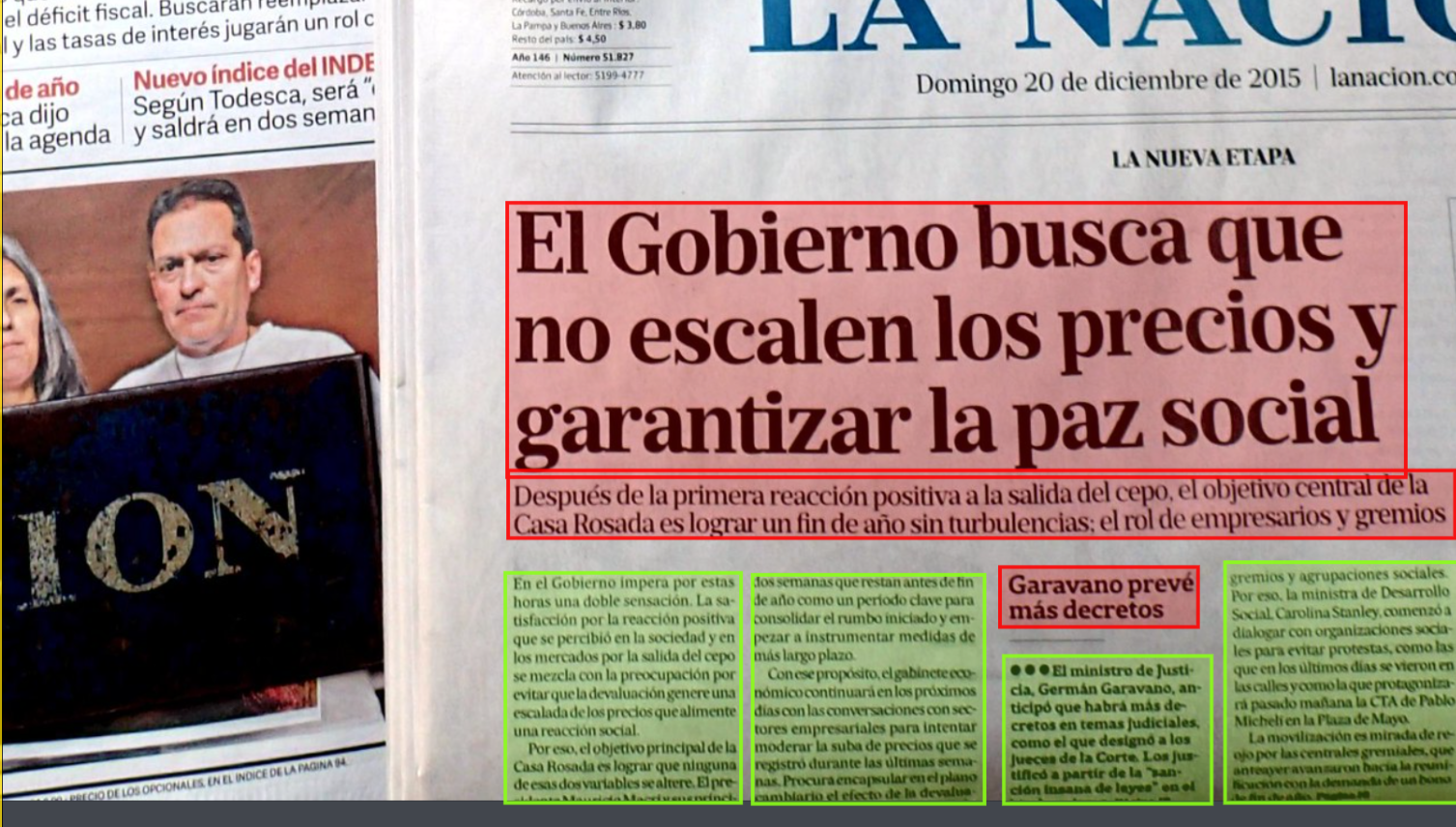

Apart from image and video annotation, V7 provides text annotation as well. Users can take advantage of the Text Scanner model that can automatically read the text in the images.

To get started, just go to the Neural Networks tab and run the Text Scanner model.

Once you have turned it on, you can go back to the dataset tab and load the dataset. It is the same process as before.

Now you can create a new bounding box class. The bounding box will detect text in the image. You can specify the subtype as Text in the Classes page of your dataset.

Once the data is added and the annotation type is defined, you can then add the Text Scanner model to your workflow under the Settings page of your dataset.

After adding the model to your workflow, map your new text class.

Now, go back to the dataset tab and send your data to the Text Scanner model by clicking on ‘Advance 1 Stage’; this will start the training process.

Once the training is over, the model will detect and read text on any kind of image, whether it's a document, photo, or video.

💡 Pro tip: If you are looking for a free image annotation tool, check out The Complete Guide to CVAT—Pros & Cons

Data annotation: next steps.

Nice job! You've made it that far 😉

By now, you should have a pretty good idea of what data annotation is and how you can annotate data for machine learning.

We've covered image, video, and text annotation, which are used in training machine learning models. If you want to apply your new skills, go ahead, pick a project, sign up to V7, collect some data, and start labeling it to build image classifiers or object detectors!

💡 To learn more, go ahead and check out:

An Introductory Guide to Quality Training Data for Machine Learning

Simple Guide to Data Preprocessing in Machine Learning

Data Cleaning Checklist: How to Prepare Your Machine Learning Data

3 Signs You Are Ready to Annotate Data for Machine Learning

The Beginner’s Guide to Contrastive Learning

9 Reinforcement Learning Real-Life Applications

Mean Average Precision (mAP) Explained: Everything You Need to Know

A Step-by-Step Guide to Text Annotation [+Free OCR Tool]

The Essential Guide to Data Augmentation in Deep Learning

Nilesh Barla is the founder of PerceptronAI, which aims to provide solutions in medical and material science through deep learning algorithms. He studied metallurgical and materials engineering at the National Institute of Technology Trichy, India, and enjoys researching new trends and algorithms in deep learning.

“Collecting user feedback and using human-in-the-loop methods for quality control are crucial for improving Al models over time and ensuring their reliability and safety. Capturing data on the inputs, outputs, user actions, and corrections can help filter and refine the dataset for fine-tuning and developing secure ML solutions.”

Building AI products? This guide breaks down the A to Z of delivering an AI success story.

Related articles

- Software Testing

- Software Development

- Data Annotation

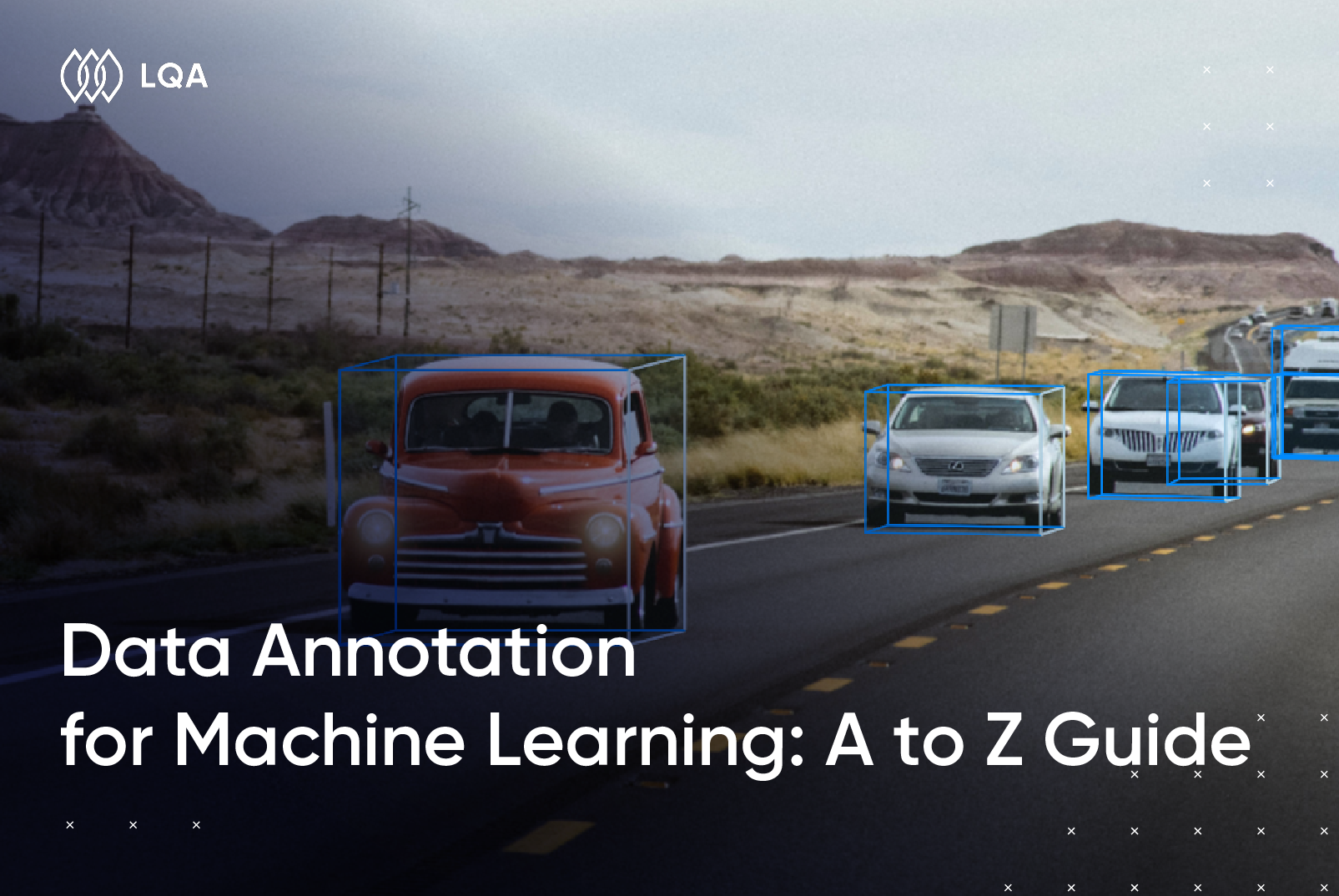

Data Annotation for Machine Learning: A to Z Guide

In this dynamic era of machine learning, the fuel that powers accurate algorithms and AI breakthroughs is high-quality data. To help you demystify the crucial role of data annotation for machine learning, and master the complete process of data annotation from its foundational principles to advanced techniques, we’ve created this comprehensive guide. Let’s dive in and enhance your machine-learning journey.

Data Annotation for Machine Learning

What is machine learning.

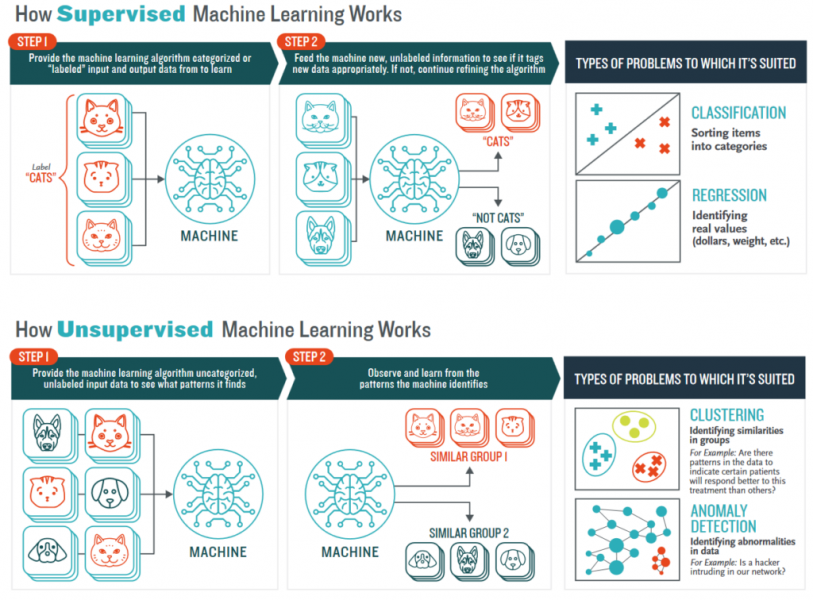

Machine learning is embedded in AI and allows machines to perform specific tasks through training. With data AI annotation, it can learn about pretty much everything. Machine learning techniques can be described into four types: Unsupervised learning, Semi-Supervised Learning, Supervised Learning, and Reinforcement learning

- Supervised Learning: Supervised learning learns from a set of labeled data. The algorithm predicts the outcome of new data based on previously known labeled data.

- Unsupervised Learning: In unsupervised machine learning, training is based on unlabeled data. With this approach, you don’t know the outcome or the label of the input data.

- Semi-Supervised Learning: The AI learns from a dataset that is partly labeled. This is a combination of the two types above.

- Reinforcement Learning: Reinforcement learning is a technique that helps a system determine its behavior to maximize its reward. It is commonly applied to game playing, where the algorithm needs to determine the next move to achieve the highest score.

Although there are four types of techniques, the most frequently used are unsupervised and supervised learning. You can see how unsupervised and supervised learning works according to Booz Allen Hamilton’s description in this picture:

How data annotation for machine learning works

What is Annotated Data?

Data annotation for machine learning is the process of labeling or tagging data to make it understandable and usable for machine learning algorithms. This involves adding metadata, such as categories, tags, or attributes, to raw data, making it easier for algorithms to recognize patterns and learn from the data.

In fact, data annotation, or AI data processing, was once the most unwanted step of implementing AI in real life. Data annotation is a crucial step in creating supervised machine learning models, where the algorithm learns from labeled examples to make predictions or classifications.

The Importance of Data Annotation in Machine Learning

Data annotation plays a pivotal role in machine learning for several reasons:

- Training Supervised Models: Most machine learning algorithms, especially supervised learning models, require labeled data to learn patterns and make predictions. Without accurate annotations, models cannot generalize well to new, unseen data.

- Quality and Performance: The quality of annotations directly impacts the quality and performance of machine learning models. Inaccurate or inconsistent annotations can lead to incorrect predictions and reduced model effectiveness.

- Algorithm Learning: Data annotation provides the algorithm with labeled examples, helping it understand the relationships between input data and the desired output. This enables the algorithm to learn and generalize from these examples.

- Feature Extraction: Annotations can also involve marking specific features within the data, aiding the algorithm in understanding relevant patterns and relationships.

- Benchmarking and Evaluation: Labeled datasets allow for benchmarking and evaluating the performance of different algorithms or models on standardized tasks.

- Domain Adaptation: Annotations can help adapt models to specific domains or tasks by providing tailored labeled data.

- Research and Development: In research and experimental settings, annotated data serves as a foundation for exploring new algorithms, techniques, and ideas.

- Industry Applications: Data annotation is essential in various industries, including healthcare (medical image analysis), autonomous vehicles (object detection), finance (fraud detection), and more.

Overall, data annotation is a critical step in the machine-learning pipeline that facilitates the creation of accurate, effective, and reliable models capable of performing a wide range of tasks across different domains.

Best data annotation for machine learning company

How to Process Data Annotation for Machine Learning?

Step 1: Data Collection

Data collection is the process of gathering and measuring information from countless different sources. To use the data we collect to develop practical artificial intelligence (AI) and machine learning solutions, it must be collected and stored in a way that makes sense for the business problem at hand.

There are several ways to find data. For classification tasks, you can use the class names as search keywords and crawl the Internet for matching images. You can also collect photos and videos from social networking sites, satellite images from Google, and freely available data from public cameras or cars (Waymo, Tesla), or buy data from third parties (pay attention to its accuracy). Some standard datasets are available for free, such as Common Objects in Context (COCO), ImageNet, and Google’s Open Images.

Some common data types are Image, Video, Text, Audio, and 3D sensor data.

- Image data annotation for machine learning (photographs of people, objects, animals, etc.)

Image is perhaps the most common data type in the field of data annotation for machine learning. Since it deals with the most basic type of data there is, it plays an important part in a wide range of applications, such as robotic vision, facial recognition, or any kind of application that has to interpret images.

From the raw datasets provided from multiple sources, it is vital for these to be tagged with metadata that contains identifiers, captions, or keywords.

The fields that require the most effort for data annotation for machine learning are healthcare applications (as in our case study of blood-cell annotation) and autonomous vehicles (as in our case study of traffic light and sign annotation). With effective and accurate annotation of images, AI applications can work more reliably and with less intervention from humans.

To train these solutions, metadata must be assigned to the images in the form of identifiers, captions, or keywords. From computer vision systems used by self-driving vehicles and machines that pick and sort produce to healthcare applications that auto-identify medical conditions, there are many use cases that require high volumes of annotated images. Image annotation increases precision and accuracy by effectively training these systems.

Image data annotation for machine learning

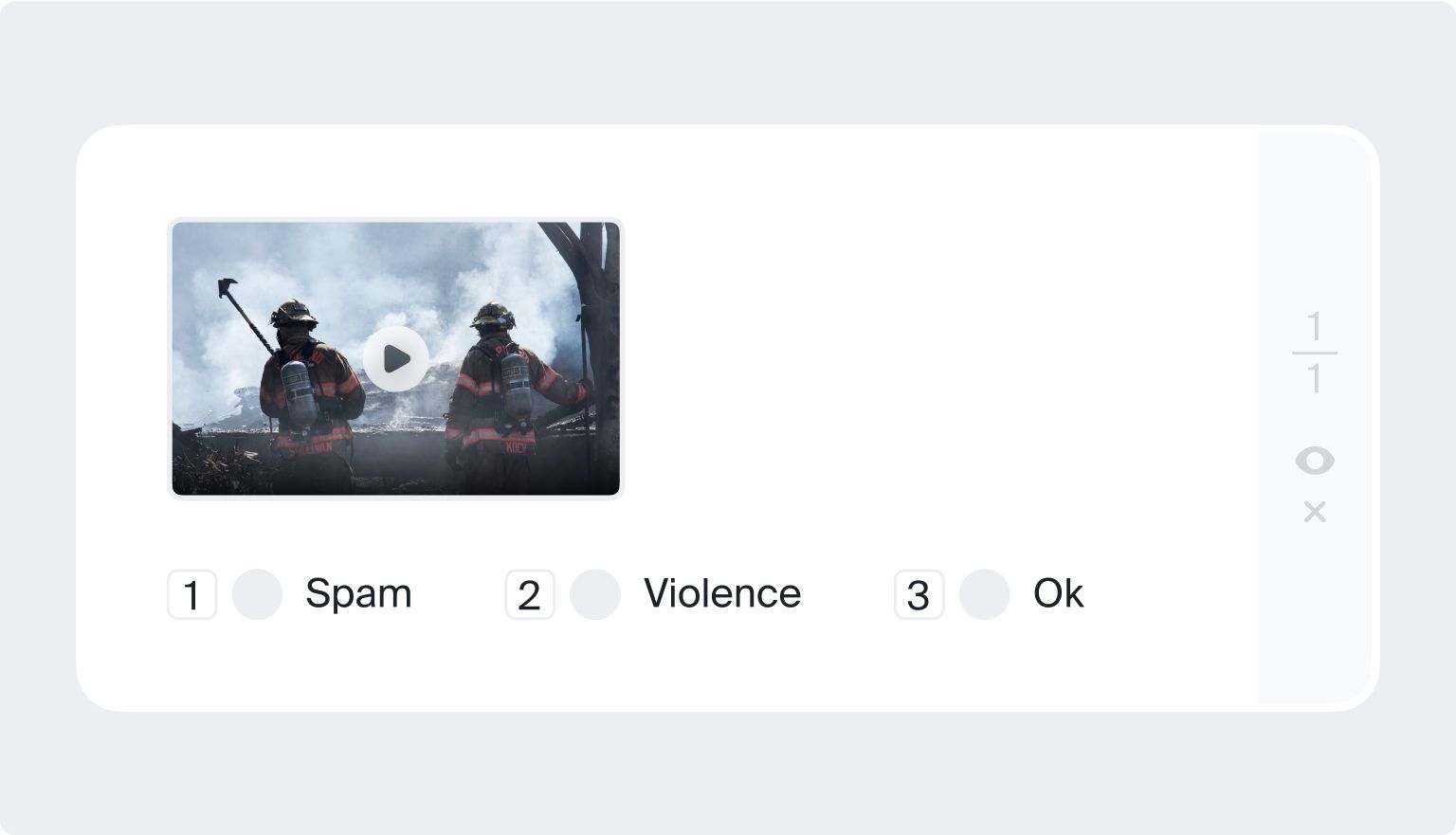

- Video data annotation for machine learning (Recorded tape from CCTV or camera, usually divided into scenes)

When compared with images, video is a more complex form of data that demands a bigger effort to annotate correctly. To put it simply, a video consists of different frames which can be understood as pictures. For example, a one-minute video can have thousands of frames, and to annotate this video, one must invest a lot of time.
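
The frame arithmetic is easy to check. The sketch below assumes a frame rate of 30 frames per second and, as a second assumption, that only every tenth frame is labeled by hand while the rest are filled in by the tool; both numbers are illustrative.

```python
def frame_count(duration_s: float, fps: float) -> int:
    """Total number of frames in a clip of the given length."""
    return round(duration_s * fps)


def keyframes_needed(total_frames: int, keyframe_every: int) -> int:
    """Frames labeled by hand when only every n-th frame (0, n, 2n, ...) is a keyframe."""
    return (total_frames + keyframe_every - 1) // keyframe_every


total = frame_count(duration_s=60, fps=30)
manual = keyframes_needed(total, keyframe_every=10)
print(f"{total} frames in one minute, {manual} labeled by hand")
```

At 30 frames per second a one-minute clip already has 1,800 frames, which is why labeling a subset of frames and propagating the labels is a common way to keep video annotation manageable.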

One outstanding feature of video annotation in the Artificial Intelligence and Machine Learning model is that it offers great insight into how an object moves and its direction.

A video can also show whether an object is partially obstructed, whereas image annotation is limited in this respect.

Video data annotation for machine learning

- Text data annotation for machine learning: Different types of documents include numbers and words and they can be in multiple languages.

Algorithms use large amounts of annotated data to train AI models, which is part of a larger data labeling workflow. During the annotation process, a metadata tag is used to mark up the characteristics of a dataset. With text annotation, that data includes tags that highlight criteria such as keywords, phrases, or sentences. In certain applications, text annotation can also include tagging various sentiments in text, such as “angry” or “sarcastic” to teach the machine how to recognize human intent or emotion behind words.

The annotated data, known as training data, is what the machine processes. The goal? Help the machine understand the natural language of humans. This procedure, combined with data pre-processing, is part of natural language processing, or NLP.

Text data annotation for machine learning

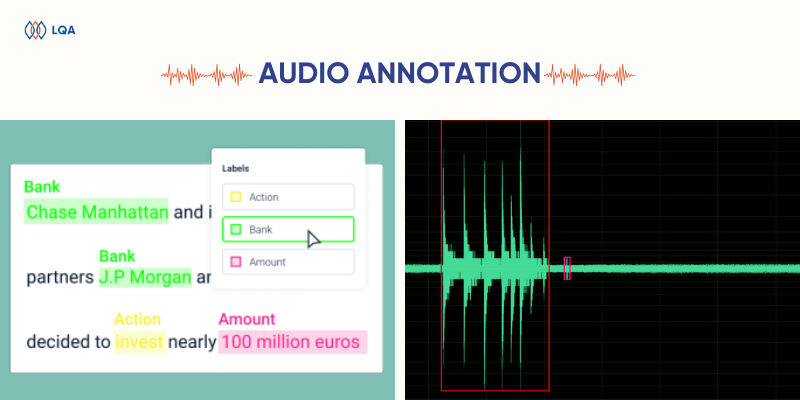

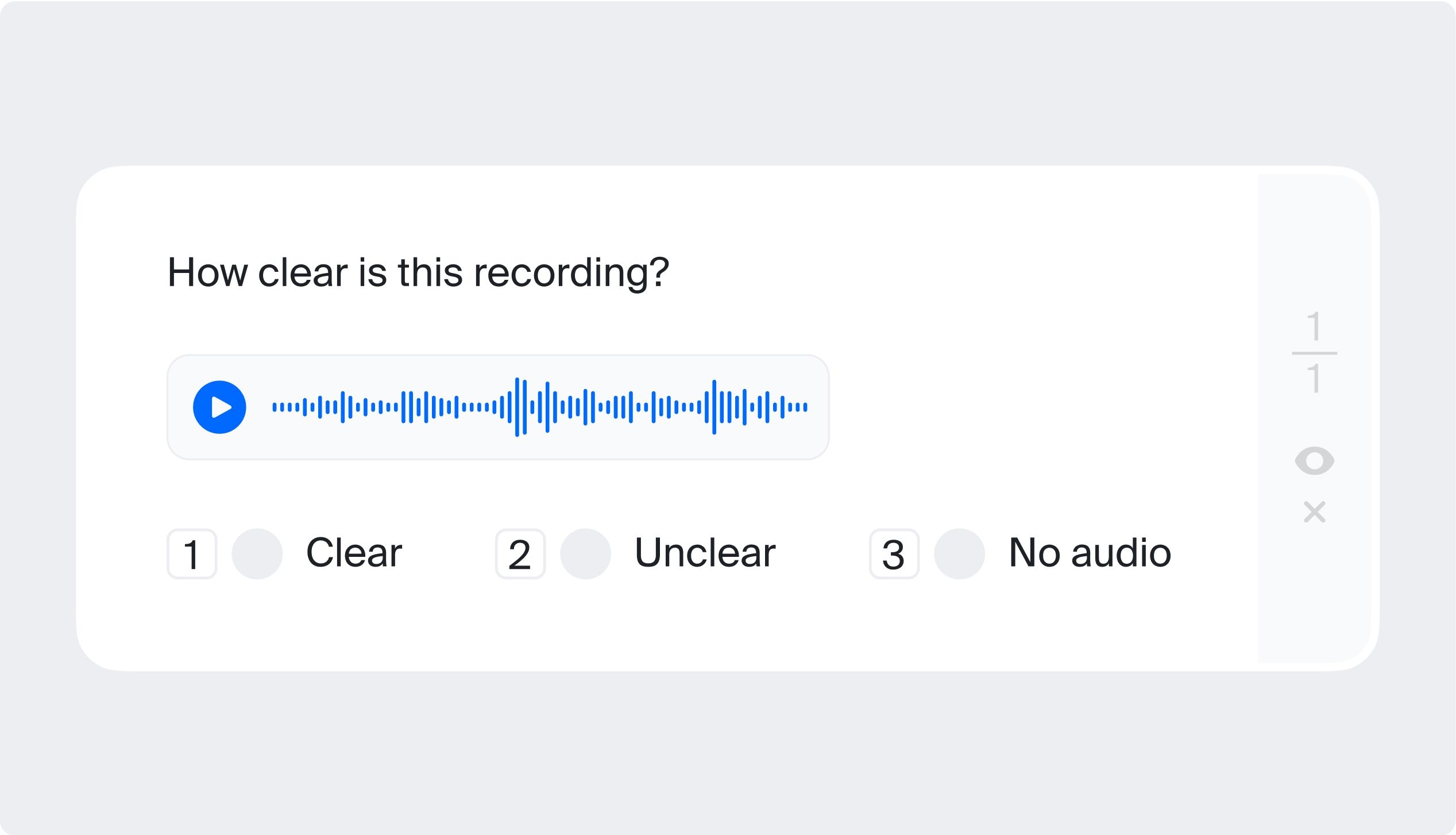

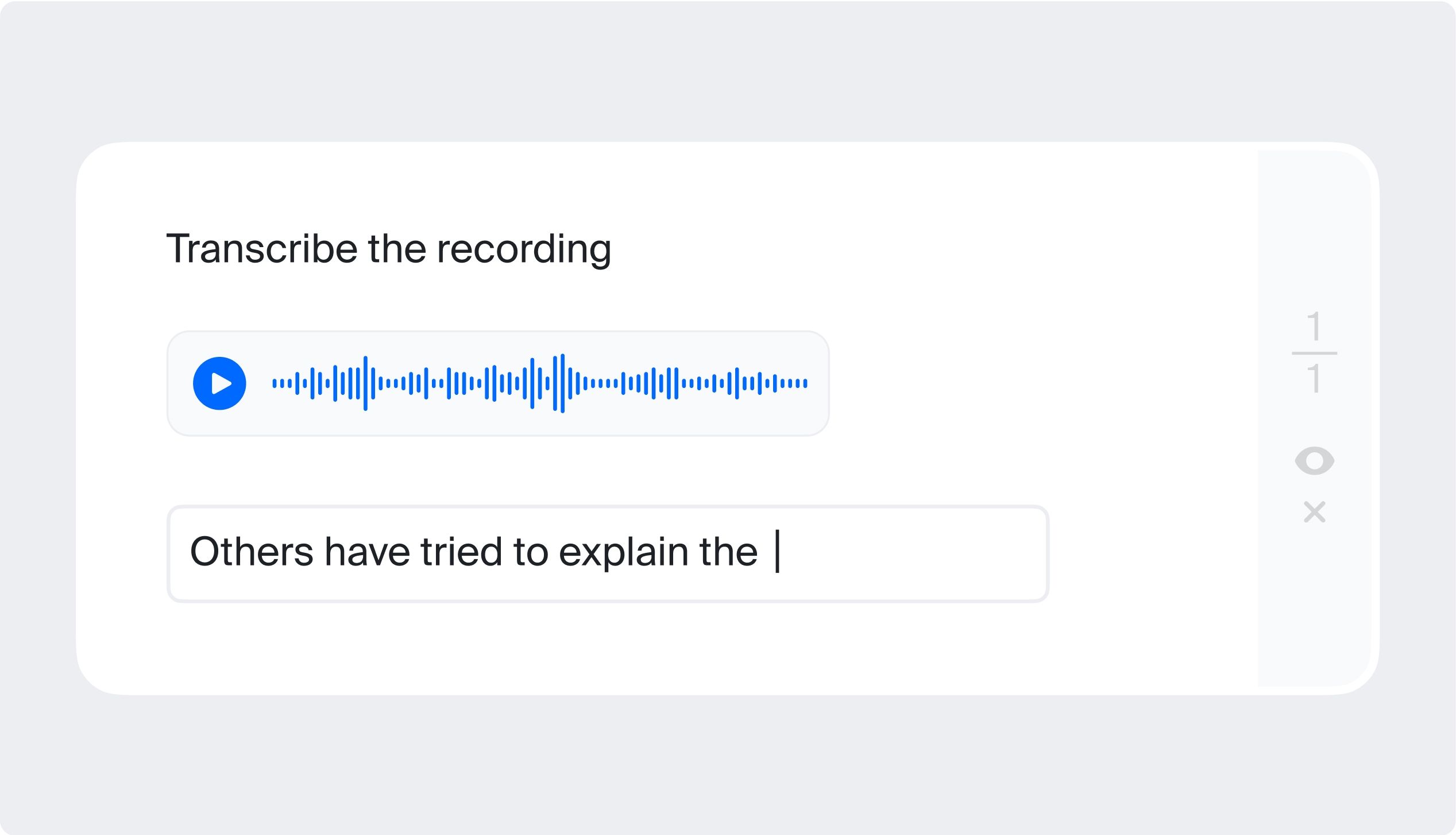

- Audio data annotation for machine learning: Sound recordings from people with different demographics.

As the market is trending toward voice AI data annotation for machine learning, LTS Group provides top-notch service in annotating voice data. We have annotators fluent in multiple languages.

All types of sounds recorded as audio files can be annotated with additional keynotes and suitable metadata. The Cogito annotation team is capable of exploring the audio features and annotating the corpus with intelligent audio information. Each word in the audio is carefully listened to by the annotators in order to recognize the speech correctly with our sound annotation service.

The speech in an audio file contains different words and sentences that are meant for the listeners. Making such phrases in the audio files recognizable to machines is possible by using a special data labeling technique while annotating the audio. In NLP or NLU, machine algorithms for speech recognition need audio linguistic annotation to recognize such audio.

Audio data annotation facilitates various real-life AI applications. A prime example is the application of an AI-powered audio transcription tool that swiftly generates accurate transcripts for podcast episodes within minutes.

Audio data annotation for machine learning

- 3D Sensor data annotation for machine learning: 3D models generated by sensor devices.

No matter what, money is always a factor. 3D-capable sensors greatly vary in build complexity and accordingly – in price, ranging from hundreds to thousands of dollars. Choosing them over the standard camera setup is not cheap, especially given that you would usually need multiple units in order to guarantee a large enough field of view.

3D sensor data annotation for machine learning

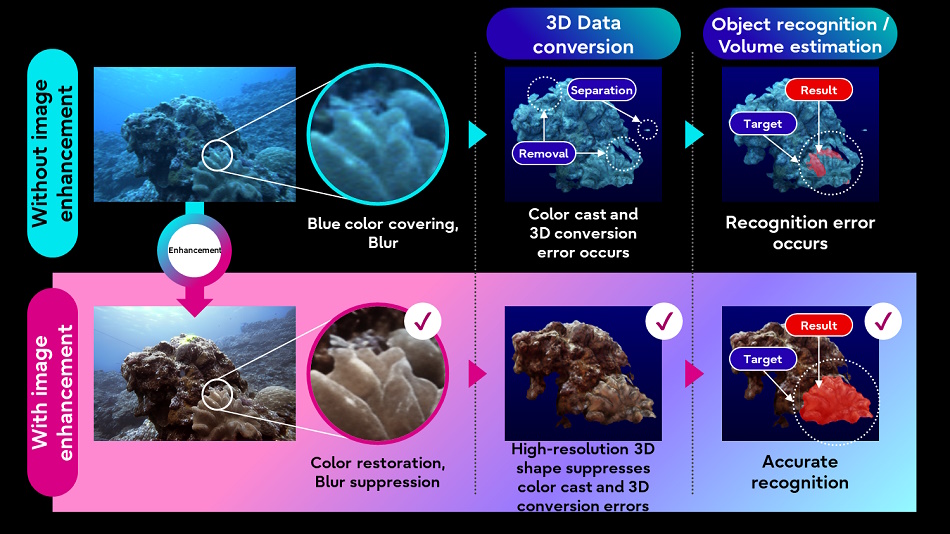

Low-resolution data annotation for machine learning

In many cases, the data gathered by 3D sensors is nowhere near as dense or high-resolution as the data from conventional cameras. In the case of LiDARs, a standard sensor discretizes the vertical space in lines (the number of lines varies), each having a couple of hundred detection points. This produces approximately 1000 times fewer data points than what is contained in a standard HD picture. Furthermore, the further away the object is located, the fewer samples land on it, due to the conical shape of the laser beams’ spread. Thus the difficulty of detecting objects increases sharply with their distance from the sensor.

Step 2: Problem Identification

Knowing what problem you are dealing with will help you to decide the techniques you should use with the input data. In computer vision, there are some tasks such as:

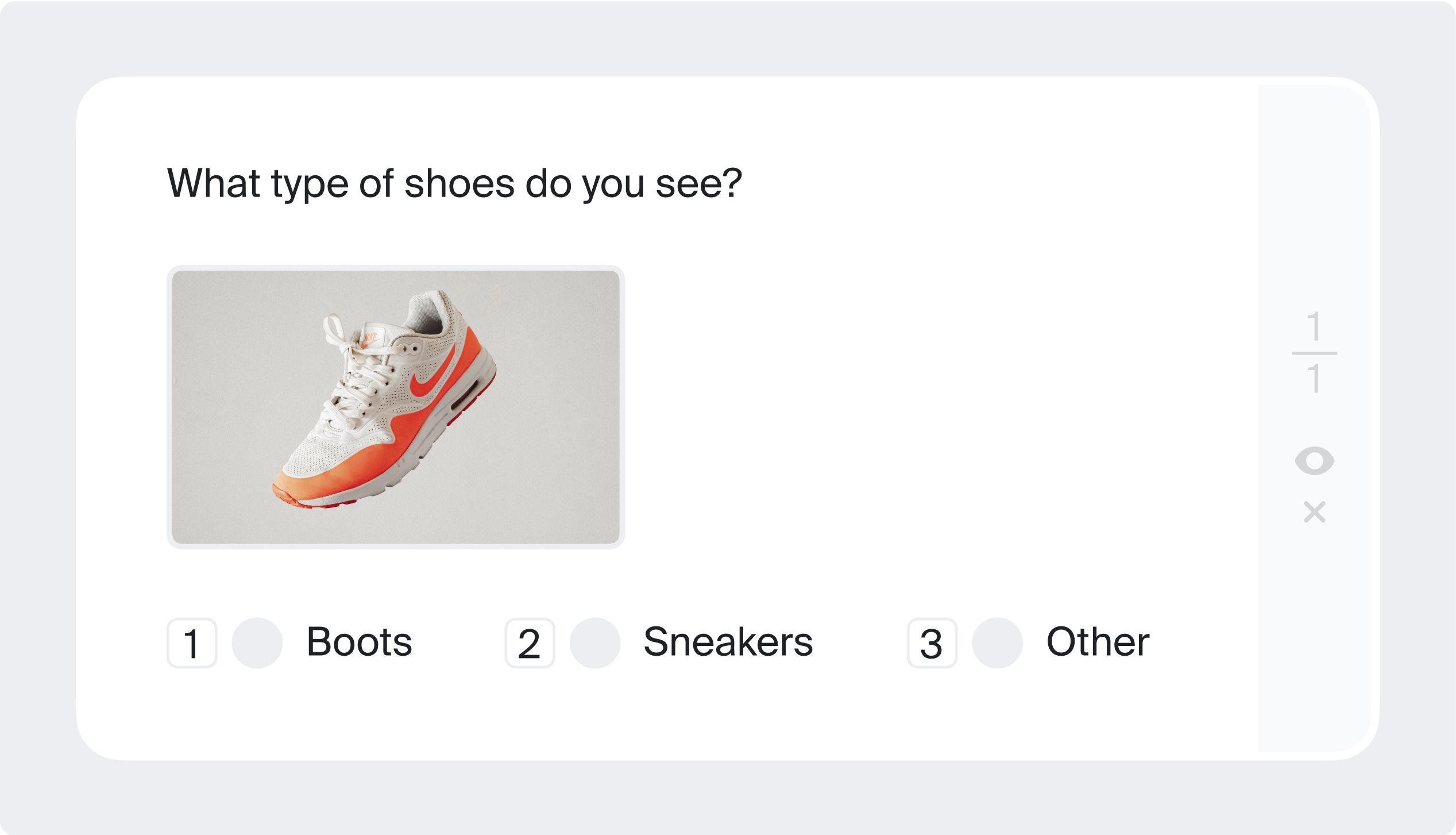

- Image classification: Collect and classify the input data by assigning a class label to an image.

- Object detection & localization: Detect and locate the presence of objects in an image and indicate their location with a bounding box, point, line, or polyline.

- Object instance / semantic segmentation: In semantic segmentation, you label each pixel with a class of objects (Car, Person, Dog, etc.) or non-objects (Water, Sky, Road, etc.). Polygon and masking tools can be used for semantic segmentation.
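
The three tasks above call for labels of different shapes, which is why the problem needs to be identified before annotation starts. The records below are an assumed, simplified layout for illustration; the field names and file name are hypothetical and do not follow any particular tool's export format.

```python
from typing import Any, Dict

# Image classification: one class label per image.
classification_label: Dict[str, Any] = {"image": "street_001.jpg", "label": "car"}

# Object detection: one box (x_min, y_min, x_max, y_max) and label per object.
detection_label: Dict[str, Any] = {
    "image": "street_001.jpg",
    "objects": [
        {"label": "car", "bbox": [34, 50, 120, 90]},
        {"label": "person", "bbox": [140, 40, 160, 95]},
    ],
}

# Semantic segmentation: one class ID per pixel (a tiny 2x3 mask here).
segmentation_label: Dict[str, Any] = {
    "image": "street_001.jpg",
    "classes": {0: "road", 1: "car"},
    "mask": [[0, 1, 1], [0, 0, 1]],
}


def task_of(label: Dict[str, Any]) -> str:
    """Guess which task a label record belongs to from the fields it carries."""
    if "mask" in label:
        return "semantic segmentation"
    if "objects" in label:
        return "object detection"
    return "image classification"


for record in (classification_label, detection_label, segmentation_label):
    print(task_of(record))
```

The amount of information per image grows from one word, to a handful of boxes, to a value for every pixel, and annotation effort grows with it.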

Step 3: Data Annotation for Machine Learning

After identifying the problem, you can label the data accordingly. For a classification task, the labels are the keywords used while finding and crawling the data. For a segmentation task, there should be a label for each pixel of the image. Once you have the labels, you need tools to perform image annotation (i.e. to set labels and metadata for images). Popular annotation tools include Comma Coloring, Annotorious, and LabelMe.

However, this approach is manual and time-consuming. A faster alternative is to use algorithms like Polygon-RNN++ or Deep Extreme Cut. Polygon-RNN++ takes the object in the image as input and outputs polygon points surrounding the object to create segments, making it more convenient to label. The working principle of Deep Extreme Cut is similar to Polygon-RNN++, but it takes four extreme points on the object (left-most, right-most, top, and bottom) as input and produces a segmentation mask.

Process of data annotation for machine learning

It is also possible to use the “Transfer Learning” method to label data, by using models pre-trained on large-scale datasets such as ImageNet and Open Images. Since the pre-trained models have learned many features from millions of different images, their accuracy is fairly high. Based on these models, you can find and label each object in the image. It should be noted that the data these models were pre-trained on must be similar to the collected dataset for feature extraction or fine-tuning to work well.

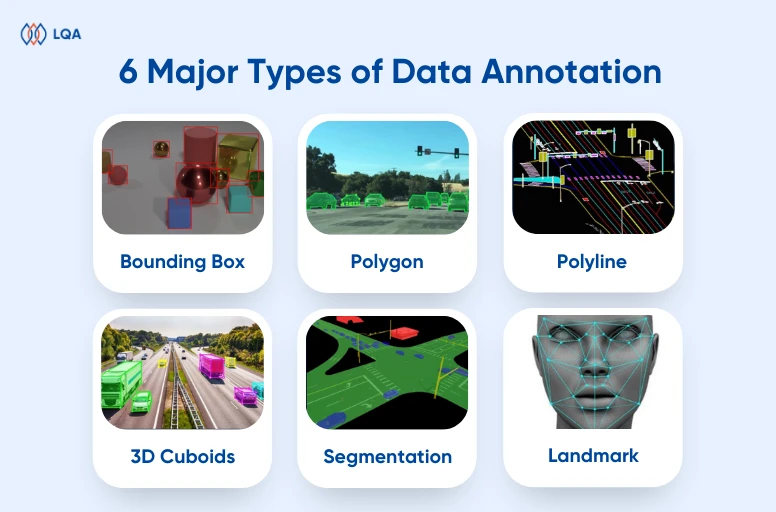

Types of Annotation Data

Data Annotation for machine learning is the process of labeling the training data sets, which can be images, videos, or audio. Needless to say, AI Annotation is of paramount importance to Machine Learning (ML), as ML algorithms need (quality) annotated data to process.

In our AI training projects, we use different types of annotation. Choosing what type(s) to use mainly depends on what kind of data and annotation tools you are working on.

- Bounding Box: As you can guess, the target object is framed by a rectangular box. Data labeled with bounding boxes is used in various industries, mostly automotive, security, and e-commerce.

- Polygon: For irregular shapes such as human bodies, logos, or street signs, polygons give a more precise result. The boundaries drawn around the objects convey their exact shape and size, which helps the model make better predictions.

- Polyline: Polylines address a weakness of bounding boxes, which usually contain unnecessary space. They are mainly used to annotate lanes in road images.

- 3D Cuboids: 3D cuboids are used to measure the volume of objects such as vehicles, buildings, or furniture.

- Segmentation: Segmentation is similar to polygon annotation but more involved. While polygons pick out only some objects of interest, segmentation labels groups of similar objects until every pixel of the picture is labeled, which leads to better detection results.

- Landmark: Landmark annotation comes in handy for facial and emotion recognition, human pose estimation, and body detection. Applications that use landmark-labeled data can indicate the density of the target object within a specific scene.
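To see why a polygon can describe an irregular shape more precisely than a bounding box, here is a small sketch in plain Python. The `BoundingBox` and `Polygon` classes and the diamond-shaped "street sign" are hypothetical and not tied to any annotation tool's format; the area is computed with the standard shoelace formula.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


@dataclass
class Polygon:
    label: str
    points: list  # [(x, y), ...] vertices in drawing order

    def area(self):
        """Area of a simple polygon via the shoelace formula."""
        total = 0.0
        n = len(self.points)
        for i in range(n):
            x1, y1 = self.points[i]
            x2, y2 = self.points[(i + 1) % n]
            total += x1 * y2 - x2 * y1
        return abs(total) / 2.0

    def to_bounding_box(self):
        """Smallest axis-aligned box that contains every vertex."""
        xs = [x for x, _ in self.points]
        ys = [y for _, y in self.points]
        return BoundingBox(self.label, min(xs), min(ys), max(xs), max(ys))


# A diamond-shaped sign (hypothetical coordinates, in pixels).
sign = Polygon("street_sign", [(10, 0), (20, 10), (10, 20), (0, 10)])
box = sign.to_bounding_box()
box_area = (box.x_max - box.x_min) * (box.y_max - box.y_min)
print(sign.area(), box_area)
```

In this toy case the polygon covers only half of its bounding box, so the other half of the box is the "unnecessary space" mentioned above.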

Types of data annotation for machine learning

Popular Tools of Data Annotation for Machine Learning

In machine learning, data processing and analysis are extremely important, so here are some tools for annotating data that make the job simpler:

- Labelbox: Labelbox is a widely used platform that supports various data types, such as images, text, and videos. It offers a user-friendly interface, project management features, collaboration tools, and integration with machine learning pipelines.

- Amazon SageMaker Ground Truth: Provided by Amazon Web Services, SageMaker Ground Truth combines human annotation and automated labeling using machine learning. It’s suitable for a range of data types and can be seamlessly integrated into AWS workflows.

- Supervisely: Supervisely focuses on computer vision tasks like object detection and image segmentation. It offers pre-built labeling interfaces, collaboration features, and integration with popular deep-learning frameworks.

- VGG Image Annotator (VIA): Developed by the University of Oxford’s Visual Geometry Group, VIA is an open-source tool for image annotation. It’s commonly used for object detection and annotation tasks and supports various annotation types.

- CVAT (Computer Vision Annotation Tool): CVAT is another popular open-source tool, specifically designed for annotating images and videos in the context of computer vision tasks. It provides a collaborative platform for creating bounding boxes, polygons, and more.

Popular data annotation tools

When selecting a data annotation tool for machine learning, consider factors like the type of data you’re working with, the complexity of annotation tasks, collaboration requirements, integration with your machine learning workflow, and budget constraints. It’s also a good idea to try out a few tools to determine which one best suits your specific needs.

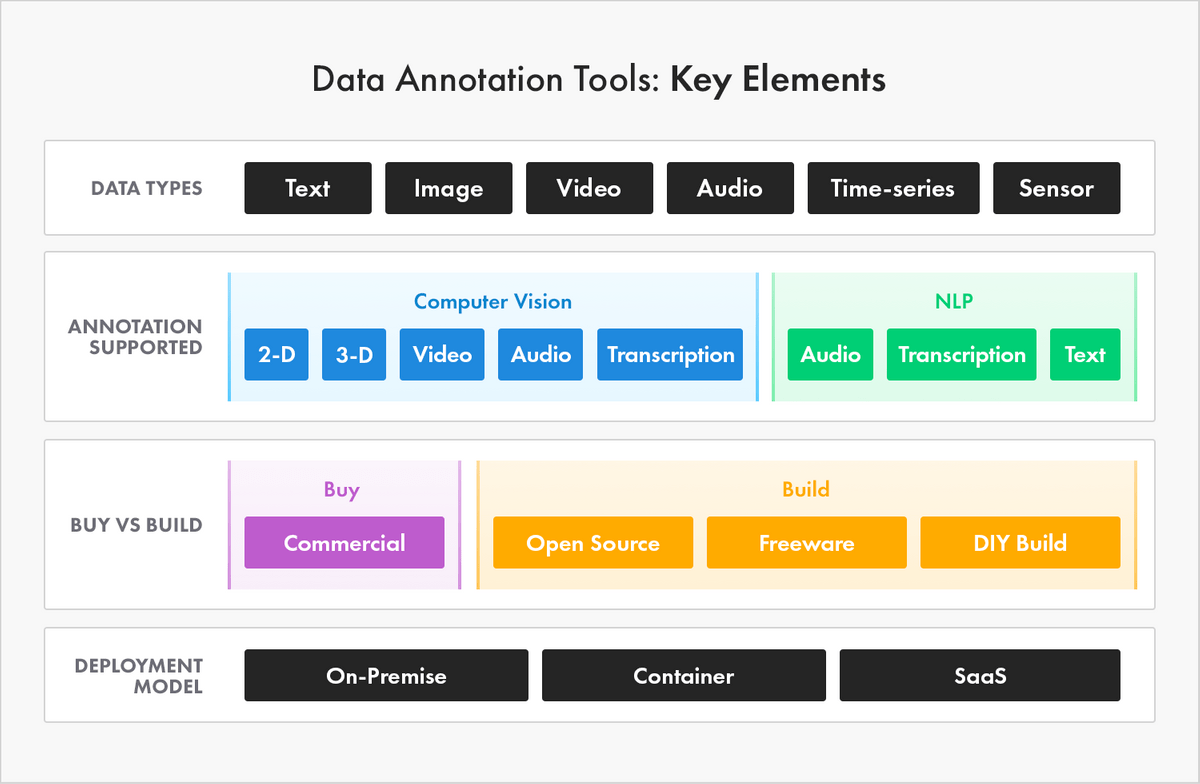

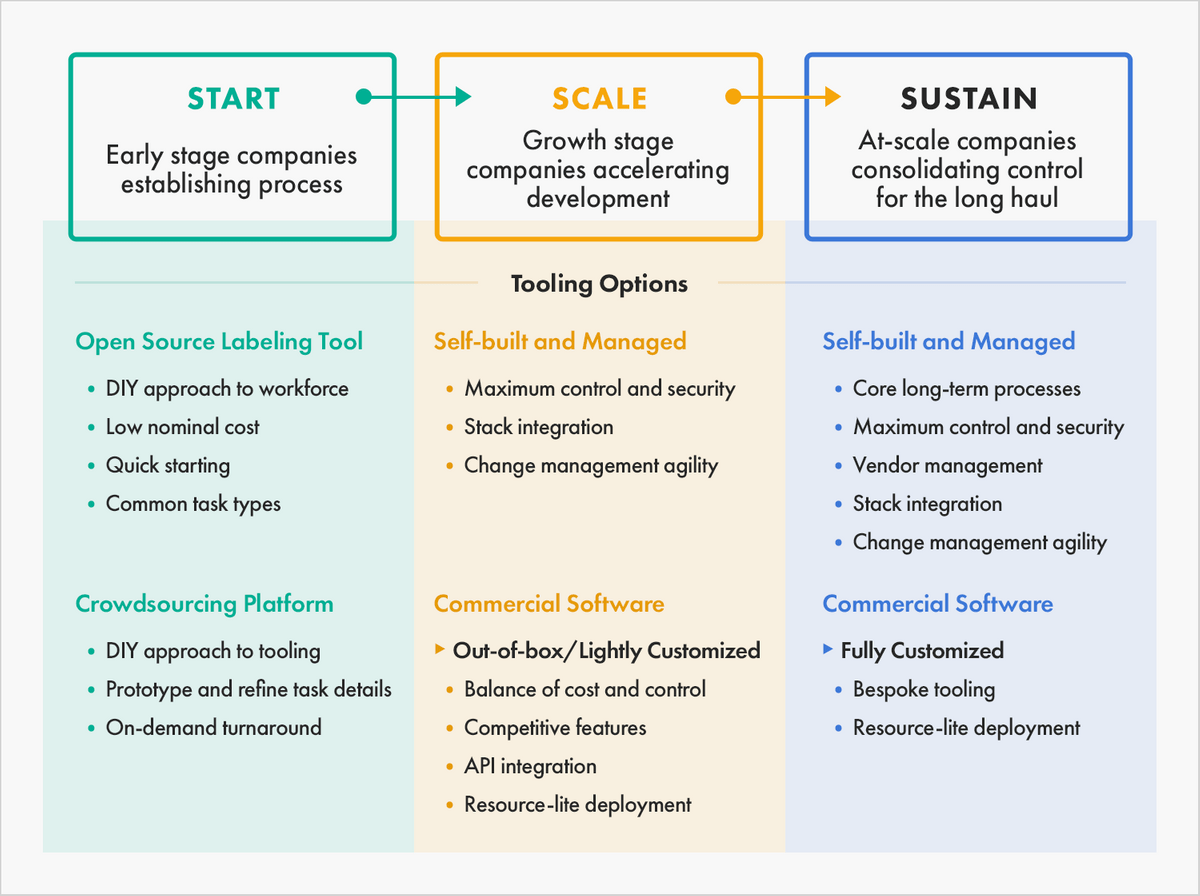

It is crucial for businesses to consider the top 5 annotation tool features to find the most suitable one for their products: dataset management, annotation methods, data quality control, workforce management, and security.

Who can annotate data?

The data annotators are the ones in charge of labeling the data. There are some ways to allocate them:

In-house Annotating Data

In this setup, the data scientists and AI researchers on your team label the data. The advantages are that the work is easy to manage and the accuracy rate is high. However, it is a waste of human resources, since data scientists have to spend much time and effort on a manual, repetitive task.

In fact, many AI projects have failed and been shut down, due to the poor quality of training data and inefficient management.

To ensure data labeling quality, you can check out our comprehensive Data annotation best practices. This guide walks through the steps of a data annotation project and explains how to manage it successfully and effectively:

- Defining and planning the annotation project

- Managing timelines

- Creating guidelines and training workforce

- Feedback and changes

Outsourced AI Annotations Data

You can find a third party – a company that provides data annotation services. Although this option will cost less time and effort for your team, you need to ensure that the company commits to providing transparent and accurate data.

Online Workforce Resources for Data Annotation

Alternatively, you can use online workforce resources like Amazon Mechanical Turk or Crowdflower. These platforms recruit online workers around the world to do data annotation. However, the accuracy and the organization of the dataset are the issues that you need to consider when purchasing this service.
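One common way to handle the accuracy concern with crowd workers is to collect several votes per item and keep only labels that enough workers agree on. The sketch below is an assumption on our part, not something these platforms prescribe; the `aggregate_votes` helper, the 0.6 agreement threshold, and the sample votes are all hypothetical.

```python
from collections import Counter


def aggregate_votes(votes, min_agreement=0.6):
    """Majority vote over crowd labels for one item.

    Returns (label, agreement). The label is None when the share of
    workers behind the most frequent label is below min_agreement.
    """
    counts = Counter(votes)
    label, top = counts.most_common(1)[0]
    agreement = top / len(votes)
    if agreement < min_agreement:
        return None, agreement
    return label, agreement


# Hypothetical votes from three workers per image.
crowd_labels = {
    "img_001.jpg": ["cat", "cat", "dog"],
    "img_002.jpg": ["dog", "cat", "bird"],
}
results = {item: aggregate_votes(votes) for item, votes in crowd_labels.items()}
for item, (label, agreement) in results.items():
    print(item, label, round(agreement, 2))
```

Items that come back with no label (here `img_002.jpg`, where all three workers disagree) can be sent for another round of votes or to an in-house reviewer.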

The Bottom Line

The data annotation for machine learning guide described here is basic and straightforward. To build machine learning systems, besides the data scientists who set up the infrastructure and scale complex machine learning tasks, you still need data annotators to label the input data. Lotus Quality Assurance provides professional data annotation services in different domains. With our quality review process, we commit to delivering a high-quality and secure service. Contact us for further support!

Our Clients Also Ask

What is data annotation in machine learning?

Data annotation in machine learning refers to the process of labeling or tagging data to create a labeled dataset. Labeled data is essential for training supervised machine learning models, where the algorithm learns patterns and relationships in the data to make predictions or classifications.

How many types of data annotation are there for machine learning?

Data Annotation for machine learning is the procedure of labeling the training data sets, which can be images, videos, or audio. In our AI training projects, we utilize diverse types of data annotation. Here are the most popular types: Bounding Box, Polygon, Polyline, 3D Cuboids, Segmentation, and Landmark.

What are the most popular data annotation tools?

Here are some popular tools for annotating data: Labelbox, Amazon SageMaker Ground Truth, CVAT (Computer Vision Annotation Tool), VGG Image Annotator (VIA), Annotator: ALOI Annotation Tool, Supervisely, LabelMe, Prodigy, etc.

What is a data annotator?

A data annotator is a person who adds labels or annotations to data, creating labeled datasets for training machine learning models. They follow guidelines to accurately label images, text, or other data types, helping models learn patterns and make accurate predictions.

Data Annotation's Role in Machine Learning: An Overview

Data annotation is a fundamental component of machine learning, playing a vital role in training models and enabling them to understand and interpret data accurately. By providing labeled data, annotations serve as the ground truth that guides machine learning algorithms in learning patterns and making accurate predictions. Various types of data annotation, including image annotation, text annotation, video annotation, and audio annotation, are utilized across different domains to enhance the performance and reliability of machine learning models.

To facilitate the annotation process, there are data labeling services and data annotation tools available, offering efficient solutions for accurately labeling and categorizing data. These services and tools streamline the annotation workflow, ensuring the quality and accuracy of annotations, and ultimately improving the performance of machine learning models.

Key Takeaways:

- Data annotation is crucial in machine learning for training models and making accurate predictions.

- Image annotation, text annotation, video annotation, and audio annotation are different types of data annotation techniques.

- Data labeling services and annotation tools exist to facilitate the annotation process.

- The quality and accuracy of annotations directly impact the performance and reliability of machine learning models.

- Data annotation is essential for various applications, including object recognition, sentiment analysis, and speech recognition.

What is Annotation in Machine Learning?

Annotation in machine learning refers to the process of labeling data to provide meaningful information to algorithms. It involves adding annotations or tags to data points so that algorithms can pick up that information more easily. Annotations can take different forms depending on the type of data being annotated, such as image annotation, text annotation, video annotation, and audio annotation. The effectiveness of annotations lies in their ability to provide context and structure to the data, enabling the algorithms to extract meaningful knowledge from the labeled examples.

Annotations play a crucial role in training machine learning models by providing a reference or ground truth that algorithms can learn from. By labeling data accurately, annotations aid in teaching models to recognize patterns, classify information, or perform specific tasks.

For example, in image annotation, objects, regions, or specific features within an image are labeled to train computer vision models. Text annotation involves labeling and categorizing textual data, contributing to natural language processing tasks like named entity recognition and sentiment analysis. In video annotation, objects or actions within video sequences are labeled, facilitating video analysis in applications such as surveillance and autonomous technology. Audio annotation encompasses labeling and transcribing audio data to enhance speaker identification, speech emotion recognition, and audio transcription tasks.

Each type of annotation technique contributes to the development and improvement of machine learning models in their respective domains. The labeled data creates a foundation that enables the models to interpret and understand the input they receive, resulting in more accurate and reliable predictions or outputs.

By leveraging the power of annotation in machine learning, organizations can unlock the potential of their data and build advanced models that can automate processes, make informed decisions, and drive innovation across various industries.

Benefits of Annotation in Machine Learning

- Annotations provide context and structure to the data, enabling algorithms to extract meaningful knowledge from labeled examples.

- Annotated data serves as the ground truth for training machine learning models, facilitating pattern recognition and accurate predictions.

- By leveraging annotations, organizations can build advanced models that automate processes and make informed decisions.

- Annotation enhances the accuracy and reliability of machine learning models in various domains, from computer vision to natural language processing.

Types of Data Annotation: Image Annotation

Image annotation is a critical technique for training computer vision models to accurately recognize and classify objects within images. By labeling objects, regions, or specific features, image annotation provides valuable information to algorithms, enabling them to understand the presence and identity of objects. This enhances the accuracy and performance of computer vision algorithms, particularly for applications such as object recognition and image classification.

Several image annotation techniques are employed to annotate images effectively:

- Bounding boxes: This technique involves drawing rectangular boxes around objects or regions of interest within an image, clearly indicating their boundaries.

- Polygons: In polygon annotation, the contours of objects are outlined using a series of connected vertices, allowing for more precise labeling.

- Key points: Key point annotation involves marking specific points of interest, such as the corners of objects or the joints of a skeleton, to denote significant features for recognition.

- Semantic segmentation: This technique assigns semantic labels to pixels or regions within an image, enabling algorithms to differentiate objects and understand their boundaries.

Image annotation techniques, such as bounding boxes and semantic segmentation, play a crucial role in object recognition and image classification, enabling machines to accurately identify and classify objects within images.
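These techniques are related: a pixel-level mask carries enough information to derive a coarser annotation such as a bounding box. The following is a minimal sketch in plain Python; the `mask_to_bounding_box` helper and the small 4x5 mask are hypothetical, with class id 1 standing in for the object of interest.

```python
def mask_to_bounding_box(mask, class_id):
    """Return (x_min, y_min, x_max, y_max) covering all pixels of class_id.

    Coordinates are pixel indices (inclusive). Returns None if the class
    does not appear in the mask.
    """
    xs, ys = [], []
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value == class_id:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (min(xs), min(ys), max(xs), max(ys))


# Hypothetical semantic mask: 0 = background, 1 = car.
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
car_box = mask_to_bounding_box(mask, class_id=1)
print(car_box)
```

The reverse is not possible: a bounding box alone cannot tell you which pixels inside it belong to the object, which is why segmentation is the more expensive annotation to produce.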

Types of Data Annotation: Text Annotation

Text annotation is a crucial process in machine learning, involving the labeling and categorizing of textual data, such as documents, articles, or sentences. It plays a significant role in natural language processing tasks, enabling machines to understand and interpret the text accurately.

One of the primary applications of text annotation is named entity recognition, which involves identifying and classifying named entities within the text, such as people, organizations, locations, and dates. Sentiment analysis is another important task in which text annotation is utilized. It involves determining the sentiment or emotion expressed in the text, enabling sentiment classification for various purposes, such as customer feedback analysis or social media monitoring.

Part-of-speech tagging is yet another essential task enabled by text annotation. It involves assigning grammatical information, such as noun, verb, adjective, or adverb, to each word in the text. This provides valuable context and structure to the language, enabling machines to analyze and process the text effectively.

"Text annotation techniques provide valuable context and meaning to words within the text, enabling machines to understand and interpret the text accurately."

Text annotation plays a crucial role in various applications, including information extraction, text categorization, and question answering. By labeling and categorizing textual data, machine learning models can extract relevant information, classify texts into different categories, and provide accurate answers to user queries.

Overall, text annotation empowers machines to make sense of textual data, enabling them to perform a wide range of natural language processing tasks effectively. It enhances the understanding and interpretation of textual information, contributing to the development of advanced machine learning models in various domains.

Example of Text Annotation Workflow

Understanding the process of text annotation can provide further insights into its significance and impact. Here's an example of a typical text annotation workflow:

- Annotators receive a set of documents or sentences to be annotated.

- They read and analyze the text, identifying relevant entities, sentiments, and parts of speech.

- Using annotation tools, annotators label and categorize the identified entities, sentiments, and parts of speech.

- The annotated data is then used to train machine learning models, allowing them to learn patterns and make accurate predictions in various natural language processing tasks.

Through this workflow, text annotation facilitates the development of robust and reliable machine learning models that can effectively process and analyze textual data.
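As a rough illustration of the labeling step in this workflow, the sketch below converts entity annotations stored as character spans into per-token tags using the BIO convention (B = beginning of an entity, I = inside, O = outside). BIO is one common scheme and is our assumption here, not something the workflow requires; the sentence, the spans, and the `spans_to_bio` helper are made up for the example.

```python
import re


def spans_to_bio(text, spans):
    """Convert character-level entity spans to token-level BIO tags.

    spans: list of (start, end, label) with end exclusive.
    Tokens are split on whitespace; a token is tagged only if it lies
    fully inside a span.
    """
    tagged = []
    for match in re.finditer(r"\S+", text):
        tag = "O"
        for start, end, label in spans:
            if match.start() >= start and match.end() <= end:
                prefix = "B" if match.start() == start else "I"
                tag = f"{prefix}-{label}"
                break
        tagged.append((match.group(), tag))
    return tagged


# Hypothetical sentence and annotator-provided spans.
text = "Alice Smith joined Acme in 2021"
spans = [(0, 11, "PERSON"), (19, 23, "ORG"), (27, 31, "DATE")]
bio = spans_to_bio(text, spans)
for token, tag in bio:
    print(f"{token}\t{tag}")
```

Whitespace tokenization keeps the sketch short; a real project would use the same tokenizer as the model it trains, so that tags and tokens line up.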

Types of Data Annotation: Video Annotation

Video annotation plays a critical role in enabling computer vision models to analyze and understand video content. This process involves labeling objects or actions within video sequences, allowing machines to track objects, classify actions, and identify specific time intervals or events. Video annotation techniques such as object tracking, action recognition, and temporal annotation enhance the capabilities of computer vision algorithms, facilitating applications such as surveillance, autonomous technology, and activity recognition.

Object tracking is a video annotation technique that focuses on following and tracing specific objects or targets throughout a video. By tracking objects, computer vision models can understand the movement and behavior of those objects within the video, improving object recognition and scene understanding.
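Labeling an object in every single frame is expensive, so one common shortcut (assumed here, not taken from the text) is to annotate a box on a few keyframes and interpolate the frames in between. Here is a minimal sketch with linear interpolation; the `interpolate_box` helper and the coordinates are hypothetical.

```python
def interpolate_box(key_a, key_b, frame):
    """Linearly interpolate a box between two annotated keyframes.

    key_a, key_b: (frame_index, (x_min, y_min, x_max, y_max)),
    with key_a earlier than key_b.
    """
    frame_a, box_a = key_a
    frame_b, box_b = key_b
    if frame_b <= frame_a:
        raise ValueError("key_b must come after key_a")
    if not frame_a <= frame <= frame_b:
        raise ValueError("frame must lie between the two keyframes")
    t = (frame - frame_a) / (frame_b - frame_a)
    return tuple(a + t * (b - a) for a, b in zip(box_a, box_b))


# An object moving 50 pixels to the right over 10 frames.
start = (0, (100, 50, 140, 90))
end = (10, (150, 50, 190, 90))
track = {f: interpolate_box(start, end, f) for f in range(0, 11)}
print(track[5])
```

Linear interpolation only holds up when the object moves steadily between keyframes, so an annotator would still review the generated boxes and add keyframes where the motion changes.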

Action recognition is another video annotation technique that involves labeling and categorizing different actions or activities performed within a video. This annotation enables machines to recognize and distinguish various actions, empowering applications like human activity analysis, sports video analysis, and video surveillance.

Temporal annotation is the process of marking specific time intervals or events within a video. It helps in identifying crucial moments or incidents that are significant for video analysis. Temporal annotation plays a vital role in applications like event detection, video summarization, and video search, enabling machines to pinpoint and extract relevant information.
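A temporal annotation can be stored as a list of time intervals and expanded into one label per frame when a model needs frame-level targets. The sketch below shows one way to do that; the `intervals_to_frame_labels` helper, the frame rate, and the event names are hypothetical.

```python
def intervals_to_frame_labels(intervals, num_frames, fps, default="none"):
    """Expand (start_sec, end_sec, label) intervals into per-frame labels.

    Each interval covers frames from start (inclusive) to end (exclusive).
    Frames not covered by any interval keep the default label.
    """
    labels = [default] * num_frames
    for start_sec, end_sec, label in intervals:
        first = int(round(start_sec * fps))
        last = min(int(round(end_sec * fps)), num_frames)
        for frame in range(first, last):
            labels[frame] = label
    return labels


# Two annotated events in a 2-second clip sampled at 4 frames per second.
events = [(0.0, 0.5, "walking"), (0.5, 1.0, "running")]
frame_labels = intervals_to_frame_labels(events, num_frames=8, fps=4)
print(frame_labels)
```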

Example: Video Annotation for Autonomous Driving

An illustrative example of video annotation is its application in autonomous driving. By annotating objects, actions, and temporal information within video footage, computer vision models can identify other vehicles, pedestrians, traffic signs, and road markings. This annotated data serves as a training ground truth for autonomous vehicles to make informed decisions and navigate safely on the roads. The accuracy and reliability of video annotation heavily influence the performance and safety of autonomous driving systems.

Types of Data Annotation: Audio Annotation

Audio annotation plays a crucial role in machine learning by labeling and transcribing audio data, enabling the extraction of valuable insights and patterns. This type of annotation is widely used in tasks such as speaker identification, speech emotion recognition, and audio transcription. Through various techniques like phonetic annotation, speaker diarization, and event labeling, audio annotation enhances the performance and accuracy of machine learning models in applications related to audio data.

Speaker Identification

Speaker identification is a key task in audio annotation, where machine learning models are trained to recognize and distinguish different speakers in audio recordings. By labeling the speakers and their corresponding segments in the data, models can accurately identify and differentiate speakers, enabling applications such as voice biometrics and speaker recognition systems.
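In practice, "labeling the speakers and their corresponding segments" usually means storing a start time, an end time, and a speaker id for each stretch of speech. The following sketch uses a hypothetical segment format (not any specific tool's schema) and sums the speaking time per speaker as a quick sanity check on the annotations.

```python
from collections import defaultdict

# Hypothetical annotation of a 12-second recording (times in seconds).
segments = [
    {"start": 0.0, "end": 4.5, "speaker": "speaker_A"},
    {"start": 4.5, "end": 7.0, "speaker": "speaker_B"},
    {"start": 7.0, "end": 12.0, "speaker": "speaker_A"},
]


def speaking_time(segments):
    """Total annotated duration per speaker, in seconds."""
    totals = defaultdict(float)
    for seg in segments:
        if seg["end"] <= seg["start"]:
            raise ValueError(f"segment has non-positive duration: {seg}")
        totals[seg["speaker"]] += seg["end"] - seg["start"]
    return dict(totals)


totals = speaking_time(segments)
print(totals)
```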

Speech Emotion Recognition

Speech emotion recognition involves annotating audio data to identify and categorize the emotions expressed in spoken language. By labeling emotions such as happiness, sadness, anger, or surprise, machine learning models can accurately classify and interpret the emotional states of speakers. This enables applications in sentiment analysis, voice-based virtual assistants, and emotional speech recognition.

Transcription

Transcription involves the process of converting audio data into written text. Through audio annotation techniques like phonetic annotation and automatic speech recognition, machine learning models can transcribe spoken language accurately. Transcription is essential in various domains, including media and entertainment, customer support, and accessibility for the hearing impaired.

Audio annotation is a powerful tool for unlocking insights and understanding in audio data. By providing labeled information through techniques such as speaker identification, speech emotion recognition, and transcription, machine learning models can effectively analyze and interpret audio, creating valuable applications and solutions across industries.

Techniques of Audio Annotation

Why does annotation in machine learning matter?

Annotation plays a crucial role in machine learning as it provides labeled data that serves as the ground truth for training models. Without accurate and properly annotated data, machine learning algorithms would struggle to learn patterns and make meaningful predictions.

Annotation enables models to make informed decisions and predictions based on their training, even when faced with previously unseen data.

The quality and accuracy of annotations directly impact the performance and reliability of machine learning models, making annotation an essential component of the machine learning pipeline.

Key Challenges of Data Annotation in Machine Learning

Data annotation in machine learning presents several challenges that need to be addressed for successful implementation. These challenges include:

- Annotation Quality: High-quality annotations are crucial for training accurate and reliable machine learning models. Ensuring consistent, accurate, and detailed annotations that capture relevant information is essential for achieving optimal results.

- Scalability: The exponential growth of data poses a significant challenge in managing and annotating large volumes of data efficiently. Scaling annotation processes to handle increasingly large datasets while maintaining quality and accuracy is critical.

- Subjectivity: Data annotation can involve subjective judgments, such as determining the boundaries of objects or identifying sentiment. Managing subjectivity requires clear guidelines and continuous communication among annotators to maintain consistency and minimize bias.

- Consistency: Achieving consistent annotations across different annotators is crucial to ensure reliable training data. Consistency ensures that models can generalize effectively and make accurate predictions on unseen data.

- Privacy and Security: Data annotation often involves sensitive information, such as personally identifiable information (PII). Ensuring the privacy and security of annotated data by implementing robust data protection measures is essential to maintain trust and compliance.

Addressing these challenges is vital for obtaining high-quality annotated datasets that enable the development of accurate and reliable machine learning models.
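Consistency between annotators can be measured rather than guessed. One common measure is Cohen's kappa, which compares the observed agreement between two annotators with the agreement expected by chance. It is our choice for this sketch, not something the list above prescribes, and the two label lists are made up.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set")
    n = len(labels_a)
    # Share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected if each annotator labeled at random
    # according to their own label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical sentiment labels from two annotators on eight reviews.
annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg"]
annotator_2 = ["pos", "pos", "neg", "pos", "pos", "neg", "neg", "neg"]
kappa = cohen_kappa(annotator_1, annotator_2)
print(round(kappa, 2))
```

A kappa of 1 means perfect agreement and 0 means agreement no better than chance. A low value on a pilot batch is usually a sign that the guidelines need to be clarified before annotation is scaled up.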

"High-quality annotations are crucial for providing reliable training data for machine learning models."

Use Cases of Data Annotation in Machine Learning

Data annotation is a critical component in machine learning with various applications across different domains. Let's explore some common use cases where data annotation plays a vital role:

Medical Imaging

In the field of medical imaging, data annotation is crucial for accurate disease diagnosis and treatment planning. Annotated medical images assist healthcare professionals in identifying and analyzing abnormalities, enabling timely and effective interventions.

Autonomous Vehicles

Data annotation is essential for training autonomous vehicles to understand and interact with their environment. Annotated data helps in object recognition, allowing autonomous vehicles to accurately identify pedestrians, traffic signs, and other vehicles. It also contributes to road scene understanding, enabling autonomous vehicles to navigate safely and make informed decisions on the road.

Ecommerce

Ecommerce platforms heavily rely on data annotation to enhance user experience and drive sales. Annotated product images and descriptions are used for recommendation systems, providing personalized product suggestions to customers based on their preferences and purchase history.

Sentiment Analysis

Sentiment analysis, which involves determining the sentiment or opinion expressed in customer reviews or social media posts, benefits greatly from data annotation. Annotated customer reviews serve as training data for sentiment classification models, enabling businesses to extract valuable insights and make data-driven decisions to improve their products and services.

Virtual Assistants

Data annotation is instrumental in training virtual assistants to accurately interpret and respond to user commands. Annotated voice recordings are used for speech recognition and natural language understanding, enabling virtual assistants to understand user queries and provide relevant and context-aware responses.

These are just a few examples of how data annotation is applied in machine learning to improve the performance and reliability of models across various domains. The precise and accurate labeling provided by data annotation enables machines to learn and make more informed decisions, ultimately enhancing the overall user experience and driving innovation.

Human vs. Machine in Data Annotation

When it comes to data annotation, there is an ongoing debate about the roles of humans and machines. Both have their advantages and limitations, and finding the right balance is crucial for accurate and meaningful annotations.

Machine automation in data annotation has the potential to streamline the process by leveraging algorithms to label data automatically. This approach is efficient, fast, and scalable, allowing large volumes of data to be annotated quickly.

However, human expertise in data annotation cannot be replaced. Human annotators bring a level of understanding, intuition, and domain-specific knowledge that machines currently lack. They are able to interpret complex contexts, understand nuances, and make subjective decisions that machines find challenging.

Human annotators play a vital role in creating ground truth datasets, ensuring the accuracy and quality of annotations. Their involvement improves the performance and reliability of machine learning models trained on annotated data. With their expertise, human annotators can provide valuable insights, identify edge cases, and handle ambiguous situations that machines struggle with.

"Human annotators bring a deeper understanding of intent, context, and domain-specific knowledge to the annotation process."

Additionally, human involvement can help address biases and inconsistencies in data annotation. By carefully selecting and training human annotators, organizations can ensure a high level of accuracy and reliability in annotations.

Although machine automation offers advantages in terms of speed and scalability, it is essential to strike a balance between human expertise and machine automation in data annotation. By combining the strengths of humans and machines, organizations can maximize the efficiency, accuracy, and effectiveness of the annotation process.

The Benefits of Human Expertise in Annotation

Human expertise in data annotation brings several key benefits:

- Deep understanding of intent and context: Human annotators can interpret complex data, understand subtle nuances, and accurately label data based on the intended meaning.

- Domain-specific knowledge: Human annotators bring domain-specific knowledge and subject matter expertise, enabling them to make informed decisions and handle industry-specific annotation tasks.

- Handling ambiguity and edge cases: Human annotators excel at handling ambiguous situations and edge cases that can arise in data annotation. They can make judgment calls and provide valuable insights.

- Quality control and feedback loop: Human annotators can actively participate in the quality control process by reviewing and providing feedback on automated annotations. This iterative feedback loop helps improve the accuracy and reliability of the annotation process over time.

The Role of Machine Automation in Annotation

Machine automation in data annotation offers several advantages:

- Efficiency and scalability: Machines can annotate large volumes of data quickly and efficiently, enabling organizations to process and label massive datasets with ease.

- Consistency: Machines can provide consistent annotations, reducing human error and ensuring uniformity in labeling.

- Speed: Automated annotation tools can expedite the annotation process, enabling organizations to save time and resources.

- Cost-effectiveness: Machine automation can reduce the cost of annotation by minimizing the need for extensive human labor.

However, it is important to note that machine automation has limitations, particularly in cases that require subjective analysis, contextual understanding, or handling complex semantics. In such cases, human expertise remains crucial.

Incorporating Human and Machine Collaboration

To achieve the best results, organizations should aim for a collaborative approach that leverages the strengths of both humans and machines. This can include:

- Using human annotators to curate high-quality annotated datasets that serve as the ground truth for model training.

- Employing automated tools and algorithms to assist human annotators in the annotation process, speeding up certain tasks and enhancing efficiency.

- Implementing a feedback loop between human annotators and automated systems to continuously improve and refine annotations.

- Regularly reviewing and auditing the annotations to maintain quality and consistency.

By combining the power of human expertise with machine automation, organizations can achieve accurate, reliable, and scalable data annotation, ultimately enhancing the performance and reliability of machine learning models.
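One simple way to combine the two is to let a model pre-label the data and send only its uncertain predictions to human annotators. The sketch below assumes the model reports a confidence score per item; the `route_predictions` helper, the 0.9 threshold, and the sample predictions are hypothetical.

```python
def route_predictions(predictions, threshold=0.9):
    """Split model pre-labels into auto-accepted and human-review queues."""
    auto_accepted, needs_review = [], []
    for item in predictions:
        if item["confidence"] >= threshold:
            auto_accepted.append(item)
        else:
            needs_review.append(item)
    return auto_accepted, needs_review


# Hypothetical pre-labels produced by an automated system.
predictions = [
    {"id": "img_001", "label": "car", "confidence": 0.97},
    {"id": "img_002", "label": "truck", "confidence": 0.62},
    {"id": "img_003", "label": "pedestrian", "confidence": 0.91},
]
accepted, review = route_predictions(predictions)
print([p["id"] for p in accepted], [p["id"] for p in review])
```

The threshold is a trade-off: raising it sends more items to humans and costs more, while lowering it lets more machine errors through. Auditing a sample of the auto-accepted items, as suggested in the list above, is one way to check whether the threshold is set sensibly.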

Data annotation plays a pivotal role in machine learning, enabling models to learn from labeled examples and make accurate predictions. The importance of annotation in machine learning cannot be overstated, as it provides the necessary ground truth data for training models. Different annotation techniques, such as image annotation, text annotation, video annotation, and audio annotation, are used to train machine learning models in various domains.

The challenges associated with data annotation, including annotation quality, scalability, subjectivity, consistency, and privacy and security, must be addressed to ensure the success of data annotation projects. High-quality annotations are crucial for generating reliable training data and improving the performance and reliability of machine learning models.

By leveraging both human expertise and machine automation, organizations can generate high-quality labeled datasets and develop accurate and reliable machine learning models. Human annotators provide domain-specific knowledge and expertise, ensuring the accuracy and meaning of annotations. At the same time, machine automation can help streamline the annotation process and improve scalability.

As the field of machine learning continues to advance, the importance of data annotation will only grow. The quality and accuracy of annotations directly impact the performance and reliability of machine learning models, making annotation an essential component of the machine learning pipeline.

Whether it's image annotation for object recognition, text annotation for sentiment analysis, video annotation for action recognition, or audio annotation for speaker identification, data annotation plays a crucial role in enabling machines to understand and interpret various types of data.

With the proper implementation of data annotation, organizations can unlock the full potential of machine learning and harness its power to drive innovation and solve complex problems across a wide range of industries.

What is data annotation in machine learning?

Data annotation in machine learning refers to the process of labeling data to provide meaningful information to algorithms. It involves adding annotations or tags to data points so that algorithms can pick up that information more easily.

What are the different types of data annotation?

The different types of data annotation include image annotation, text annotation, video annotation, and audio annotation.

What is image annotation?

Image annotation involves labeling objects, regions, or specific features within an image. It helps in training computer vision models to recognize and classify objects accurately.

What is text annotation?

Text annotation involves labeling and categorizing textual data, such as documents, articles, or sentences. It is commonly used in natural language processing tasks like named entity recognition, sentiment analysis, and part-of-speech tagging.

What is video annotation?

Video annotation is the process of labeling objects or actions within video sequences. It enables computer vision models to analyze and understand video content.

What is audio annotation?

Audio annotation encompasses the process of labeling and transcribing audio data. It plays a crucial role in tasks such as speaker identification, speech emotion recognition, and audio transcription.

Why is annotation important in machine learning?

Annotation is important in machine learning because it provides labeled data that serves as the ground truth for training models. Without accurate and properly annotated data, machine learning algorithms would struggle to learn patterns and make meaningful predictions.

What are the key challenges of data annotation?

The key challenges of data annotation include annotation quality, scalability, subjectivity, consistency, and privacy and security.

What are some use cases of data annotation?

Some common use cases of data annotation include medical imaging, autonomous vehicles, ecommerce, sentiment analysis, and virtual assistants.

What is the role of humans vs. machines in data annotation?

While machines have the potential to automate certain aspects of the annotation process, human expertise is crucial for accurate and meaningful annotations. Human annotators bring a deeper understanding of intent, context, and domain-specific knowledge to the annotation process.

Why is data annotation important in machine learning?

Data annotation is important in machine learning as it enables models to learn from labeled examples and make accurate predictions.

Efficient Image Data Annotation Methods

Data annotation vs. data labeling: explained, understanding data labeling techniques.

Data labeling, or data annotation, is part of the preprocessing stage when developing a machine learning (ML) model.

Data labeling requires the identification of raw data (e.g., images, text files, videos), and then the addition of one or more labels to that data to specify its context for the models, allowing the machine learning model to make accurate predictions.
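As a rough illustration of what "raw data plus one or more labels" can look like in practice, here is a minimal sketch of a labeled image record. The schema, field names, and file path are hypothetical and chosen only for this example; real annotation tools each define their own export formats.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class BoundingBox:
    """Axis-aligned box in pixel coordinates (hypothetical schema)."""
    x: float
    y: float
    width: float
    height: float
    label: str


@dataclass
class LabeledImage:
    """One raw image together with the labels attached to it."""
    path: str
    boxes: List[BoundingBox] = field(default_factory=list)

    def class_names(self) -> Set[str]:
        """Distinct classes that appear in this image's annotations."""
        return {box.label for box in self.boxes}


example = LabeledImage(
    path="images/street_001.jpg",  # made-up file name
    boxes=[
        BoundingBox(x=34, y=50, width=120, height=80, label="car"),
        BoundingBox(x=210, y=40, width=45, height=110, label="pedestrian"),
    ],
)
print(sorted(example.class_names()))
```

The labels, not the pixels, are what give a supervised model its target: the same image with no boxes would be unlabeled data.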

Data labeling underpins different machine learning and deep learning use cases, including computer vision and natural language processing (NLP).

Companies integrate software, processes and data annotators to clean, structure and label data. This training data becomes the foundation for machine learning models. These labels allow analysts to isolate variables within datasets, and this, in turn, enables the selection of optimal data predictors for ML models. The labels identify the appropriate data vectors to be pulled in for model training, where the model, then, learns to make the best predictions.

Along with machine assistance, data labeling tasks require “human-in-the-loop (HITL)” participation. HITL leverages the judgment of human “data labelers” toward creating, training, fine-tuning and testing ML models. They help guide the data labeling process by feeding the models datasets that are most applicable to a given project.

Labeled data vs. unlabeled data

Computers use labeled and unlabeled data to train ML models, but what is the difference?

- Labeled data is used in supervised learning, whereas unlabeled data is used in unsupervised learning.

- Labeled data is more difficult to acquire and store (i.e. time consuming and expensive), whereas unlabeled data is easier to acquire and store.

- Labeled data can be used to determine actionable insights (e.g. forecasting tasks), whereas unlabeled data is more limited in its usefulness. Unsupervised learning methods can help discover new clusters of data, allowing for new categorizations when labeling.

Computers can also use combined data for semi-supervised learning, which reduces the need for manually labeled data while providing a large annotated dataset.
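One common way to combine labeled and unlabeled data is self-training (pseudo-labeling): a model fitted on the small labeled set labels the unlabeled examples it is confident about. The sketch below is only an illustration under simplifying assumptions: one numeric feature, a nearest-centroid "model", and a hypothetical `max_distance` confidence threshold. It is not a description of any particular product's method.

```python
from statistics import mean


def centroids(labeled):
    """Mean feature value per class, from (value, label) pairs."""
    by_class = {}
    for value, label in labeled:
        by_class.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_class.items()}


def pseudo_label(labeled, unlabeled, max_distance):
    """Label each unlabeled value with its nearest class centroid,
    but only when it lies within max_distance of that centroid."""
    cents = centroids(labeled)
    accepted, remaining = [], []
    for value in unlabeled:
        label, center = min(cents.items(), key=lambda item: abs(value - item[1]))
        if abs(value - center) <= max_distance:
            accepted.append((value, label))
        else:
            remaining.append(value)  # too ambiguous: leave for a human labeler
    return labeled + accepted, remaining


labeled = [(1.0, "small"), (1.4, "small"), (9.0, "large"), (9.6, "large")]
unlabeled = [1.1, 8.8, 5.0]
expanded, still_unlabeled = pseudo_label(labeled, unlabeled, max_distance=1.0)
print(len(expanded), still_unlabeled)
```

Here four hand-labeled points grow into six labeled points, while the ambiguous value `5.0` stays unlabeled for manual review.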

Data labeling is a critical step in developing a high-performance ML model. Though labeling appears simple, it’s not always easy to implement. As a result, companies must consider multiple factors and methods to determine the best approach to labeling. Since each data labeling method has its pros and cons, a detailed assessment of task complexity, as well as the size, scope and duration of the project is advised.

Here are some paths to labeling your data:

- Internal labeling - Using in-house data science experts simplifies tracking, provides greater accuracy, and increases quality. However, this approach typically requires more time and favors large companies with extensive resources.

- Synthetic labeling - This approach generates new project data from pre-existing datasets, which enhances data quality and time efficiency. However, synthetic labeling requires extensive computing power, which can increase pricing.

- Programmatic labeling - This automated data labeling process uses scripts to reduce time consumption and the need for human annotation. However, the possibility of technical problems requires HITL to remain a part of the quality assurance (QA) process.

- Outsourcing - This can be an optimal choice for high-level temporary projects, but developing and managing a freelance-oriented workflow can also be time-consuming. Though freelancing platforms provide comprehensive candidate information to ease the vetting process, hiring managed data labeling teams provides pre-vetted staff and pre-built data labeling tools.

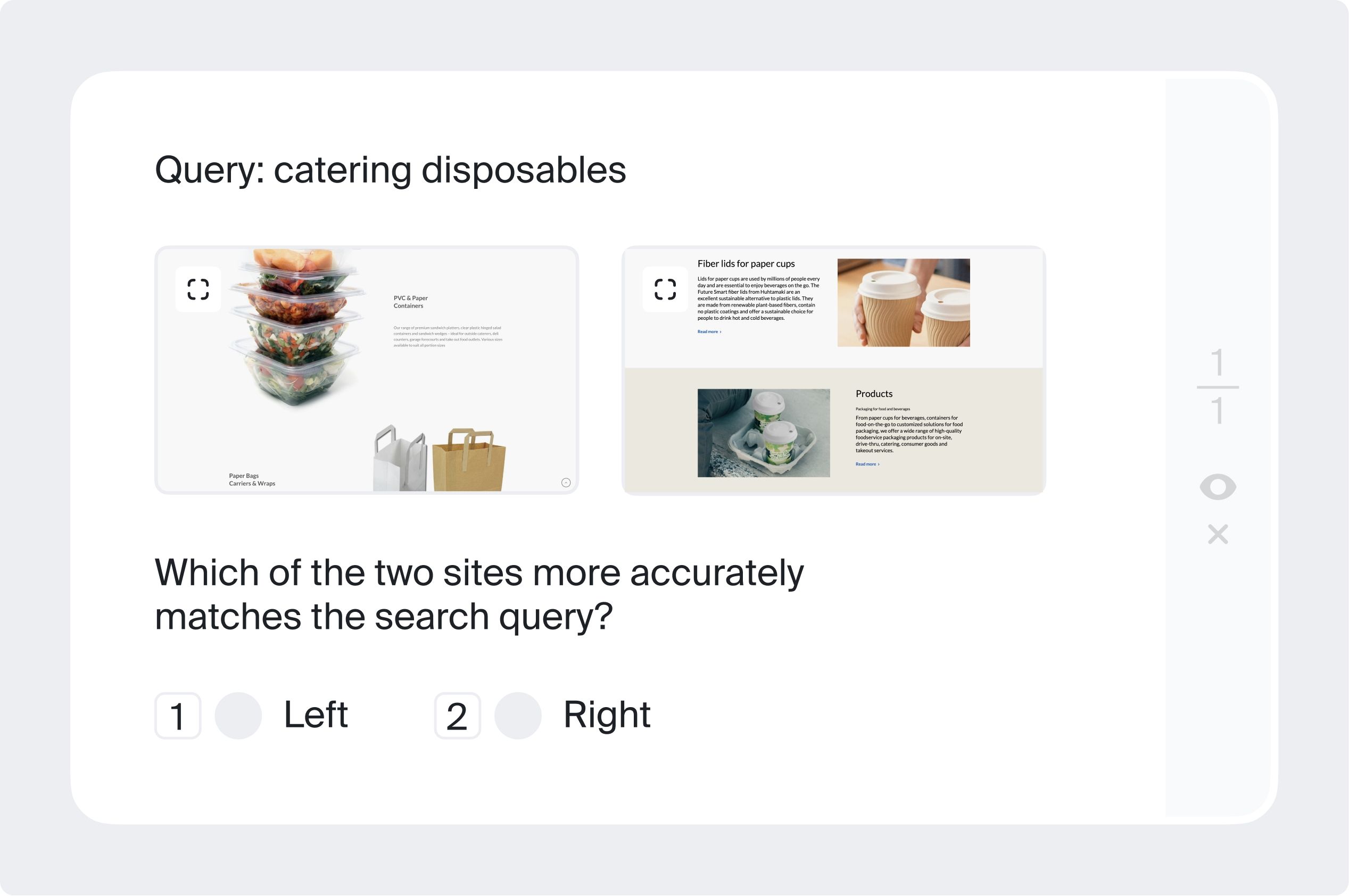

- Crowdsourcing - This approach is quicker and more cost-effective due to its micro-tasking capability and web-based distribution. However, worker quality, QA, and project management vary across crowdsourcing platforms. One of the most famous examples of crowdsourced data labeling is reCAPTCHA. This project was two-fold in that it controlled for bots while simultaneously improving data annotation of images. For example, a reCAPTCHA prompt would ask a user to identify all the photos containing a car to prove that they were human, and the program could then check that answer against the results of other users. The input from these users provided a database of labels for an array of images.
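The programmatic labeling path from the list above can be sketched with a few rule-based labeling functions. This is a minimal illustration, assuming a sentiment task and made-up keyword rules; any item where the rules disagree or stay silent is routed to a human reviewer, which is where HITL stays in the QA loop.

```python
import re

ABSTAIN = None  # a rule returns this when it has no opinion


def rule_positive(text):
    """Hypothetical keyword rule for positive sentiment."""
    return "positive" if re.search(r"\b(great|love|excellent)\b", text, re.I) else ABSTAIN


def rule_negative(text):
    """Hypothetical keyword rule for negative sentiment."""
    return "negative" if re.search(r"\b(terrible|hate|broken)\b", text, re.I) else ABSTAIN


RULES = [rule_positive, rule_negative]


def label_programmatically(texts):
    """Auto-label texts where exactly one label is proposed; queue the rest."""
    auto_labeled, needs_review = [], []
    for text in texts:
        votes = {rule(text) for rule in RULES} - {ABSTAIN}
        if len(votes) == 1:
            auto_labeled.append((text, votes.pop()))
        else:
            needs_review.append(text)  # conflicting or no votes: send to a human
    return auto_labeled, needs_review


reviews = [
    "I love this keyboard",
    "Arrived broken and late",
    "Great screen, terrible battery",
    "It is a phone",
]
auto_labeled, needs_review = label_programmatically(reviews)
print(auto_labeled)
print(needs_review)
```

The first two reviews are labeled automatically; the mixed review and the neutral one land in the review queue.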

The general tradeoff of data labeling is that while it can decrease a business’s time to scale, it tends to come at a cost. More accurate data generally improves model predictions, so despite its high cost, the value that it provides is usually well worth the investment. Since data annotation provides more context to datasets, it enhances the performance of exploratory data analysis as well as machine learning (ML) and artificial intelligence (AI) applications. For example, data labeling produces more relevant search results across search engine platforms and better product recommendations on e-commerce platforms. Let’s delve deeper into other key benefits and challenges:

Data labeling provides users, teams and companies with greater context, quality and usability. More specifically, you can expect:

- More Precise Predictions: Accurate data labeling ensures better quality assurance within machine learning algorithms, allowing the model to train and yield the expected output. Otherwise, as the old saying goes, “garbage in, garbage out.” Properly labeled data provide the “ground truth” (i.e., how labels reflect “real world” scenarios) for testing and iterating subsequent models.

- Better Data Usability: Data labeling can also improve usability of data variables within a model. For example, you might reclassify a categorical variable as a binary variable to make it more consumable for a model. Aggregating data in this way can optimize the model by reducing the number of model variables or enable the inclusion of control variables. Whether you’re using data to build computer vision models (e.g. putting bounding boxes around objects) or NLP models (e.g. classifying text for social sentiment), utilizing high-quality data is a top priority.
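To make the "categorical to binary" example concrete, here is a small sketch. The variable (`plan`) and its categories are invented for illustration; the point is only that several categories collapse into a single 0/1 feature.

```python
def to_binary(values, positive_categories):
    """Map each categorical value to 1 if it is in positive_categories, else 0."""
    positive = set(positive_categories)
    return [1 if value in positive else 0 for value in values]


# Hypothetical subscription tiers collapsed into "paying customer or not".
plan = ["free", "basic", "pro", "enterprise", "free"]
is_paying = to_binary(plan, positive_categories={"basic", "pro", "enterprise"})
print(is_paying)
```

A four-level variable becomes one binary column, which reduces the number of model variables at the cost of no longer distinguishing between paid tiers.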

Challenges

Data labeling is not without its challenges. In particular, some of the most common challenges are:

- Expensive and time-consuming: While data labeling is critical for machine learning models, it can be costly from both a resource and time perspective. If a business takes a more automated approach, engineering teams will still need to set up data pipelines prior to data processing, and manual labeling will almost always be expensive and time-consuming.

- Prone to human error: These labeling approaches are also subject to human error (e.g. coding errors, manual entry errors), which can decrease the quality of data. This, in turn, leads to inaccurate data processing and modeling. Quality assurance checks are essential to maintaining data quality.

No matter the approach, the following best practices optimize data labeling accuracy and efficiency:

- Intuitive and streamlined task interfaces minimize cognitive load and context switching for human labelers.

- Consensus: Measures the rate of agreement between multiple labelers (human or machine). A consensus score is calculated by dividing the sum of agreeing labels by the total number of labels per asset.

- Label auditing: Verifies the accuracy of labels and updates them as needed.

- Transfer learning: Takes one or more pre-trained models from one dataset and applies them to another. This can include multi-task learning, in which multiple tasks are learned in tandem.

- Membership query synthesis - Generates a synthetic instance and requests a label for it.

- Pool-based sampling - Ranks all unlabeled instances according to informativeness measurement and selects the best queries to annotate.

- Stream-based selective sampling - Selects unlabeled instances one by one, and labels or ignores them depending on their informativeness or uncertainty.
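Two items in the list above lend themselves to a short sketch: the consensus score and pool-based sampling. The code below rests on two assumptions that the text does not spell out. First, "agreeing labels" is read as the labels that match the most common label on an asset. Second, "informativeness" is measured as binary-classifier uncertainty, that is, how close a predicted probability is to 0.5. Other definitions are possible.

```python
from collections import Counter


def consensus_score(labels):
    """Share of an asset's labels that agree with its most common label."""
    if not labels:
        raise ValueError("asset has no labels")
    _, agreeing = Counter(labels).most_common(1)[0]
    return agreeing / len(labels)


def uncertainty(probability):
    """1.0 when the model is undecided (p = 0.5), 0.0 when it is certain."""
    return 1 - abs(probability - 0.5) * 2


def pool_based_queries(pool, k):
    """Rank the whole unlabeled pool by uncertainty and return the top k ids.

    `pool` maps an item id to the model's predicted probability of the
    positive class (an assumed binary setting).
    """
    ranked = sorted(pool, key=lambda item_id: uncertainty(pool[item_id]), reverse=True)
    return ranked[:k]


# Four labelers annotated the same image; three of them agree.
score = consensus_score(["cat", "cat", "dog", "cat"])

# Made-up model predictions for four unlabeled images.
pool = {"img_a": 0.97, "img_b": 0.52, "img_c": 0.10, "img_d": 0.61}
queries = pool_based_queries(pool, k=2)
print(score, queries)
```

A low consensus score flags assets worth auditing, while the pool-based ranking sends the images the model is least sure about to annotators first.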

Though data labeling can enhance accuracy, quality and usability in multiple contexts across industries, its more prominent use cases include:

- Computer vision: A field of AI that uses training data to build a computer vision model that enables image segmentation and category automation, identifies key points in an image and detects the location of objects. In fact, IBM offers a computer vision platform, Maximo Visual Inspection, that enables subject matter experts (SMEs) to label and train deep learning vision models that can be deployed in the cloud, edge devices, and local data centers. Computer vision is used in multiple industries - from energy and utilities to manufacturing and automotive. By 2022, this surging field is expected to reach a market value of USD 48.6 billion.

- Natural language processing (NLP): A branch of AI that combines computational linguistics with statistical, machine learning, and deep learning models to identify and tag important sections of text that generate training data for sentiment analysis, entity name recognition and optical character recognition. NLP is increasingly being used in enterprise solutions like spam detection, machine translation, speech recognition, text summarization, virtual assistants and chatbots, and voice-operated GPS systems. This has made NLP a critical component in the evolution of mission-critical business processes.

What is Data Annotation? Definition, Tools, Types and More

Introduction

Data annotation plays a crucial role in the field of machine learning, enabling the development of accurate and reliable models. In this article, we will explore the various aspects of data annotation, including its importance, types, tools, and techniques. We will also delve into the different career opportunities available in this field, the industry applications, job market trends, and the salaries associated with data annotation.

Let’s get started!

What is Data Annotation?

Data annotation involves the process of labeling or tagging data to make it understandable for machines. It provides the necessary context and information for machine learning algorithms to learn and make accurate predictions. By annotating data, we enable machines to recognize patterns, objects, and sentiments, thereby enhancing their ability to perform complex tasks.

What is the Importance of Data Annotation in Machine Learning?

Data annotation is a critical component in machine learning as it serves as the foundation for training models. Without properly annotated data, machine learning algorithms would struggle to understand and interpret the input. Accurate and comprehensive data annotation ensures that models can make informed decisions and predictions, leading to improved performance and reliability.

Types of Data Annotation

Data annotation can be performed using various tools and techniques, depending on the complexity and requirements of the task at hand.

What is the Process?

The data annotation process involves several stages to ensure the quality and reliability of the annotated data.