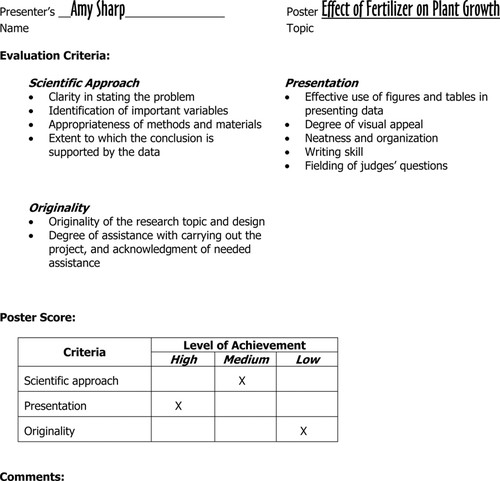

Rubrics for Oral Presentations

Introduction.

Many instructors require students to give oral presentations, which they evaluate and count in students’ grades. It is important that instructors clarify their goals for these presentations as well as the student learning objectives to which they are related. Embedding the assignment in course goals and learning objectives allows instructors to be clear with students about their expectations and to develop a rubric for evaluating the presentations.

A rubric is a scoring guide that articulates and assesses specific components and expectations for an assignment. Rubrics identify the various criteria relevant to an assignment and then explicitly state the possible levels of achievement along a continuum, so that an effective rubric accurately reflects the expectations of an assignment. Using a rubric to evaluate student performance has advantages for both instructors and students.

Rubrics can be either analytic or holistic. An analytic rubric comprises a set of specific criteria, with each one evaluated separately and receiving a separate score. The template resembles a grid with the criteria listed in the left column and levels of performance listed across the top row, using numbers and/or descriptors. The cells within the center of the rubric contain descriptions of what expected performance looks like for each level of performance.

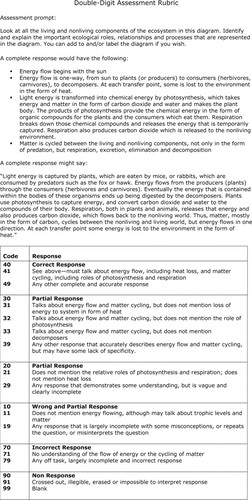

A holistic rubric consists of a set of descriptors that generate a single, global score for the entire work. The single score is based on raters’ overall perception of the quality of the performance. Often, sentence- or paragraph-length descriptions of different levels of competencies are provided.

When applied to an oral presentation, a rubric should reflect the elements of the presentation that will be evaluated as well as their relative importance. The instructor must therefore decide whether to include dimensions relevant to both form and content or only one of them, and, if only one, which. The instructor must also decide how to weight each dimension: are they all equally important, or are some more important than others? Finally, if the presentation represents a group project, the instructor must decide how to balance grading individual and group contributions.
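The weighting decision can be made concrete with a little arithmetic. The Python sketch below is a minimal illustration, not a method prescribed by any of the sources here: it assumes a hypothetical four-criterion analytic rubric scored on a 1-4 scale with made-up weights, and converts one student's criterion scores into a single weighted percentage.

```python
# Hypothetical analytic rubric: each criterion is scored on a 1-4 scale
# (4 = highest level) and carries an assumed weight reflecting its importance.
WEIGHTS = {
    "Knowledge of content": 0.4,
    "Organization of content": 0.3,
    "Visual aids/handouts": 0.1,
    "Eye contact/audience engagement": 0.2,
}
MAX_LEVEL = 4


def weighted_percentage(scores: dict[str, int], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine per-criterion scores into one percentage using the weights."""
    if set(scores) != set(weights):
        raise ValueError("every weighted criterion needs exactly one score")
    total_weight = sum(weights.values())
    # Each criterion contributes its weight times the fraction of the top level reached.
    earned = sum(weights[c] * scores[c] / MAX_LEVEL for c in weights)
    return round(100 * earned / total_weight, 1)


if __name__ == "__main__":
    student = {
        "Knowledge of content": 4,
        "Organization of content": 3,
        "Visual aids/handouts": 2,
        "Eye contact/audience engagement": 3,
    }
    print(weighted_percentage(student))
```

Dividing by the total weight means the weights do not have to sum to 1, and setting all weights equal reproduces a plain unweighted average.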

Creating Rubrics

The steps for creating an analytic rubric include the following:

1. Clarify the purpose of the assignment. What learning objectives are associated with the assignment?

2. Look for existing rubrics that can be adopted or adapted for the specific assignment

3. Define the criteria to be evaluated

4. Choose the rating scale to measure levels of performance

5. Write descriptions for each criterion for each performance level of the rating scale

6. Test and revise the rubric

Examples of criteria that have been included in rubrics for evaluating oral presentations include:

- Knowledge of content

- Organization of content

- Presentation of ideas

- Research/sources

- Visual aids/handouts

- Language clarity

- Grammatical correctness

- Time management

- Volume of speech

- Rate/pacing of speech

- Mannerisms/gestures

- Eye contact/audience engagement

Examples of scales/ratings that have been used to rate student performance include:

- Strong, Satisfactory, Weak

- Beginning, Intermediate, High

- Exemplary, Competent, Developing

- Excellent, Competent, Needs Work

- Exceeds Standard, Meets Standard, Approaching Standard, Below Standard

- Exemplary, Proficient, Developing, Novice

- Excellent, Good, Marginal, Unacceptable

- Advanced, Intermediate High, Intermediate, Developing

- Exceptional, Above Average, Sufficient, Minimal, Poor

- Master, Distinguished, Proficient, Intermediate, Novice

- Excellent, Good, Satisfactory, Poor, Unacceptable

- Always, Often, Sometimes, Rarely, Never

- Exemplary, Accomplished, Acceptable, Minimally Acceptable, Emerging, Unacceptable

Grading and Performance Rubrics Carnegie Mellon University Eberly Center for Teaching Excellence & Educational Innovation

Creating and Using Rubrics Carnegie Mellon University Eberly Center for Teaching Excellence & Educational Innovation

Using Rubrics Cornell University Center for Teaching Innovation

Rubrics DePaul University Teaching Commons

Building a Rubric University of Texas/Austin Faculty Innovation Center

Building a Rubric Columbia University Center for Teaching and Learning

Rubric Development University of West Florida Center for University Teaching, Learning, and Assessment

Creating and Using Rubrics Yale University Poorvu Center for Teaching and Learning

Designing Grading Rubrics Brown University Sheridan Center for Teaching and Learning

Examples of Oral Presentation Rubrics

Oral Presentation Rubric Pomona College Teaching and Learning Center

Oral Presentation Evaluation Rubric University of Michigan

Oral Presentation Rubric Roanoke College

Oral Presentation: Scoring Guide Fresno State University Office of Institutional Effectiveness

Presentation Skills Rubric State University of New York/New Paltz School of Business

Oral Presentation Rubric Oregon State University Center for Teaching and Learning

Oral Presentation Rubric Purdue University College of Science

Group Class Presentation Sample Rubric Pepperdine University Graziadio Business School

Eberly Center

What Are Rubrics?

A rubric is a scoring tool that explicitly represents the performance expectations for an assignment or piece of work. A rubric divides the assigned work into component parts and provides clear descriptions of the characteristics of the work associated with each component, at varying levels of mastery. Rubrics can be used for a wide array of assignments: papers, projects, oral presentations, artistic performances, group projects, etc. Rubrics can be used as scoring or grading guides, to provide formative feedback to support and guide ongoing learning efforts, or both.

Advantages of Using Rubrics

Using a rubric provides several advantages to both instructors and students. Grading according to an explicit and descriptive set of criteria that is designed to reflect the weighted importance of the objectives of the assignment helps ensure that the instructor’s grading standards don’t change over time. Grading consistency is difficult to maintain over time because of fatigue, shifting standards based on prior experience, or intrusion of other criteria. Furthermore, rubrics can reduce the time spent grading by reducing uncertainty and by allowing instructors to refer to the rubric description associated with a score rather than having to write long comments. Finally, grading rubrics are invaluable in large courses that have multiple graders (other instructors, teaching assistants, etc.) because they can help ensure consistency across graders and reduce the systematic bias that can be introduced between graders.

Used more formatively, rubrics can help instructors get a clearer picture of the strengths and weaknesses of their class. By recording the component scores and tallying up the number of students scoring below an acceptable level on each component, instructors can identify those skills or concepts that need more instructional time and student effort.
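The tallying described above is simple to automate once component scores are recorded. This Python sketch assumes hypothetical scores on a 1-4 scale and treats 3 as the minimum acceptable level; both the data and the threshold are illustrative assumptions.

```python
# Assumed minimum acceptable level on a hypothetical 1-4 scale.
ACCEPTABLE = 3

# Hypothetical component scores for a small class.
class_scores = [
    {"Organization": 4, "Research/sources": 2, "Time management": 3},
    {"Organization": 3, "Research/sources": 2, "Time management": 2},
    {"Organization": 2, "Research/sources": 3, "Time management": 4},
    {"Organization": 4, "Research/sources": 1, "Time management": 3},
]


def count_below_threshold(scores, threshold=ACCEPTABLE):
    """Count, for each component, how many students scored below threshold."""
    tally = {}
    for student in scores:
        for component, score in student.items():
            tally.setdefault(component, 0)  # list every component, even with zero
            if score < threshold:
                tally[component] += 1
    # Components with the most low scores come first: candidates for more class time.
    return dict(sorted(tally.items(), key=lambda item: item[1], reverse=True))


for component, n in count_below_threshold(class_scores).items():
    print(f"{component}: {n} of {len(class_scores)} students below acceptable")
```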

Grading rubrics are also valuable to students. A rubric can help instructors communicate to students the specific requirements and acceptable performance standards of an assignment. When rubrics are given to students with the assignment description, they can help students monitor and assess their progress as they work toward clearly indicated goals. When assignments are scored and returned with the rubric, students can more easily recognize the strengths and weaknesses of their work and direct their efforts accordingly.

Examples of Rubrics

Here are links to a diverse set of rubrics designed by Carnegie Mellon faculty and faculty at other institutions. Although your particular field of study and type of assessment activity may not be represented currently, viewing a rubric that is designed for a similar activity may provide you with ideas on how to divide your task into components and how to describe the varying levels of mastery.

Paper Assignments

- Example 1: Philosophy Paper. This rubric was designed for student papers in a range of philosophy courses, CMU.

- Example 2: Psychology Assignment. A short concept-application homework assignment in cognitive psychology, CMU.

- Example 3: Anthropology Writing Assignments. This rubric was designed for a series of short writing assignments in anthropology, CMU.

- Example 4: History Research Paper. This rubric was designed for essays and research papers in history, CMU.

Projects

- Example 1: Capstone Project in Design. This rubric describes the components and standard of performance from the research phase to the final presentation for a senior capstone project in the School of Design, CMU.

- Example 2: Engineering Design Project. This rubric describes performance standards on three aspects of a team project: Research and Design, Communication, and Team Work.

Oral Presentations

- Example 1: Oral Exam. This rubric describes a set of components and standards for assessing performance on an oral exam in an upper-division history course, CMU.

- Example 2: Oral Communication

- Example 3: Group Presentations. This rubric describes a set of components and standards for assessing group presentations in a history course, CMU.

Class Participation/Contributions

- Example 1: Discussion Class. This rubric assesses the quality of student contributions to class discussions. It is appropriate for an undergraduate-level course, CMU.

- Example 2: Advanced Seminar. This rubric is designed for assessing discussion performance in an advanced undergraduate or graduate seminar.

Rubric Best Practices, Examples, and Templates

A rubric is a scoring tool that identifies the different criteria relevant to an assignment, assessment, or learning outcome and states the possible levels of achievement in a specific, clear, and objective way. Use rubrics to assess project-based student work including essays, group projects, creative endeavors, and oral presentations.

Rubrics can help instructors communicate expectations to students and assess student work fairly, consistently and efficiently. Rubrics can provide students with informative feedback on their strengths and weaknesses so that they can reflect on their performance and work on areas that need improvement.

How to Get Started


Step 1: Analyze the assignment

The first step in the rubric creation process is to analyze the assignment or assessment for which you are creating a rubric. To do this, consider the following questions:

- What is the purpose of the assignment and your feedback? What do you want students to demonstrate by completing this assignment (i.e., what learning objectives does it measure)? Is it a summative assessment, or will students use the feedback to create an improved product?

- Does the assignment break down into different or smaller tasks? Are these tasks as important as the main assignment?

- What would an “excellent” assignment look like? An “acceptable” assignment? One that still needs major work?

- How detailed do you want the feedback you give students to be? Do you want/need to give them a grade?

Step 2: Decide what kind of rubric you will use

Types of rubrics: holistic, analytic/descriptive, single-point

Holistic Rubric. A holistic rubric includes all the criteria (such as clarity, organization, mechanics, etc.) to be considered together and included in a single evaluation. With a holistic rubric, the rater or grader assigns a single score based on an overall judgment of the student’s work, using descriptions of each performance level to assign the score.

Advantages of holistic rubrics:

- Can place an emphasis on what learners can demonstrate rather than what they cannot

- Save grader time by minimizing the number of evaluations to be made for each student

- Can be used consistently across raters, provided they have all been trained

Disadvantages of holistic rubrics:

- Provide less specific feedback than analytic/descriptive rubrics

- Can be difficult to choose a score when a student’s work is at varying levels across the criteria

- Cannot indicate any weighting of criteria

Analytic/Descriptive Rubric. An analytic or descriptive rubric often takes the form of a table with the criteria listed in the left column and with levels of performance listed across the top row. Each cell contains a description of what the specified criterion looks like at a given level of performance. Each of the criteria is scored individually.

Advantages of analytic rubrics:

- Provide detailed feedback on areas of strength or weakness

- Each criterion can be weighted to reflect its relative importance

Disadvantages of analytic rubrics:

- More time-consuming to create and use than a holistic rubric

- May not be used consistently across raters unless the cells are well defined

- May result in giving less personalized feedback

Single-Point Rubric. A single-point rubric breaks down the components of an assignment into different criteria, but instead of describing different levels of performance, it describes only the “proficient” level. Space is provided for instructors to give individualized comments that help students improve and/or show where they excelled beyond the proficiency descriptors.

Advantages of single-point rubrics:

- Easier to create than an analytic/descriptive rubric

- Perhaps more likely that students will read the descriptors

- Areas of concern and excellence are open-ended

- May shift focus away from the grade/points

- May increase student creativity in project-based assignments

Disadvantage of single-point rubrics: Requires more work for instructors writing feedback

Step 3 (Optional): Look for templates and examples.

You might Google, “Rubric for persuasive essay at the college level” and see if there are any publicly available examples to start from. Ask your colleagues if they have used a rubric for a similar assignment. Some examples are also available at the end of this article. These rubrics can be a great starting point, but work through steps 4, 5, and 6 below to ensure that the rubric matches your assignment description, learning objectives, and expectations.

Step 4: Define the assignment criteria

Make a list of the knowledge and skills you are measuring with the assignment/assessment. Refer to your stated learning objectives, the assignment instructions, past examples of student work, etc., for help.

Helpful strategies for defining grading criteria:

- Collaborate with co-instructors, teaching assistants, and other colleagues

- Brainstorm and discuss with students

- Evaluate the criteria on your list:

  - Can they be observed and measured?

  - Are they important and essential?

  - Are they distinct from other criteria?

  - Are they phrased in precise, unambiguous language?

- Revise the criteria as needed

- Consider whether some criteria are more important than others, and how you will weight them.

Step 5: Design the rating scale

Most rating scales include between 3 and 5 levels. Consider the following questions when designing your rating scale:

- Given what students are able to demonstrate in this assignment/assessment, what are the possible levels of achievement?

- How many levels would you like to include? (More levels means more detailed descriptions.)

- Will you use numbers and/or descriptive labels for each level of performance? (for example 5, 4, 3, 2, 1 and/or Exceeds expectations, Accomplished, Proficient, Developing, Beginning, etc.)

- Don’t use too many columns, and recognize that some criteria can have more columns than others. The rubric needs to be comprehensible and organized. Pick the right number of columns so that the criteria flow logically and naturally across levels.
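If you pair numbers with descriptive labels, keeping the mapping in one place ensures that scores and labels never disagree. The sketch below is a hypothetical Python example that assigns the five labels from the example in the list above to the numbers 5 through 1; the pairing itself is an assumption for illustration.

```python
# Hypothetical five-level scale, highest level first, paired with 5..1.
LABELS = ["Exceeds expectations", "Accomplished", "Proficient", "Developing", "Beginning"]
SCALE = {label: points for label, points in zip(LABELS, range(len(LABELS), 0, -1))}


def to_points(label: str) -> int:
    """Convert a descriptive level label to its numeric score."""
    try:
        return SCALE[label]
    except KeyError:
        raise ValueError(f"unknown level {label!r}; expected one of {LABELS}") from None


def to_label(points: int) -> str:
    """Convert a numeric score back to its descriptive label."""
    for label, value in SCALE.items():
        if value == points:
            return label
    raise ValueError(f"no level is worth {points} points")


print(to_points("Proficient"), to_label(5))
```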

Step 6: Write descriptions for each level of the rating scale

Artificial intelligence tools such as ChatGPT can be useful for drafting a rubric. Engineer the prompt you give the AI assistant so that you get what you want: for example, include the assignment description, the criteria you consider important, and the number of performance levels you want. Use the results as a starting point, and adjust the descriptions as needed.

Building a rubric from scratch

For a single-point rubric, describe what would be considered “proficient,” i.e., B-level work, and provide that description. You might also include suggestions for students outside of the actual rubric about how they might surpass proficient-level work.

For analytic and holistic rubrics, create statements of expected performance at each level of the rubric.

- Consider what descriptor is appropriate for each criterion, e.g., presence vs. absence, complete vs. incomplete, many vs. none, major vs. minor, consistent vs. inconsistent, always vs. never. If you have an indicator described in one level, it will need to be described in each level.

- You might start with the top/exemplary level. What does it look like when a student has achieved excellence on every criterion? Then, look at the “bottom” level. What does it look like when a student has not achieved the learning goals in any way? Then, complete the in-between levels.

- For an analytic rubric, do this for each criterion so that every cell in the table is filled. These descriptions help students understand your expectations and their performance in regard to those expectations.
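Because an indicator described at one level must also be described at every other level, a draft analytic rubric can be checked mechanically for empty cells. This Python sketch assumes the rubric is stored as a nested dictionary mapping each criterion to its level descriptions; that structure, the level names, and the sample text are all hypothetical.

```python
# Hypothetical level names, highest first.
LEVELS = ["Exemplary", "Proficient", "Developing", "Novice"]

# A hypothetical draft analytic rubric: {criterion: {level: description}}.
draft = {
    "Volume of speech": {
        "Exemplary": "Audible throughout; volume varied for emphasis.",
        "Proficient": "Audible throughout.",
        "Developing": "Audible most of the time.",
        "Novice": "Often inaudible.",
    },
    "Time management": {
        "Exemplary": "Within the time limit; all sections covered.",
        "Proficient": "Within the time limit; one section rushed.",
        "Developing": "",  # not yet written
        # "Novice" is missing entirely
    },
}


def missing_cells(rubric: dict[str, dict[str, str]], levels: list[str]) -> list[tuple[str, str]]:
    """Return every (criterion, level) pair whose description is absent or blank."""
    gaps = []
    for criterion, cells in rubric.items():
        for level in levels:
            if not cells.get(level, "").strip():
                gaps.append((criterion, level))
    return gaps


for criterion, level in missing_cells(draft, LEVELS):
    print(f"Missing description: {criterion} / {level}")
```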

Well-written descriptions:

- Describe observable and measurable behavior

- Use parallel language across the scale

- Indicate the degree to which the standards are met

Step 7: Create your rubric

Create your rubric in a table or spreadsheet in Word, Google Docs, Sheets, etc., and then transfer it to Moodle by typing it in. You can also use online tools to create the rubric, but you will still have to type the criteria, indicators, levels, etc., into Moodle. Rubric creators: RubiStar, iRubric

Step 8: Pilot-test your rubric

Prior to implementing your rubric in a live course, obtain feedback from:

- Teaching assistants

Try out your new rubric on a sample of student work. After you pilot-test your rubric, analyze the results to consider its effectiveness and revise accordingly.

Best practices

- Limit the rubric to a single page for reading and grading ease

- Use parallel language. Use similar language and syntax/wording from column to column. Make sure that the rubric can be easily read from left to right or vice versa.

- Use student-friendly language. Make sure the language is appropriate to your students’ level. If you use academic language or concepts, you will need to teach those concepts.

- Share and discuss the rubric with your students. Students should understand that the rubric is there to help them learn, reflect, and self-assess. If students use a rubric, they will understand the expectations and their relevance to learning.

- Consider scalability and reusability of rubrics. Create rubric templates that you can alter as needed for multiple assignments.

- Maximize the descriptiveness of your language. Avoid words like “good” and “excellent.” For example, instead of saying, “uses excellent sources,” you might describe what makes a resource excellent so that students will know. You might also consider reducing the reliance on quantity, such as a number of allowable misspelled words. Focus instead, for example, on how distracting any spelling errors are.

Example of an analytic rubric for a final paper

Example of a holistic rubric for a final paper

Example of a single-point rubric

More examples:

- Single Point Rubric Template (variation)

- Analytic Rubric Template (make a copy to edit)

- A Rubric for Rubrics

- Bank of Online Discussion Rubrics in different formats

- Mathematical Presentations Descriptive Rubric

- Math Proof Assessment Rubric

- Kansas State Sample Rubrics

- Design Single Point Rubric

Technology Tools: Rubrics in Moodle

- Moodle Docs: Rubrics

- Moodle Docs: Grading Guide (use for single-point rubrics)

Tools with rubrics (other than Moodle)

- Google Assignments

- Turnitin Assignments: Rubric or Grading Form

Other resources

- DePaul University. (n.d.). Rubrics.

- Gonzalez, J. (2014). Know your terms: Holistic, analytic, and single-point rubrics. Cult of Pedagogy.

- Goodrich, H. (1996). Understanding rubrics. Teaching for Authentic Student Performance, 54(4), 14-17.

- Miller, A. (2012). Tame the beast: Tips for designing and using rubrics.

- Ragupathi, K., & Lee, A. (2020). Beyond fairness and consistency in grading: The role of rubrics in higher education. In C. Sanger & N. Gleason (Eds.), Diversity and Inclusion in Global Higher Education. Palgrave Macmillan, Singapore.

Center for Teaching Innovation


Using rubrics

A rubric is a type of scoring guide that assesses and articulates specific components and expectations for an assignment. Rubrics can be used for a variety of assignments: research papers, group projects, portfolios, and presentations.

Why use rubrics?

Rubrics help instructors:

- Assess assignments consistently from student to student.

- Save time in grading, both short-term and long-term.

- Give timely, effective feedback and promote student learning in a sustainable way.

- Clarify expectations and components of an assignment for both students and course teaching assistants (TAs).

- Refine teaching methods by evaluating rubric results.

Rubrics help students:

- Understand expectations and components of an assignment.

- Become more aware of their learning process and progress.

- Improve work through timely and detailed feedback.

Considerations for using rubrics

When developing rubrics consider the following:

- Although it takes time to build a rubric, time will be saved in the long run as grading and providing feedback on student work will become more streamlined.

- A rubric can be a fillable PDF that can easily be emailed to students.

- They can be used for oral presentations.

- They are a great tool to evaluate teamwork and individual contribution to group tasks.

- Rubrics facilitate peer-review by setting evaluation standards. Have students use the rubric to provide peer assessment on various drafts.

- Students can use them for self-assessment to improve personal performance and learning. Encourage students to use the rubrics to assess their own work.

- Motivate students to improve by letting them revise and resubmit work that incorporates the rubric feedback.

Getting Started with Rubrics

- Start small by creating one rubric for one assignment in a semester.

- Ask colleagues if they have developed rubrics for similar assignments or adapt rubrics that are available online. For example, the AACU has rubrics for topics such as written and oral communication, critical thinking, and creative thinking. RubiStar helps you to develop your rubric based on templates.

- Examine an assignment for your course. Outline the elements or critical attributes to be evaluated (these attributes must be objectively measurable).

- Create an evaluative range for performance quality under each element; for instance, “excellent,” “good,” “unsatisfactory.”

- Avoid using subjective or vague criteria such as “interesting” or “creative.” Instead, outline objective indicators that would fall under these categories.

- The criteria must clearly differentiate one performance level from another.

- Assign a numerical scale to each level.

- Give a draft of the rubric to your colleagues and/or TAs for feedback.

- Train students to use your rubric and solicit feedback. This will help you judge whether the rubric is clear to them and will identify any weaknesses.

- Rework the rubric based on the feedback.


Creating and using rubrics.

A rubric describes the criteria that will be used to evaluate a specific task, such as a student writing assignment, poster, oral presentation, or other project. Rubrics allow instructors to communicate expectations to students, allow students to check in on their progress mid-assignment, and can increase the reliability of scores. Research suggests that when rubrics are used on an instructional basis (for instance, included with an assignment prompt for reference), students tend to utilize and appreciate them (Reddy and Andrade, 2010).

Rubrics generally exist in tabular form and are composed of:

- A description of the task that is being evaluated,

- The criteria that are being evaluated (row headings),

- A rating scale that demonstrates different levels of performance (column headings), and

- A description of each level of performance for each criterion (within each box of the table).

When multiple individuals are grading, rubrics also help improve the consistency of scoring across all graders. Instructors should ensure that the structure, presentation, consistency, and use of their rubrics meet rigorous standards of validity, reliability, and fairness (Andrade, 2005).

Major Types of Rubrics

There are two major categories of rubrics:

- Holistic: In this type of rubric, a single score is provided based on raters’ overall perception of the quality of the performance. Holistic rubrics are useful when only one attribute is being evaluated, as they detail different levels of performance within a single attribute. This category of rubric is designed for quick scoring but does not provide detailed feedback. For these rubrics, the criteria may be the same as the description of the task.

- Analytic: In this type of rubric, scores are provided for several different criteria that are being evaluated. Analytic rubrics provide more detailed feedback to students and instructors about their performance. Scoring is usually more consistent across students and graders with analytic rubrics.

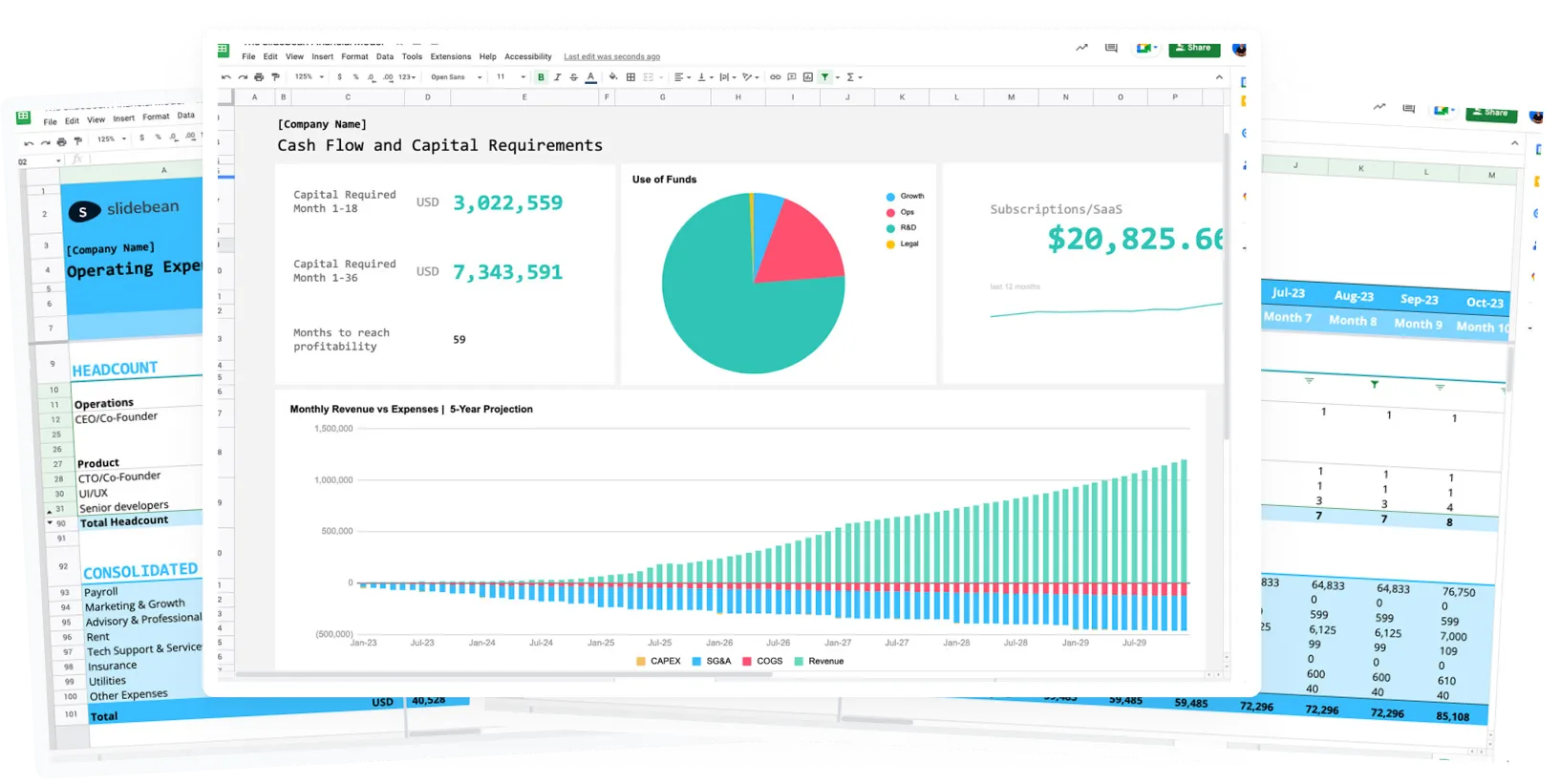

Rubrics use a scale that denotes level of success with a particular assignment, usually a 3-, 4-, or 5-category grid:

Figure 1: Grading Rubrics: Sample Scales (Brown Sheridan Center)

Sample Rubrics

Instructors can consider a sample holistic rubric developed for an English Writing Seminar course at Yale.

The Association of American Colleges and Universities also has a number of free (non-invasive free account required) analytic rubrics that can be downloaded and modified by instructors. These 16 VALUE rubrics enable instructors to measure items such as inquiry and analysis, critical thinking, written communication, oral communication, quantitative literacy, teamwork, problem-solving, and more.

Recommendations

The following provides a procedure for developing a rubric, adapted from Brown’s Sheridan Center for Teaching and Learning:

- Define the goal and purpose of the task that is being evaluated - Before constructing a rubric, instructors should review the learning outcomes associated with a given assignment. Are skills, content, and deeper conceptual knowledge clearly defined in the syllabus, and do class activities and assignments work towards intended outcomes? The rubric can only function effectively if goals are clear and student work progresses towards them.

- Decide what kind of rubric to use - The kind of rubric used may depend on the nature of the assignment, intended learning outcomes (for instance, does the task require the demonstration of several different skills?), and the amount and kind of feedback students will receive (for instance, is the task a formative or a summative assessment?). Instructors can refer to the descriptions above or to the “Additional Resources” below for kinds of rubrics.

- Define the criteria - Instructors can review their learning outcomes and assessment parameters to determine specific criteria for the rubric to cover. Instructors should consider what knowledge and skills are required for successful completion, and create a list of criteria that assess outcomes across different vectors (comprehensiveness, maturity of thought, revisions, presentation, timeliness, etc.). Criteria should be distinct and clearly described and, ideally, number no more than seven.

- Define the rating scale to measure levels of performance - Whatever rating scale instructors choose, they should ensure that it is clear and review it in class to field student questions and concerns. Instructors can consider whether the scale will include descriptors or only be numerical, and might include prompts on the rubric for reaching higher achievement levels. Rubrics typically include 3-5 levels in their rating scales (see Figure 1 above).

- Write descriptions for each performance level of the rating scale - Each level should be accompanied by a descriptive paragraph that outlines ideals for each level, lists or names all performance expectations within the level, and if possible, provides a detail or example of ideal performance within each level. Across the rubric, descriptions should be parallel, observable, and measurable.

- Test and revise the rubric - The rubric can be tested before implementation by arranging writing or testing conditions in which several graders or TFs use the rubric together. After grading with the rubric, graders might grade a similar set of materials without the rubric to check consistency. Instructors can consider discrepancies, share the rubric and results with faculty colleagues for further opinions, and revise the rubric for use in class. Instructors might also seek out colleagues’ rubrics for comparison. For course implementation, instructors might consider handing out rubrics during the first class to make grading expectations clear as early as possible. Rubrics should fit on one page so that descriptions and criteria are viewable quickly and simultaneously. During and after a class or course, instructors can collect feedback on the rubric’s clarity and effectiveness from TFs and even students through anonymous surveys. Comparing scores and quality of assignments with parallel or previous assignments that did not include a rubric can also reveal its effectiveness. Instructors should feel free to revise a rubric after a course, too, based on student performance and areas of confusion.
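When two graders pilot-test a rubric on the same set of work, a quick comparison of their scores shows where descriptions may be ambiguous. This Python sketch computes exact agreement, agreement within one level, and the items on which the graders differ; the scores are made-up pilot data on an assumed 1-4 scale, and more formal inter-rater statistics such as Cohen's kappa are not shown.

```python
# Hypothetical pilot data: two graders scored the same five presentations
# on one criterion using a 1-4 scale.
grader_a = [4, 3, 2, 3, 1]
grader_b = [4, 2, 2, 3, 3]


def agreement_report(a: list[int], b: list[int]) -> tuple[float, float, list[int]]:
    """Return exact agreement, within-one-level agreement, and disagreeing indices."""
    if len(a) != len(b) or not a:
        raise ValueError("both graders must score the same, non-empty set of work")
    exact = sum(x == y for x, y in zip(a, b)) / len(a)
    adjacent = sum(abs(x - y) <= 1 for x, y in zip(a, b)) / len(a)
    # Zero-based positions of the items the graders scored differently.
    discrepancies = [i for i, (x, y) in enumerate(zip(a, b)) if x != y]
    return exact, adjacent, discrepancies


exact, adjacent, differ = agreement_report(grader_a, grader_b)
print(f"Exact agreement: {exact:.0%}")
print(f"Within one level: {adjacent:.0%}")
print(f"Items to discuss (0-based): {differ}")
```

Items where graders differ by more than one level are usually the best starting point for rewording a cell description.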

Additional Resources

Cox, G. C., Brathwaite, B. H., & Morrison, J. (2015). The Rubric: An assessment tool to guide students and markers. Advances in Higher Education, 149-163.

Creating and Using Rubrics - Carnegie Mellon Eberly Center for Teaching Excellence & Educational Innovation

Creating a Rubric - UC Denver Center for Faculty Development

Grading Rubric Design - Brown University Sheridan Center for Teaching and Learning

Moskal, B. M. (2000). Scoring rubrics: What, when and how? Practical Assessment, Research & Evaluation, 7(3).

Quinlan, A. M. (2011). A complete guide to rubrics: Assessment made easy for teachers of K-college (2nd ed.). Rowman & Littlefield Education.

Andrade, H. (2005). Teaching with rubrics: The good, the bad, and the ugly. College Teaching, 53(1), 27-30.

Reddy, Y. M., & Andrade, H. (2010). A review of rubric use in higher education. Assessment & Evaluation in Higher Education, 35(4), 435-448.

Sheridan Center for Teaching and Learning, Brown University


Rubrics are a set of criteria to evaluate performance on an assignment or assessment. Rubrics can communicate expectations regarding the quality of work to students and provide a standardized framework for instructors to assess work. Rubrics can be used for both formative and summative assessment. They are also crucial in encouraging self-assessment of work and structuring peer-assessments.

Why use rubrics?

Rubrics are an important tool to assess learning in an equitable and just manner. This is because they enable:

- A common set of standards and criteria to be uniformly applied, which can mitigate bias

- Transparency regarding the standards and criteria on which students are evaluated

- Efficient grading with timely and actionable feedback

- Identifying areas in which students need additional support and guidance

- The use of objective, criterion-referenced metrics for evaluation

Some instructors may be reluctant to provide a rubric to grade assessments, perceiving that it stifles student creativity (Haugnes & Russell, 2016). However, sharing the purpose of an assessment and the criteria for success in the form of a rubric, along with relevant examples, has been shown to improve the success of BIPOC, multiracial, and first-generation students in particular (Jonsson, 2014; Winkelmes et al., 2016). Improved success in assessments is generally associated with an increased sense of belonging, which, in turn, leads to higher student retention and more equitable outcomes in the classroom (Calkins & Winkelmes, 2018; Weisz et al., 2023). Without a rubric, students may have to guess the criteria on which they will be evaluated. Guessing at expectations may unfairly disadvantage students who are first-generation, BIPOC, international, or otherwise have not been exposed to the cultural norms that have dominated U.S. higher education institutions (Shapiro et al., 2023). Moreover, in such cases, criteria may be applied inconsistently across students, leading to biases in the grades awarded.

Steps for Creating a Rubric

Clearly state the purpose of the assessment, which topic(s) learners are being tested on, the type of assessment (e.g., a presentation, essay, group project), the skills they are being tested on (e.g., writing, comprehension, presentation, collaboration), and the goal of the assessment for instructors (e.g., gauging formative or summative understanding of the topic).

Determine the specific criteria or dimensions to evaluate in the assessment. These criteria should align with the learning objectives or outcomes being assessed. They typically form the rows in a rubric grid and describe the skills, knowledge, or behavior to be demonstrated. The set of criteria may include, for example, the idea/content, quality of arguments, organization, grammar, citations, and/or creativity in writing. Depending on the type of rubric, these criteria may form separate rows or be compiled into a single row.

(See the row headers of Figure 1.)

Create a scale of performance levels that describes the degree of proficiency attained for each criterion. The scale typically has 4 to 5 levels (although there may be fewer levels depending on the type of rubric used). The levels should also have meaningful labels (e.g., not meeting expectations, approaching expectations, meeting expectations, exceeding expectations). When labeling levels of performance, use inclusive language that can foster a growth mindset among students, especially when work may otherwise be deemed not to meet the mark. Some examples include “Does not yet meet expectations,” “Considerable room for improvement,” “Progressing,” “Approaching,” “Emerging,” and “Needs more work,” instead of terms like “Unacceptable,” “Fails,” “Poor,” or “Below Average.”

(See the column headers of Figure 1.)

Develop a clear and concise descriptor for each combination of criterion and performance level. These descriptors should provide examples or explanations of what constitutes each level of performance for each criterion. Typically, instructors should start by describing the highest and lowest levels of performance for a criterion and then describe the intermediate levels. It is important to keep the language uniform across all columns, e.g., use aligned syntax and wording in each column for a given criterion.

(See the cells of Figure 1.)

It is important to consider how each criterion is weighted, so that the weights reflect the importance of the learning objectives being tested. For example, if the primary goal of a research proposal is to test mastery of content and application of knowledge, these criteria should be weighted more heavily than other criteria (e.g., grammar, style of presentation). This can be done by assigning a different point scale to each criterion (e.g., a scale of 8-6-4-2 points across the levels of performance for higher-weight criteria and 4-3-2-1 points for lower-weight criteria). Further, the number of points awarded across levels of performance should be evenly spaced (e.g., 10-8-6-4 instead of 10-6-3-1). Finally, if a letter grade is associated with a particular assessment, consider how it relates to scores. For example, instead of awarding an A only when a student reaches the highest level of performance on every criterion, consider assigning an A to a range of scores (e.g., 28-30 total points) or to a combination of performance levels (e.g., exceeds expectations on higher-weight criteria and meets expectations on other criteria).

(See the numerical values in the column headers of Figure 1.)

Figure 1: Graphic describing the five basic elements of a rubric
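The weighting and grade-mapping advice in the last step is simple arithmetic, so it can be checked with a short script. The sketch below is a minimal illustration, not a tool from this guide: the four criterion names, the 8-6-4-2 and 4-3-2-1 point scales applied to them, and the letter-grade cutoffs for this 24-point rubric are all hypothetical choices made for the example.

```python
# Minimal sketch of weighted analytic-rubric scoring. The criterion names,
# point scales, and grade cutoffs are hypothetical examples.

LEVELS = ["exceeds", "meets", "approaching", "not yet"]  # best to worst

# Higher-weight criteria use an 8-6-4-2 scale; lower-weight ones use 4-3-2-1.
POINT_SCALES = {
    "content": [8, 6, 4, 2],
    "application": [8, 6, 4, 2],
    "grammar": [4, 3, 2, 1],
    "style": [4, 3, 2, 1],
}

# Each letter grade covers a range of totals, not only the maximum score.
GRADE_CUTOFFS = [(22, "A"), (19, "B"), (16, "C"), (13, "D"), (0, "F")]


def is_evenly_spaced(points):
    """True if consecutive point values differ by the same amount."""
    gaps = {a - b for a, b in zip(points, points[1:])}
    return len(gaps) == 1


def total_score(ratings):
    """Sum the points for the level each criterion was rated at."""
    return sum(POINT_SCALES[c][LEVELS.index(lvl)] for c, lvl in ratings.items())


def letter_grade(total):
    """Return the first letter whose minimum total is reached."""
    for minimum, letter in GRADE_CUTOFFS:
        if total >= minimum:
            return letter
    raise ValueError("total is below every cutoff")


ratings = {"content": "exceeds", "application": "exceeds",
           "grammar": "meets", "style": "meets"}
total = total_score(ratings)  # 8 + 8 + 3 + 3
print(total, letter_grade(total))  # 22 A
print(is_evenly_spaced([10, 8, 6, 4]), is_evenly_spaced([10, 6, 3, 1]))  # True False
```

With these assumed cutoffs, a student who exceeds expectations on both higher-weight criteria and meets them on the others earns an A without needing the maximum score, which mirrors the "combination of performance levels" approach described above.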

Note: Consider using a template rubric that can be used to evaluate similar activities in the classroom, to avoid the fatigue of developing multiple rubrics. Some tools, such as Rubistar or iRubric, provide suggested wording for each criterion depending on the type of assessment. Additionally, the above format can be incorporated into rubrics added directly in Canvas or in the grid view of rubrics in Gradescope, both common grading tools. Alternatively, tables in a word processor or spreadsheet may be used to build a rubric. You may also adapt the example rubrics provided below to the specific learning goals for the assessment, using the blank template rubrics we have provided alongside each type of rubric. Watch the linked video for a quick introduction to designing a rubric. Word document (docx) files linked below will download automatically to your device, whereas PDF files will open in a new tab.

Types of Rubrics

In analytic rubrics, one specifies at least two criteria and provides a separate score for each criterion. The steps outlined above for creating a rubric are typical for an analytic rubric. Analytic rubrics are used to provide detailed feedback to students and help identify strengths as well as particular areas in need of improvement. They can be particularly useful for providing formative feedback to students, for student peer assessment and self-assessment, or for project-based summative assessments that evaluate student learning across multiple criteria. You may use a blank analytic rubric template (docx) or adapt an existing sample of an analytic rubric (pdf).

Fig. 2: Graphic describing a sample analytic rubric (adapted from George Mason University, 2013)

Developmental rubrics are a subset of analytic rubrics that are typically used to assess student performance and engagement during a learning period rather than the end product. Such rubrics are typically used to assess soft skills and behaviors that are less tangible (e.g., intercultural maturity, empathy, collaboration skills). They are useful for assessing the extent to which students develop a particular skill, ability, or value in experiential learning-based programs. They are grounded in developmental theory (King & Baxter Magolda, 2005). Examples include an intercultural knowledge and competence rubric (docx) and a global learning rubric (docx).

Holistic rubrics evaluate all criteria on one scale, providing a single score that gives an overall impression of a student’s performance on an assessment. These rubrics also emphasize the overall quality of a student’s work, rather than delineating its shortfalls. However, a limitation of holistic rubrics is that they are not useful for providing specific, nuanced feedback or for identifying areas of improvement. Thus, they might be useful when grading summative assessments on which students have previously received detailed feedback using analytic or single-point rubrics. They may also be used to provide quick formative feedback on smaller assignments where no more than 2-3 criteria are being tested at once. Try using our blank holistic rubric template (docx) or adapt an existing sample of a holistic rubric (pdf).

Fig. 3: Graphic describing a sample holistic rubric (adapted from Teaching Commons, DePaul University)

Checklist rubrics contain only two levels of performance (e.g., yes/no, present/absent) across a longer list of criteria (typically more than 5). Because each criterion is simply either met or not met, checklist rubrics allow quick assessment. Consequently, they are preferable when introducing self- or peer-assessment, since each criterion can elicit only one of two responses, which makes evaluations more objective and grading uniform and quick. For similar reasons, such rubrics are useful for faculty in providing quick formative feedback, since they immediately highlight the specific criteria to improve on. Such rubrics are also used in grading summative assessments in courses using alternative grading systems such as specifications grading, contract grading, or credit/no-credit grading, wherein a minimum threshold of performance has to be met for the assessment. That said, developing checklist rubrics from existing analytic rubrics may require considerable upfront investment, given that criteria have to be phrased in a way that can only elicit binary responses. Here is a link to the checklist rubric template (docx).

Fig. 4: Graphic describing a sample checklist rubric
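Because a checklist rubric reduces each criterion to met or not met, grading with it amounts to counting. The sketch below assumes a hypothetical six-item checklist and a credit/no-credit rule in which every criterion must be met unless a lower threshold is passed in; both the criteria and the threshold are illustrative and are not taken from the linked template.

```python
# Minimal sketch of grading with a checklist rubric. The criteria and the
# default "all criteria must be met" threshold are hypothetical.

CHECKLIST = [
    "States a clear research question",
    "Cites at least three sources",
    "Includes a labeled figure",
    "Stays within the page limit",
    "Uses the required citation style",
    "Submitted on time",
]


def evaluate(checks, required=None):
    """Return (credit, unmet) for a dict mapping criterion -> True/False.

    Criteria missing from `checks` count as not met. `required` is the
    minimum number of met criteria needed for credit (default: all).
    """
    if required is None:
        required = len(CHECKLIST)
    unmet = [c for c in CHECKLIST if not checks.get(c, False)]
    met = len(CHECKLIST) - len(unmet)
    return met >= required, unmet


checks = {c: True for c in CHECKLIST}
checks["Includes a labeled figure"] = False
print(evaluate(checks))              # (False, ['Includes a labeled figure'])
print(evaluate(checks, required=5))  # (True, ['Includes a labeled figure'])
```

The list of unmet criteria doubles as the quick formative feedback described above, since it names exactly what a student needs to fix.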

A single point rubric is a modified version of a checklist-style rubric, in that it specifies a single column of criteria. However, rather than only indicating whether expectations are met or not, as a checklist rubric does, a single point rubric allows instructors to specify ways in which student work exceeds or falls short of expectations. The criteria to be tested are laid out in a central column describing the average expectation for the assignment. Instructors indicate areas of improvement to the left of the criteria, whereas areas of strength in student performance are indicated to the right. These types of rubrics provide flexibility in scoring and are typically used in courses with alternative grading systems such as ungrading or contract grading. However, they do require instructors to provide detailed feedback for each student, which can be infeasible for assessments in large classes. Here is a link to the single point rubric template (docx).

Fig. 5: Graphic describing a single point rubric (adapted from Teaching Commons, DePaul University)
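The three-column layout of a single point rubric can be represented as one record per criterion, with free-text notes on either side. The sketch below is a hypothetical illustration: the class name `SinglePointRow`, the column labels, and the example criteria are assumptions, and `render` only prints a plain-text table.

```python
# Minimal sketch of a single point rubric: the expectation sits in the
# center column, with notes on concerns (left) and strengths (right).
from dataclasses import dataclass


@dataclass
class SinglePointRow:
    criterion: str       # center column: the expected standard
    concerns: str = ""   # left column: areas for improvement
    strengths: str = ""  # right column: evidence of exceeding expectations


def render(rows, width=34):
    """Format rows as a plain-text, three-column table."""
    header = f"{'Concerns':<{width}} | {'Criterion':<{width}} | Strengths"
    lines = [header, "-" * len(header)]
    for row in rows:
        lines.append(f"{row.concerns:<{width}} | {row.criterion:<{width}} | {row.strengths}")
    return "\n".join(lines)


rows = [
    SinglePointRow("Thesis is clear and arguable",
                   strengths="Anticipates a counterargument"),
    SinglePointRow("Claims are supported by evidence",
                   concerns="Section 2 relies on one source"),
]
print(render(rows))
```

There is no score to compute here; the value of the format lies in the written notes on each side, which is why it can become time-consuming for large classes.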

Best Practices for Designing and Implementing Rubrics

When designing the rubric format, descriptors and criteria should be presented in a way that is compatible with screen readers and reading assistive technology. For example, avoid using only color, jargon, or complex terminology to convey information. In case you do use color, pictures or graphics, try providing alternative formats for rubrics, such as plain text documents. Explore resources from the CU Digital Accessibility Office to learn more.

Co-creating rubrics can help students engage in higher-order thinking skills such as analysis and evaluation. Further, it allows students to take ownership of their learning by determining the criteria their work should aspire to. For graduate classes or upper-level students, one way of doing this may be to provide the learning outcomes of the project and let students develop the rubric on their own. However, students in introductory classes may need more scaffolding, such as a draft rubric that leaves room for modification (Stevens & Levi, 2013). Watch the linked video for tips on co-creating rubrics with students. Further, involving teaching assistants in designing a rubric can help in getting feedback on expectations for an assessment before implementing and norming the rubric.

When first designing a rubric, it is important to compare grades awarded for the same assessment by multiple graders, to make sure the criteria are applied uniformly and reliably to the same level of performance. Also check that the rubric adequately distinguishes between levels of performance in student work. Such a norming protocol is particularly important at the start of any course in which multiple graders use the same rubric to grade an assessment (e.g., recitation sections, lab sections, a teaching team). Here, instructors may select a subset of assignments that all graders evaluate using the same rubric, followed by a discussion to identify any discrepancies in how criteria were applied and ways to address them. Such strategies can make rubrics more reliable, effective, and clear.
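A norming session like this can be supported by a quick tally of where graders disagree. The sketch below uses simple percent exact agreement, which is an assumption on our part: the guide does not prescribe a statistic, and chance-corrected measures such as Cohen's kappa are a common alternative. The assignment IDs, criteria, and ratings are made-up example data.

```python
# Minimal sketch of a norming check: two graders rate the same sample of
# assignments, and disagreements are flagged for discussion.
# Percent exact agreement is an assumed metric; the data are made up.

def compare_graders(grader_a, grader_b):
    """Return (percent exact agreement, list of disagreements).

    Each argument maps (assignment_id, criterion) -> performance level.
    Only items rated by both graders are compared.
    """
    shared = sorted(set(grader_a) & set(grader_b))
    if not shared:
        raise ValueError("graders share no rated items")
    disagreements = [(key, grader_a[key], grader_b[key])
                     for key in shared if grader_a[key] != grader_b[key]]
    agreement = 100 * (len(shared) - len(disagreements)) / len(shared)
    return agreement, disagreements


grader_a = {("essay1", "organization"): 3, ("essay1", "evidence"): 4,
            ("essay2", "organization"): 2, ("essay2", "evidence"): 3}
grader_b = {("essay1", "organization"): 3, ("essay1", "evidence"): 3,
            ("essay2", "organization"): 2, ("essay2", "evidence"): 3}

pct, diffs = compare_graders(grader_a, grader_b)
print(f"{pct:.1f}% exact agreement")  # 75.0% exact agreement
for (essay, criterion), level_a, level_b in diffs:
    print(f"{essay} / {criterion}: grader A = {level_a}, grader B = {level_b}")
```

The flagged items are the natural starting point for the norming discussion, since they show which criterion descriptors graders are reading differently.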

Sharing the rubric with students prior to an assessment can help familiarize students with an instructor’s expectations. This can help students master their learning outcomes by guiding their work in the appropriate direction and increase student motivation. Further, providing the rubric to students can help encourage metacognition and ability to self-assess learning.

Sample Rubrics

Below are links to rubric templates designed by a team of experts assembled by the Association of American Colleges and Universities (AAC&U) to assess 16 major learning goals. These goals are a part of the Valid Assessment of Learning in Undergraduate Education (VALUE) program. All of these examples are analytic rubrics and have detailed criteria to test specific skills. However, since any given assessment typically tests multiple skills, instructors are encouraged to develop their own rubric by utilizing criteria picked from a combination of the rubrics linked below.

- Civic knowledge and engagement-local and global

- Creative thinking

- Critical thinking

- Ethical reasoning

- Foundations and skills for lifelong learning

- Information literacy

- Integrative and applied learning

- Intercultural knowledge and competence

- Inquiry and analysis

- Oral communication

- Problem solving

- Quantitative literacy

- Written communication

Note: Clicking on the above links will automatically download them to your device in Microsoft Word format. These links have been created and are hosted by Kansas State University. Additional information regarding the VALUE Rubrics may be found on the AAC&U homepage.

Below are links to sample rubrics that have been developed for different types of assessments. These rubrics follow the analytic rubric template, unless mentioned otherwise. However, they can be modified into other types of rubrics (e.g., checklist, holistic, or single point rubrics) based on the grading system and goal of assessment (e.g., formative or summative). As mentioned previously, these rubrics can be modified using the blank templates provided.

- Oral presentations

- Painting Portfolio (single-point rubric)

- Research Paper

- Video Storyboard

Additional information:

Office of Assessment and Curriculum Support. (n.d.). Creating and using rubrics. University of Hawai’i, Mānoa.

Calkins, C., & Winkelmes, M. A. (2018). A teaching method that boosts UNLV student retention. UNLV Best Teaching Practices Expo, 3.

Fraile, J., Panadero, E., & Pardo, R. (2017). Co-creating rubrics: The effects on self-regulated learning, self-efficacy and performance of establishing assessment criteria with students. Studies in Educational Evaluation, 53, 69-76.

Haugnes, N., & Russell, J. L. (2016). Don’t box me in: Rubrics for artists and designers. To Improve the Academy, 35(2), 249-283.

Jonsson, A. (2014). Rubrics as a way of providing transparency in assessment. Assessment & Evaluation in Higher Education, 39(7), 840-852.

McCartin, L. (2022, February 1). Rubrics! An equity-minded practice. University of Northern Colorado.

Shapiro, S., Farrelly, R., & Tomaš, Z. (2023). Chapter 4: Effective and equitable assignments and assessments. In Fostering international student success in higher education (2nd ed., pp. 61-87). TESOL Press.

Stevens, D. D., & Levi, A. J. (2013). Introduction to rubrics: An assessment tool to save grading time, convey effective feedback, and promote student learning (2nd ed.). Sterling, VA: Stylus.

Teaching Commons. (n.d.). Types of rubrics. DePaul University.

Teaching Resources. (n.d.). Rubric best practices, examples, and templates. NC State University.

Winkelmes, M., Bernacki, M., Butler, J., Zochowski, M., Golanics, J., & Weavil, K. H. (2016). A teaching intervention that increases underserved college students’ success. Peer Review, 18(1/2), 31-36.

Weisz, C., Richard, D., Oleson, K., Winkelmes, M. A., Powley, C., Sadik, A., & Stone, B. (in progress, 2023). Transparency, confidence, belonging and skill development among 400 community college students in the state of Washington.

Association of American Colleges and Universities. (2009). Valid Assessment of Learning in Undergraduate Education (VALUE).

Canvas Community. (2021, August 24). How do I add a rubric in a course? Canvas LMS Community.

Center for Teaching & Learning. (2021, March 3). Overview of rubrics. University of Colorado, Boulder.

Center for Teaching & Learning. (2021, March 18). Best practices to co-create rubrics with students. University of Colorado, Boulder.

Chase, D., Ferguson, J. L., & Hoey, J. J. (2014). Assessment in creative disciplines: Quantifying and qualifying the aesthetic. Common Ground Publishing.

Feldman, J. (2018). Grading for equity: What it is, why it matters, and how it can transform schools and classrooms. Corwin Press, CA.

Gradescope. (n.d.). Instructor: Assignment - Grade submissions. Gradescope Help Center.

Henning, G., Baker, G., Jankowski, N., Lundquist, A., & Montenegro, E. (Eds.). (2022). Reframing assessment to center equity. Stylus Publishing.

King, P. M., & Baxter Magolda, M. B. (2005). A developmental model of intercultural maturity. Journal of College Student Development, 46(6), 571-592.

Selke, M. J. G. (2013). Rubric assessment goes to college: Objective, comprehensive evaluation of student work. Lanham, MD: Rowman & Littlefield.

The Institute for Habits of Mind. (2023, January 9). Creativity rubrics. The Institute for Habits of Mind.


Presentation Rubric for a College Project

We seem to have an unavoidable relationship with public speaking throughout our lives. From our kindergarten years, when our presentations are nothing more than a few seconds of reciting cute words in front of our class…

...till our grown up years, when things get a little more serious, and the success of our presentations may determine getting funds for our business, or obtaining an academic degree when defending our thesis.

By the time we reach our mid-20s, we become worryingly used to evaluations based on our presentations. Yet, for some reason, we’re rarely told the traits upon which we are being evaluated. Most colleges and business schools, for instance, use a PowerPoint presentation rubric to evaluate their students. Funny thing is, they’re not usually that open about sharing it with their students (as if that would do any harm!).

What is a presentation rubric?

A presentation rubric is a systematic and standardized tool used to evaluate and assess the quality and effectiveness of a presentation. It provides a structured framework for instructors, evaluators, or peers to assess various aspects of a presentation, such as content, delivery, organization, and overall performance. Presentation rubrics are commonly used in educational settings, business environments, and other contexts where presentations are a key form of communication.

A typical presentation rubric includes a set of criteria and a scale for rating or scoring each criterion. The criteria are specific aspects or elements of the presentation that are considered essential for a successful presentation. The scale assigns a numerical value or descriptive level to each criterion, ranging from poor or unsatisfactory to excellent or outstanding.

Common criteria found in presentation rubrics may include:

- Content: This criterion assesses the quality and relevance of the information presented. It looks at factors like accuracy, depth of knowledge, use of evidence, and the clarity of key messages.

- Organization: Organization evaluates the structure and flow of the presentation. It considers how well the introduction, body, and conclusion are structured and whether transitions between sections are smooth.

- Delivery: Delivery assesses the presenter's speaking skills, including vocal tone, pace, clarity, and engagement with the audience. It also looks at nonverbal communication, such as body language and eye contact.

- Visual Aids: If visual aids like slides or props are used, this criterion evaluates their effectiveness, relevance, and clarity. It may also assess the design and layout of visual materials.

- Audience Engagement: This criterion measures the presenter's ability to connect with the audience, maintain their interest, and respond to questions or feedback.

- Time Management: Time management assesses whether the presenter stayed within the allotted time for the presentation. Going significantly over or under the time limit can affect the overall effectiveness of the presentation.

- Creativity and Innovation: In some cases, rubrics may include criteria related to the creative and innovative aspects of the presentation, encouraging presenters to think outside the box.

- Overall Impact: This criterion provides an overall assessment of the presentation's impact on the audience, considering how well it achieved its intended purpose and whether it left a lasting impression.

“We’re used to giving presentations, yet we’re rarely told the traits upon which we’re being evaluated.”

Well, we don’t believe in shutting down information. Quite the contrary: we think the best way to practice your speech is to know exactly what is being tested! By evaluating each trait separately, you can:

- Acknowledge the complexity of public speaking, which goes far beyond subject knowledge.

- Address your weaker spots, and work on them to improve your presentation as a whole.

I’ve assembled a simple Presentation Rubric, based on a great document by NC State University, and I've also added a few rows of my own, so you can evaluate your presentation in pretty much any scenario!

What is tested in this PowerPoint presentation rubric?

The Rubric covers 7 traits, which are as follows:

Now let's break down each trait so you can understand what they mean, and how to assess each one:

Presentation Rubric

How to use this Rubric

The Rubric is pretty self-explanatory, so I'm just gonna give you some ideas as to how to use it. The ideal scenario is to ask someone else to listen to your presentation and evaluate you with it. The less that person knows you, or what your presentation is about, the better.

WONDERING WHAT YOUR SCORE MAY INDICATE?

- 21-28 Fan-bloody-tastic!

- 14-21 Looking good, but you can do better

- 7-14 Uhmmm, you ain't at all ready
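The score bands above imply 7 traits whose totals run from 7 to 28, which is consistent with each trait being rated from 1 to 4; that per-trait scale is our assumption, since the rubric table itself is not reproduced here. The bands as listed also share their endpoints (21 and 14 each appear twice), so this minimal sketch assigns a boundary total to the higher band. Adjust both choices to match the actual rubric.

```python
# Minimal sketch: total a 7-trait self-evaluation and look up its score band.
# Assumes each trait is rated 1-4 and that boundary totals (21, 14) belong
# to the higher band.

BANDS = [
    (21, "Fan-bloody-tastic!"),
    (14, "Looking good, but you can do better"),
    (7, "Uhmmm, you ain't at all ready"),
]


def interpret(scores):
    """Return (total, band message) for a list of 7 trait scores."""
    if len(scores) != 7:
        raise ValueError("expected one score per trait (7 traits)")
    if any(not 1 <= s <= 4 for s in scores):
        raise ValueError("each trait is assumed to be rated 1-4")
    total = sum(scores)
    for minimum, message in BANDS:
        if total >= minimum:
            return total, message
    # Unreachable: seven scores of at least 1 always total 7 or more.
    raise AssertionError("total below the lowest band")


print(interpret([3, 3, 3, 3, 3, 3, 3]))  # (21, 'Fan-bloody-tastic!')
print(interpret([2, 3, 2, 2, 1, 2, 2]))  # (14, 'Looking good, but you can do better')
```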

As we don't always have someone to rehearse our presentations with, a great way to use the Rubric is to record yourself (this is not Hollywood material, so an iPhone video will do!), watch the video afterwards, and evaluate your presentation on your own. You'll be surprised by how different your perception of yourself is from how you come across on video.

It will be fairly easy to evaluate each trait! The mere exercise of reading the Presentation Rubric is an excellent study of presentation best practices.

If you're struggling with any particular trait, I suggest you take a look at our Academy Channel where we discuss how to improve each trait in detail!

It's not always easy to objectively assess our own speaking skills. So the next time you have a big presentation coming up, use this Rubric to put yourself to the test!

Need support for your presentation? Build awesome slides using our very own Slidebean .


How to Use Rubrics

A rubric is a document that describes the criteria by which students’ assignments are graded. Rubrics can be helpful for:

- Making grading faster and more consistent (reducing potential bias).

- Communicating your expectations for an assignment to students before they begin.

Moreover, for assignments whose criteria are more subjective, the process of creating a rubric and articulating what it looks like to succeed at an assignment provides an opportunity to check for alignment with the intended learning outcomes and modify the assignment prompt, as needed.

Why rubrics?

Rubrics are best for assignments or projects that require evaluation on multiple dimensions. Creating a rubric makes the instructor’s standards explicit to both students and other teaching staff for the class, showing students how to meet expectations.

Additionally, the more comprehensive a rubric is, the more it streamlines grading: students get informative feedback about their performance from the rubric, even without many individualized comments. Grading can also be more standardized and efficient across graders.

Finally, rubrics allow for reflection, as the instructor has to think about their standards and outcomes for the students. Using rubrics can help with self-directed learning in students as well, especially if rubrics are used to review students’ own work or their peers’, or if students are involved in creating the rubric.

How to design a rubric

1. Consider the desired learning outcomes

What learning outcomes is this assignment reinforcing and assessing? If the learning outcome seems “fuzzy,” iterate on the outcome by thinking about the expected student work product. This may help you more clearly articulate the learning outcome in a way that is measurable.

2. Define criteria

What does a successful assignment submission look like? As described by Allen and Tanner (2006), it can help to develop an initial list of categories in which the student should demonstrate proficiency by completing the assignment. These categories should correlate with the intended learning outcomes you identified in Step 1, although they may be more granular in some cases. For example, if the task assesses students’ ability to formulate an effective communication strategy, what components of their communication strategy will you be looking for? Talking with colleagues or looking at existing rubrics for similar tasks may give you ideas for categories to consider for evaluation.

If you have assigned this task to students before and have samples of student work, it can help to create a qualitative observation guide. This approach is described in Linda Suskie’s book Assessing Student Learning, where she suggests thinking about what made you decide to give one assignment an A and another a C, as well as taking notes while grading assignments and looking for common patterns. The themes you comment on most often may reveal your goals and expectations for students. An example of an observation guide used to take notes on predetermined areas of an assignment is shown here.

In summary, consider the following list of questions when defining criteria for a rubric (O’Reilly and Cyr, 2006):

- What do you want students to learn from the task?

- How will students demonstrate that they have learned?

- What knowledge, skills, and behaviors are required for the task?

- What steps are required for the task?

- What are the characteristics of the final product?

After developing an initial list of criteria, prioritize the most important skills you want to target, and either eliminate unessential criteria or combine similar skills into one group. Most rubrics have between 3 and 8 criteria. Rubrics that are too long are difficult to grade with and make it challenging for students to understand the key skills they need to demonstrate in the assignment.

3. Create the rating scale

According to Suskie, you will want at least 3 performance levels: at minimum, one for adequate and one for inadequate performance, plus an exemplary level to motivate students to strive for even better work. Rubrics often contain 5 levels, with an additional level between adequate and exemplary and another between adequate and inadequate. Usually no more than 5 levels are needed, as having too many rating levels can make it hard to decide consistently which rating to give an assignment (such as a 6 versus a 7 out of 10). Suskie also suggests labeling each level with a name to clarify which level represents the minimum acceptable performance. Labels will vary by assignment and subject, but some examples are:

- Exceeds standard, meets standard, approaching standard, below standard

- Complete evidence, partial evidence, minimal evidence, no evidence

4. Fill in descriptors

Fill in descriptors for each criterion at each performance level. Expand on the list of criteria you developed in Step 2. Begin to write full descriptions, thinking about what an exemplary example would look like for students to strive towards. Avoid vague terms like “good” and make sure to use explicit, concrete terms to describe what would make a criterion good. For instance, a criterion called “organization and structure” would be more descriptive than “writing quality.” Describe measurable behavior and use parallel language for clarity; the wording for each criterion should be very similar, except for the degree to which standards are met. For example, in a sample rubric from Chapter 9 of Suskie’s book, the criterion of “persuasiveness” has the following descriptors:

- Well Done (5): Motivating questions and advance organizers convey the main idea. Information is accurate.

- Satisfactory (3-4): Includes persuasive information.

- Needs Improvement (1-2): Includes persuasive information with few facts.

- Incomplete (0): Information is incomplete, out of date, or incorrect.

These sample descriptors generally have the same sentence structure that provides consistent language across performance levels and shows the degree to which each standard is met.

5. Test your rubric

Test your rubric on a range of student work to see whether it is realistic. You may also consider leaving room for aspects of the assignment such as effort, originality, and creativity, to encourage students to go beyond the rubric. If multiple instructors will be grading, it is important to calibrate the scoring: have all graders use the rubric to grade a selected set of student work, then discuss any differences in their scores. This process helps develop consistency in grading and makes the grading more valid and reliable.
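One simple way to check whether a calibration session is working is to compare the levels two graders assigned to the same set of work. The sketch below computes percent exact agreement and percent agreement within one level, and flags items where the graders differ by more than one level as candidates for discussion. These particular measures, the function name `agreement`, and the sample scores are hypothetical choices for illustration; the section does not prescribe a statistic, and more formal measures (such as Cohen’s kappa) exist.

```python
def agreement(grader_a: list[int], grader_b: list[int]) -> tuple[float, float]:
    """Return (exact, adjacent) agreement between two graders as fractions 0-1.

    Hypothetical helper: exact = share of items given the same level;
    adjacent = share of items whose levels differ by at most one.
    """
    if len(grader_a) != len(grader_b) or not grader_a:
        raise ValueError("need two equal-length, non-empty lists of levels")
    pairs = list(zip(grader_a, grader_b))
    exact = sum(a == b for a, b in pairs) / len(pairs)
    adjacent = sum(abs(a - b) <= 1 for a, b in pairs) / len(pairs)
    return exact, adjacent


# Hypothetical levels (1-5) that two graders gave the same eight presentations.
a = [5, 4, 3, 4, 2, 5, 3, 1]
b = [5, 3, 3, 4, 4, 5, 2, 1]

exact, adjacent = agreement(a, b)
# Items where the graders are more than one level apart are worth discussing.
to_discuss = [i for i, (x, y) in enumerate(zip(a, b)) if abs(x - y) > 1]

print(f"exact={exact:.1%}, within one level={adjacent:.1%}")
print("discuss items:", to_discuss)
```

Here the graders match exactly on 5 of 8 items (62.5%) and are within one level on 7 of 8 (87.5%); only the fifth item (index 4) differs by two levels and would be the first to talk through.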

Types of Rubrics

If you would like to dive deeper into rubric terminology, this section is dedicated to discussing some of the different types of rubrics. However, regardless of the type of rubric you use, it’s still most important to focus first on your learning goals and think about how the rubric will help clarify students’ expectations and measure student progress towards those learning goals.

Depending on the nature of the assignment, rubrics can come in several varieties (Suskie, 2009):

Checklist Rubric

This is the simplest kind of rubric. It lists specific features or aspects of the assignment that may be present or absent, and it does not involve creating a rating scale with descriptors. See the example from the 18.821 project-based math class.

Rating Scale Rubric

This is like a checklist rubric, but instead of merely noting the presence or absence of a feature or aspect of the assignment, the grader also rates its quality (often on a graded or Likert-style scale). See the example from the 6.811 assistive technology class.

Descriptive Rubric

A descriptive rubric is like a rating scale rubric, but it also describes what performance at each level of each scale looks like. Descriptive rubrics are particularly useful in communicating instructors’ expectations of performance to students and in creating consistency among multiple graders on an assignment. This kind of rubric is probably what most people think of when they imagine a rubric. See the example from the 15.279 communications class.

Holistic Scoring Guide

Unlike the first 3 types of rubrics, a holistic scoring guide describes performance at different levels (e.g., A-level performance, B-level performance) holistically, without breaking the assignment down into several separate scales. This kind of rubric is particularly useful when there are many assignments to grade and a moderate to high degree of subjectivity in assessing quality. Consistency across scores can be difficult to achieve, and holistic scoring guides are most helpful for making decisions quickly rather than for giving students detailed feedback. See the example from the 11.229 advanced writing seminar.

The kind of rubric that is most appropriate will depend on the assignment in question.

Implementation tips

Rubrics can also be used with Canvas assignments. See this resource from Boston College for more details, along with the guides from Canvas Instructure.

References

Allen, D., & Tanner, K. (2006). Rubrics: Tools for Making Learning Goals and Evaluation Criteria Explicit for Both Teachers and Learners. CBE—Life Sciences Education, 5(3), 197–203. doi:10.1187/cbe.06-06-0168

Cherie Miot Abbanat. 11.229 Advanced Writing Seminar. Spring 2004. Massachusetts Institute of Technology: MIT OpenCourseWare, https://ocw.mit.edu. License: Creative Commons BY-NC-SA.

Haynes Miller, Nat Stapleton, Saul Glasman, and Susan Ruff. 18.821 Project Laboratory in Mathematics. Spring 2013. Massachusetts Institute of Technology: MIT OpenCourseWare, https://ocw.mit.edu. License: Creative Commons BY-NC-SA.

Lori Breslow and Terence Heagney. 15.279 Management Communication for Undergraduates. Fall 2012. Massachusetts Institute of Technology: MIT OpenCourseWare, https://ocw.mit.edu. License: Creative Commons BY-NC-SA.

O’Reilly, L., & Cyr, T. (2006). Creating a Rubric: An Online Tutorial for Faculty. Retrieved from https://www.ucdenver.edu/faculty_staff/faculty/center-for-faculty-development/Documents/Tutorials/Rubrics/index.htm

Suskie, L. (2009). Using a scoring guide or rubric to plan and evaluate an assessment. In Assessing student learning: A common sense guide (2nd ed., pp. 137–154). Jossey-Bass.

William Li, Grace Teo, and Robert Miller. 6.811 Principles and Practice of Assistive Technology. Fall 2014. Massachusetts Institute of Technology: MIT OpenCourseWare, https://ocw.mit.edu. License: Creative Commons BY-NC-SA.


How to design effective rubrics

Rubrics can be effective assessment tools when they are constructed to meet four main criteria: validity, reliability, fairness, and efficiency. For a rubric to be valid and reliable, it must grade only the work presented (reducing the influence of instructor biases), so that anyone using the rubric would arrive at the same grade (Felder and Brent 2016). Fairness ensures that grading is transparent by giving students access to the rubric at the beginning of the assessment, while efficiency is evident when students receive detailed, timely feedback from the rubric after grading (Felder and Brent 2016). Because analytical rubrics are the most informative for student learning (Brookhart 2013), the steps below explain how to construct an analytical rubric.

Five Steps to Design Effective Rubrics

The first step in designing a rubric is determining the content, skills, or tasks you want students to be able to accomplish by completing an assessment (Wormeli 2006). Thus, two main questions need to be answered:

- What do students need to know or do?

- How will the instructor know when the students know or can do it?

Another way to think about this is to decide which of the course’s learning objectives are being evaluated by this assessment (Allen and Tanner 2006, Wormeli 2006). (More information on learning objectives can be found at Teaching@UNL.) Most projects and similar assessments involve more than one area of content or skill, so most rubrics assess more than one learning objective. For example, a project may require students to research a topic (content knowledge learning objective) using digital literacy skills (research learning objective) and to present their findings (communication learning objective). Therefore, it is important to think through all the tasks or skills students will need to complete during an assessment to meet the learning objectives. It also helps to review example rubrics for the specific discipline or task, to find grade-level-appropriate rubrics that can aid in preparing a list of the tasks and activities essential to meeting the learning objectives (Allen and Tanner 2006).

Once the learning objectives and a list of essential tasks aligned to them have been compiled, the next step is to determine the number of criteria for the rubric. Most rubrics have at least three criteria and fewer than a dozen. It is important to remember that as more criteria are added to a rubric, students’ cognitive load increases, making it more difficult for them to remember all the assessment requirements (Allen and Tanner 2006, Wolf et al. 2008). Thus, 3–10 criteria are usually recommended for a rubric (if an assessment has fewer than three criteria, a different format, such as a grade sheet, can be used to convey grading expectations; if a rubric has more than ten criteria, some can be consolidated into a single larger category; Wolf et al. 2008). Once the number of criteria is established, the final step for the criteria aspect of a rubric is creating descriptive titles for each criterion and determining whether some criteria will be weighted and thus be more influential on the grade for the assessment. Once this is accomplished, the left column of the rubric can be designed (Table 1).
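To make the effect of weighting concrete, here is a minimal sketch of how a weighted analytic rubric score could be computed. The criterion names echo the research project example above, but the weights, the 0–4 level scale, and the helper names (`Criterion`, `weighted_percent`) are hypothetical and are not taken from Table 1 or any cited source.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    """One row (criterion) of an analytic rubric; values are hypothetical."""
    name: str
    weight: float   # relative importance; weights need not sum to 1
    max_level: int  # highest performance level; assumes levels run 0..max_level


def weighted_percent(criteria: list[Criterion], levels: dict[str, int]) -> float:
    """Combine per-criterion levels into one percentage score.

    Each level is normalized to 0-1 by its criterion's max_level, then the
    normalized scores are averaged using the weights, so a criterion with
    weight 2 counts twice as much as one with weight 1.
    """
    total_weight = sum(c.weight for c in criteria)
    earned = 0.0
    for c in criteria:
        level = levels[c.name]
        if not 0 <= level <= c.max_level:
            raise ValueError(f"{c.name}: level {level} outside 0..{c.max_level}")
        earned += c.weight * level / c.max_level
    return 100 * earned / total_weight


# Hypothetical rubric: content knowledge weighted twice as heavily.
rubric = [
    Criterion("Content knowledge", weight=2, max_level=4),
    Criterion("Research skills", weight=1, max_level=4),
    Criterion("Communication", weight=1, max_level=4),
]
score = weighted_percent(
    rubric, {"Content knowledge": 3, "Research skills": 4, "Communication": 2}
)
print(score)  # 75.0
```

Normalizing each level by its own maximum lets criteria with different numbers of levels (for instance, one with two levels left blank) be combined on the same footing; an instructor who numbers levels from 1 instead of 0 would need to adjust the normalization.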

The third aspect of rubric design is the levels of performance and the label for each level. It is recommended to have 3–6 levels of performance in a rubric (Allen and Tanner 2006, Wormeli 2006, Wolf et al. 2008). The number of performance levels should depend on how easily the levels can be distinguished from one another (Allen and Tanner 2006). Can the difference in student performance between a “3” and a “4” be readily seen on a five-level rubric? If not, only four levels should be used for all criteria. If most criteria can easily be differentiated with five levels but one criterion is difficult to discern, then two levels could be left blank for that criterion (see the “Research Skills” criterion in Table 1). Note also that having fewer levels makes constructing the rubric faster but may result in ambiguous expectations and make it harder to give students feedback.

Once the number of performance levels is set, assign each level a name or title that indicates the level of performance. When naming the performance levels, avoid terms that are subjective, overly negative, or judgmental (e.g., “Excellent”, “Good”, and “Bad”; Allen and Tanner 2006, Stevens and Levi 2013), and make sure all the terms are the same part of speech (all nouns, all verbs ending in “-ing”, all adjectives, etc.; Wormeli 2006). Examples of different performance-level naming systems include:

- Exemplary, Competent, Not yet competent

- Proficient, Intermediate, Novice

- Strong, Satisfactory, Not yet satisfactory

- Exceeds Expectations, Meets Expectations, Below Expectations

- Proficient, Capable, Adequate, Limited

- Exemplary, Proficient, Acceptable, Unacceptable

- Mastery, Proficient, Apprentice, Novice, Absent

Additionally, the order of the levels needs to be determined: some rubrics increase in proficiency across the levels (lowest, middle, highest performance), while others start with the highest performance level and move toward the lowest (highest, middle, lowest performance).