The 7 Most Useful Data Analysis Methods and Techniques

Data analytics is the process of analyzing raw data to draw out meaningful insights. These insights are then used to determine the best course of action.

When is the best time to roll out that marketing campaign? Is the current team structure as effective as it could be? Which customer segments are most likely to purchase your new product?

Ultimately, data analytics is a crucial driver of any successful business strategy. But how do data analysts actually turn raw data into something useful? There are a range of methods and techniques that data analysts use depending on the type of data in question and the kinds of insights they want to uncover.

You can get a hands-on introduction to data analytics in this free short course.

In this post, we’ll explore some of the most useful data analysis techniques. By the end, you’ll have a much clearer idea of how you can transform meaningless data into business intelligence. We’ll cover:

- What is data analysis and why is it important?

- What is the difference between qualitative and quantitative data?

- Regression analysis

- Monte Carlo simulation

- Factor analysis

- Cohort analysis

- Cluster analysis

- Time series analysis

- Sentiment analysis

- The data analysis process

- The best tools for data analysis

- Key takeaways

The first six methods listed are used for quantitative data, while the last technique applies to qualitative data. We briefly explain the difference between quantitative and qualitative data in section two.

1. What is data analysis and why is it important?

Data analysis is, put simply, the process of discovering useful information by evaluating data. This is done through a process of inspecting, cleaning, transforming, and modeling data using analytical and statistical tools, which we will explore in detail further along in this article.

Why is data analysis important? Analyzing data effectively helps organizations make business decisions. Nowadays, data is collected by businesses constantly: through surveys, online tracking, online marketing analytics, collected subscription and registration data (think newsletters), social media monitoring, among other methods.

These data will appear as different structures, including, but not limited to, the following:

Big data

The concept of big data (data that is so large, fast, or complex that it is difficult or impossible to process using traditional methods) gained momentum in the early 2000s. Then Doug Laney, an industry analyst, articulated what is now known as the mainstream definition of big data as the three Vs: volume, velocity, and variety.

- Volume: As mentioned earlier, organizations are collecting data constantly. In the not-too-distant past it would have been a real issue to store, but nowadays storage is cheap and takes up little space.

- Velocity: Received data needs to be handled in a timely manner. With the growth of the Internet of Things, this can mean these data are coming in constantly, and at an unprecedented speed.

- Variety: The data being collected and stored by organizations comes in many forms, ranging from structured data—that is, more traditional, numerical data—to unstructured data—think emails, videos, audio, and so on. We’ll cover structured and unstructured data a little further on.

Metadata

This is a form of data that provides information about other data, such as an image. In everyday life you’ll find this by, for example, right-clicking on a file in a folder and selecting “Get Info”, which will show you information such as file size and kind, date of creation, and so on.

Real-time data

This is data that is presented as soon as it is acquired. A good example of this is a stock market ticker, which provides information on the most-active stocks in real time.

Machine data

This is data that is produced wholly by machines, without human instruction. An example of this could be call logs automatically generated by your smartphone.

Quantitative and qualitative data

Quantitative data, otherwise known as structured data, may appear as a “traditional” database, that is, with rows and columns. Qualitative data, otherwise known as unstructured data, are the other types of data that don’t fit into rows and columns, which can include text, images, videos and more. We’ll discuss this further in the next section.

2. What is the difference between quantitative and qualitative data?

How you analyze your data depends on the type of data you’re dealing with: quantitative or qualitative. So what’s the difference?

Quantitative data is anything measurable, comprising specific quantities and numbers. Some examples of quantitative data include sales figures, email click-through rates, number of website visitors, and percentage revenue increase. Quantitative data analysis techniques focus on the statistical, mathematical, or numerical analysis of (usually large) datasets. This includes the manipulation of statistical data using computational techniques and algorithms. Quantitative analysis techniques are often used to explain certain phenomena or to make predictions.

Qualitative data cannot be measured objectively, and is therefore open to more subjective interpretation. Some examples of qualitative data include comments left in response to a survey question, things people have said during interviews, tweets and other social media posts, and the text included in product reviews. With qualitative data analysis, the focus is on making sense of unstructured data (such as written text, or transcripts of spoken conversations). Often, qualitative analysis will organize the data into themes, a process which, fortunately, can be automated.

Data analysts work with both quantitative and qualitative data, so it’s important to be familiar with a variety of analysis methods. Let’s take a look at some of the most useful techniques now.

3. Data analysis techniques

Now we’re familiar with some of the different types of data, let’s focus on the topic at hand: different methods for analyzing data.

a. Regression analysis

Regression analysis is used to estimate the relationship between a set of variables. When conducting any type of regression analysis, you’re looking to see if there’s a correlation between a dependent variable (the variable or outcome you want to measure or predict) and any number of independent variables (factors which may have an impact on the dependent variable). The aim of regression analysis is to estimate how one or more variables might impact the dependent variable, in order to identify trends and patterns. This is especially useful for making predictions and forecasting future trends.

Let’s imagine you work for an ecommerce company and you want to examine the relationship between: (a) how much money is spent on social media marketing, and (b) sales revenue. In this case, sales revenue is your dependent variable: it’s the factor you’re most interested in predicting and boosting. Social media spend is your independent variable; you want to determine whether or not it has an impact on sales and, ultimately, whether it’s worth increasing, decreasing, or keeping the same.

Using regression analysis, you’d be able to see if there’s a relationship between the two variables. A positive correlation would imply that the more you spend on social media marketing, the more sales revenue you make. No correlation at all might suggest that social media marketing has no bearing on your sales. Understanding the relationship between these two variables would help you to make informed decisions about the social media budget going forward.

However, it’s important to note that, on their own, regressions can only be used to determine whether or not there is a relationship between a set of variables; they don’t tell you anything about cause and effect. So, while a positive correlation between social media spend and sales revenue may suggest that one impacts the other, it’s impossible to draw definitive conclusions based on this analysis alone.

There are many different types of regression analysis, and the model you use depends on the type of data you have for the dependent variable. For example, your dependent variable might be continuous (i.e. something that can be measured on a continuous scale, such as sales revenue in USD), in which case you’d use a different type of regression analysis than if your dependent variable was categorical in nature (i.e. comprising values that can be categorized into a number of distinct groups based on a certain characteristic, such as customer location by continent). You can learn more about different types of dependent variables and how to choose the right regression analysis in this guide.
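To make the social media example concrete, here is a minimal sketch of a simple linear regression with one independent variable, fitted by ordinary least squares. The spend and revenue figures are invented for illustration, and `fit_line` is a hypothetical helper rather than part of any particular library.

```python
import math

def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares.

    Also returns the Pearson correlation coefficient r.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / math.sqrt(sxx * syy)
    return slope, intercept, r

# Hypothetical monthly figures, both in thousands of USD.
social_spend = [1.0, 2.0, 3.0, 4.0, 5.0]      # independent variable
sales_revenue = [12.0, 15.0, 19.0, 22.0, 27.0]  # dependent variable

slope, intercept, r = fit_line(social_spend, sales_revenue)
print(f"revenue = {slope:.2f} * spend + {intercept:.2f} (r = {r:.3f})")
```

A slope above zero together with an r close to 1 indicates a strong positive relationship in this sample. As noted above, it does not show that the spend caused the revenue.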

Regression analysis in action: Investigating the relationship between clothing brand Benetton’s advertising expenditure and sales

b. Monte Carlo simulation

When making decisions or taking certain actions, there are a range of different possible outcomes. If you take the bus, you might get stuck in traffic. If you walk, you might get caught in the rain or bump into your chatty neighbor, potentially delaying your journey. In everyday life, we tend to briefly weigh up the pros and cons before deciding which action to take; however, when the stakes are high, it’s essential to calculate, as thoroughly and accurately as possible, all the potential risks and rewards.

Monte Carlo simulation, otherwise known as the Monte Carlo method, is a computerized technique used to generate models of possible outcomes and their probability distributions. It essentially considers a range of possible outcomes and then calculates how likely it is that each particular outcome will be realized. The Monte Carlo method is used by data analysts to conduct advanced risk analysis, allowing them to better forecast what might happen in the future and make decisions accordingly.

So how does Monte Carlo simulation work, and what can it tell us? To run a Monte Carlo simulation, you’ll start with a mathematical model of your data, such as a spreadsheet. Within your spreadsheet, you’ll have one or several outputs that you’re interested in; profit, for example, or number of sales. You’ll also have a number of inputs; these are variables that may impact your output variable. If you’re looking at profit, relevant inputs might include the number of sales, total marketing spend, and employee salaries.

If you knew the exact, definitive values of all your input variables, you’d quite easily be able to calculate what profit you’d be left with at the end. However, when these values are uncertain, a Monte Carlo simulation enables you to calculate all the possible options and their probabilities. What will your profit be if you make 100,000 sales and hire five new employees on a salary of $50,000 each? What is the likelihood of this outcome? What will your profit be if you only make 12,000 sales and hire five new employees? And so on.

It does this by replacing all uncertain values with functions which generate random samples from distributions determined by you, and then running a series of calculations and recalculations to produce models of all the possible outcomes and their probability distributions. The Monte Carlo method is one of the most popular techniques for calculating the effect of unpredictable variables on a specific output variable, making it ideal for risk analysis.
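The profit example can be sketched in a few lines. Everything numeric here is an assumption made for illustration: the margin per sale, the marketing range, and the choice of triangular and uniform distributions are not from the case above, only the 12,000 to 100,000 sales range and the $50,000 salary are.

```python
import random
import statistics

def simulate_profit(rng):
    """Draw one random scenario and return the resulting profit in USD."""
    # Uncertain inputs, each replaced by a random draw (assumed distributions).
    sales = rng.triangular(12_000, 100_000, 40_000)  # low, high, most likely
    new_hires = rng.randint(0, 5)                    # 0 to 5 inclusive
    marketing_spend = rng.uniform(50_000, 150_000)
    # Fixed assumptions for this sketch.
    margin_per_sale = 10.0
    salary = 50_000
    return sales * margin_per_sale - new_hires * salary - marketing_spend

def run_simulation(n_trials=10_000, seed=42):
    """Repeat the calculation many times to approximate the profit distribution."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    return [simulate_profit(rng) for _ in range(n_trials)]

profits = run_simulation()
mean_profit = statistics.mean(profits)
prob_loss = sum(p < 0 for p in profits) / len(profits)
print(f"mean profit: {mean_profit:,.0f} USD, probability of a loss: {prob_loss:.1%}")
```

The list of simulated profits is the output distribution. Summaries such as the mean or the share of loss-making scenarios are read directly from it.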

Monte Carlo simulation in action: A case study using Monte Carlo simulation for risk analysis

c. Factor analysis

Factor analysis is a technique used to reduce a large number of variables to a smaller number of factors. It works on the basis that multiple separate, observable variables correlate with each other because they are all associated with an underlying construct. This is useful not only because it condenses large datasets into smaller, more manageable samples, but also because it helps to uncover hidden patterns. This allows you to explore concepts that cannot be easily measured or observed—such as wealth, happiness, fitness, or, for a more business-relevant example, customer loyalty and satisfaction.

Let’s imagine you want to get to know your customers better, so you send out a rather long survey comprising one hundred questions. Some of the questions relate to how they feel about your company and product; for example, “Would you recommend us to a friend?” and “How would you rate the overall customer experience?” Other questions ask things like “What is your yearly household income?” and “How much are you willing to spend on skincare each month?”

Once your survey has been sent out and completed by lots of customers, you end up with a large dataset that essentially tells you one hundred different things about each customer (assuming each customer gives one hundred responses). Instead of looking at each of these responses (or variables) individually, you can use factor analysis to group them into factors that belong together; in other words, to relate them to a single underlying construct. In this example, factor analysis works by finding survey items that are strongly correlated. The degree to which two items vary together is known as covariance. So, if there’s a strong positive correlation between household income and how much they’re willing to spend on skincare each month (i.e. as one increases, so does the other), these items may be grouped together. Together with other variables (survey responses), you may find that they can be reduced to a single factor such as “consumer purchasing power”. Likewise, if a customer experience rating of 10/10 correlates strongly with “yes” responses regarding how likely they are to recommend your product to a friend, these items may be reduced to a single factor such as “customer satisfaction”.

In the end, you have a smaller number of factors rather than hundreds of individual variables. These factors are then taken forward for further analysis, allowing you to learn more about your customers (or any other area you’re interested in exploring).
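The sketch below covers only the first step described above: finding survey items that are strongly correlated. It is not a full factor analysis, which goes on to extract factors from the correlation matrix, usually with a statistics package. The four survey items, the six responses, and the 0.8 threshold are all invented for illustration.

```python
import math
from itertools import combinations

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical responses from six customers (one list per survey item).
survey = {
    "household_income": [30, 45, 60, 80, 100, 120],  # thousands per year
    "skincare_budget": [20, 25, 40, 55, 70, 90],      # per month
    "experience_rating": [9, 3, 7, 10, 4, 8],         # out of 10
    "would_recommend": [1, 0, 1, 1, 0, 1],            # 1 = yes, 0 = no
}

# Items whose correlation is strong are candidates for the same factor.
THRESHOLD = 0.8  # assumed cut-off for "strongly correlated"
strong_pairs = [
    (a, b)
    for a, b in combinations(survey, 2)
    if abs(pearson(survey[a], survey[b])) >= THRESHOLD
]
print(strong_pairs)
```

With this data, income pairs with skincare budget (a candidate "consumer purchasing power" factor) and experience rating pairs with willingness to recommend (a candidate "customer satisfaction" factor).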

Factor analysis in action: Using factor analysis to explore customer behavior patterns in Tehran

d. Cohort analysis

Cohort analysis is a data analytics technique that groups users based on a shared characteristic, such as the date they signed up for a service or the product they purchased. Once users are grouped into cohorts, analysts can track their behavior over time to identify trends and patterns.

So what does this mean and why is it useful? Let’s break down the above definition further. A cohort is a group of people who share a common characteristic (or action) during a given time period. Students who enrolled at university in 2020 may be referred to as the 2020 cohort. Customers who purchased something from your online store via the app in the month of December may also be considered a cohort.

With cohort analysis, you’re dividing your customers or users into groups and looking at how these groups behave over time. So, rather than looking at a single, isolated snapshot of all your customers at a given moment in time (with each customer at a different point in their journey), you’re examining your customers’ behavior in the context of the customer lifecycle. As a result, you can start to identify patterns of behavior at various points in the customer journey—say, from their first ever visit to your website, through to email newsletter sign-up, to their first purchase, and so on. As such, cohort analysis is dynamic, allowing you to uncover valuable insights about the customer lifecycle.

This is useful because it allows companies to tailor their service to specific customer segments (or cohorts). Let’s imagine you run a 50% discount campaign in order to attract potential new customers to your website. Once you’ve attracted a group of new customers (a cohort), you’ll want to track whether they actually buy anything and, if they do, whether or not (and how frequently) they make a repeat purchase. With these insights, you’ll start to gain a much better understanding of when this particular cohort might benefit from another discount offer or retargeting ads on social media, for example. Ultimately, cohort analysis allows companies to optimize their service offerings (and marketing) to provide a more targeted, personalized experience. You can learn more about how to run cohort analysis using Google Analytics.
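As a rough illustration of tracking repeat purchases per cohort, the sketch below groups customers by signup month and computes what share of each cohort bought something in each month since signing up. The event records and the `retention_table` helper are hypothetical.

```python
from collections import defaultdict

# Hypothetical purchase events: (customer_id, signup_month, purchase_month).
events = [
    ("a", "2024-01", "2024-01"),
    ("a", "2024-01", "2024-02"),
    ("b", "2024-01", "2024-01"),
    ("c", "2024-02", "2024-02"),
    ("c", "2024-02", "2024-03"),
    ("d", "2024-02", "2024-03"),
]

def month_index(year_month):
    """Convert 'YYYY-MM' to a running month count so months can be subtracted."""
    year, month = year_month.split("-")
    return int(year) * 12 + int(month)

def retention_table(events):
    """Return {cohort: {months_since_signup: share of cohort that purchased}}."""
    cohort_members = defaultdict(set)
    active = defaultdict(set)  # (cohort, offset) -> customers who purchased
    for customer, signup, purchase in events:
        cohort_members[signup].add(customer)
        offset = month_index(purchase) - month_index(signup)
        active[(signup, offset)].add(customer)
    table = {}
    for (cohort, offset), customers in active.items():
        share = len(customers) / len(cohort_members[cohort])
        table.setdefault(cohort, {})[offset] = share
    return table

table = retention_table(events)
for cohort, shares in sorted(table.items()):
    print(cohort, shares)
```

Reading across a row shows how one cohort behaves over its lifecycle, and reading down a column compares cohorts at the same point in their journey.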

Cohort analysis in action: How Ticketmaster used cohort analysis to boost revenue

e. Cluster analysis

Cluster analysis is an exploratory technique that seeks to identify structures within a dataset. The goal of cluster analysis is to sort different data points into groups (or clusters) that are internally homogeneous and externally heterogeneous. This means that data points within a cluster are similar to each other, and dissimilar to data points in another cluster. Clustering is used to gain insight into how data is distributed in a given dataset, or as a preprocessing step for other algorithms.

There are many real-world applications of cluster analysis. In marketing, cluster analysis is commonly used to group a large customer base into distinct segments, allowing for a more targeted approach to advertising and communication. Insurance firms might use cluster analysis to investigate why certain locations are associated with a high number of insurance claims. Another common application is in geology, where experts will use cluster analysis to evaluate which cities are at greatest risk of earthquakes (and thus try to mitigate the risk with protective measures).

It’s important to note that, while cluster analysis may reveal structures within your data, it won’t explain why those structures exist. With that in mind, cluster analysis is a useful starting point for understanding your data and informing further analysis. Clustering algorithms are also used in machine learning; you can learn more about clustering in machine learning in our guide.
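The text above does not name a specific algorithm, so the following sketch uses k-means, one common choice, to show what "internally homogeneous and externally heterogeneous" groups look like in practice. The customer points and the starting centroids are invented. Production work would normally rely on a tested library implementation.

```python
import math

def k_means(points, initial_centroids, max_iters=100):
    """Basic k-means: assign each point to its nearest centroid, then re-center."""
    centroids = list(initial_centroids)
    clusters = []
    for _ in range(max_iters):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for point in points:
            nearest = min(
                range(len(centroids)),
                key=lambda i: math.dist(point, centroids[i]),
            )
            clusters[nearest].append(point)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster))
            if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:  # no movement, so the result is stable
            break
        centroids = new_centroids
    return centroids, clusters

# Hypothetical customers as (orders per year, average basket size) pairs.
customers = [(1.0, 2.0), (2.0, 1.0), (1.5, 1.5), (8.0, 9.0), (9.0, 8.0), (8.5, 8.5)]
centroids, clusters = k_means(customers, initial_centroids=[customers[0], customers[3]])
print(centroids)
print(clusters)
```

The algorithm separates the low-activity and high-activity customers, but, as noted above, it says nothing about why those two groups exist.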

Cluster analysis in action: Using cluster analysis for customer segmentation—a telecoms case study example

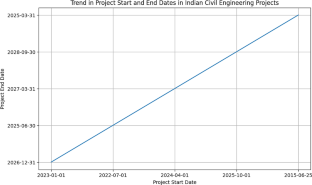

f. Time series analysis

Time series analysis is a statistical technique used to identify trends and cycles over time. Time series data is a sequence of data points which measure the same variable at different points in time (for example, weekly sales figures or monthly email sign-ups). By looking at time-related trends, analysts are able to forecast how the variable of interest may fluctuate in the future.

When conducting time series analysis, the main patterns you’ll be looking out for in your data are:

- Trends: Stable, linear increases or decreases over an extended time period.

- Seasonality: Predictable fluctuations in the data due to seasonal factors over a short period of time. For example, you might see a peak in swimwear sales in summer around the same time every year.

- Cyclic patterns: Unpredictable cycles where the data fluctuates. Cyclical trends are not due to seasonality, but rather, may occur as a result of economic or industry-related conditions.

As you can imagine, the ability to make informed predictions about the future has immense value for business. Time series analysis and forecasting is used across a variety of industries, most commonly for stock market analysis, economic forecasting, and sales forecasting. There are different types of time series models depending on the data you’re using and the outcomes you want to predict. These models are typically classified into three broad types: the autoregressive (AR) models, the integrated (I) models, and the moving average (MA) models. For an in-depth look at time series analysis, refer to our guide.
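A simple way to see a trend in time series data is to smooth it with a trailing moving average, as sketched below. Note that this smoothing technique is a different thing from the moving average (MA) model class mentioned above, which models a series in terms of past forecast errors. The weekly sales figures are made up.

```python
def moving_average(series, window):
    """Trailing moving average: mean of each run of `window` consecutive values."""
    return [
        sum(series[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(series))
    ]

# Hypothetical weekly sales figures (same variable, measured once per week).
weekly_sales = [10, 12, 11, 13, 15, 14, 16, 18]

trend = moving_average(weekly_sales, window=4)
print(trend)  # [11.5, 12.75, 13.25, 14.5, 15.75]
```

The smoothed values rise steadily even though the raw series dips in weeks three and six, which is the kind of underlying trend the analysis is looking for.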

Time series analysis in action: Developing a time series model to predict jute yarn demand in Bangladesh

g. Sentiment analysis

When you think of data, your mind probably automatically goes to numbers and spreadsheets.

Many companies overlook the value of qualitative data, but in reality, there are untold insights to be gained from what people (especially customers) write and say about you. So how do you go about analyzing textual data?

One highly useful qualitative technique is sentiment analysis, a technique which belongs to the broader category of text analysis: the (usually automated) process of sorting and understanding textual data.

With sentiment analysis, the goal is to interpret and classify the emotions conveyed within textual data. From a business perspective, this allows you to ascertain how your customers feel about various aspects of your brand, product, or service.

There are several different types of sentiment analysis models, each with a slightly different focus. The three main types include:

Fine-grained sentiment analysis

If you want to focus on opinion polarity (i.e. positive, neutral, or negative) in depth, fine-grained sentiment analysis will allow you to do so.

For example, if you wanted to interpret star ratings given by customers, you might use fine-grained sentiment analysis to categorize the various ratings along a scale ranging from very positive to very negative.

Emotion detection

This model often uses complex machine learning algorithms to pick out various emotions from your textual data.

You might use an emotion detection model to identify words associated with happiness, anger, frustration, and excitement, giving you insight into how your customers feel when writing about you or your product on, say, a product review site.

Aspect-based sentiment analysis

This type of analysis allows you to identify what specific aspects the emotions or opinions relate to, such as a certain product feature or a new ad campaign.

If a customer writes that they “find the new Instagram advert so annoying”, your model should detect not only a negative sentiment, but also the object towards which it’s directed.

In a nutshell, sentiment analysis uses various Natural Language Processing (NLP) algorithms and systems which are trained to associate certain inputs (for example, certain words) with certain outputs.

For example, the input “annoying” would be recognized and tagged as “negative”. Sentiment analysis is crucial to understanding how your customers feel about you and your products, for identifying areas for improvement, and even for averting PR disasters in real-time!
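The idea of associating input words with output labels can be sketched with a tiny hand-written lexicon. This is a deliberately simplified rule-based stand-in: the systems described above are typically trained on labelled examples rather than given a fixed word list, and the words and scores in `LEXICON` are invented for illustration.

```python
import re

# Hypothetical lexicon mapping words to a polarity score.
LEXICON = {
    "love": 1, "great": 1, "excellent": 1,
    "annoying": -1, "terrible": -1, "slow": -1,
}

def classify_sentiment(text):
    """Sum the polarity of known words and map the total to a label."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(LEXICON.get(word, 0) for word in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(classify_sentiment("I find the new Instagram advert so annoying"))
```

A word-counting approach like this misses negation and sarcasm ("not great" scores as positive), which is one reason trained models are preferred in practice.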

Sentiment analysis in action: 5 Real-world sentiment analysis case studies

4. The data analysis process

In order to gain meaningful insights from data, data analysts will perform a rigorous step-by-step process. We go over this in detail in our step-by-step guide to the data analysis process. To briefly summarize, the data analysis process generally consists of the following phases:

Defining the question

The first step for any data analyst will be to define the objective of the analysis, sometimes called a ‘problem statement’. Essentially, you’re asking a question with regards to a business problem you’re trying to solve. Once you’ve defined this, you’ll then need to determine which data sources will help you answer this question.

Collecting the data

Now that you’ve defined your objective, the next step will be to set up a strategy for collecting and aggregating the appropriate data. Will you be using quantitative (numeric) or qualitative (descriptive) data? Do these data fit into first-party, second-party, or third-party data?

Learn more: Quantitative vs. Qualitative Data: What’s the Difference?

Cleaning the data

Unfortunately, your collected data isn’t automatically ready for analysis—you’ll have to clean it first. As a data analyst, this phase of the process will take up the most time. During the data cleaning process, you will likely be:

- Removing major errors, duplicates, and outliers

- Removing unwanted data points

- Structuring the data—that is, fixing typos, layout issues, etc.

- Filling in major gaps in data
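The cleaning tasks listed above might look like the following on a small set of order records. The records, the `max_amount` outlier rule, and the choice to fill gaps with the median are all assumptions for the sake of the example. The right rules depend on the dataset and the question being asked.

```python
import statistics

# Hypothetical raw order records with a duplicate, a gap, and an outlier.
raw_orders = [
    {"id": 1, "amount": 20.0},
    {"id": 1, "amount": 20.0},    # duplicate of the first record
    {"id": 2, "amount": None},    # missing value
    {"id": 3, "amount": 22.0},
    {"id": 4, "amount": 5000.0},  # implausibly large for this shop
    {"id": 5, "amount": 18.0},
]

def clean_orders(records, max_amount):
    """Drop duplicate ids, drop amounts above max_amount, fill gaps with the median."""
    seen_ids = set()
    deduped = []
    for record in records:
        if record["id"] in seen_ids:
            continue
        seen_ids.add(record["id"])
        deduped.append(dict(record))  # copy so the raw data stays untouched
    kept = [r for r in deduped if r["amount"] is None or r["amount"] <= max_amount]
    known_amounts = [r["amount"] for r in kept if r["amount"] is not None]
    fill_value = statistics.median(known_amounts)
    for record in kept:
        if record["amount"] is None:
            record["amount"] = fill_value
    return kept

cleaned = clean_orders(raw_orders, max_amount=1000.0)
print(cleaned)
```

Working on copies keeps the original records intact, so any cleaning decision can be revisited later.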

Analyzing the data

Now that we’ve finished cleaning the data, it’s time to analyze it! Many analysis methods have already been described in this article, and it’s up to you to decide which one will best suit the assigned objective. It may fall under one of the following categories:

- Descriptive analysis, which identifies what has already happened

- Diagnostic analysis, which focuses on understanding why something has happened

- Predictive analysis, which identifies future trends based on historical data

- Prescriptive analysis, which allows you to make recommendations for the future

Visualizing and sharing your findings

We’re almost at the end of the road! Analyses have been made and insights have been gleaned; all that remains to be done is to share this information with others. This is usually done with a data visualization tool, such as Google Charts or Tableau.

Learn more: 13 of the Most Common Types of Data Visualization


5. The best tools for data analysis

As you can imagine, every phase of the data analysis process requires the data analyst to have a variety of tools under their belt that assist in gaining valuable insights from data. We cover these tools in greater detail in this article, but, in summary, here’s our best-of-the-best list:

The top 9 tools for data analysts

- Microsoft Excel

- Jupyter Notebook

- Apache Spark

- Microsoft Power BI

6. Key takeaways and further reading

As you can see, there are many different data analysis techniques at your disposal. In order to turn your raw data into actionable insights, it’s important to consider what kind of data you have (is it qualitative or quantitative?) as well as the kinds of insights that will be useful within the given context. In this post, we’ve introduced seven of the most useful data analysis techniques—but there are many more out there to be discovered!

So what now? If you haven’t already, we recommend reading the case studies for each analysis technique discussed in this post (you’ll find a link at the end of each section). For a more hands-on introduction to the kinds of methods and techniques that data analysts use, try out this free introductory data analytics short course. In the meantime, you might also want to read the following:

- The Best Online Data Analytics Courses for 2024

- What Is Time Series Data and How Is It Analyzed?

- What is Spatial Analysis?

Qualitative case study data analysis: an example from practice

Affiliation: School of Nursing and Midwifery, National University of Ireland, Galway, Republic of Ireland.

- PMID: 25976531

- DOI: 10.7748/nr.22.5.8.e1307

Aim: To illustrate an approach to data analysis in qualitative case study methodology.

Background: There is often little detail in case study research about how data were analysed. However, it is important that comprehensive analysis procedures are used because there are often large sets of data from multiple sources of evidence. Furthermore, the ability to describe in detail how the analysis was conducted ensures rigour in reporting qualitative research.

Data sources: The research example used is a multiple case study that explored the role of the clinical skills laboratory in preparing students for the real world of practice. Data analysis was conducted using a framework guided by the four stages of analysis outlined by Morse ( 1994 ): comprehending, synthesising, theorising and recontextualising. The specific strategies for analysis in these stages centred on the work of Miles and Huberman ( 1994 ), which has been successfully used in case study research. The data were managed using NVivo software.

Review methods: Literature examining qualitative data analysis was reviewed and strategies illustrated by the case study example provided.

Discussion: Each stage of the analysis framework is described with illustration from the research example for the purpose of highlighting the benefits of a systematic approach to handling large data sets from multiple sources.

Conclusion: By providing an example of how each stage of the analysis was conducted, it is hoped that researchers will be able to consider the benefits of such an approach to their own case study analysis.

Implications for research/practice: This paper illustrates specific strategies that can be employed when conducting data analysis in case study research and other qualitative research designs.

Keywords: Case study data analysis; case study research methodology; clinical skills research; qualitative case study methodology; qualitative data analysis; qualitative research.

- Case-Control Studies*

- Data Interpretation, Statistical*

- Nursing Research / methods*

- Qualitative Research*

- Research Design

Qualitative Data Analysis Methods

In the following, we will discuss basic approaches to analyzing data in all six of the acceptable qualitative designs.

After reviewing the information in this document, you will be able to:

- Recognize the terms for data analysis methods used in the various acceptable designs.

- Recognize the data preparation tasks that precede actual analysis in all the designs.

- Understand the basic analytic methods used by the respective qualitative designs.

- Identify and apply the methods required by your selected design.

Terms Used in Data Analysis by the Six Designs

Each qualitative research approach or design has its own terms for methods of data analysis:

- Ethnography: uses modified thematic analysis and life histories.

- Case study: uses description, categorical aggregation, or direct interpretation.

- Grounded theory: uses open, axial, and selective coding (although recent writers are proposing variations on those basic analysis methods).

- Phenomenology: describes textures and structures of the essential meaning of the lived experience of the phenomenon.

- Heuristics: uses patterns, themes, and creative synthesis along with individual portraits.

- Generic qualitative inquiry: uses thematic analysis, which is really a foundation for all the other analytic methods. Thematic analysis is the starting point for the other five, and the endpoint for generic qualitative inquiry. Because it is the basic or foundational method, we'll take it first.

Preliminary Tasks in Analysis in all Methods

In all the approaches—case study, grounded theory, generic inquiry, and phenomenology—there are preliminary tasks that must be performed prior to the analysis itself. For each, you will need to:

- Arrange for secure storage of original materials. Storage should be secure and guaranteed to protect the privacy and confidentiality of the participants' information and identities.

- Transcribe interviews or otherwise transform raw data into usable formats.

- Make master copies and working copies of all materials. Master copies should be kept securely with the original data. Working copies will be marked up, torn apart, and used heavily: make plenty.

- Arrange secure passwords or other protection for all electronic data and copies.

- When ready to begin, read all the transcripts repeatedly—at least three times—for a sense of the whole. Don't force it—allow the participants' words to speak to you.

These tasks are done in all forms of qualitative analysis. Now let's look specifically at generic qualitative inquiry.

Data Analysis in Generic Qualitative Inquiry: Thematic Analysis

The primary tool for conducting the analysis of data when using the generic qualitative inquiry approach is thematic analysis, a flexible analytic method for deriving the central themes from verbal data. A thematic analysis can also be used to conduct analysis of the qualitative data in some types of case study.

Thematic analysis essentially creates theme-statements for ideas or categories of ideas (codes) that the researcher extracts from the words of the participants.

There are two main types of thematic analysis:

- Inductive thematic analysis, in which the data are interpreted inductively, that is, without bringing in any preselected theoretical categories.

- Theoretical thematic analysis, in which the participants' words are interpreted according to categories or constructs from the existing literature.

Analytic Steps in Thematic Analysis: Reading

Remember that the last preliminary task listed above was to read the transcripts for a sense of the whole. In this discussion, we'll assume you're working with transcribed data, usually from interviews. You can apply each step, with changes, to any kind of qualitative data. Now, before you start analyzing, take the first transcript and read it again, as many times as necessary, for a sense of what this participant told you about the topic of your study. If you're using other sources of data, spend time with them holistically.

Thematic Analysis: Steps in the Process

When you have a feel for the data:

- Underline any passages (phrases, sentences, or paragraphs) that appear meaningful to you. Don't make any interpretations yet! Review the underlined data.

- Decide if the underlined data are relevant to the research question and cross out or delete all data unrelated to the research question. Some information in the transcript may be interesting but unrelated to the research question.

- Create a name or "code" for each remaining underlined passage (expression or meaning unit) that focuses on one single idea. The code should:

- Be briefer than the passage.

- Sum up its meaning.

- Be supported by the meaning unit (the participant's words).

- Find codes that recur; cluster these together. Now begin the interpretation, but only with the understanding that the codes or patterns may shift and change during the process of analysis.

- After you have developed the clusters or patterns of codes, name each pattern. The pattern name is a theme. Use language supported by the original data in the language of your discipline and field.

- Write a brief description of each theme. Use brief direct quotations from the transcript to show the reader how the patterns emerged from the data.

- Compose a paragraph integrating all the themes you developed from the individual's data.

- Repeat this process for each participant, the "within-participant" analysis.

- Finally, integrate all themes from all participants in "across-participants" analysis, showing what general themes are found across all the data.
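The bookkeeping behind the within-participant and across-participants steps can be sketched in a few lines of Python. This is only an illustration: the participants, codes, and code-to-theme mapping below are hypothetical, and the interpretive work of coding and naming themes is assumed to have been done by the researcher already.

```python
from collections import Counter, defaultdict

# Hypothetical hand-coded meaning units: (participant, code) pairs.
coded_units = [
    ("P1", "heavy workload"),
    ("P1", "peer support"),
    ("P1", "lack of feedback"),
    ("P2", "peer support"),
    ("P2", "lack of feedback"),
    ("P3", "heavy workload"),
    ("P3", "lack of feedback"),
]

# Hypothetical researcher-defined clustering of codes into named themes.
code_to_theme = {
    "heavy workload": "Job strain",
    "lack of feedback": "Job strain",
    "peer support": "Sources of support",
}

def within_participant(units, mapping):
    """Count how often each theme appears in each participant's data."""
    themes = defaultdict(Counter)
    for participant, code in units:
        themes[participant][mapping[code]] += 1
    return themes

def across_participants(per_participant):
    """Count, for each theme, the number of participants who mention it."""
    spread = Counter()
    for counts in per_participant.values():
        spread.update(counts.keys())
    return spread

per_participant = within_participant(coded_units, code_to_theme)
theme_spread = across_participants(per_participant)
print(dict(theme_spread))
```

Counts like these only help organize the material; they do not replace the written theme descriptions and supporting quotations the steps call for.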

Some variation of thematic analysis will appear in most of the other forms of qualitative data analysis, but the other methods tend to be more complex. Let's look at them one at a time. If you are already clear as to which approach or design your study will use, you can skip to the appropriate section below.

Ethnographic Data Analysis

Ethnographic data analysis relies on a modified thematic analysis. It is called modified because it combines standard thematic analysis as previously described for interview data with modified thematic methods applied to artifacts, observational notes, and other non-interview data.

Depending on the kinds of data to be interpreted (for instance, pictures and historical documents), ethnographers devise unique ways to find patterns or themes in the data. Finally, the themes must be integrated across all sources and kinds of data to arrive at a composite thematic picture of the culture.

(Adapted from Bogdan and Taylor, 1975; Taylor and Bogdan, 1998; Aronson, 1994.)

Data Analysis in Grounded Theory

Going beyond the descriptive and interpretive goals of many other qualitative models, grounded theory's goal is building a theory. It seeks explanation, not simply description.

It uses a constant comparison method of data analysis that begins as soon as the researcher starts collecting data. Each data collection event (for example, an interview) is analyzed immediately, and later data collection events can be modified to seek more information on emerging themes.

In other words, analysis goes on during each step of the data collection, not merely after data collection.

The heart of the grounded theory analysis is coding, which is analogous to but more rigorous than coding in thematic analysis.

Coding in Grounded Theory Method

There are three different types of coding used in a sequential manner.

- The first type of coding is open coding, which is like basic coding in thematic analysis. During open coding, the researcher:

- Performs a line-by-line analysis (or sentence or paragraph analysis) of the data.

- Labels and categorizes the dimensions or aspects of the phenomenon being studied.

- Uses memos to describe the categories that are found.

- The second type of coding is axial coding, which involves finding links between categories and subcategories found in the open coding.

- The open codes are examined for their relationships: cause and effect, co-occurrence, and so on.

- The goal here is to picture how the various dimensions or categories of data interact with one another in time and space.

- The third type of coding is selective coding, which identifies a core category and relates the categories subsidiary to this core.

- Selective coding selects the main phenomenon (the core category) around which subsidiary phenomena (all other categories) are grouped. It involves arranging the groupings, studying the results, and rearranging where the data require it.
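One small, mechanical part of axial coding, checking which open codes co-occur, can be sketched as follows. The segments and code labels are hypothetical, and a co-occurrence count is only a prompt for the researcher's judgment about how categories relate; it is not a substitute for that judgment.

```python
from collections import Counter
from itertools import combinations

# Hypothetical open codes assigned to each transcript segment.
segments = [
    {"time pressure", "skipped testing"},
    {"time pressure", "skipped testing", "defects"},
    {"skipped testing", "defects"},
    {"mentoring"},
]

def co_occurrence(coded_segments):
    """Count how many segments contain each unordered pair of codes."""
    counts = Counter()
    for codes in coded_segments:
        # Sorting gives every pair one canonical (alphabetical) order.
        for pair in combinations(sorted(codes), 2):
            counts[pair] += 1
    return counts

pairs = co_occurrence(segments)
for pair, n in pairs.most_common():
    print(pair, n)
```

Pairs that appear together often are candidates for a relationship worth examining in the data itself, such as cause and effect.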

The Final Stages of Grounded Theory Analysis, after Coding

From selective coding, the grounded theory researcher develops:

- A model of the process, which is the description of which actions and interactions occur in a sequence or series.

- A transactional system, which is the description of how the interactions of different events explain the phenomenon being investigated.

- A conditional matrix, which is diagrammed to help consider the conditions and consequences related to the phenomenon under study.

These three essentially tell the story of the outcome of the research. In other words, the findings of a grounded theory study are the description of the process by which the phenomenon seems to happen, the transactional system supporting it, and the conditional matrix that pictures the explanation of the phenomenon.

(Adapted from Corbin and Strauss, 2008; Strauss and Corbin, 1990, 1998.)

Data Analysis in Qualitative Case Study: Background

There are a few points to consider in analyzing case study data:

- Analysis can be:

- Holistic—the entire case.

- Embedded—a specific aspect of the case.

- Multiple sources and kinds of data must be collected and analyzed.

- Data must be collected, analyzed, and described about both:

- The contexts of the case (its social, political, economic contexts, its affiliations with other organizations or cases, and so on).

- The setting of the case (geography, location, physical grounds, or set-up, business organization, etc.).

Qualitative Case Study Data Analysis Methods

Data analysis is detailed in description and consists of an analysis of themes. Especially for interview or documentary analysis, thematic analysis can be used (see the section on generic qualitative inquiry). A typical format for data analysis in a case study consists of the following phases:

- Description: This entails developing a detailed description of each instance of the case and its setting. The words "instance" and "case" can be confusing. Let's say we're conducting a case study of gay and lesbian members of large urban evangelical Christian congregations in the Southeast. The case would be all such people and their congregations. Instances of the case would be any individual person or congregation. In this phase, all the congregations (the settings) and their larger contexts would be described in detail, along with the individuals who are interviewed or observed.

- Categorical Aggregation: This involves seeking a collection of themes from the data, hoping that relevant meaning about lessons to be learned about the case will emerge. Using our example, a kind of thematic analysis from all the data would be performed, looking for common themes.

- Direct Interpretation: By looking at the single instance or member of the case and drawing meaning from it without looking for multiple instances, direct interpretation pulls the data apart and puts it together in more meaningful ways. Here, the interviews with all the gay and lesbian congregation members would be subjected to thematic analysis or some other form of analysis for themes.

- Within-Case Analysis: This would identify the themes that emerge from the data collected from each instance of the case, including connections between or among the themes. These themes would be further developed using verbatim passages and direct quotation to elucidate each theme. This would serve as the summary of the thematic analysis for each individual participant.

- Cross-Case Analysis: This phase develops a thematic analysis across cases as well as assertions and interpretations of the meaning of the themes emerging from all participants in the study.

- Interpretive Phase: The final phase creates naturalistic generalizations from the data as a whole and reports on the lessons learned from the case study.

(Adapted from Creswell, 1998; Stake, 1995.)

Data Analysis in Phenomenological Research

There are a few existing models of phenomenological research, and they each propose slightly different methods of data analysis. They all arrive at the same goal, however. The goal of phenomenological analysis is to describe the essence or core structures and textures of some conscious psychological experience. One such model, empirical phenomenology, was developed at Duquesne University. This method of analysis consists of five essential steps and represents the other variations well. Whichever model is chosen, those wishing to conduct phenomenological research must abide by its procedures. Empirical phenomenology is presented here as an example.

- Sense of the whole. One reads the entire description in order to get a general sense of the whole statement. This often takes a few readings, which should be approached contemplatively.

- Discrimination of meaning units. Once the sense of the whole has been grasped, the researcher returns to the beginning and reads through the text once more, delineating each transition in meaning.

- The researcher adopts a psychological perspective to do this. This means that the researcher looks for shifts in psychological meaning.

- The researcher focuses on the phenomenon being investigated. This means that the researcher keeps in mind the study's topic and looks for meaningful passages related to it.

- The researcher next eliminates redundancies and unrelated meaning units.

- Transformation of subjects' everyday expressions (meaning units) into psychological language. Once meaning units have been delineated,

- The researcher reflects on each of the meaning units, which are still expressed in the concrete language of the participants, and describes the essence of the statement for the participant.

- The researcher makes these descriptions in the language of psychological science.

- Synthesis of transformed meaning units into a consistent statement of the structure of the experience.

- Using imaginative variation on these transformed meaning units, the researcher discovers what remains unchanged when variations are imaginatively applied, and

- From this develops a consistent statement regarding the structure of the participant's experience.

- The researcher completes this process for each transcript in the study.

- Final synthesis. Finally, the researcher synthesizes all of the statements regarding each participant's experience into one consistent statement that describes and captures the essence of the experience being studied.

(Adapted from Giorgi, 1985, 1997; Giorgi and Giorgi, 2003.)

Data Analysis in Heuristics

Six steps typically characterize the heuristic process of data analysis:

- Initial engagement.

- Immersion.

- Incubation.

- Illumination.

- Explication.

- Creative synthesis.

To start, place all the material drawn from one participant before you (recordings, transcriptions, journals, notes, poems, artwork, and so on). This material may either be data gathered by self-search or by interviews with co-researchers.

- Immerse yourself fully in the material until you are aware of and understand everything that is before you.

- Incubate the material. Put the material aside for a while. Let it settle in you. Live with it but without particular attention or focus. Return to the immersion process. Make notes where they would enable you to remember or classify the material. Continue this rhythm of working with the data and resting until an illumination or essential configuration emerges. From your core or global sense, list the essential components or patterns and themes that characterize the fundamental nature and meaning of the experience. Reflectively study the patterns and themes, dwell inside them, and develop a full depiction of the experience. The depiction must include the essential components of the experience.

- Illustrate the depiction of the experience with verbatim samples, poems, stories, or other materials to highlight and accentuate the person's lived experience.

- Return to the raw material of your co-researcher (participant). Does your depiction of the experience fit the data from which you have developed it? Does it contain all that is essential?

- Develop a full reflective depiction of the experience, one that characterizes the participant's experience and reflects core meanings for the individuals as a whole. Include in the depiction verbatim samples, poems, stories, and the like to highlight and accentuate the lived nature of the experience. This depiction will serve as the creative synthesis, which will combine the themes and patterns into a representation of the whole in an aesthetically pleasing way. This synthesis will communicate the essence of the lived experience under inquiry. The synthesis is more than a summary: it is like a chemical reaction, a creation anew.

- Return to the data and develop a portrait of the person in such a way that the phenomenon and the person emerge as real.

(Adapted from Douglass and Moustakas, 1985; Moustakas, 1990.)

Bogdan, R., & Taylor, S. J. (1975). Introduction to qualitative research methods: A phenomenological approach (3rd ed.). New York, NY: Wiley.

Corbin, J., & Strauss, A. (2008). Basics of qualitative research: Techniques and procedures for developing grounded theory (3rd ed.). Los Angeles, CA: Sage.

Creswell, J. W. (1998). Qualitative inquiry and research design: Choosing among five traditions. Thousand Oaks, CA: Sage.

Douglass, B. G., & Moustakas, C. (1985). Heuristic inquiry: The internal search to know. Journal of Humanistic Psychology, 25(3), 39–55.

Giorgi, A. (Ed.). (1985). Phenomenology and psychological research. Pittsburgh, PA: Duquesne University Press.

Giorgi, A. (1997). The theory, practice and evaluation of phenomenological methods as a qualitative research procedure. Journal of Phenomenological Psychology, 28, 235–260.

Giorgi, A. P., & Giorgi, B. M. (2003). The descriptive phenomenological psychological method. In P. M. Camic, J. E. Rhodes, & L. Yardley (Eds.), Qualitative research in psychology: Expanding perspectives in methodology and design (pp. 243–273). Washington, DC: American Psychological Association.

Moustakas, C. (1990). Heuristic research: Design, methodology, and applications. Newbury Park, CA: Sage.

Stake, R. E. (1995). The art of case study research. Thousand Oaks, CA: Sage.

Strauss, A., & Corbin, J. (1990). Basics of qualitative research: Grounded theory procedures and techniques. Newbury Park, CA: Sage.

Strauss, A., & Corbin, J. (1998). Basics of qualitative research: Techniques and theory for developing grounded theory (2nd ed.). Thousand Oaks, CA: Sage.

Taylor, S. J., & Bogdan, R. (1998). Introduction to qualitative research methods: A guidebook and resource (3rd ed.). New York, NY: Wiley.


Case Study Research in Software Engineering: Guidelines and Examples by Per Runeson, Martin Höst, Austen Rainer, Björn Regnell


DATA ANALYSIS AND INTERPRETATION

5.1 INTRODUCTION

Once data has been collected, the focus shifts to analysis. In this phase, the data is used to understand what actually happened in the studied case: the researcher works through the details of the case and seeks patterns in the data. Inevitably, some analysis also takes place during data collection, for example when data from an interview is transcribed. The understanding gained in those earlier phases is valid and important, but this chapter focuses on the separate phase that starts after the data has been collected.

Data analysis is conducted differently for quantitative and qualitative data. Sections 5.2–5.5 describe how to analyze qualitative data and how to assess the validity of this type of analysis. In Section 5.6, a short introduction to quantitative analysis methods is given. Since quantitative analysis is covered extensively in textbooks on statistical analysis, and case study research to a large extent relies on qualitative data, this section is kept short.

5.2 ANALYSIS OF DATA IN FLEXIBLE RESEARCH

5.2.1 INTRODUCTION

As case study research is a flexible research method, qualitative data analysis methods are commonly used [176]. The basic objective of the analysis is, as in any other analysis, to derive conclusions from the data, keeping a clear chain of evidence. The chain of evidence means that a reader ...



What Is a Case Study? | Definition, Examples & Methods

Published on May 8, 2019 by Shona McCombes. Revised on November 20, 2023.

A case study is a detailed study of a specific subject, such as a person, group, place, event, organization, or phenomenon. Case studies are commonly used in social, educational, clinical, and business research.

A case study research design usually involves qualitative methods, but quantitative methods are sometimes also used. Case studies are good for describing, comparing, evaluating and understanding different aspects of a research problem.

Table of contents

- When to do a case study

- Step 1: Select a case

- Step 2: Build a theoretical framework

- Step 3: Collect your data

- Step 4: Describe and analyze the case

When to do a case study

A case study is an appropriate research design when you want to gain concrete, contextual, in-depth knowledge about a specific real-world subject. It allows you to explore the key characteristics, meanings, and implications of the case.

Case studies are often a good choice in a thesis or dissertation. They keep your project focused and manageable when you don’t have the time or resources to do large-scale research.

You might use just one complex case study where you explore a single subject in depth, or conduct multiple case studies to compare and illuminate different aspects of your research problem.


Step 1: Select a case

Once you have developed your problem statement and research questions, you should be ready to choose the specific case that you want to focus on. A good case study should have the potential to:

- Provide new or unexpected insights into the subject

- Challenge or complicate existing assumptions and theories

- Propose practical courses of action to resolve a problem

- Open up new directions for future research

Tip: If your research is more practical in nature and aims to simultaneously investigate an issue as you solve it, consider conducting action research instead.

Unlike quantitative or experimental research, a strong case study does not require a random or representative sample. In fact, case studies often deliberately focus on unusual, neglected, or outlying cases which may shed new light on the research problem.

Example of an outlying case study: In the 1960s the town of Roseto, Pennsylvania was discovered to have extremely low rates of heart disease compared to the US average. It became an important case study for understanding previously neglected causes of heart disease.

However, you can also choose a more common or representative case to exemplify a particular category, experience or phenomenon.

Example of a representative case study: In the 1920s, two sociologists used Muncie, Indiana as a case study of a typical American city that supposedly exemplified the changing culture of the US at the time.

Step 2: Build a theoretical framework

While case studies focus more on concrete details than general theories, they should usually have some connection with theory in the field. This way the case study is not just an isolated description, but is integrated into existing knowledge about the topic. It might aim to:

- Exemplify a theory by showing how it explains the case under investigation

- Expand on a theory by uncovering new concepts and ideas that need to be incorporated

- Challenge a theory by exploring an outlier case that doesn’t fit with established assumptions

To ensure that your analysis of the case has a solid academic grounding, you should conduct a literature review of sources related to the topic and develop a theoretical framework. This means identifying key concepts and theories to guide your analysis and interpretation.

Step 3: Collect your data

There are many different research methods you can use to collect data on your subject. Case studies tend to focus on qualitative data using methods such as interviews, observations, and analysis of primary and secondary sources (e.g., newspaper articles, photographs, official records). Sometimes a case study will also collect quantitative data.

Example of a mixed methods case study: For a case study of a wind farm development in a rural area, you could collect quantitative data on employment rates and business revenue, collect qualitative data on local people’s perceptions and experiences, and analyze local and national media coverage of the development.

The aim is to gain as thorough an understanding as possible of the case and its context.

Step 4: Describe and analyze the case

In writing up the case study, you need to bring together all the relevant aspects to give as complete a picture as possible of the subject.

How you report your findings depends on the type of research you are doing. Some case studies are structured like a standard scientific paper or thesis, with separate sections or chapters for the methods, results and discussion.

Others are written in a more narrative style, aiming to explore the case from various angles and analyze its meanings and implications (for example, by using textual analysis or discourse analysis).

In all cases, though, make sure to give contextual details about the case, connect it back to the literature and theory, and discuss how it fits into wider patterns or debates.


McCombes, S. (2023, November 20). What Is a Case Study? | Definition, Examples & Methods. Scribbr. Retrieved April 4, 2024, from https://www.scribbr.com/methodology/case-study/


10 Real World Data Science Case Studies Projects with Example

Top 10 Data Science Case Studies Projects with Examples and Solutions in Python to inspire your data science learning in 2023.

Data science has been a trending buzzword in recent times. With wide applications in sectors like healthcare, education, retail, transportation, media, and banking, data science is at the core of pretty much every industry out there. The possibilities are endless: analysis of fraud in the finance sector or the personalization of recommendations in eCommerce businesses. We have developed ten exciting data science case studies to explain how data science is leveraged across various industries to make smarter decisions and develop innovative personalized products tailored to specific customers.


Table of Contents

- Data Science Case Studies in Retail

- Data Science Case Study Examples in Entertainment Industry

- Data Analytics Case Study Examples in Travel Industry

- Case Studies for Data Analytics in Social Media

- Real World Data Science Projects in Healthcare

- Data Analytics Case Studies in Oil and Gas

- What Is a Case Study in Data Science

- How Do You Prepare a Data Science Case Study

- 10 Most Interesting Data Science Case Studies with Examples

So, without much ado, let's get started with data science business case studies!

With humble beginnings as a simple discount retailer, Walmart today operates 10,500 stores and clubs in 24 countries, along with eCommerce websites, and employs around 2.2 million people around the globe. For the fiscal year ended January 31, 2021, Walmart's total revenue was $559 billion, showing a growth of $35 billion with the expansion of the eCommerce sector. Walmart is a data-driven company that works on the principle of 'Everyday low cost' for its consumers. To achieve this goal, they heavily depend on the advances of their data science and analytics department for research and development, also known as Walmart Labs. Walmart is home to the world's largest private cloud, which can manage 2.5 petabytes of data every hour! To analyze this humongous amount of data, Walmart has created 'Data Café,' a state-of-the-art analytics hub located within its Bentonville, Arkansas headquarters. The Walmart Labs team heavily invests in building and managing technologies like cloud, data, DevOps, infrastructure, and security.

Walmart is experiencing massive digital growth as the world's largest retailer. Walmart has been leveraging big data and advances in data science to build solutions that enhance, optimize and customize the shopping experience and serve its customers in a better way. At Walmart Labs, data scientists are focused on creating data-driven solutions that power the efficiency and effectiveness of complex supply chain management processes. Here are some of the applications of data science at Walmart:

i) Personalized Customer Shopping Experience

Walmart analyses customer preferences and shopping patterns to optimize the stocking and displaying of merchandise in their stores. Analysis of big data also helps them understand new item sales, decide which products to discontinue, and track the performance of brands.

ii) Order Sourcing and On-Time Delivery Promise

Millions of customers view items on Walmart.com, and Walmart provides each customer a real-time estimated delivery date for the items purchased. Walmart runs a backend algorithm that estimates this based on the distance between the customer and the fulfillment center, inventory levels, and shipping methods available. The supply chain management system determines the optimum fulfillment center based on distance and inventory levels for every order. It also has to decide on the shipping method to minimize transportation costs while meeting the promised delivery date.
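The sourcing decision described above can be sketched as a toy rule. This is a minimal illustration under stated assumptions, not Walmart's algorithm: the `Center` class, the coordinates, the shipping options, and the rule itself (nearest center with enough stock, then the cheapest shipping method that still meets the promised date) are all hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Center:
    name: str
    lat: float
    lon: float
    stock: int  # units of the ordered item on hand

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometres."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))

def pick_center(centers, cust_lat, cust_lon, qty):
    """Nearest center that has at least qty units in stock, or None."""
    eligible = [c for c in centers if c.stock >= qty]
    if not eligible:
        return None
    return min(eligible, key=lambda c: haversine_km(c.lat, c.lon, cust_lat, cust_lon))

def pick_shipping(methods, days_available):
    """Cheapest (name, cost, transit_days) option that arrives in time, or None."""
    feasible = [m for m in methods if m[2] <= days_available]
    return min(feasible, key=lambda m: m[1]) if feasible else None

centers = [Center("A", 0.0, 0.0, 0), Center("B", 10.0, 10.0, 5), Center("C", 1.0, 1.0, 5)]
methods = [("ground", 5.0, 5), ("two_day", 12.0, 2), ("overnight", 25.0, 1)]
chosen = pick_center(centers, 0.0, 0.0, qty=1)
shipping = pick_shipping(methods, days_available=3)
print(chosen.name, shipping[0])
```

A production system would weigh distance, inventory, and cost jointly rather than in two separate steps; the split here just keeps the sketch readable.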


iii) Packing Optimization

Packing optimization, also known as box recommendation, is a daily occurrence in the shipping of items in retail and eCommerce. Whenever items of an order, or of multiple orders placed by the same customer, are picked from the shelf and are ready for packing, Walmart's box recommendation system determines, within a fixed amount of time, the best-sized box to hold all the ordered items with a minimum of in-box space wasted. This is an instance of the Bin Packing Problem, a classic NP-Hard problem familiar to data scientists.
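To make the box-selection idea concrete, here is a deliberately simplified heuristic. It is not Walmart's system, and the box sizes are made up. It picks the smallest box whose volume covers the items' total volume and whose longest side can take the longest item; both checks are necessary but not sufficient for a real three-dimensional fit, which is exactly where the NP-hard packing problem comes in.

```python
def volume(dims):
    """Volume of a (length, width, height) tuple."""
    length, width, height = dims
    return length * width * height

def recommend_box(boxes, items):
    """Return (box_name, wasted_volume) for the smallest plausible box, or None.

    boxes: dict mapping a box name to its (l, w, h) dimensions.
    items: list of (l, w, h) item dimensions.
    Only volume and longest-side checks are made; no 3D arrangement is attempted.
    """
    total = sum(volume(item) for item in items)
    longest = max(max(item) for item in items)
    candidates = [
        (name, dims) for name, dims in boxes.items()
        if volume(dims) >= total and max(dims) >= longest
    ]
    if not candidates:
        return None
    name, dims = min(candidates, key=lambda box: volume(box[1]))
    return name, volume(dims) - total

# Hypothetical box catalogue and order (dimensions in centimetres).
boxes = {"S": (10, 10, 10), "M": (20, 20, 20), "L": (40, 30, 30)}
order = [(10, 10, 5), (15, 5, 5)]
print(recommend_box(boxes, order))
```

Because the heuristic runs in time linear in the number of boxes and items, it fits the "fixed amount of time" constraint, at the price of occasionally recommending a box the items cannot actually be arranged in.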

The Walmart Sales Forecasting Project is a sales prediction case study that shows an application of data science in the real world. It uses historical sales data for 45 Walmart stores located in different regions. Each store contains many departments, and you must build a model to project the sales for each department in each store. The aim of this case study is to create a predictive model of those sales. You can also try your hand at the Inventory Demand Forecasting Data Science Project to develop a machine learning model that forecasts inventory demand accurately based on historical sales data.
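Before building a machine learning model for per-department sales, it helps to have a naive baseline to beat. The sketch below is such a baseline, not the project's solution: it forecasts next week's sales for each (store, department) pair as the mean of the last few observed weeks. The row layout and the numbers are hypothetical.

```python
from collections import defaultdict

def moving_average_forecast(rows, window=3):
    """Forecast next-week sales per (store, dept) as the mean of the last `window` weeks.

    rows: iterable of (store, dept, week, sales) tuples.
    """
    history = defaultdict(list)
    # Sort by week so each series is in chronological order.
    for store, dept, week, sales in sorted(rows, key=lambda r: r[2]):
        history[(store, dept)].append(sales)
    forecasts = {}
    for key, series in history.items():
        recent = series[-window:]
        forecasts[key] = sum(recent) / len(recent)
    return forecasts

# Hypothetical weekly sales records.
rows = [
    (1, 1, 1, 100.0),
    (1, 1, 2, 110.0),
    (1, 1, 3, 120.0),
    (1, 1, 4, 130.0),
    (1, 2, 1, 50.0),
]
forecasts = moving_average_forecast(rows)
print(forecasts)
```

Any learned model should be compared against a baseline like this one on held-out weeks; if it cannot do better, the extra complexity is not paying for itself.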


Amazon is an American multinational technology company based in Seattle, USA. It started as an online bookseller, but today it focuses on eCommerce, cloud computing, digital streaming, and artificial intelligence. It hosts an estimated 1,000,000,000 gigabytes of data across more than 1,400,000 servers. Through its constant innovation in data science and big data, Amazon is always ahead in understanding its customers. Here are a few data analytics case study examples at Amazon:

i) Recommendation Systems

Data science models help Amazon understand customers' needs and recommend products before the customer searches for them; these models use collaborative filtering. Amazon uses data from 152 million customer purchases to help users decide which products to buy. The company generates 35% of its annual sales through its recommendation-based systems (RBS).
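As a rough illustration of collaborative filtering, the sketch below implements a tiny item-based variant: two items are similar when many of the same customers bought both (cosine similarity over buyer sets), and a customer is recommended the items most similar to what they already own. The customers and products are invented, and this is a teaching sketch, not a description of Amazon's production system.

```python
import math
from collections import defaultdict

# Hypothetical purchase history: customer -> set of items bought.
purchases = {
    "ana": {"kettle", "teapot", "mug"},
    "ben": {"kettle", "teapot"},
    "cho": {"teapot", "mug"},
    "dev": {"kettle"},
}

def item_similarity(history):
    """Cosine similarity between items, based on which customers bought them."""
    buyers = defaultdict(set)
    for user, items in history.items():
        for item in items:
            buyers[item].add(user)
    sims = {}
    for a in buyers:
        for b in buyers:
            if a != b:
                overlap = len(buyers[a] & buyers[b])
                sims[(a, b)] = overlap / math.sqrt(len(buyers[a]) * len(buyers[b]))
    return sims

def recommend(user, history, top_n=3):
    """Rank items the user does not own by summed similarity to items they own."""
    sims = item_similarity(history)
    owned = history[user]
    scores = defaultdict(float)
    for (a, b), s in sims.items():
        if a in owned and b not in owned:
            scores[b] += s
    # Highest score first; ties are broken alphabetically for determinism.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, score in ranked[:top_n] if score > 0]

print(recommend("dev", purchases))
```

Recomputing all pairwise similarities on every call is fine for a toy dataset; at scale the similarity table would be precomputed and stored.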

Here is a Recommender System Project to help you build a recommendation system using collaborative filtering.

ii) Retail Price Optimization

Amazon product prices are optimized by a predictive model that determines the best price so that users do not refuse to buy based on price. The model determines the optimal price by considering the customer's likelihood of purchasing the product and how the price will affect the customer's future buying patterns. The price of a product is determined according to your activity on the website, competitors' pricing, product availability, item preferences, order history, expected profit margin, and other factors.
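One simple way to picture this trade-off is to weigh the profit margin at each candidate price against the probability that a customer still buys at that price. The sketch below assumes a logistic demand curve with made-up parameters (`reference_price`, `sensitivity`) and a fixed unit cost; these are illustrative assumptions, not Amazon's model, which would estimate purchase likelihood from the behavioral signals listed above.

```python
from math import exp


def purchase_probability(price, reference_price=50.0, sensitivity=0.1):
    """Hypothetical logistic demand curve: probability falls as price rises."""
    return 1.0 / (1.0 + exp(sensitivity * (price - reference_price)))


def best_price(candidate_prices, unit_cost):
    """Pick the candidate price with the highest expected profit per visitor."""
    def expected_profit(price):
        return (price - unit_cost) * purchase_probability(price)

    return max(candidate_prices, key=expected_profit)


candidates = [30, 40, 50, 60, 70]
print(best_price(candidates, unit_cost=20))  # 50
```

Prices below the optimum sell often but earn little per sale, while prices above it earn more per sale but lose too many buyers.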

Check out this Retail Price Optimization Project to build a Dynamic Pricing Model.

iii) Fraud Detection

As a significant eCommerce business, Amazon remains at high risk of retail fraud. As a preemptive measure, the company collects historical and real-time data for every order. It uses machine learning algorithms to find transactions with a higher probability of being fraudulent. This proactive measure has also helped the company restrict customers with an excessive number of product returns.
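The idea of flagging unusual transactions can be sketched with a plain statistical outlier check. This is a deliberately simple stand-in for the machine learning models mentioned above, not Amazon's method: the function name, the threshold, and the order amounts are assumptions for illustration.

```python
from statistics import mean, stdev


def flag_suspicious(amounts, threshold=2.0):
    """Return indexes of amounts more than `threshold` standard deviations
    away from the mean of the customer's order history."""
    if len(amounts) < 2:
        return []  # not enough history to estimate spread
    mu = mean(amounts)
    sigma = stdev(amounts)
    if sigma == 0:
        return []  # all amounts identical, nothing stands out
    return [i for i, amount in enumerate(amounts)
            if abs(amount - mu) / sigma > threshold]


# Hypothetical order amounts for one customer; the last one is unusual.
orders = [20, 25, 22, 19, 24, 21, 23, 500]
print(flag_suspicious(orders))  # [7]
```

A production system would combine many more signals (device, location, return history) and learn the decision boundary from labeled fraud cases.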

You can look at this Credit Card Fraud Detection Project to implement a fraud detection model to classify fraudulent credit card transactions.

Let us explore data analytics case study examples in the entertainment industry.

Netflix started as a DVD rental service in 1997 and has since expanded into the streaming business. Headquartered in Los Gatos, California, Netflix is the largest content streaming company in the world. Currently, Netflix has over 208 million paid subscribers worldwide, and with thousands of smart devices now supporting streaming, Netflix has around 3 billion hours watched every month. The secret to this massive growth and popularity is Netflix's advanced use of data analytics and recommendation systems to provide personalized and relevant content recommendations to its users. Netflix collects data from over 100 billion events every day. Here are a few examples of data analysis case studies applied at Netflix:

i) Personalized Recommendation System

Netflix uses over 1,300 recommendation clusters based on consumer viewing preferences to provide a personalized experience. The data that Netflix collects from its users includes viewing time, platform searches for keywords, and metadata related to content abandonment, such as pause time, rewinds, and rewatches. Using this data, Netflix can predict what a viewer is likely to watch and give the user a personalized watchlist. Some of the algorithms used by the Netflix recommendation system are the Personalized Video Ranker, the Trending Now ranker, and the Continue Watching ranker.

ii) Content Development using Data Analytics

Netflix uses data science to analyze the behavior and patterns of its users to recognize the themes and categories that the masses prefer to watch. This data is used to produce shows like The Umbrella Academy, Orange Is the New Black, and The Queen's Gambit. These shows may seem like a huge risk, but they are largely based on data analytics, which assured Netflix that they would succeed with its audience. Data analytics is helping Netflix come up with content that its viewers want to watch even before they know they want to watch it.

iii) Marketing Analytics for Campaigns

Netflix uses data analytics to find the right time to launch shows and ad campaigns to have maximum impact on the target audience. Marketing analytics helps come up with different trailers and thumbnails for different groups of viewers. For example, the House of Cards Season 5 trailer with a giant American flag was launched during the American presidential elections, as it would resonate well with the audience.

Here is a Customer Segmentation Project using association rule mining to understand the primary grouping of customers based on various parameters.

In a world where purchasing music is a thing of the past and streaming music is the current trend, Spotify has emerged as one of the most popular streaming platforms. With 320 million monthly users, around 4 billion playlists, and approximately 2 million podcasts, Spotify leads the pack among well-known streaming platforms like Apple Music, Wynk, Songza, and Amazon Music. The success of Spotify has depended mainly on data analytics. By analyzing massive volumes of listener data, Spotify provides real-time and personalized services to its listeners. Most of Spotify's revenue comes from paid premium subscriptions. Here are some examples of data analytics case studies at Spotify that provide enhanced services to its listeners:

i) Personalization of Content using Recommendation Systems

Spotify uses BaRT, or Bandits for Recommendations as Treatments, to generate music recommendations for its listeners in real time. BaRT ignores any song a user listens to for less than 30 seconds. The model is retrained every day to provide updated recommendations. A patent granted to Spotify covers an AI application that identifies a user's musical tastes based on audio signals, gender, age, and accent to make better music recommendations.

Spotify creates daily playlists for its listeners called 'Daily Mixes,' based on their taste profiles. These contain songs the user has added to their playlists or songs by artists the user has included in their playlists. They also include new artists and songs that the user might be unfamiliar with but that might improve the playlist. Similar to these are the weekly 'Release Radar' playlists, which contain newly released songs from artists that the listener follows or has liked before.

ii) Targeted Marketing through Customer Segmentation

Besides using user data to enhance personalized song recommendations, Spotify uses this massive dataset for targeted ad campaigns and personalized service recommendations for its users. Spotify uses ML models to analyze listeners' behavior and group them based on music preferences, age, gender, ethnicity, etc. These insights help the company create ad campaigns for a specific target audience. One of its well-known ad campaigns was the meme-inspired ads for potential target customers, which was a huge success globally.

iii) CNNs for Classification of Songs and Audio Tracks

Spotify builds audio models to evaluate songs and tracks, which helps develop better playlists and recommendations for its users. These models allow Spotify to filter new tracks based on their lyrics and rhythms and recommend them to users who like similar tracks (collaborative filtering). Spotify also uses NLP (natural language processing) to scan articles and blogs and analyze the words used to describe songs and artists. These analytical insights can help group and identify similar artists and songs and leverage them to build playlists.

Here is a Music Recommender System Project for you to start learning. We have listed another music recommendations dataset for you to use for your projects: Dataset1. You can use this dataset of Spotify metadata to classify songs based on artist, mood, and liveliness. Plot histograms and heatmaps to get a better understanding of the dataset. Use classification algorithms like logistic regression and SVM, together with dimensionality reduction techniques such as principal component analysis, to generate valuable insights from the dataset.

Below you will find case studies for data analytics in the travel and tourism industry.

Airbnb was born in 2007 in San Francisco and has since grown to 4 million hosts and 5.6 million listings worldwide, welcoming more than 1 billion guest arrivals in almost every country across the globe. Airbnb is active in every country on the planet except for Iran, Sudan, Syria, and North Korea. That is around 97.95% of the world. Treating data as the voice of its customers, Airbnb uses its large volume of customer reviews and host inputs to understand trends across communities and rate user experiences, and uses these analytics to make informed decisions and build a better business model. The data scientists at Airbnb are developing exciting new solutions to boost the business and find the best mapping between its customers and hosts. Airbnb data servers serve approximately 10 million requests a day and process around one million search queries. This lets Airbnb offer personalized services by creating a perfect match between guests and hosts for a supreme customer experience.

i) Recommendation Systems and Search Ranking Algorithms

Airbnb helps people find 'local experiences' in a place with the help of search algorithms that make searches and listings precise. Airbnb uses a 'listing quality score' to find homes based on proximity to the searched location and previous guest reviews. Airbnb uses deep neural networks to build models that take the guest's earlier stays and area information into account to find a perfect match. The search algorithms are optimized based on guest and host preferences, rankings, pricing, and availability to understand users’ needs and provide the best match possible.

ii) Natural Language Processing for Review Analysis

Airbnb characterizes data as the voice of its customers. Customer and host reviews give direct insight into the experience. Star ratings alone are not a good enough way to understand that experience quantitatively. Hence, Airbnb uses natural language processing to understand reviews and the sentiments behind them. The NLP models are developed using convolutional neural networks.
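To show what scoring the sentiment of a review looks like at its simplest, here is a lexicon-based sketch. It is a much cruder technique than the neural models the article attributes to Airbnb; the word lists, function name, and sample reviews are all hypothetical and chosen only for illustration.

```python
import re

# Tiny illustrative lexicons; a real system would learn these signals from data.
POSITIVE = {"clean", "great", "friendly", "comfortable", "lovely", "helpful"}
NEGATIVE = {"dirty", "noisy", "rude", "broken", "late", "uncomfortable"}


def sentiment_score(review):
    """Score a review in [-1, 1]: (positive - negative) / matched words."""
    words = re.findall(r"[a-z']+", review.lower())
    pos = sum(word in POSITIVE for word in words)
    neg = sum(word in NEGATIVE for word in words)
    total = pos + neg
    return 0.0 if total == 0 else (pos - neg) / total


reviews = [
    "The host was friendly and the flat was clean",
    "Noisy street and a broken heater, but a helpful host",
    "We stayed three nights",
]
for review in reviews:
    print(f"{sentiment_score(review):+.2f}  {review}")
```

A word-counting approach like this misses negation and context ("not clean"), which is one reason learned models are preferred for real review analysis.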

Practice this Sentiment Analysis Project for analyzing product reviews to understand the basic concepts of natural language processing.

iii) Smart Pricing using Predictive Analytics

The Airbnb host community uses the service as a supplementary income. The vacation homes and guest houses rented to customers raise local community earnings, as Airbnb guests stay 2.4 times longer and spend approximately 2.3 times as much money as hotel guests. These earnings have a significant positive impact on the local neighborhood community. Airbnb uses predictive analytics to predict the prices of listings and help hosts set a competitive and optimal price. The overall profitability of an Airbnb host depends on factors like the time invested by the host and responsiveness to changing demand across seasons. The factors that impact real-time smart pricing are the location of the listing, proximity to transport options, season, and amenities available in the neighborhood of the listing.

Here is a Price Prediction Project to help you understand the concept of predictive analysis, which is common in case studies for data analytics.

Uber is the biggest global taxi service provider. As of December 2018, Uber had 91 million monthly active consumers and 3.8 million drivers. Uber completes 14 million trips each day. Uber uses data analytics and big data-driven technologies to optimize its business processes and provide enhanced customer service. The data science team at Uber is constantly exploring futuristic technologies to provide better service. Machine learning and data analytics help Uber make data-driven decisions that enable benefits like ride-sharing, dynamic price surges, better customer support, and demand forecasting. Here are some of the real-world data science projects used by Uber:

i) Dynamic Pricing for Price Surges and Demand Forecasting

Uber prices change at peak hours based on demand. Uber uses surge pricing to encourage more drivers to get on the road and meet the demand from passengers. When prices increase, both the driver and the passenger are informed about the surge in price. Uber uses a patented predictive model for price surging called 'Geosurge'. It is based on the demand for rides and the location.
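The basic intuition behind surge pricing can be sketched as a multiplier that grows with the ratio of ride requests to available drivers in an area. This is a toy rule under stated assumptions, not Uber's Geosurge model: the function name, the cap of 3.0, and the linear relationship are all hypothetical.

```python
def surge_multiplier(ride_requests, available_drivers, cap=3.0):
    """Hypothetical surge rule: scale price with the demand/supply ratio.

    Returns 1.0 when supply covers demand, and never exceeds `cap`.
    """
    if available_drivers <= 0:
        return cap  # no drivers available: apply the maximum multiplier
    ratio = ride_requests / available_drivers
    return round(min(cap, max(1.0, ratio)), 2)


# Hypothetical (requests, drivers) counts for one zone at different times.
for requests, drivers in [(50, 100), (150, 100), (500, 100)]:
    print(requests, drivers, surge_multiplier(requests, drivers))
```

A real model would forecast demand per location ahead of time rather than react to the current counts alone.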

ii) One-Click Chat

Uber has developed a machine learning and natural language processing solution called one-click chat, or OCC, for coordination between drivers and riders. This feature anticipates responses to commonly asked questions, making it easy for drivers to respond to customer messages. Drivers can reply with the click of just one button. One-click chat is built on Uber's machine learning platform, Michelangelo, to perform NLP on rider chat messages and generate appropriate responses to them.

iii) Customer Retention

Failure to meet customer demand for cabs could lead to users opting for other services. Uber uses machine learning models to bridge this demand-supply gap. By using prediction models to predict the demand in any location, Uber retains its customers. Uber also uses a tier-based reward system, which segments customers into different levels based on usage. The higher the level the user achieves, the better the perks. Uber also provides personalized destination suggestions based on the history of the user and their frequently traveled destinations.

You can take a look at this Python Chatbot Project and build a simple chatbot application to better understand the techniques used for natural language processing. You can also practice building a demand forecasting model with this project using time series analysis. You can look at this project, which uses time series forecasting and clustering on a dataset containing geospatial data to forecast customer demand for Ola rides.
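As a starting point for demand forecasting, the sketch below applies simple exponential smoothing to a short series of ride counts. The data and the function name are hypothetical, and this baseline ignores trend and seasonality, which a real ride-demand model would need to capture.

```python
def exponential_smoothing_forecast(history, alpha=0.5):
    """One-step-ahead forecast using simple exponential smoothing.

    `alpha` (between 0 and 1) controls how strongly recent observations
    outweigh older ones.
    """
    if not history:
        raise ValueError("history must contain at least one observation")
    level = history[0]
    for observed in history[1:]:
        # Blend the newest observation with the running smoothed level.
        level = alpha * observed + (1 - alpha) * level
    return level


# Hypothetical ride requests per hour for one area.
hourly_rides = [100, 120, 110, 130]
print(exponential_smoothing_forecast(hourly_rides, alpha=0.5))  # 120.0
```

Comparing a more sophisticated model against a baseline like this is a quick way to check whether the extra complexity actually improves the forecast.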

7) LinkedIn