
Analysis of Nested Case-Control Study Designs: Revisiting the Inverse Probability Weighting Method

Ryung S. Kim

Department of Epidemiology and Population Health, Albert Einstein College of Medicine

In nested case-control studies, the most common way to make inferences under a proportional hazards model is the conditional logistic approach of Thomas (1977). Inclusion probability methods are more efficient than the conditional logistic approach of Thomas; however, the epidemiology research community has not accepted them as a replacement for Thomas’ method. This paper promotes the inverse probability weighting method originally proposed by Samuelsen (1997), combined with an approximate jackknife standard error that can be easily computed using existing software. Simulation studies demonstrate that this approach yields valid type 1 error rates and greater power than the conditional logistic approach in nested case-control designs across various sample sizes and magnitudes of the hazard ratios. The method is also generalized to incorporate additional matching and the stratified Cox model. The proposed method is illustrated with data from a cohort of children with Wilms’ tumor to study the association between histological signatures and relapses.

1. Introduction

The nested case-control design, like the case-cohort design, is a scheme in which a representative sample of a full cohort is used. It includes all cases and a pre-specified number of controls randomly chosen from the risk set at each failure time (Thomas, 1977). The design is also referred to as incidence density sampling or risk set sampling. It is typically used to reduce the cost of exposure assessment in a prospective epidemiologic study, since exposure and other covariate data are obtained from only a subset of the full cohort without much loss of efficiency. Because the sample represents the full cohort, one can, unlike in traditional case-control studies, estimate the hazard ratios of the full cohort and of the population it represents.
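The sampling scheme just described can be sketched in a few lines of Python. This is an illustrative sketch, not code from the paper: the function name `sample_risk_sets`, the toy cohort, and the handling of late failure times (drawing fewer than m controls when fewer subjects remain at risk) are assumptions. For each failure it draws m controls without replacement from the subjects still at risk at that failure time, so later failures can serve as controls and the same subject can be drawn for several cases.

```python
import random
from typing import Dict, List, Optional, Sequence


def sample_risk_sets(
    time: Sequence[float],
    event: Sequence[int],
    m: int,
    rng: random.Random,
    entry: Optional[Sequence[float]] = None,
) -> Dict[int, List[int]]:
    """For each failure i, draw up to m controls from those at risk at time[i]."""
    n = len(time)
    entry = list(entry) if entry is not None else [0.0] * n  # assumed: all enter at 0
    sets: Dict[int, List[int]] = {}
    for i in range(n):
        if not event[i]:
            continue
        # Still at risk at the case's failure time, excluding the case itself;
        # later failures are eligible, and earlier controls may be drawn again.
        eligible = [j for j in range(n) if j != i and time[j] >= time[i] > entry[j]]
        sets[i] = rng.sample(eligible, min(m, len(eligible)))
    return sets


# Hypothetical toy cohort: subjects 0-2 fail at months 1-3, subjects 3-9 are censored at 12.
time = [1, 2, 3] + [12] * 7
event = [1, 1, 1] + [0] * 7
sets = sample_risk_sets(time, event, m=2, rng=random.Random(2024))
sample = set(sets) | {j for controls in sets.values() for j in controls}
print(sets)            # one list of two controls per case
print(sorted(sample))  # all cases plus every subject ever drawn as a control
```

Indices here are 0-based, so subject 0 plays the role of the first failure.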

Thomas (1977) put forth a partial likelihood approach to estimate the hazard ratios from nested case-control studies. Since his partial likelihood is mathematically equivalent to the conditional logistic likelihood used for matched case-control studies, one can maximize it by ‘tricking’ statistical software written for conditional logistic regression or, equivalently, stratified Cox regression. This is accomplished by including one record per selection for subjects who are selected multiple times, and by recoding failures that were randomly selected as controls as non-failures. Although the mathematical formula of the conditional logistic likelihood is being used, this is not a matched case-control design, since failures can be selected as controls and a subject can be selected multiple times across different failure times. Consequently, linear coefficients should be interpreted as log hazard ratios and not as log odds ratios (Langholz, 2010).
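The data-preparation ‘trick’ can be sketched as follows. `expand_for_clogit` is a hypothetical helper (not from the paper or any library), and the sketch assumes the sampled risk sets are stored as `(case_id, control_ids)` pairs: each sampled risk set becomes one stratum, a subject gets one row per stratum it belongs to, and only the case of each stratum has status 1.

```python
from typing import Dict, List, Tuple


def expand_for_clogit(
    risk_sets: List[Tuple[int, List[int]]],
    covariate: Dict[int, float],
) -> List[dict]:
    """Long-format rows for conditional logistic (stratified Cox) software."""
    rows = []
    for stratum, (case_id, control_ids) in enumerate(risk_sets):
        # The case of this sampled risk set is the only record with status 1.
        rows.append({"stratum": stratum, "id": case_id, "status": 1,
                     "x": covariate[case_id]})
        for cid in control_ids:
            # A control is coded 0 here even if it fails later, and a subject
            # drawn for several cases receives one row per stratum.
            rows.append({"stratum": stratum, "id": cid, "status": 0,
                         "x": covariate[cid]})
    return rows


# Hypothetical toy data: subject 2 is a control for case 1 before failing itself,
# and subject 4 is drawn twice.
sets = [(1, [2, 4]), (2, [4, 5]), (3, [6, 7])]
x = {i: i / 10 for i in range(1, 8)}
rows = expand_for_clogit(sets, x)
print(len(rows))                                     # 9
print([r["status"] for r in rows if r["id"] == 2])   # [0, 1]
```

The resulting rows would then be passed to conditional logistic or stratified Cox software with `stratum` as the stratification variable, for example R’s `survival::clogit`.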

A few authors have proposed methods that are more efficient than that of Thomas. For example, Samuelsen (1997) proposed an analysis method in which the individual log-likelihood contributions are weighted by the inverse of the probabilities of ever being included in the nested case-control study. Chen (2001, 2004) proposed the same form of likelihood but with refined weights, obtained by averaging the observed covariates of subjects with similar failure times to estimate the contribution of unselected controls. Both Samuelsen (1997) and Chen (2001) showed that their estimators were more efficient than Thomas’; however, the epidemiology research community has not accepted their methods as replacements for Thomas’ method. Nested case-control studies are still almost exclusively analyzed by means of the conditional logistic regression approach of Thomas. For instance, Kim (2013) recently reported that among sixteen nested case-control studies published in the American Journal of Epidemiology between 2009 and 2011, fourteen were analyzed by the conditional logistic regression approach of Thomas and the remaining two by unconditional logistic regression; none used Samuelsen’s or Chen’s method.

The lack of acceptance is due in part to three reasons. First, it had not been explained how the magnitude of the hazard ratio and the sample size affect the relative performance of the different methods. Second, common statistical software cannot compute the complex variance estimators, which require computational memory of order $O(n^2)$ to compute all pairwise co-inclusion probabilities. Finally, the methods had not been extended to incorporate additional matching factors or to perform a stratified Cox analysis.

The aim of this paper is to promote the use of inverse probability weighting methods for the Cox proportional hazards model in nested case-control studies by demonstrating their superiority over the conditional logistic approach. I also demonstrate that approximate jackknife standard errors can be used to make valid inferences about log hazard ratios. This standard error estimator was recently proposed for secondary outcome analyses in nested case-control studies (Kim, 2013) and is easily computable with existing software. As an illustration, I use a cohort of children from the US with Wilms’ tumor (Breslow and Chatterjee, 1999) to study the association of histology with relapses while incorporating matching variables and performing stratified analyses.

Consider a cohort of $N$ subjects who are followed for the occurrence of a failure event. For the $i$th subject ($i = 1, \ldots, N$), let $a_i$ denote the fixed time at which the subject entered the study, $T_i$ the time to the failure event, $C_i$ the censoring time, which is independent of $T_i$, and $Y_i = \min(T_i, C_i)$ the observed time. Assume that the hazard function $\lambda_i(t)$ of the failure time for the $i$th subject follows the proportional hazards model

$$\lambda_i(t) = \lambda_0(t) \exp\{\beta^\top X_i(t)\}, \tag{2.1}$$

where $\lambda_0(t)$ is the baseline hazard function, $\beta$ is the parameter vector of interest, and $X_i(t)$ is a possibly time-dependent covariate vector for the $i$th subject. Then, in full cohort studies, inferences on $\beta$ are typically made by maximizing the Cox partial likelihood (Cox, 1972):

$$L(\beta) = \prod_{i=1}^{N} \left[ \frac{\exp\{\beta^\top X_i(Y_i)\}}{\sum_{j \in R_i} \exp\{\beta^\top X_j(Y_i)\}} \right]^{\delta_i}, \tag{2.2}$$

where $\delta_i = 1$ if subject $i$ failed during the study and 0 otherwise, and $R_i = \{j : Y_j \ge Y_i > a_j\}$ is the set of subjects at risk in the underlying cohort at time $Y_i$. In a more general model that allows different baseline hazard functions across subgroups, the following partial likelihood is maximized, where $S(i)$ is the index set of the subjects who have the same values of the stratification variables as subject $i$:

$$L_S(\beta) = \prod_{i=1}^{N} \left[ \frac{\exp\{\beta^\top X_i(Y_i)\}}{\sum_{j \in R_i \cap S(i)} \exp\{\beta^\top X_j(Y_i)\}} \right]^{\delta_i}. \tag{2.3}$$
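For a single time-fixed covariate, (2.2) and (2.3) can be transcribed directly. This is an illustration under stated assumptions: one scalar covariate, entry times defaulting to 0, and tied failure times handled by letting each tied case keep all tied subjects in its risk set (the Breslow convention). The function name and toy data are hypothetical. At $\beta = 0$ every term reduces to minus the log of the risk set size, which gives an easy check.

```python
import math
from typing import Hashable, Optional, Sequence


def cox_log_partial_likelihood(
    beta: float,
    time: Sequence[float],
    event: Sequence[int],
    x: Sequence[float],
    entry: Optional[Sequence[float]] = None,
    stratum: Optional[Sequence[Hashable]] = None,
) -> float:
    """Log of (2.2), or of (2.3) when `stratum` is given, for one covariate."""
    n = len(time)
    entry = list(entry) if entry is not None else [0.0] * n
    stratum = list(stratum) if stratum is not None else [0] * n
    loglik = 0.0
    for i in range(n):
        if not event[i]:
            continue
        # R_i = {j : Y_j >= Y_i > a_j}, intersected with S(i) when strata are given
        denom = sum(
            math.exp(beta * x[j])
            for j in range(n)
            if time[j] >= time[i] > entry[j] and stratum[j] == stratum[i]
        )
        loglik += beta * x[i] - math.log(denom)
    return loglik


time, event, x = [1, 2, 3, 4], [1, 1, 0, 1], [0.5, -1.0, 2.0, 0.0]
print(round(cox_log_partial_likelihood(0.0, time, event, x), 4))  # -2.4849 = -log(4*3*1)
print(round(cox_log_partial_likelihood(0.0, time, event, x,
                                       stratum=["a", "a", "b", "b"]), 4))  # -0.6931 = -log 2
```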

In nested case-control studies, $m$ controls are sampled without replacement from $R_i \cap \{i\}^c$ at each $Y_i$ with $\delta_i = 1$. That is, for each case, $m$ controls are randomly selected from the subjects still at risk at the failure time of the case. Notice that the controls may include both failures and non-failures. Let $S_i$ denote this set of $m$ controls and $S = \{i : \delta_i = 1\} \cup \left( \bigcup_{i : \delta_i = 1} S_i \right)$ denote all subjects included in the nested case-control study. Then $\tilde{R}_i = R_i \cap S$ is the set of all subjects in the nested case-control study who are at risk at time $Y_i$. Let $n$ denote the size of $S$. Thomas (1977) proposed maximizing the following partial likelihood to make inferences on $\beta$ from nested case-control studies:

$$L_T(\beta) = \prod_{i : \delta_i = 1} \frac{\exp\{\beta^\top X_i(Y_i)\}}{\sum_{j \in \{i\} \cup S_i} \exp\{\beta^\top X_j(Y_i)\}}. \tag{2.4}$$
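Maximizing (2.4) for a single covariate needs only the case and its own controls in each term. The following Newton-Raphson sketch is illustrative (`thomas_fit` and the toy data are hypothetical); it assumes at least one sampled set contains a subject whose covariate differs from the case’s, so the information is positive. In the toy data two exposed cases have unexposed controls and one unexposed case has an exposed control, so the score $(2 - e^\beta)/(1 + e^\beta)$ vanishes at $\beta = \log 2$.

```python
import math
from typing import Dict, List, Sequence


def thomas_fit(x: Sequence[float], sets: Dict[int, List[int]], iters: int = 25) -> float:
    """Maximize log (2.4) for one covariate by Newton-Raphson.

    `sets` maps each case index i to its sampled controls S_i; each case is
    compared only with {i} ∪ S_i, never with the rest of the sampled risk set.
    """
    beta = 0.0
    for _ in range(iters):
        score = info = 0.0
        for case, controls in sets.items():
            members = [case, *controls]
            e = [math.exp(beta * x[j]) for j in members]
            s0 = sum(e)
            mean = sum(ej * x[j] for ej, j in zip(e, members)) / s0
            second = sum(ej * x[j] ** 2 for ej, j in zip(e, members)) / s0
            score += x[case] - mean        # derivative of this set's log term
            info += second - mean ** 2     # minus its second derivative
        beta += score / info
    return beta


# Hypothetical toy data (0-based indices): cases 0 and 2 exposed, case 4 unexposed.
x = [1.0, 0.0, 1.0, 0.0, 0.0, 1.0]
sets = {0: [1], 2: [3], 4: [5]}
print(round(thomas_fit(x, sets), 4))   # 0.6931, i.e. log 2
```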

This partial likelihood produces a model-consistent estimator of the log hazard ratios (Borgan and Langholz, 1993). For this paper, it is important to notice that the denominators in Thomas’ likelihood use only $\{i\} \cup S_i$ and not all available subjects at risk, namely $\tilde{R}_i$. As we will see, such a ‘partial risk set’ approach is inherently inefficient because it uses only a subset of the available subjects at risk. Samuelsen (1997) proposed maximizing the following partial likelihood:

$$L_W(\beta) = \prod_{i : \delta_i = 1} \frac{\exp\{\beta^\top X_i(Y_i)\}}{\sum_{j \in \tilde{R}_i} w_j \exp\{\beta^\top X_j(Y_i)\}}, \tag{2.5}$$

where $w_i = 1/p_i$ and $p_i$ is the probability of subject $i$ ever being included in the nested case-control study. Samuelsen proved the consistency of the resulting estimator: roughly, this is because the partial likelihood (2.5) is a design-consistent estimator of Cox’s partial likelihood (2.2), which in turn yields an estimating equation for a model-consistent estimator of $\beta$. The asymptotic normality of the estimator was left as a conjecture because of the complexity of the sampling scheme. To allow baseline hazard functions to differ across subgroups, I propose the following partial likelihood, where $S(i)$ is the index set of the subjects that share a common baseline hazard function with subject $i$:

$$L_{W,S}(\beta) = \prod_{i : \delta_i = 1} \frac{\exp\{\beta^\top X_i(Y_i)\}}{\sum_{j \in \tilde{R}_i \cap S(i)} w_j \exp\{\beta^\top X_j(Y_i)\}}. \tag{2.6}$$
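A companion sketch fits the weighted likelihoods (2.5) and (2.6) for one covariate by Newton-Raphson. It assumes the inputs describe the sampled subjects $S$ only, that all subjects enter at time 0, and that `weight[j]` already holds $w_j = 1/p_j$ (so cases carry weight 1); `ipw_cox_fit` and the toy data are hypothetical. In the toy data each of three strata holds one case and one later-censored subject with weight 2; setting the score $4/(e^\beta + 2) - 2e^\beta/(1 + 2e^\beta)$ to zero gives $e^{2\beta} - 2e^\beta - 2 = 0$, i.e. $\hat\beta = \log(1 + \sqrt{3})$.

```python
import math
from typing import Hashable, Optional, Sequence


def ipw_cox_fit(
    time: Sequence[float],
    event: Sequence[int],
    x: Sequence[float],
    weight: Sequence[float],
    stratum: Optional[Sequence[Hashable]] = None,
    iters: int = 50,
    tol: float = 1e-12,
) -> float:
    """Maximize (2.5), or (2.6) when `stratum` is given, for one covariate."""
    n = len(time)
    stratum = list(stratum) if stratum is not None else [0] * n
    beta = 0.0
    for _ in range(iters):
        score = info = 0.0
        for i in range(n):
            if not event[i]:
                continue
            # tilde R_i (intersected with S(i)): sampled subjects still at risk at Y_i
            risk = [j for j in range(n)
                    if time[j] >= time[i] and stratum[j] == stratum[i]]
            e = [weight[j] * math.exp(beta * x[j]) for j in risk]  # w_j exp(beta x_j)
            s0 = sum(e)
            mean = sum(ej * x[j] for ej, j in zip(e, risk)) / s0
            second = sum(ej * x[j] ** 2 for ej, j in zip(e, risk)) / s0
            score += x[i] - mean           # numerator unweighted: cases have w_i = 1
            info += second - mean ** 2
        step = score / info
        beta += step
        if abs(step) < tol:
            break
    return beta


time = [1, 2, 1, 2, 1, 2]
event = [1, 0, 1, 0, 1, 0]
x = [1.0, 0.0, 1.0, 0.0, 0.0, 1.0]
weight = [1.0, 2.0] * 3                  # hypothetical p = 0.5 for the censored subjects
beta_hat = ipw_cox_fit(time, event, x, weight, stratum=[0, 0, 1, 1, 2, 2])
print(abs(beta_hat - math.log(1 + math.sqrt(3))) < 1e-8)   # True
```

Dropping the `stratum` argument gives the unstratified likelihood (2.5), in which all sampled subjects at risk share one weighted risk set.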

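Kim (2013) computed the inclusion probabilities in a nested case-control study accounting for additional matching factors and ties in failure times. The probability for subject $i$ was

$$p_i = \begin{cases} 1, & \text{if } \delta_i = 1, \\ 1 - \displaystyle\prod_{\{Y_j :\ \delta_j = 1,\ j \in H_i,\ i \in R_j\}} \left\{ 1 - \min\left( \frac{m\, b_{ji}}{k_{ji} - b_{ji}},\ 1 \right) \right\}, & \text{if } \delta_i = 0, \end{cases} \tag{2.7}$$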

where $k_{ji}$ is the size of $R_j \cap H_i$ and $H_i$ is the set of subjects in the full cohort with the same values of the matching variables as subject $i$. In other words, $k_{ji}$ is the number of subjects at risk at $Y_j$ with the same values of the matching variables as subject $i$, and $b_{ji}$ is the number of tied subjects in $H_i$ that failed exactly at $Y_j$. In the absence of additional matching variables or ties in failure times, the inclusion probability simplifies to that of Samuelsen (1997). The minimum is needed for late failure times, when all subjects in $R_j \cap H_i$ are sampled because $k_{ji} - b_{ji} < m\, b_{ji}$.
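The inclusion probabilities (2.7) need only two counts per failure time. This sketch reflects one reading of the description (the product runs over the distinct failure times among subjects sharing subject $i$’s matching values while subject $i$ is at risk); `inclusion_probabilities`, its `match` argument, and the toy data are hypothetical. On the ten-subject example used in the Discussion it returns $1 - (7/9)(6/8)(5/7) = 7/12 \approx 0.58$ for each non-failure, and with $m = 5$ and only two subjects the minimum caps the selection probability at 1.

```python
from typing import Hashable, List, Optional, Sequence


def inclusion_probabilities(
    time: Sequence[float],
    event: Sequence[int],
    m: int,
    entry: Optional[Sequence[float]] = None,
    match: Optional[Sequence[Hashable]] = None,
) -> List[float]:
    """Probability p_i that subject i is ever included in the nested case-control sample."""
    n = len(time)
    entry = list(entry) if entry is not None else [0.0] * n
    match = list(match) if match is not None else [0] * n
    p = []
    for i in range(n):
        if event[i]:
            p.append(1.0)                                        # every case is included
            continue
        h = [j for j in range(n) if match[j] == match[i]]        # H_i
        fail_times = sorted({time[j] for j in h
                             if event[j] and time[i] >= time[j] > entry[i]})
        never = 1.0
        for t in fail_times:
            k = sum(1 for j in h if time[j] >= t > entry[j])     # k_ji = |R_j ∩ H_i|
            b = sum(1 for j in h if event[j] and time[j] == t)   # b_ji tied failures
            never *= 1.0 - min(m * b / (k - b), 1.0)
        p.append(1.0 - never)
    return p


p = inclusion_probabilities([1, 2, 3] + [12] * 7, [1, 1, 1] + [0] * 7, m=2)
print(p[:3], round(p[3], 4))                          # [1.0, 1.0, 1.0] 0.5833
print(inclusion_probabilities([1, 2], [1, 0], m=5))   # [1.0, 1.0]
```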

Notice that two different subgroup notations are used, $S(i)$ in (2.3) and $H_i$ in (2.7), because the stratifications at the design and analysis stages need not be the same. While the disproportionate representation caused by matching is accounted for by the inclusion probabilities (2.7), the stratified analysis is used strictly to allow varying baseline hazard functions. One may choose fewer distinct baseline hazard functions than there are matching subgroups in order to increase statistical power. The decision is strictly a modeling issue that does not need to be settled at the study design stage.

3. Standard Error Estimation

Samuelsen (1997) and Chen (2001) both derived asymptotic variances for $\hat\beta$, but these cannot be computed using commonly available statistical software and require computational memory of order $O(n^2)$ to compute all pairwise co-inclusion probabilities. Kim (2013) recently proposed an approximate jackknife variance estimator for secondary outcome analysis that can be easily computed with existing software:

In this estimator, $\Lambda_w$ is the diagonal matrix of weights, $S_w = (S^w_{ij}(\hat\beta))$, where $S^w_{ij}(\beta)$ is the usual score residual for Cox’s partial likelihood if $w_i$ were a frequency weight, and $I_w$ is the negative Jacobian of the weighted score. I use this estimator for the primary outcome analysis. The approximate jackknife standard error has been used for full cohort analysis by Reid and Crepeau (1985) and Lin and Wei (1989), and for case-cohort analysis by Barlow (1994). Kim (2013) provided simple R and SAS code.
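To show what an approximate jackknife computes, here is a self-contained sketch for one covariate. It is not Kim’s (2013) R or SAS code: it assumes the weighted dfbeta form $\hat V = I_w^{-1} \{\sum_l w_l^2 r_l^2\} I_w^{-1}$, where $r_l$ is subject $l$’s score residual computed as if $w_l$ were a frequency weight, and it assumes no delayed entry. Whether this matches Kim’s estimator term by term should be checked against that report. `ipw_cox_with_ajk_se` and the three-subject toy data are hypothetical; with unit weights the toy score equation gives $\hat\beta = -\tfrac{1}{2}\log 2$.

```python
import math
from typing import Sequence, Tuple


def _risk_sums(beta: float, time, x, weight, t: float) -> Tuple[float, float, float]:
    """Weighted sums S0, S1, S2 over sampled subjects still at risk at time t."""
    s0 = s1 = s2 = 0.0
    for tj, xj, wj in zip(time, x, weight):
        if tj >= t:                        # all subjects assumed to enter at time 0
            e = wj * math.exp(beta * xj)
            s0 += e
            s1 += e * xj
            s2 += e * xj * xj
    return s0, s1, s2


def ipw_cox_with_ajk_se(time: Sequence[float], event: Sequence[int], x: Sequence[float],
                        weight: Sequence[float], iters: int = 50) -> Tuple[float, float]:
    """Weighted Cox fit for one covariate plus a dfbeta-style approximate jackknife SE."""
    n = len(time)
    cases = [i for i in range(n) if event[i]]

    def score_and_info(beta: float) -> Tuple[float, float]:
        score = info = 0.0
        for i in cases:
            s0, s1, s2 = _risk_sums(beta, time, x, weight, time[i])
            mean = s1 / s0
            score += weight[i] * (x[i] - mean)
            info += weight[i] * (s2 / s0 - mean ** 2)
        return score, info

    beta = 0.0
    for _ in range(iters):                 # Newton-Raphson on the weighted score
        score, info = score_and_info(beta)
        beta += score / info
    _, info = score_and_info(beta)         # I_w at the final estimate

    # Score residual of each subject, as if weight[l] were a frequency weight
    resid = [0.0] * n
    for i in cases:
        s0, s1, _ = _risk_sums(beta, time, x, weight, time[i])
        mean = s1 / s0
        resid[i] += x[i] - mean
        for l in range(n):
            if time[l] >= time[i]:
                resid[l] -= weight[i] * math.exp(beta * x[l]) / s0 * (x[l] - mean)

    # Assumed form: V = sum_l (w_l * r_l / I_w)^2
    var = sum((weight[l] * resid[l] / info) ** 2 for l in range(n))
    return beta, math.sqrt(var)


beta_hat, se = ipw_cox_with_ajk_se([1, 2, 3], [1, 1, 0], [1.0, 0.0, 1.0], [1.0, 1.0, 1.0])
print(round(beta_hat, 4), round(se, 3))   # -0.3466 0.974
```

The weighted residuals $w_l r_l$ sum to the weighted score, which is zero at $\hat\beta$; that identity is a quick sanity check when adapting the sketch.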

4. Simulation Studies

Exponential failure times $T$ with rate $\exp(\beta_1 X_1 + \beta_2 X_2)$ were generated for full cohorts of size $N$ = 500, 1,000, or 2,000. $X_1$, the main exposure variable of interest, was assumed to follow a standard normal distribution, and $X_2$ was specified as a Bernoulli variable with success probability $(1 + \exp(X_1))^{-1}$. The covariates were set up so that mild multicollinearity existed. The true log hazard ratio of interest, $\beta_1$, took the values 0, 0.1, 0.2, …, 1.0; therefore, the hazard ratio for an increase in $X_1$ equal to the inter-quartile range of the standard normal distribution (about 1.35) ranged between 1 and 3.86. The log hazard ratio for $X_2$ was set at $\beta_2 = 0.5$. Censoring times were uniformly distributed between 0 and $a$, where the upper limit $a$ was chosen so that the proportion of failures in the full cohort was 15%. For each subject, either the failure or the censoring time was observed, whichever occurred earlier. The log hazard ratios and their standard errors in the full cohort were estimated under the Cox proportional hazards model (Cox, 1972).
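One replicate of the full-cohort generation can be sketched as below. The paper does not say how $a$ was found; this sketch assumes a bisection on the realized failure fraction of each generated cohort, and `simulate_cohort` and its arguments are hypothetical.

```python
import math
import random
from typing import List, Tuple


def simulate_cohort(n: int, beta1: float, beta2: float = 0.5, target: float = 0.15,
                    seed: int = 0) -> Tuple[List[float], List[int], List[float], List[int]]:
    rng = random.Random(seed)
    x1 = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # X2 ~ Bernoulli with success probability (1 + exp(X1))^{-1}, mildly correlated with X1
    x2 = [1 if rng.random() < 1.0 / (1.0 + math.exp(v)) else 0 for v in x1]
    # Exponential failure times with rate exp(beta1 * X1 + beta2 * X2)
    t = [rng.expovariate(math.exp(beta1 * a + beta2 * b)) for a, b in zip(x1, x2)]
    u = [rng.random() for _ in range(n)]  # censoring C_i = a * U_i ~ Uniform(0, a)

    def failure_fraction(a: float) -> float:
        return sum(ti <= a * ui for ti, ui in zip(t, u)) / n

    # Assumption: choose the upper limit a by bisection on the realized failure fraction
    lo, hi = 0.0, 1.0
    while failure_fraction(hi) < target:
        hi *= 2.0
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if failure_fraction(mid) < target:
            lo = mid
        else:
            hi = mid
    a = hi
    time = [min(ti, a * ui) for ti, ui in zip(t, u)]
    event = [int(ti <= a * ui) for ti, ui in zip(t, u)]
    return x1, x2, time, event


x1, x2, time, event = simulate_cohort(1000, beta1=0.5, seed=1)
print(sum(event) / len(event))   # 0.15
```

Each replicate calibrates $a$ to its own cohort; fixing $a$ once from a large pilot cohort would be an equally plausible reading of the text.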

I then selected nested case-control samples with $m$ = 1, 2, or 5 controls at each failure time. For each simulated nested case-control data set, the log hazard ratios and their standard errors were estimated by three methods: a naïve Cox method (Cox, 1972) that treats the nested case-control data as if they were a full cohort, the conditional logistic approach of Thomas (1977), and the inverse probability weighting method proposed by Samuelsen (1997). For Samuelsen’s method, both the approximate jackknife standard error proposed by Kim (2013) and the original standard error proposed by Samuelsen (1997) were calculated. For simplicity, additional matching factors were not used in the simulation studies. The overall process, including the generation of the full cohort, the selection of the nested case-control sample, and the recording of the estimates, was repeated 5,000 times. Because of prohibitive computing time, the standard error proposed by Samuelsen (1997) was computed for only 500 of the simulated data sets.

Figure 1 shows the empirical biases of the full cohort estimator and of the three aforementioned estimators based on the nested case-control samples. As expected, the naïve estimates were severely biased, since they did not adjust for the disproportionate representation in the nested case-control designs. Samuelsen’s inverse probability weighting estimator showed noticeably less bias than Thomas’ conditional logistic estimator when $N$ = 500 and the true hazard ratio was large. Most importantly, both the inverse probability weighting method and the conditional logistic approach yielded estimates with low empirical biases (< 5% of the true log hazard ratio, except when $N$ = 500 and $m$ = 1).

Figure 1. The empirical biases in the simulation study: the empirical biases of the full cohort estimator and of the three estimators based on the nested case-control samples (a naïve Cox estimator, the conditional logistic estimator, and the inverse probability weighted (IPW) estimator).

Figure 2 shows that the empirical standard error of Samuelsen’s inverse probability weighted estimator is smaller than that of Thomas’ conditional logistic estimator, especially when the hazard ratio is large. It also shows that the average approximate jackknife standard errors accurately estimated the empirical standard errors of the inverse probability weighted estimator and were remarkably close to the standard errors of Samuelsen (1997). The standard errors were mildly underestimated when the sample size was small ($N$ = 500 and $m$ = 1).

Figure 2. The empirical and estimated standard errors in the simulation study. The empirical standard errors of the conditional logistic estimator and the inverse probability weighted estimator are shown, together with the average standard error estimates from the approximate jackknife (AJK) method and from Samuelsen’s (1997) method.

With the nominal type 1 error rate controlled at 0.05 and $\beta_1 = 0$, all methods showed valid empirical type 1 error rates for testing the null hypothesis $H_0: \beta_1 = 0$ (Table 1); however, the empirical power of the inverse probability weighting method to test $H_0: \beta_1 = 0$ was consistently higher than that of the conditional logistic approach (Figure 3). The difference was noticeable when the sample size was moderate. For example, when $N$ = 500, $m$ = 1, and $\beta_1 = 0.5$, the empirical power of the inverse probability weighting method combined with the approximate jackknife standard error was 0.79, while that of the conditional logistic approach of Thomas was 0.70. When the sample size was sufficient, the differences between the two methods were negligible.
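The empirical type 1 error and the empirical power are both rejection rates over simulation replicates. This sketch assumes a two-sided Wald test of $H_0: \beta_1 = 0$ (the text does not name the test): with estimates generated under $\beta_1 = 0$ the rate estimates the type 1 error, otherwise the power. `empirical_rejection_rate` and the toy numbers are hypothetical.

```python
from statistics import NormalDist
from typing import Sequence


def empirical_rejection_rate(estimates: Sequence[float], std_errors: Sequence[float],
                             alpha: float = 0.05, null: float = 0.0) -> float:
    """Fraction of replicates whose two-sided Wald test rejects H0: beta_1 = null."""
    z_crit = NormalDist().inv_cdf(1.0 - alpha / 2.0)   # about 1.96 when alpha = 0.05
    rejections = sum(abs(b - null) / se > z_crit for b, se in zip(estimates, std_errors))
    return rejections / len(estimates)


# Hypothetical estimates and standard errors from four replicates
print(empirical_rejection_rate([0.0, 3.0, -3.0, 0.5], [1.0, 1.0, 1.0, 1.0]))   # 0.5
```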

Figure 3. The empirical power in the simulation study. With the nominal type 1 error rate controlled at 0.05, the empirical power to test the null hypothesis $H_0: \beta_1 = 0$ was measured for three methods: Cox analysis in the full cohort, the conditional logistic approach, and the inverse probability weighting method combined with an approximate jackknife (AJK) standard error.

Table 1. The empirical type 1 errors in the simulation study

With the nominal type 1 error rate controlled at 0.05 and $\beta_1 = 0$, the empirical type 1 error rates for testing the null hypothesis $H_0: \beta_1 = 0$ were measured for three methods: the conditional logistic approach of Thomas (1977), and the inverse probability weighting (IPW) method of Samuelsen (1997) combined with either the standard error he originally proposed or the approximate jackknife standard error.

I analyzed the full cohort data on the relapse or censoring times of 4,028 children with Wilms’ tumor from the third and fourth clinical trials of the National Wilms Tumor Study Group (Breslow and Chatterjee, 1999; D’Angio et al., 1989; Green et al., 1998) to further compare the performance of the inverse probability weighting method with that of the conditional logistic approach of Thomas in the presence of additional matching. Among the 4,028 children in the full cohort, 571 relapsed. The main predictor is a binary central-laboratory histology outcome of the tumor ($x_1$), which is either ‘favorable’ or ‘unfavorable’. Patients with tumors composed of one of the rare cell types known collectively as ‘unfavorable histology’ are more likely to relapse and die than patients with tumors of ‘favorable histology’ (Beckwith and Palmer, 1978; Breslow and Chatterjee, 1999). Two additional variables considered important to control for in the analyses were tumor stage ($x_2$) and age at baseline ($x_3$). I considered two analytic models: an additive proportional hazards model and a stratified proportional hazards model. For the additive model, I included all three predictors as additive covariates; the estimate of the log hazard ratio $\beta_1$ was 1.593 (SE = 0.089). For the stratified model, I used $x_1$ as the only covariate and $x_2$ and $x_3$ as stratification factors, allowing different shapes of baseline hazards; the estimate of the log hazard ratio $\beta_1^S$ was 1.499 (SE = 0.092). For simplicity, interactions were not considered.

A total of 5,000 nested case-control samples were repeatedly selected from the full cohort with $m$ = 1, 2, or 5 controls matched on tumor stage ($x_2$) and age ($x_3$), following the typical practice of controlling for confounding factors by matching. Table 2 shows the number of subjects in the different matching subgroups. I used the inclusion probabilities (2.7) to account for matching. First, for the additive model, I compared, for each sample, the full cohort estimate of $\beta_1$ with the estimates from naïve Cox regression with additive covariates $x_1$, $x_2$, and $x_3$; Thomas’ conditional logistic method with $x_1$ as the only covariate; and the inverse probability weighting method based on the partial likelihood (2.5) with additive covariates $x_1$, $x_2$, and $x_3$. Similarly, for the stratified model, I compared the full cohort estimate of $\beta_1^S$ with the estimates from naïve unweighted stratified Cox regression with covariate $x_1$ and stratification factors $x_2$ and $x_3$; Thomas’ conditional logistic method with $x_1$ as the only covariate; and the inverse probability weighting method based on the stratified partial likelihood (2.6) with covariate $x_1$ and stratification factors $x_2$ and $x_3$.

Table 2. The numbers of children in matching subgroups from the Wilms’ tumor study

The empirical bias of the log hazard ratio was calculated as the average difference between the nested case-control estimates and the full cohort estimate. Both the inverse probability weighting method and the conditional logistic approach of Thomas yielded low empirical biases (< 5% of the full cohort estimate; Table 3), consistent with the results of the earlier simulation studies. Still, the inverse probability weighting method showed the lowest bias. In comparison, the average biases of the naïve unweighted Cox estimators were 9 to 100 times greater than those of the inverse probability weighting methods.

Table 3. The empirical biases in the Wilms’ tumor study

The empirical bias averaged over 5,000 iterations is shown in each cell, where the bias is the difference between the estimate from the nested case-control sample and the full cohort estimate. For the stratified model, I used histology ($x_1$) as the only covariate and tumor stage ($x_2$) and age at baseline ($x_3$) as stratification factors, allowing different baseline hazard functions. The full cohort estimate of the log hazard ratio $\beta_1^S$ was 1.499 (SE = 0.092). For the additive model, all three predictors were included as additive covariates. The full cohort estimate of the log hazard ratio $\beta_1$ was 1.593 (SE = 0.089).

Table 4 shows the empirical standard errors. The empirical variance due to both infinite and finite sampling was computed by adding the estimated variance of the full cohort estimate to the empirical variance from the simulation. The inverse probability weighting method again yielded smaller empirical standard errors than the conditional logistic approach of Thomas in all settings. In addition, the approximate jackknife standard errors closely approximated the empirical standard errors of the inverse probability weighting estimators.
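Both summary measures in Tables 3 and 4 are simple functions of the replicate estimates. This sketch follows the definitions in the table notes (bias as the average of nested case-control estimate minus full cohort estimate; total variance as the simulation variance plus the squared full-cohort standard error) and assumes the usual $n - 1$ sample variance. The helper name and the three replicate estimates are hypothetical; 1.593 and 0.089 are the full-cohort additive-model values reported for the Wilms’ tumor data.

```python
import math
from statistics import fmean, variance
from typing import Sequence, Tuple


def bias_and_total_se(ncc_estimates: Sequence[float], full_estimate: float,
                      full_se: float) -> Tuple[float, float]:
    """Empirical bias and an empirical SE combining finite and infinite sampling variation."""
    # Bias: average of (nested case-control estimate - full cohort estimate)
    bias = fmean(b - full_estimate for b in ncc_estimates)
    # Finite-sampling variance across repeated samples from the same cohort,
    # plus the estimated variance of the full-cohort estimate itself
    total_var = variance(ncc_estimates) + full_se ** 2
    return bias, math.sqrt(total_var)


bias, se = bias_and_total_se([1.60, 1.55, 1.65], full_estimate=1.593, full_se=0.089)
print(round(bias, 3), round(se, 4))   # 0.007 0.1021
```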

Table 4. The empirical and estimated standard errors in the Wilms’ tumor study

In each cell, the empirical standard error is first shown followed by the estimated standard error averaged over 5,000 simulated data sets in parentheses. To capture both infinite and finite sampling variation, the empirical variance is defined as the sum of the empirical variance due to the simulation and the estimated variances of the full cohort estimates. The approximate jackknife standard errors were computed for the inverse probability weighting methods.

6. Discussion

The inclusion probability methods are more efficient than the conditional logistic approach of Thomas; however, the epidemiology research community has not accepted them as replacements for Thomas’ method. To promote the use of the inverse probability weighted estimators, I demonstrated that they have greater power than the conditional logistic approach consistently across various sample sizes and magnitudes of the hazard ratio. I then demonstrated that the easily computable approximate jackknife standard errors, originally proposed for secondary outcome analysis in nested case-control studies (Kim, 2013), closely approximated the empirical standard errors in the primary outcome analysis and were remarkably close to the standard errors of Samuelsen (1997). Finally, I made a modest generalization of the partial likelihood and inclusion probabilities in order to incorporate additional matching and the stratified Cox analysis.

Consider a small cohort of ten subjects and one covariate of interest, $x$. Three subjects ($i$ = 1, 2, 3) failed with no ties before the remaining seven subjects ($i$ = 4, …, 10) were censored (Figure 4). In a nested case-control design with two controls for each case (i.e., $m = 2$), the inclusion probabilities of the subjects were $p_1 = p_2 = p_3 = 1$ and $p_4 = \cdots = p_{10} = 1 - (1 - 2/9)(1 - 2/8)(1 - 2/7) = 0.58$. Overall, all three failures ($i$ = 1, 2, 3) and four non-failures ($i$ = 4, 5, 6, 7) were included in the sample. Consider the contributions of the subjects at 1 month. Figure 4 shows the nested case-control sample, with subjects 2 and 4 selected as controls from the risk set at 1 month. The denominator of Cox’s partial likelihood (2.2) is

$$e^{\beta x_1} + \sum_{j=2}^{10} e^{\beta x_j}. \tag{6.1}$$

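To approximate this, the partial likelihood of Samuelsen uses

$$e^{\beta x_1} + \left\{ e^{\beta x_2} + e^{\beta x_3} + \frac{1}{0.58} \sum_{j=4}^{7} e^{\beta x_j} \right\}. \tag{6.2}$$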

The conditional logistic partial likelihood of Thomas uses

$$e^{\beta x_1} + \frac{9}{2} \left( e^{\beta x_2} + e^{\beta x_4} \right). \tag{6.3}$$

Figure 4. An example of a nested case-control study design: a nested case-control design ($m$ = 2) from a small cohort of ten subjects. Three subjects ($i$ = 1, 2, 3) failed with no ties before the remaining seven subjects ($i$ = 4, …, 10) were censored. Subjects 2 and 4 were selected as controls from the risk set at 1 month. Overall, all three failures ($i$ = 1, 2, 3) and four non-failures ($i$ = 4, 5, 6, 7) were included in the sample.

Notice that the constant 9/2 does not change the resulting estimator. The second terms in (6.2) and (6.3) are both consistent estimators of the second term in (6.1) with respect to the finite sampling distribution of nested case-control samples. This is why the partial likelihoods of both methods are design-consistent estimators of Cox’s partial likelihood. The standard error of (6.3) suffers because the covariate contribution is estimated from only 3 subjects, while all 7 available subjects are used in (6.2).
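The design-consistency argument can be checked numerically by enumerating every possible control draw in the toy cohort. This sketch assumes hypothetical covariate values $x_j = 0.1j$ and $\beta = 0.7$, takes subject 1 as the case at 1 month and subjects 2 and 3 as the cases at the next two failure times. Averaged over all $36 \times 28 \times 21$ equally likely samples, Samuelsen’s weighted sum over the sampled subjects other than subject 1 and the 9/2-scaled sum of the two controls drawn at 1 month both reproduce the second term of (6.1), up to rounding.

```python
import math
from itertools import combinations

beta = 0.7                                        # hypothetical log hazard ratio
x = {j: 0.1 * j for j in range(1, 11)}            # hypothetical covariates x_1, ..., x_10
e = {j: math.exp(beta * x[j]) for j in x}
p = 1 - (1 - 2 / 9) * (1 - 2 / 8) * (1 - 2 / 7)  # p_4 = ... = p_10
w = {j: 1.0 if j <= 3 else 1 / p for j in x}      # failures 1-3 have p_j = 1

cox_term = sum(e[j] for j in range(2, 11))        # second term of (6.1)

samuelsen_total = thomas_total = 0.0
count = 0
for c1 in combinations(range(2, 11), 2):          # controls for case 1 at 1 month
    for c2 in combinations(range(3, 11), 2):      # controls for case 2
        for c3 in combinations(range(4, 11), 2):  # controls for case 3
            sample = {1, 2, 3, *c1, *c2, *c3}
            # Samuelsen: every sampled subject other than case 1 is at risk at 1 month
            samuelsen_total += sum(w[j] * e[j] for j in sample if j != 1)
            # Thomas: only the two controls drawn at 1 month, scaled by 9/2
            thomas_total += 9 / 2 * (e[c1[0]] + e[c1[1]])
            count += 1

print(round(p, 4))                                      # 0.5833
print(abs(samuelsen_total / count - cox_term) < 1e-9)   # True
print(abs(thomas_total / count - cox_term) < 1e-9)      # True
```

The check concerns expectations only: in any single sample the two sums differ from the Cox term, and the Thomas sum rests on two controls rather than on all sampled subjects at risk.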

We may view a nested case-control design as unequal probability sampling from a full cohort. This explains why the same form of partial likelihood (2.5) has been used for complex survey designs (Binder, 1992; Lin, 2000). However, there are differences between a nested case-control study and a complex survey. In a complex survey, a sample is drawn from each disjoint stratum; in nested case-control studies, by contrast, $m$ subjects are repeatedly selected from overlapping risk sets. Further, unlike in typical survey settings, nested case-control studies almost always need to take both finite and infinite sampling variation into consideration, because full cohorts are often only moderate in size and are themselves considered a sample from a target population.

The statistical power of the inverse probability weighting methods decreases as more strata with different baseline hazard functions are allowed in the analysis stage. If matching is fully exhaustive and the number of failures equals the number of matching subgroups in the full cohort, and if one assumes different hazard functions for all matching subgroups, the method should have power similar to that of the conditional logistic approach. However, matching factors in the design stage and stratification factors in the analysis stage need not be the same, and over-stratification in the analysis stage can unnecessarily decrease power. In addition, if over-matching causes the inclusion probability of some subjects to be zero, the study by definition no longer represents the full cohort.

In the simulation study, we observed a moderate underestimation of the standard errors of the inverse probability weighted estimators by both the approximate jackknife method and Samuelsen’s method when the sample size was small ($N$ = 500, $m$ = 1; Figure 2). However, the type 1 error rates were still acceptable (< 0.06; Table 1). Further research to improve the small-sample properties would be helpful.

Local averaging of covariates to refine the weights (Chen, 2001) will likely further improve the performance. The gain would depend on the correlation between failure times and covariates as well as on the parameters of the local averaging.

The inverse probability weighting methods break the matching. Any matching variable that could affect the hazard ratio of interest should therefore be controlled for by including it in the regression model. Finally, notice that the inverse probability weighting methods used in this paper require retrospective access to the censoring times of all non-failures in the full cohort, while the methods of Thomas (1977) and Chen (2004) do not.

Acknowledgments

The author thanks Professors Mimi Kim and Xiaonan Xue for their encouragement and comments on this work.

This work was supported by National Institutes of Health Grants 1UL1RR025750-01, P30 CA01330-35, and the National Research Foundation of Korea Grant NRF-2012S1A3A2033416.

- Barlow WE. Robust variance estimation for the case-cohort design. Biometrics. 1994;50:1064–1072.

- Beckwith JB, Palmer NF. Histopathology and prognosis of Wilms tumor. Cancer. 1978;41:1937–1948.

- Binder DA. Fitting Cox’s proportional hazards models from survey data. Biometrika. 1992;79:139–147.

- Borgan O, Langholz B. Nonparametric estimation of relative mortality from nested case-control studies. Biometrics. 1993;49.

- Breslow N, Chatterjee N. Design and analysis of two-phase studies with binary outcome applied to Wilms tumour prognosis. Applied Statistics. 1999;48.

- Chen KN. Generalized case-cohort sampling. Journal of the Royal Statistical Society: Series B (Statistical Methodology). 2001;63.

- Chen KN. Statistical estimation in the proportional hazards model with risk set sampling. Annals of Statistics. 2004;32:1513–1532.

- Cox DR. Regression models and life tables. Journal of the Royal Statistical Society: Series B (Statistical Methodology). 1972;34:187–220.

- D’Angio GJ, Breslow N, Beckwith B, Evans A, Baum E, Delorimier A, Fernbach D, Hrabovsky E, Jones B, Kelalis P, Othersen B, Tefft M, Thomas PRM. Treatment of Wilms’ tumor. Cancer. 1989;64:349–360.

- Green DM, Breslow NE, Beckwith JB, Finklestein JZ, Grundy PG, Thomas PRM, Kim T, Shochat S, Haase GM, Ritchey ML, Kelalis PP, D’Angio GJ. Comparison between single-dose and divided-dose administration of dactinomycin and doxorubicin for patients with Wilms tumor: a report from the National Wilms Tumor Study Group. Journal of Clinical Oncology. 1998;16:237–245.

- Kim RS. Analysis of Secondary Outcomes in Nested Case-Control Study Designs. Technical Report. Division of Biostatistics, Albert Einstein College of Medicine; 2013.

- Langholz B. Case-control studies = Odds ratios: Blame the retrospective model. Epidemiology. 2010;21:10–12.

- Lin DY. On fitting Cox’s proportional hazards models to survey data. Biometrika. 2000;87:37–47.

- Lin DY, Wei LJ. The robust inference for the Cox proportional hazards model. Journal of the American Statistical Association. 1989;84.

- Reid N, Crepeau H. Influence function for proportional hazards regression. Biometrika. 1985;72:1–9.

- Samuelsen S. A pseudo-likelihood approach to analysis of nested case-control studies. Biometrika. 1997;84:379–394.

- Thomas D. Addendum to ‘Methods of cohort analysis: Appraisal by application to asbestos mining’. In: Liddell FDK, McDonald JC, Thomas DC, editors. Journal of the Royal Statistical Society, Series A. 1977;140:469–491.

- Research article

- Open access

- Published: 14 May 2020

Application of the matched nested case-control design to the secondary analysis of trial data

- Christopher Partlett (ORCID: orcid.org/0000-0001-5139-3412)

- Nigel J. Hall

- Alison Leaf

- Edmund Juszczak

- Louise Linsell

BMC Medical Research Methodology, volume 20, Article number: 117 (2020)


A nested case-control study is an efficient design that can be embedded within an existing cohort study or randomised trial. It has a number of advantages compared to the conventional case-control design, and has the potential to answer important research questions using untapped prospectively collected data.

We demonstrate the utility of the matched nested case-control design by applying it to a secondary analysis of the Abnormal Doppler Enteral Prescription Trial. We investigated the role of milk feed type, and of changes in milk feed type, in the development of necrotising enterocolitis in a group of 398 high-risk growth-restricted preterm infants.

Using matching, we were able to generate a comparable sample of controls selected from the same population as the cases. In contrast to the standard case-control design, exposure status was ascertained prior to the outcome event occurring and the comparison between the cases and matched controls could be made at the point at which the event occurred. This enabled us to reliably investigate the temporal relationship between feed type and necrotising enterocolitis.

Conclusions

A matched nested case-control study can be used to identify credible associations in a secondary analysis of clinical trial data where the exposure of interest was not randomised, and has several advantages over a standard case-control design. This method offers the potential to make reliable inferences in scenarios where it would be unethical or impractical to perform a randomised clinical trial.


Key messages

A matched nested case-control design provides an efficient way to investigate causal relationships using untapped data from prospective cohort studies and randomised controlled trials

This method has several advantages over a standard case-control design, particularly when studying the effects of time-dependent exposures on rare outcomes

It offers the potential to make reliable inferences in scenarios where ethical or practical issues preclude the use of a randomised controlled trial

Randomised controlled trials (RCTs) are regarded as the gold standard in evidence-based medicine, owing to their prospective design and the minimisation of important sources of bias through randomisation, allocation concealment and blinding. However, RCTs are not always appropriate for ethical or practical reasons, particularly when investigating risk factors for an outcome. If beliefs about the causal role of a risk factor are already embedded within a clinical community, whether or not they rest on concrete evidence, an RCT cannot be conducted because of a lack of equipoise. It is also often not feasible to randomise potential risk factors, for example if they are biological or genetic, or if patient preference plays a strong role. In such scenarios, the main alternative is an observational study: either a prospective cohort study, which can be complicated and costly, or a retrospective case-control study, which has methodological shortcomings.

The nested case-control study design employs case-control methodology within an established prospective cohort study [ 1 ]. It emerged in the 1970s and 1980s and was typically used when it was expensive or difficult to obtain data on a particular exposure for all members of the cohort; instead, a subset of controls was selected at random [ 2 ]. Combined with matching, the design has been shown to be efficient and to provide unbiased estimates of relative risk with considerable cost savings [ 3 , 4 , 5 ]. Cases who develop the outcome of interest at a given point in time are matched to a random subset of cohort members who have not experienced the outcome by that time. These controls may develop the outcome later and become cases themselves, and they may also act as controls for other cases [ 6 , 7 ]. This approach has a number of advantages over the standard case-control design: (1) cases and controls are sampled from the same population, (2) exposures are measured before the outcome occurs, and (3) cases can be matched to controls on time (e.g. age) at the outcome event.
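The sampling scheme described above (often called incidence density or risk-set sampling) can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used in ADEPT: the `Subject` record and the `risk_set_sample` function are hypothetical names, controls are drawn purely at random with no matching factors, and a subject is assumed to be at risk at time t whenever its exit time is at least t (one possible convention for ties).

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Subject:
    id: str
    exit_time: float  # outcome or censoring time on the cohort time axis
    is_case: bool     # True if follow-up ended with the outcome


def risk_set_sample(cohort, m, seed=0):
    """Return {case id: [control ids]} using incidence density sampling."""
    rng = random.Random(seed)
    cases = sorted((s for s in cohort if s.is_case), key=lambda s: s.exit_time)
    matched = {}
    for case in cases:
        t = case.exit_time
        # Everyone still under follow-up at t, except the case itself.
        # Future cases stay eligible, and the same subject may be drawn
        # again for a later case.
        risk_set = [s for s in cohort if s.id != case.id and s.exit_time >= t]
        # Sampling is without replacement within this one risk set.
        controls = rng.sample(risk_set, min(m, len(risk_set)))
        matched[case.id] = [c.id for c in controls]
    return matched


if __name__ == "__main__":
    cohort = [
        Subject("A", 3, True),    # case on day 3
        Subject("B", 18, True),   # case on day 18, eligible as a control for A
        Subject("C", 20, False),
        Subject("D", 25, False),
        Subject("E", 2, False),   # censored before day 3, never at risk for A
    ]
    print(risk_set_sample(cohort, m=2))
```

In this toy cohort, B (a future case) can be drawn as a control for A, and C and D can appear in both matched sets, which is exactly the behaviour the design permits.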

More recently, the nested case-control design has been used within RCTs to investigate the causative role of risk factors in the development of trial outcomes [ 8 , 9 , 10 ]. In this paper we investigate the utility of the matched nested case-control design in a secondary analysis of the ADEPT: Abnormal Doppler Enteral Prescription Trial (ISRCTN87351483) data, to investigate the role of different types of milk feed (and changes in types of milk feed) in the development of necrotising enterocolitis. We illustrate the use of this methodology and explore issues relating to its implementation. We also discuss and appraise the value of this methodology in answering similar challenging research questions using clinical trial data more generally.

ADEPT: Abnormal Doppler Enteral Prescription Trial (ISRCTN87351483) was funded by Action Medical Research (SP4006) and investigated whether early (24–48 h after birth) or late (120–144 h after birth) introduction of milk feeds was a risk factor for necrotising enterocolitis (NEC) in a population of 404 infants born preterm and growth-restricted, following abnormal antenatal Doppler blood flow velocities [ 11 ]. Consent and randomisation occurred in the first 2 days after birth. No difference was found in the incidence of NEC between the two groups; however, there was interest in the association between feed type (formula/fortifier or exclusive mother/donor breast milk) and the development of NEC. Breast milk is one of the few factors believed to reduce the risk of NEC that have been widely adopted into clinical practice, despite a paucity of high-quality population-based data [ 12 , 13 ]. However, because of a lack of equipoise, it would not be ethical or feasible to conduct a trial randomising newborn infants to formula or breast milk.

With additional funding from Action Medical Research (GN2506), the authors used a matched nested case-control design to investigate the association between feed type and the development of severe NEC, defined as Bell’s staging Stage II or III [ 14 ], using detailed daily feed log data from the ADEPT trial. The feed type and quantity of feed were recorded daily until an infant had reached full feeds and had ceased parenteral nutrition, or until 28 days after birth, whichever was later. Using this information, infants were classified according to the following predefined exposures:

- Exposure to formula milk or fortifier in the first 14 days of life

- Exposure to formula milk or fortifier in the first 28 days of life

- Any prior exposure to formula milk or fortifier

- Change in feed type (between formula, fortifier or breast milk) within the previous 7 days
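To make the four exposure definitions concrete, here is a hedged sketch that derives them for one infant at a given index day (the day of the case’s NEC diagnosis in that infant’s matched set). Several details are assumptions for illustration only: the feed log is represented as a dictionary from day of life (starting at 1) to the set of feed types given that day, unlogged days are simply skipped, and a "change in feed type" is read as a difference between consecutive logged days. Only days strictly before the index day are used, so exposure always precedes the outcome.

```python
NON_BREAST = {"formula", "fortifier"}


def exposures_at(feed_log, index_day):
    """feed_log: {day_of_life: set of feed types given that day} (hypothetical format)."""
    # Keep only days before the index day: exposure must precede the event.
    prior = {d: feeds for d, feeds in feed_log.items() if d < index_day}

    def non_breast_up_to(last_day):
        # True if formula or fortifier was given on any prior day <= last_day.
        return any(feeds & NON_BREAST for d, feeds in prior.items() if d <= last_day)

    # A change is counted on day d if its feeds differ from day d - 1,
    # for d in the 7 days before the index day; unlogged days are skipped.
    changed = any(
        d in prior and d - 1 in prior and prior[d] != prior[d - 1]
        for d in range(index_day - 7, index_day)
    )
    return {
        "non_breast_first_14d": non_breast_up_to(14),
        "non_breast_first_28d": non_breast_up_to(28),
        "any_prior_non_breast": any(feeds & NON_BREAST for feeds in prior.values()),
        "feed_change_prev_7d": changed,
    }
```

Because exposures are recomputed at each case’s index day, the same infant can be classified differently when it serves as a control in different matched sets.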

In the remainder of the methods section we discuss the challenges of conducting this analysis and practical issues encountered in applying the matched nested case-control methodology. In the results section we present data from different aspects of the analysis, to illustrate the utility of this approach in answering the research question.

Cohort time axis

For the main trial analysis, time of randomisation was defined as time zero, which is the conventional approach given that events occurring prior to randomisation cannot be influenced by the intervention under investigation. However, for the nested case-control analysis, time zero was defined as day of delivery because age in days was considered easier to interpret, and also it was possible for an outcome event to occur prior to randomisation. Infants were followed up until their exit time, which was defined by the first occurrence of NEC, death or the last daily feed log record.

Case definition

An infant was defined as a case at their first recorded incidence of severe NEC, defined as Bell’s staging Stage II or III [ 14 ]. Infants could only be included as a case once; subsequent episodes of NEC in the same infant were not counted. Once an infant had been identified as a case, they could not be included in any future risk sets for other cases, even if the NEC episode had resolved.
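The time axis and case definition can be expressed as a small function returning each infant’s exit day (counted from delivery) and case status. This is an illustrative sketch: the function name `follow_up` and its inputs are hypothetical, and the rule that NEC takes precedence over death when both fall on the same day is an assumption not stated in the text.

```python
def follow_up(nec_days, death_day, last_log_day):
    """Return (exit_day, is_case) on a days-from-delivery axis.

    nec_days: days of recorded severe NEC episodes (may be empty).
    death_day: day of death, or None.
    last_log_day: day of the last daily feed-log record, or None.
    """
    first_nec = min(nec_days) if nec_days else None  # later episodes are ignored
    events = [
        (first_nec, "severe NEC"),
        (death_day, "death"),
        (last_log_day, "end of feed log"),
    ]
    observed = [(day, why) for day, why in events if day is not None]
    if not observed:
        raise ValueError("no exit information")
    # min() returns the first minimal item, so NEC wins a same-day tie.
    day, why = min(observed, key=lambda e: e[0])
    return day, why == "severe NEC"
```

An infant with exit day e is then in the risk set for a case diagnosed on day t whenever e >= t and it is not that case; this automatically removes an infant from all later risk sets once it has become a case.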

Risk set definition

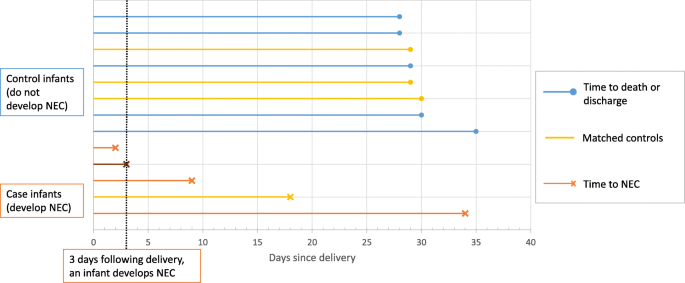

One of the major challenges was identifying an appropriate risk set from which controls could be sampled, while also allowing the analysis to incorporate the time-dependent feed log data and adjust for known confounders. A diagnosis of NEC has a crucial impact on the subsequent feeding of an infant; it was therefore essential that the analysis only included exposure to non-breast milk feeds prior to the onset of NEC. A standard case-control analysis would have produced misleading results in this context, as infants would have been defined as cases if they had experienced NEC at any point before the end of the study period, regardless of the timing of the event in relation to exposure to non-breast milk. A matched nested case-control design allowed us to match an infant with a diagnosis of NEC (case) at a given point in time (days from delivery) to infants with similar characteristics (with respect to other important confounding factors) who had not experienced NEC by the failure time of the case. Figure 1 is a schematic diagram of this process. Each time an outcome event occurred (case), the infants still at risk were eligible to be selected as controls (the risk set). A matching algorithm was used to select a sample of controls with similar characteristics from this risk set. Infants selected as controls could go on to become cases themselves, and could also be included in the risk sets for other cases.

Fig. 1 Schematic diagram illustrating the selection of controls from each risk set. Three days after delivery, an infant develops NEC. At this point, there are 11 infants left in the risk set. The four controls with the closest match are selected, including one infant that becomes a case on day 18.

Selection of matching factors

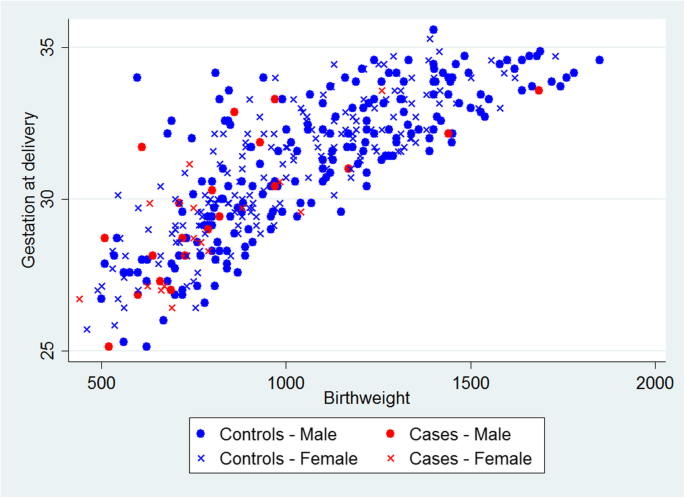

An important consideration was the appropriate selection of matching factors, as well as identifying the optimum mechanism for matching. Sex, gestational age and birth weight were considered clear candidates for matching factors, as they are all associated with the development of NEC. Gestational age and birth weight in particular are both likely to affect the infant’s feeding and thus their exposure to non-breast milk feeds. Because of the strong correlation between gestational age and birth weight, illustrated in Fig. 2, the two were matched on simultaneously. This was achieved by minimising the Mahalanobis distance from the case to prospective controls of the same sex [ 15 ], that is, by selecting the controls closest to the case in gestational age and birth weight while taking into account the correlation between these characteristics.

Fig. 2 Scatterplot of birth weight versus gestational age for infants with NEC (cases) and those without (controls)
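The matching step can be sketched as follows: restrict the risk set to infants of the same sex as the case, rank them by squared Mahalanobis distance on (gestational age, birth weight), and keep the closest k. This is a minimal standard-library sketch, not the authors’ code. The text does not say which sample the covariance matrix was estimated from; here it is assumed to be computed once from the whole cohort, and the dictionary keys (`sex`, `ga`, `bw`) and function names are hypothetical.

```python
from statistics import mean


def inverse_covariance_2d(points):
    """Inverse of the sample covariance of (x, y) pairs, returned as (a, b, c)
    for the symmetric matrix [[a, b], [b, c]]."""
    n = len(points)
    mx = mean(p[0] for p in points)
    my = mean(p[1] for p in points)
    sxx = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)
    det = sxx * syy - sxy ** 2
    if det <= 0:
        raise ValueError("covariance matrix is singular")
    return syy / det, -sxy / det, sxx / det


def mahalanobis_sq(p, q, inv):
    """Squared Mahalanobis distance between two (ga, bw) points."""
    a, b, c = inv
    dx, dy = p[0] - q[0], p[1] - q[1]
    return a * dx * dx + 2 * b * dx * dy + c * dy * dy


def nearest_controls(case, risk_set, inv, k=4):
    """Up to k same-sex infants from the risk set, closest first."""
    target = (case["ga"], case["bw"])
    same_sex = [s for s in risk_set if s["sex"] == case["sex"]]
    return sorted(
        same_sex, key=lambda s: mahalanobis_sq(target, (s["ga"], s["bw"]), inv)
    )[:k]
```

Scaling by the inverse covariance puts gestational age (weeks) and birth weight (grams) on a common footing and down-weights differences that lie along their shared trend, which is why it suits two strongly correlated matching variables.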

Typically, treatment allocation would be incorporated as a matching factor, since in a secondary analysis it is a nuisance factor imposed by the trial design that should be accounted for. However, in this example, ADEPT allocation is associated with the likelihood of exposure, since it directly influences the feeding regime. For example, an infant randomised to early introduction of feeds was more likely to be exposed to non-breast milk feeds in the first 14 days (44%) than an infant randomised to late introduction of feeds (23%). The main trial results also showed no evidence of an association between allocation and the outcome (NEC), so there was concern about the potential for overmatching. Overmatching is caused by inappropriate selection of matching factors (i.e., factors that are not associated with the outcome of interest), which may harm the statistical efficiency of the analysis [ 16 ]. Therefore, we did not include ADEPT allocation as a matching factor, but we conducted analyses both unadjusted and adjusted for trial arm, to examine its impact on the results.

Selection of controls

Another important consideration was the method used to randomly select controls from each risk set for each case. This can be performed with or without replacement, and with the case included in or excluded from the risk set. We chose the recommended option of sampling without replacement and excluding the case from the risk set, which produces the optimal unbiased estimate of relative risk, with greater statistical efficiency [ 17 , 18 ]. Sampling without replacement applies within each risk set; infants could still appear in multiple risk sets and so be selected more than once as a control. We also included future cases of NEC as controls in earlier risk sets, as their exclusion can lead to biased estimates of relative risk [ 19 ].

Number of controls

In standard case-control studies, it has been shown that little statistical efficiency is gained from having more than four matched controls per case [ 20 , 21 ]. Using five controls is only about 4% more efficient than using four, so there is little benefit in using additional controls if a cost is attached, for example taking extra biological samples in a prospective cohort setting. However, gains in statistical efficiency are possible with more than four controls if the probability of exposure among controls is low (< 0.1) [ 4 , 5 ]. Neither consideration applied to this particular analysis, as there were no additional costs involved in using more controls and the prevalence of the defined exposures to non-breast milk was over 20% among infants without a diagnosis of NEC. However, there was a concern that including additional controls, increasingly distant from the case in gestational age and birth weight, might undermine the matching algorithm. Also, increasing the number of controls sampled per case would increase repeated sampling, resulting in a larger number of duplicates in the overall matched control population. This was a particular concern because control duplication was most likely for infants with the lowest birth weights and gestational ages, for whom there is a much smaller pool of control infants to sample from. A small number of infants (with low birth weight and gestational age) would then have been sampled multiple times and carried disproportionate weight in the matched control sample. Therefore, we limited the number of matched controls to four per case.
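The 4% figure can be checked against a commonly quoted approximation: a design with m controls per case has an efficiency of about m/(m + 1) relative to one with unlimited controls. The approximation is an assumption here (it is not stated in the text) and is least reliable when exposure among controls is rare, in line with the caveat above. A short sketch:

```python
from fractions import Fraction


def relative_efficiency(m):
    """Approximate efficiency of m controls per case relative to unlimited controls."""
    return Fraction(m, m + 1)


for m in range(1, 7):
    print(m, f"{float(relative_efficiency(m)):.3f}")

gain = relative_efficiency(5) / relative_efficiency(4)  # (5/6) / (4/5) = 25/24
print(f"5 vs 4 controls: {float(gain - 1):.1%} more efficient")
```

The printed sequence (0.500, 0.667, 0.750, 0.800, 0.833, 0.857) shows the diminishing returns, and the final line reports a gain of 4.2% for a fifth control.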

Statistical analysis

The baseline characteristics of infants with NEC, the matched control group, and all infants with no diagnosis of NEC (non-cases) were compared. Numbers (with percentages) were presented for binary and categorical variables, and means (with standard deviations) or medians (with interquartile range and/or range) for continuous variables. Each case was matched to four controls of the same sex with the smallest Mahalanobis distance based on gestational age and birth weight. Conditional logistic regression was used to estimate, for each predefined exposure, the odds ratio (with 95% confidence interval) for NEC associated with that exposure, comparing cases with their matched controls. Unadjusted odds ratios were calculated, along with estimates adjusted for ADEPT allocation.
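For a single exposure x, conditional logistic regression maximises the product, over matched sets, of exp(βx_case) / Σ_j exp(βx_j), where the sum runs over the case and its controls. The sketch below fits this by Newton-Raphson in plain Python to show what the software is doing; it is illustrative only. It handles one covariate, so it cannot reproduce the adjustment for trial arm, and it assumes the maximum likelihood estimate is finite (it diverges if, for example, no matched set has an unexposed case with an exposed control). In practice an established implementation, such as `clogit` in the R survival package or `ConditionalLogit` in statsmodels, would be used.

```python
import math


def _score_info(beta, matched_sets):
    """Score and observed information of the conditional log-likelihood."""
    score = info = 0.0
    for xs in matched_sets:  # xs[0] is the case, xs[1:] its controls
        w = [math.exp(beta * x) for x in xs]
        total = sum(w)
        mean_x = sum(wi * x for wi, x in zip(w, xs)) / total
        mean_x2 = sum(wi * x * x for wi, x in zip(w, xs)) / total
        score += xs[0] - mean_x        # concordant sets contribute zero
        info += mean_x2 - mean_x ** 2  # weighted variance within the set
    return score, info


def clogit_one_covariate(matched_sets, max_iter=50, tol=1e-10):
    """Return (odds ratio, (lower, upper) Wald 95% CI) for one exposure."""
    beta = 0.0
    for _ in range(max_iter):
        score, info = _score_info(beta, matched_sets)
        if info <= 0:
            raise ValueError("no informative matched sets")
        step = score / info
        beta += step
        if abs(step) < tol:
            break
    _, info = _score_info(beta, matched_sets)  # information at the final estimate
    se = 1 / math.sqrt(info)
    return math.exp(beta), (math.exp(beta - 1.96 * se), math.exp(beta + 1.96 * se))
```

For 1:1 pairs with a binary exposure the estimate reduces to the familiar ratio of discordant pairs (case exposed and control unexposed, over the reverse), which is a convenient check; the same function accepts 1:4 sets or sets of unequal size.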

The results of the full analysis, including the application of this method to explore the relationship between feed type and other clinically relevant outcomes, are reported in a separate clinical paper (in preparation). Of the 404 infants randomised to ADEPT, 398 were included in this analysis (1 infant was randomised in error, 1 set of parents withdrew consent, 3 infants had no daily feed log data and for 1 infant the severity of NEC was unknown). There were 35 cases of severe NEC and 363 infants without a diagnosis of severe NEC (non-cases). Of the 140 matched controls sampled from the risk sets, 109 were unique, 31 were sampled more than once, and 8 had a subsequent diagnosis of severe NEC.

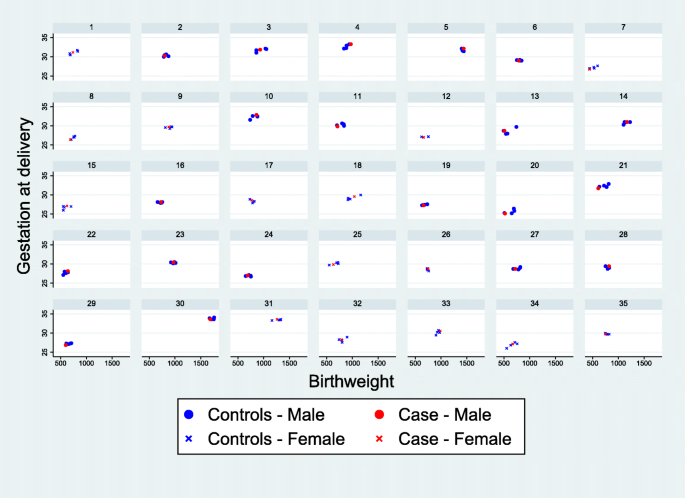

The baseline characteristics of infants with severe NEC (cases) and their matched controls are shown in Table 1, alongside the characteristics of infants without a diagnosis of severe NEC (non-cases). The matching algorithm produced a well-matched set of controls with respect to most of these characteristics. There was, however, a slightly higher proportion of infants with the lowest birth weights (< 750 g) among the cases than among the matched controls (49% vs 38%). The only other factors to show a noticeable difference between cases and matched controls were maternal hypertension (37% vs 49%) and ventilation at trial entry (6% vs 21%), neither of which has previously been identified as a risk factor for NEC. Figure 3 shows scatter plots of birth weight and gestational age for the 35 individual cases of NEC and their matched controls, providing a visual representation of the matching.

Fig. 3 Scatterplots showing the matched cases and controls for each case of severe NEC. Each panel contains a separate case of NEC and its matched controls.

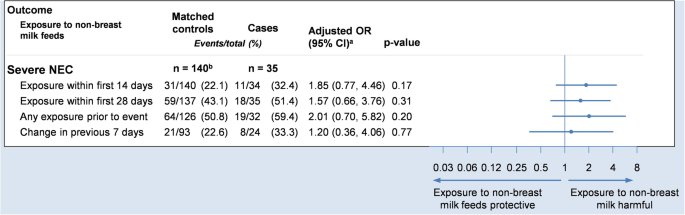

The main results of the adjusted analysis are presented in Fig. 4. Unadjusted analyses are included in Table A1 in the supplementary material, alongside a post hoc sensitivity analysis that additionally includes covariate adjustment for gestational age and birth weight. While the study did not identify any statistically significant associations between feed type and severe NEC, the findings were consistent with the a priori hypothesis that exposure to non-breast milk feeds is associated with an increased risk of NEC. In addition, the study identified some potential trends in the association of feed type with other important outcomes that are worthy of further investigation.

Fig. 4 Forest plot showing the adjusted odds ratios for severe NEC by exposure. Odds ratios are adjusted for sex, gestational age and birth weight (via matching) and trial arm (via covariate adjustment). (a) Odds ratio and 95% confidence interval. (b) 109 unique controls.

Employing a matched nested case-control design for this secondary analysis of clinical trial data overcame many of the limitations of a standard case-control analysis. We were able to select controls from the same population as the cases, thus avoiding selection bias. Using matching, we created a sample of controls comparable to the cases with respect to important clinical characteristics and confounding factors. The method allowed us to reliably investigate the temporal relationship between feed type and severe NEC, since the exposure data were collected prospectively, before the outcome occurred. We were also able to investigate the relationship between feed type and several other important outcomes, such as sepsis. A standard case-control analysis is typically based on recall or retrospective data collection once the outcome is known, which can introduce recall bias. Had we performed a simple comparison between cases and non-cases of NEC without taking into account the timing of the exposure, the results would have been misleading. Another advantage of the matched nested case-control design was that cases could be matched to controls at the time of the outcome event, so that they were of comparable ages. The methodology is especially powerful when the timing of the exposure matters, particularly for time-dependent exposures such as the one studied here.

While the efficient use of existing trial data has a number of benefits, there are of course disadvantages to using data that were collected for another primary purpose. For instance, it is possible that such data are less robustly collected and checked. As a result, researchers may be more likely to encounter participants with either invalid or missing data.

In ADEPT, for example, some of the additional feed log data were never intended to be used to answer clinical research questions; rather, their purpose was to monitor participants’ adherence to the intervention or to provide added background information. In this study, it was necessary to make assumptions about missing data to fill small gaps in the daily feed logs. Researchers should ensure that such assumptions are fully documented in the statistical analysis plan in advance and are determined blind to the outcome. Another option is to plan these sub-studies at the design phase; however, there needs to be a balance between the potential burden of additional data collection and having a streamlined trial that is able to answer the primary research question.

Another limitation of the methodology is that it is only possible to match on known confounders. This is in contrast to a randomised controlled trial, in which it is possible to balance unknown and unmeasured baseline characteristics. As a consequence, particular care must be taken both in selecting important matching factors and in avoiding overmatching.

The methodology allows for participants to be selected as controls multiple times, so there is the possibility that systematic duplication of a specific subset of participants (e.g. infants with a lower birthweight and smaller gestational age) could lead to a small number of participants disproportionately influencing the results. Within this study, we conducted sensitivity analyses with fewer controls, and were able to demonstrate that this had a minimal impact on the findings.

We have demonstrated how a matched nested case-control design can be embedded within an RCT to identify credible associations in a secondary analysis of clinical trial data where the exposure of interest was not randomised. We planned this study after the clinical trial data had already been collected, but it could have been built in seamlessly as a SWAT (Study Within A Trial) during the trial design phase, to ensure that all relevant data were collected in advance with minimal effort. This method has several advantages over a standard case-control design and offers the potential to make reliable inferences in scenarios where ethical or practical issues preclude the use of an RCT. Moreover, because of the flexibility of the methodology in terms of design and analysis, the matched nested case-control design could reasonably be applied to a wide range of challenging research questions. There is an abundance of high-quality, large prospective studies and clinical trials with well-characterised cohorts in which this methodology could be applied to investigate causal relationships, adding considerable value for money to the original studies.

Availability of data and materials

ADEPT trial data are available upon reasonable request, subject to the NPEU Data Sharing Policy.

Abbreviations

- ADEPT: Abnormal Doppler Enteral Prescription Trial

- RCT: Randomised controlled trial

- NEC: Necrotising enterocolitis

- CPAP: Continuous positive airway pressure

- UAC: Umbilical artery catheter

- UVC: Umbilical venous catheter

- SWAT: Study within a trial

Breslow N. Design and analysis of case-control studies. Annu Rev Public Health. 1982;3(1):29–54.


Mantel N. Synthetic retrospective studies and related topics. Biometrics. 1973;29(3):479–86.

Breslow NE. Statistics in epidemiology: the case-control study. J Am Stat Assoc. 1996;91(433):14–28.

Breslow NE, Lubin J, Marek P, Langholz B. Multiplicative models and cohort analysis. J Am Stat Assoc. 1983;78(381):1–12.


Goldstein L, Langholz B. Asymptotic theory for nested case-control sampling in the Cox regression model. Ann Stat. 1992;20(4):1903–28.

Ernster VL. Nested Case-Control Studies. Prev Med. 1994;23(5):587–90. https://doi.org/10.1006/pmed.1994.1093 .


Essebag V, Genest J Jr, Suissa S, Pilote L. The nested case-control study in cardiology. Am Heart J. 2003;146(4):581–90. https://doi.org/10.1016/S0002-8703(03)00512-X .


Nieuwlaat R, Connolly BJ, Hubers LM, Cuddy SM, Eikelboom JW, Yusuf S, et al. Quality of individual INR control and the risk of stroke and bleeding events in atrial fibrillation patients: a nested case control analysis of the ACTIVE W study. Thromb Res. 2012;129(6):715–9.

Fox GJ, Nhung NV, Loi NT, Sy DN, Britton WJ, Marks GB. Barriers to adherence with tuberculosis contact investigation in six provinces of Vietnam: a nested case–control study. BMC Infect Dis. 2015;15(1):103.

Mattson CL, Bailey RC, Agot K, Ndinya-Achola J, Moses S. A nested case-control study of sexual practices and risk factors for prevalent HIV-1 infection among young men in Kisumu, Kenya. Sex Transm Dis. 2007;34(10):731.


Leaf A, Dorling J, Kempley S, McCormick K, Mannix P, Linsell L, et al. Early or delayed enteral feeding for preterm growth-restricted infants: a randomized trial. Pediatrics. 2012;129(5):e1260–e8. https://doi.org/10.1542/peds.2011-2379 .

Lucas A, Cole TJ. Breast milk and neonatal necrotising enterocolitis. Lancet. 1990;336(8730):1519–23.

McGuire W, Anthony MY. Donor human milk versus formula for preventing necrotising enterocolitis in preterm infants: systematic review. Arch Dis Child Fetal Neonatal Ed. 2003;88(1):F11–F4.

Walsh MC, Kliegman RM. Necrotizing enterocolitis: treatment based on staging criteria. Pediatr Clin N Am. 1986;33:179–201.

Mahalanobis PC. On the generalized distance in statistics. Proceedings of the National Institute of Science of India. 1936;2:49–55.

Brookmeyer R, Liang K, Linet M. Matched case-control designs and overmatched analyses. Am J Epidemiol. 1986;124(4):693–701.

Lubin JH. Extensions of analytic methods for nested and population-based incident case-control studies. J Chronic Dis. 1986;39(5):379–88.

Robins JM, Gail MH, Lubin JH. More on “Biased selection of controls for case-control analyses of cohort studies”. Biometrics. 1986;42(2):293–9.

Lubin JH, Gail MH. Biased selection of controls for case-control analyses of cohort studies. Biometrics. 1984;40(1):63–75.

Ury HK. Efficiency of case-control studies with multiple controls per case: continuous or dichotomous data. Biometrics. 1975;31(3):643–9.

Gail M, Williams R, Byar DP, Brown C. How many controls? J Chronic Dis. 1976;29(11):723–31.

Meeting abstracts from the 5th International Clinical Trials Methodology Conference (ICTMC 2019). Trials. 2019;20(Suppl 1):579. Brighton, UK, 06–09 October 2019. https://doi.org/10.1186/s13063-019-3688-6.


Acknowledgements

This work was presented at the International Clinical Trials Methodology Conference (ICTMC) in 2019, and the abstract is published in Trials [ 22 ].

This work was supported by Action Medical Research [Grant number GN2506]. The funder had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information

Authors and affiliations

Nottingham Clinical Trials Unit, University of Nottingham, Nottingham, UK

Christopher Partlett

National Perinatal Epidemiology Unit, Nuffield Department of Population Health, University of Oxford, Oxford, UK

Christopher Partlett, Alison Leaf, Edmund Juszczak & Louise Linsell

University Surgery Unit, Faculty of Medicine, University of Southampton, Southampton, UK

Nigel J. Hall

Department of Child Health, Faculty of Medicine, University of Southampton, Southampton, UK

Alison Leaf


Contributions

NH, AL, EJ and LL conceived the project. CP performed the statistical analyses under the supervision of LL and EJ. CP and LL drafted the manuscript, and EJ, AL and NH critically reviewed it. All authors were involved in the interpretation of results. All authors read and approved the final manuscript.

Corresponding author

Correspondence to Christopher Partlett.

Ethics declarations

Ethics approval and consent to participate

No ethical approval was required for this study, since it used only previously collected, fully anonymised research data.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1

Table A1 Association between exposures and the development of severe NEC. Each case is matched to 4 controls of the same sex with the smallest Mahalanobis distance based on gestational age and birth weight.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ . The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated in a credit line to the data.


About this article

Cite this article

Partlett, C., Hall, N.J., Leaf, A. et al. Application of the matched nested case-control design to the secondary analysis of trial data. BMC Med Res Methodol 20 , 117 (2020). https://doi.org/10.1186/s12874-020-01007-w


Received: 03 December 2019

Accepted: 05 May 2020

Published: 14 May 2020

DOI: https://doi.org/10.1186/s12874-020-01007-w


- Preterm infants

- Neonatology

- Statistical methods

- Nested case-control

BMC Medical Research Methodology

ISSN: 1471-2288


Incidence density matching with a simple SAS computer program

Affiliation

- 1 Department of Community Health, Wellington School of Medicine, Wellington Hospital, New Zealand.

- PMID: 2621036

- DOI: 10.1093/ije/18.4.981

Incidence density matching is the method of choice for selecting controls in nested case-control studies, but its use has been limited by its computational complexity. We describe incidence density matching in nested case-control studies with a simple and flexible SAS computer program. The program contains no restrictions on the number of matching factors, although it is usually only necessary to match on age (in incidence density fashion) and on gender. The program is illustrated with data from an historical cohort study of the mortality experience of workers at an asbestos textile manufacturing plant.

Publication types

- Research Support, U.S. Gov't, P.H.S.

MeSH terms

- Case-Control Studies*

- Cohort Studies

- Logistic Models

- Programming Languages*

- Proportional Hazards Models

- Sampling Studies*


Matched case-control studies control for confounding by introducing stratification in the design phase of a study. Cases are individually matched to a set of controls (1:n ratio); n can vary from 1 to the desired number of controls for each case. The number of matched controls may vary depending on how many available controls meet the matching criteria.

An example of a nested case-control study design: a nested case-control design with m = 2 controls per case, drawn from a small cohort of ten subjects. Three subjects (i = 1, 2, 3) failed, with no ties, before the remaining seven subjects (i = 4, …, 10) were censored. Subjects 2 and 4 were selected as the controls from the risk set at ...

The nested case-control study design is an extremely powerful approach that provides an enormous reduction in costs, data collection efforts, and analysis compared to a full study cohort approach. The nested case-control study achieves all this with a relatively minor loss in statistical efficiency. The nested case-control study


The analysis of nested case-control studies uses a proportional hazards model and a modification to the partial likelihood used in full-cohort studies, giving estimates of hazard ratios. Extensions to other survival models are possible. In the standard design, controls are selected randomly from the risk set for each case; however, more ...
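For reference, the two estimators contrasted in the first paper (Thomas, 1977; Samuelsen, 1997) are usually written as follows; this is a sketch of the standard notation, not a derivation, and tied failure times are ignored. With D cases failing at times t_1 < … < t_D, covariates z, full risk set R(t_i), and sampled risk set \tilde{R}_i made up of case i and its m controls, the first line is Thomas’s conditional (partial) likelihood. Samuelsen’s inverse probability weighting instead uses every sampled subject still at risk at t_i, weighted by the inverse of p_j, the probability that subject j is ever sampled. Here t_j is subject j’s exit time, n(t_i) is the number at risk at t_i, \mathcal{S} is the set of subjects sampled at least once as a case or control, and p_j = 1 for cases.

$$
\begin{aligned}
L_{\mathrm{T}}(\beta) &= \prod_{i=1}^{D} \frac{\exp(\beta^{\top} z_i)}{\sum_{j \in \tilde{R}_i} \exp(\beta^{\top} z_j)}, \\
p_j &= 1 - \prod_{i:\, t_i \le t_j,\; j \in R(t_i),\; j \ne i} \left(1 - \frac{m}{n(t_i) - 1}\right), \\
L_{\mathrm{S}}(\beta) &= \prod_{i=1}^{D} \frac{\exp(\beta^{\top} z_i)}{\sum_{j \in R(t_i) \cap \mathcal{S}} p_j^{-1} \exp(\beta^{\top} z_j)}.
\end{aligned}
$$

Each factor in the first line has the same form as the matched-set contribution in conditional logistic regression, which is why the two analyses coincide for risk-set-sampled data.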
