Have a language expert improve your writing

Run a free plagiarism check in 10 minutes, generate accurate citations for free.

- Knowledge Base

Methodology

- Systematic Review | Definition, Example, & Guide

Systematic Review | Definition, Example & Guide

Published on June 15, 2022 by Shaun Turney . Revised on November 20, 2023.

A systematic review is a type of review that uses repeatable methods to find, select, and synthesize all available evidence. It answers a clearly formulated research question and explicitly states the methods used to arrive at the answer.

They answered the question “What is the effectiveness of probiotics in reducing eczema symptoms and improving quality of life in patients with eczema?”

In this context, a probiotic is a health product that contains live microorganisms and is taken by mouth. Eczema is a common skin condition that causes red, itchy skin.

Table of contents

What is a systematic review, systematic review vs. meta-analysis, systematic review vs. literature review, systematic review vs. scoping review, when to conduct a systematic review, pros and cons of systematic reviews, step-by-step example of a systematic review, other interesting articles, frequently asked questions about systematic reviews.

A review is an overview of the research that’s already been completed on a topic.

What makes a systematic review different from other types of reviews is that the research methods are designed to reduce bias . The methods are repeatable, and the approach is formal and systematic:

- Formulate a research question

- Develop a protocol

- Search for all relevant studies

- Apply the selection criteria

- Extract the data

- Synthesize the data

- Write and publish a report

Although multiple sets of guidelines exist, the Cochrane Handbook for Systematic Reviews is among the most widely used. It provides detailed guidelines on how to complete each step of the systematic review process.

Systematic reviews are most commonly used in medical and public health research, but they can also be found in other disciplines.

Systematic reviews typically answer their research question by synthesizing all available evidence and evaluating the quality of the evidence. Synthesizing means bringing together different information to tell a single, cohesive story. The synthesis can be narrative ( qualitative ), quantitative , or both.

Receive feedback on language, structure, and formatting

Professional editors proofread and edit your paper by focusing on:

- Academic style

- Vague sentences

- Style consistency

See an example

Systematic reviews often quantitatively synthesize the evidence using a meta-analysis . A meta-analysis is a statistical analysis, not a type of review.

A meta-analysis is a technique to synthesize results from multiple studies. It’s a statistical analysis that combines the results of two or more studies, usually to estimate an effect size .

A literature review is a type of review that uses a less systematic and formal approach than a systematic review. Typically, an expert in a topic will qualitatively summarize and evaluate previous work, without using a formal, explicit method.

Although literature reviews are often less time-consuming and can be insightful or helpful, they have a higher risk of bias and are less transparent than systematic reviews.

Similar to a systematic review, a scoping review is a type of review that tries to minimize bias by using transparent and repeatable methods.

However, a scoping review isn’t a type of systematic review. The most important difference is the goal: rather than answering a specific question, a scoping review explores a topic. The researcher tries to identify the main concepts, theories, and evidence, as well as gaps in the current research.

Sometimes scoping reviews are an exploratory preparation step for a systematic review, and sometimes they are a standalone project.

A systematic review is a good choice of review if you want to answer a question about the effectiveness of an intervention , such as a medical treatment.

To conduct a systematic review, you’ll need the following:

- A precise question , usually about the effectiveness of an intervention. The question needs to be about a topic that’s previously been studied by multiple researchers. If there’s no previous research, there’s nothing to review.

- If you’re doing a systematic review on your own (e.g., for a research paper or thesis ), you should take appropriate measures to ensure the validity and reliability of your research.

- Access to databases and journal archives. Often, your educational institution provides you with access.

- Time. A professional systematic review is a time-consuming process: it will take the lead author about six months of full-time work. If you’re a student, you should narrow the scope of your systematic review and stick to a tight schedule.

- Bibliographic, word-processing, spreadsheet, and statistical software . For example, you could use EndNote, Microsoft Word, Excel, and SPSS.

A systematic review has many pros .

- They minimize research bias by considering all available evidence and evaluating each study for bias.

- Their methods are transparent , so they can be scrutinized by others.

- They’re thorough : they summarize all available evidence.

- They can be replicated and updated by others.

Systematic reviews also have a few cons .

- They’re time-consuming .

- They’re narrow in scope : they only answer the precise research question.

The 7 steps for conducting a systematic review are explained with an example.

Step 1: Formulate a research question

Formulating the research question is probably the most important step of a systematic review. A clear research question will:

- Allow you to more effectively communicate your research to other researchers and practitioners

- Guide your decisions as you plan and conduct your systematic review

A good research question for a systematic review has four components, which you can remember with the acronym PICO :

- Population(s) or problem(s)

- Intervention(s)

- Comparison(s)

You can rearrange these four components to write your research question:

- What is the effectiveness of I versus C for O in P ?

Sometimes, you may want to include a fifth component, the type of study design . In this case, the acronym is PICOT .

- Type of study design(s)

- The population of patients with eczema

- The intervention of probiotics

- In comparison to no treatment, placebo , or non-probiotic treatment

- The outcome of changes in participant-, parent-, and doctor-rated symptoms of eczema and quality of life

- Randomized control trials, a type of study design

Their research question was:

- What is the effectiveness of probiotics versus no treatment, a placebo, or a non-probiotic treatment for reducing eczema symptoms and improving quality of life in patients with eczema?

Step 2: Develop a protocol

A protocol is a document that contains your research plan for the systematic review. This is an important step because having a plan allows you to work more efficiently and reduces bias.

Your protocol should include the following components:

- Background information : Provide the context of the research question, including why it’s important.

- Research objective (s) : Rephrase your research question as an objective.

- Selection criteria: State how you’ll decide which studies to include or exclude from your review.

- Search strategy: Discuss your plan for finding studies.

- Analysis: Explain what information you’ll collect from the studies and how you’ll synthesize the data.

If you’re a professional seeking to publish your review, it’s a good idea to bring together an advisory committee . This is a group of about six people who have experience in the topic you’re researching. They can help you make decisions about your protocol.

It’s highly recommended to register your protocol. Registering your protocol means submitting it to a database such as PROSPERO or ClinicalTrials.gov .

Step 3: Search for all relevant studies

Searching for relevant studies is the most time-consuming step of a systematic review.

To reduce bias, it’s important to search for relevant studies very thoroughly. Your strategy will depend on your field and your research question, but sources generally fall into these four categories:

- Databases: Search multiple databases of peer-reviewed literature, such as PubMed or Scopus . Think carefully about how to phrase your search terms and include multiple synonyms of each word. Use Boolean operators if relevant.

- Handsearching: In addition to searching the primary sources using databases, you’ll also need to search manually. One strategy is to scan relevant journals or conference proceedings. Another strategy is to scan the reference lists of relevant studies.

- Gray literature: Gray literature includes documents produced by governments, universities, and other institutions that aren’t published by traditional publishers. Graduate student theses are an important type of gray literature, which you can search using the Networked Digital Library of Theses and Dissertations (NDLTD) . In medicine, clinical trial registries are another important type of gray literature.

- Experts: Contact experts in the field to ask if they have unpublished studies that should be included in your review.

At this stage of your review, you won’t read the articles yet. Simply save any potentially relevant citations using bibliographic software, such as Scribbr’s APA or MLA Generator .

- Databases: EMBASE, PsycINFO, AMED, LILACS, and ISI Web of Science

- Handsearch: Conference proceedings and reference lists of articles

- Gray literature: The Cochrane Library, the metaRegister of Controlled Trials, and the Ongoing Skin Trials Register

- Experts: Authors of unpublished registered trials, pharmaceutical companies, and manufacturers of probiotics

Step 4: Apply the selection criteria

Applying the selection criteria is a three-person job. Two of you will independently read the studies and decide which to include in your review based on the selection criteria you established in your protocol . The third person’s job is to break any ties.

To increase inter-rater reliability , ensure that everyone thoroughly understands the selection criteria before you begin.

If you’re writing a systematic review as a student for an assignment, you might not have a team. In this case, you’ll have to apply the selection criteria on your own; you can mention this as a limitation in your paper’s discussion.

You should apply the selection criteria in two phases:

- Based on the titles and abstracts : Decide whether each article potentially meets the selection criteria based on the information provided in the abstracts.

- Based on the full texts: Download the articles that weren’t excluded during the first phase. If an article isn’t available online or through your library, you may need to contact the authors to ask for a copy. Read the articles and decide which articles meet the selection criteria.

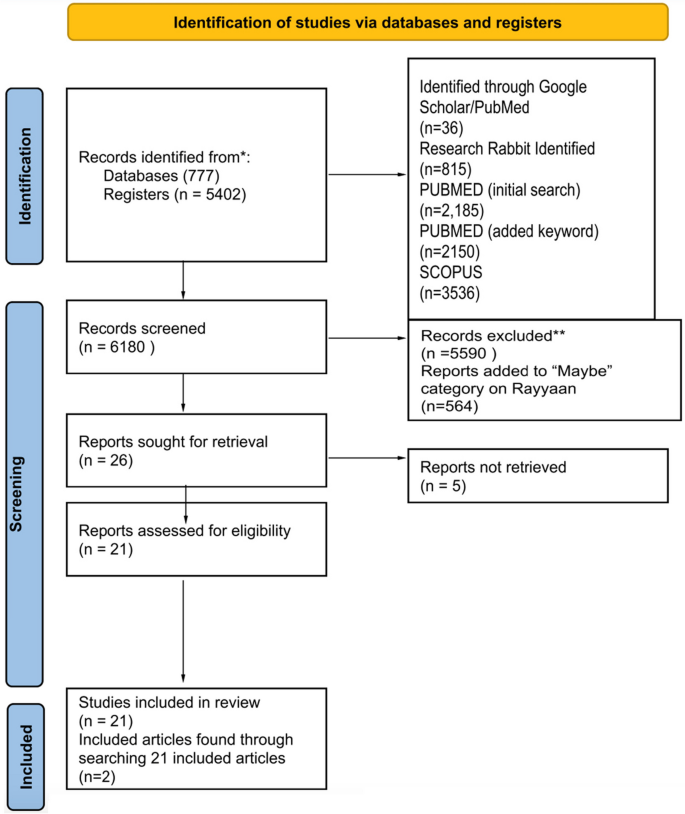

It’s very important to keep a meticulous record of why you included or excluded each article. When the selection process is complete, you can summarize what you did using a PRISMA flow diagram .

Next, Boyle and colleagues found the full texts for each of the remaining studies. Boyle and Tang read through the articles to decide if any more studies needed to be excluded based on the selection criteria.

When Boyle and Tang disagreed about whether a study should be excluded, they discussed it with Varigos until the three researchers came to an agreement.

Step 5: Extract the data

Extracting the data means collecting information from the selected studies in a systematic way. There are two types of information you need to collect from each study:

- Information about the study’s methods and results . The exact information will depend on your research question, but it might include the year, study design , sample size, context, research findings , and conclusions. If any data are missing, you’ll need to contact the study’s authors.

- Your judgment of the quality of the evidence, including risk of bias .

You should collect this information using forms. You can find sample forms in The Registry of Methods and Tools for Evidence-Informed Decision Making and the Grading of Recommendations, Assessment, Development and Evaluations Working Group .

Extracting the data is also a three-person job. Two people should do this step independently, and the third person will resolve any disagreements.

They also collected data about possible sources of bias, such as how the study participants were randomized into the control and treatment groups.

Step 6: Synthesize the data

Synthesizing the data means bringing together the information you collected into a single, cohesive story. There are two main approaches to synthesizing the data:

- Narrative ( qualitative ): Summarize the information in words. You’ll need to discuss the studies and assess their overall quality.

- Quantitative : Use statistical methods to summarize and compare data from different studies. The most common quantitative approach is a meta-analysis , which allows you to combine results from multiple studies into a summary result.

Generally, you should use both approaches together whenever possible. If you don’t have enough data, or the data from different studies aren’t comparable, then you can take just a narrative approach. However, you should justify why a quantitative approach wasn’t possible.

Boyle and colleagues also divided the studies into subgroups, such as studies about babies, children, and adults, and analyzed the effect sizes within each group.

Step 7: Write and publish a report

The purpose of writing a systematic review article is to share the answer to your research question and explain how you arrived at this answer.

Your article should include the following sections:

- Abstract : A summary of the review

- Introduction : Including the rationale and objectives

- Methods : Including the selection criteria, search method, data extraction method, and synthesis method

- Results : Including results of the search and selection process, study characteristics, risk of bias in the studies, and synthesis results

- Discussion : Including interpretation of the results and limitations of the review

- Conclusion : The answer to your research question and implications for practice, policy, or research

To verify that your report includes everything it needs, you can use the PRISMA checklist .

Once your report is written, you can publish it in a systematic review database, such as the Cochrane Database of Systematic Reviews , and/or in a peer-reviewed journal.

In their report, Boyle and colleagues concluded that probiotics cannot be recommended for reducing eczema symptoms or improving quality of life in patients with eczema. Note Generative AI tools like ChatGPT can be useful at various stages of the writing and research process and can help you to write your systematic review. However, we strongly advise against trying to pass AI-generated text off as your own work.

If you want to know more about statistics , methodology , or research bias , make sure to check out some of our other articles with explanations and examples.

- Student’s t -distribution

- Normal distribution

- Null and Alternative Hypotheses

- Chi square tests

- Confidence interval

- Quartiles & Quantiles

- Cluster sampling

- Stratified sampling

- Data cleansing

- Reproducibility vs Replicability

- Peer review

- Prospective cohort study

Research bias

- Implicit bias

- Cognitive bias

- Placebo effect

- Hawthorne effect

- Hindsight bias

- Affect heuristic

- Social desirability bias

A literature review is a survey of scholarly sources (such as books, journal articles, and theses) related to a specific topic or research question .

It is often written as part of a thesis, dissertation , or research paper , in order to situate your work in relation to existing knowledge.

A literature review is a survey of credible sources on a topic, often used in dissertations , theses, and research papers . Literature reviews give an overview of knowledge on a subject, helping you identify relevant theories and methods, as well as gaps in existing research. Literature reviews are set up similarly to other academic texts , with an introduction , a main body, and a conclusion .

An annotated bibliography is a list of source references that has a short description (called an annotation ) for each of the sources. It is often assigned as part of the research process for a paper .

A systematic review is secondary research because it uses existing research. You don’t collect new data yourself.

Cite this Scribbr article

If you want to cite this source, you can copy and paste the citation or click the “Cite this Scribbr article” button to automatically add the citation to our free Citation Generator.

Turney, S. (2023, November 20). Systematic Review | Definition, Example & Guide. Scribbr. Retrieved April 9, 2024, from https://www.scribbr.com/methodology/systematic-review/

Is this article helpful?

Shaun Turney

Other students also liked, how to write a literature review | guide, examples, & templates, how to write a research proposal | examples & templates, what is critical thinking | definition & examples, what is your plagiarism score.

Systematic Reviews

- Introduction

- Guidelines and procedures

- Management tools

- Define the question

- Check the topic

- Determine inclusion/exclusion criteria

- Develop a protocol

- Identify keywords

- Databases and search strategies

- Grey literature

- Manage and organise

- Screen & Select

- Locate full text

- Extract data

Example reviews

- Examples of systematic reviews

- Accessing help This link opens in a new window

- Systematic Style Reviews Guide This link opens in a new window

Please choose the tab below for your discipline to see relevant examples.

For more information about how to conduct and write reviews, please see the Guidelines section of this guide.

- Health & Medicine

- Social sciences

- Vibration and bubbles: a systematic review of the effects of helicopter retrieval on injured divers. (2018).

- Nicotine effects on exercise performance and physiological responses in nicotine‐naïve individuals: a systematic review. (2018).

- Association of total white cell count with mortality and major adverse events in patients with peripheral arterial disease: A systematic review. (2014).

- Do MOOCs contribute to student equity and social inclusion? A systematic review 2014–18. (2020).

- Interventions in Foster Family Care: A Systematic Review. (2020).

- Determinants of happiness among healthcare professionals between 2009 and 2019: a systematic review. (2020).

- Systematic review of the outcomes and trade-offs of ten types of decarbonization policy instruments. (2021).

- A systematic review on Asian's farmers' adaptation practices towards climate change. (2018).

- Are concentrations of pollutants in sharks, rays and skates (Elasmobranchii) a cause for concern? A systematic review. (2020).

- << Previous: Write

- Next: Publish >>

- Last Updated: Jan 16, 2024 10:23 AM

- URL: https://libguides.jcu.edu.au/systematic-review

- - Google Chrome

Intended for healthcare professionals

- Access provided by Google Indexer

- My email alerts

- BMA member login

- Username * Password * Forgot your log in details? Need to activate BMA Member Log In Log in via OpenAthens Log in via your institution

Search form

- Advanced search

- Search responses

- Search blogs

- The PRISMA 2020...

The PRISMA 2020 statement: an updated guideline for reporting systematic reviews

PRISMA 2020 explanation and elaboration: updated guidance and exemplars for reporting systematic reviews

- Related content

- Peer review

- Matthew J Page , senior research fellow 1 ,

- Joanne E McKenzie , associate professor 1 ,

- Patrick M Bossuyt , professor 2 ,

- Isabelle Boutron , professor 3 ,

- Tammy C Hoffmann , professor 4 ,

- Cynthia D Mulrow , professor 5 ,

- Larissa Shamseer , doctoral student 6 ,

- Jennifer M Tetzlaff , research product specialist 7 ,

- Elie A Akl , professor 8 ,

- Sue E Brennan , senior research fellow 1 ,

- Roger Chou , professor 9 ,

- Julie Glanville , associate director 10 ,

- Jeremy M Grimshaw , professor 11 ,

- Asbjørn Hróbjartsson , professor 12 ,

- Manoj M Lalu , associate scientist and assistant professor 13 ,

- Tianjing Li , associate professor 14 ,

- Elizabeth W Loder , professor 15 ,

- Evan Mayo-Wilson , associate professor 16 ,

- Steve McDonald , senior research fellow 1 ,

- Luke A McGuinness , research associate 17 ,

- Lesley A Stewart , professor and director 18 ,

- James Thomas , professor 19 ,

- Andrea C Tricco , scientist and associate professor 20 ,

- Vivian A Welch , associate professor 21 ,

- Penny Whiting , associate professor 17 ,

- David Moher , director and professor 22

- 1 School of Public Health and Preventive Medicine, Monash University, Melbourne, Australia

- 2 Department of Clinical Epidemiology, Biostatistics and Bioinformatics, Amsterdam University Medical Centres, University of Amsterdam, Amsterdam, Netherlands

- 3 Université de Paris, Centre of Epidemiology and Statistics (CRESS), Inserm, F 75004 Paris, France

- 4 Institute for Evidence-Based Healthcare, Faculty of Health Sciences and Medicine, Bond University, Gold Coast, Australia

- 5 University of Texas Health Science Center at San Antonio, San Antonio, Texas, USA; Annals of Internal Medicine

- 6 Knowledge Translation Program, Li Ka Shing Knowledge Institute, Toronto, Canada; School of Epidemiology and Public Health, Faculty of Medicine, University of Ottawa, Ottawa, Canada

- 7 Evidence Partners, Ottawa, Canada

- 8 Clinical Research Institute, American University of Beirut, Beirut, Lebanon; Department of Health Research Methods, Evidence, and Impact, McMaster University, Hamilton, Ontario, Canada

- 9 Department of Medical Informatics and Clinical Epidemiology, Oregon Health & Science University, Portland, Oregon, USA

- 10 York Health Economics Consortium (YHEC Ltd), University of York, York, UK

- 11 Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, Canada; School of Epidemiology and Public Health, University of Ottawa, Ottawa, Canada; Department of Medicine, University of Ottawa, Ottawa, Canada

- 12 Centre for Evidence-Based Medicine Odense (CEBMO) and Cochrane Denmark, Department of Clinical Research, University of Southern Denmark, Odense, Denmark; Open Patient data Exploratory Network (OPEN), Odense University Hospital, Odense, Denmark

- 13 Department of Anesthesiology and Pain Medicine, The Ottawa Hospital, Ottawa, Canada; Clinical Epidemiology Program, Blueprint Translational Research Group, Ottawa Hospital Research Institute, Ottawa, Canada; Regenerative Medicine Program, Ottawa Hospital Research Institute, Ottawa, Canada

- 14 Department of Ophthalmology, School of Medicine, University of Colorado Denver, Denver, Colorado, United States; Department of Epidemiology, Johns Hopkins Bloomberg School of Public Health, Baltimore, Maryland, USA

- 15 Division of Headache, Department of Neurology, Brigham and Women's Hospital, Harvard Medical School, Boston, Massachusetts, USA; Head of Research, The BMJ , London, UK

- 16 Department of Epidemiology and Biostatistics, Indiana University School of Public Health-Bloomington, Bloomington, Indiana, USA

- 17 Population Health Sciences, Bristol Medical School, University of Bristol, Bristol, UK

- 18 Centre for Reviews and Dissemination, University of York, York, UK

- 19 EPPI-Centre, UCL Social Research Institute, University College London, London, UK

- 20 Li Ka Shing Knowledge Institute of St. Michael's Hospital, Unity Health Toronto, Toronto, Canada; Epidemiology Division of the Dalla Lana School of Public Health and the Institute of Health Management, Policy, and Evaluation, University of Toronto, Toronto, Canada; Queen's Collaboration for Health Care Quality Joanna Briggs Institute Centre of Excellence, Queen's University, Kingston, Canada

- 21 Methods Centre, Bruyère Research Institute, Ottawa, Ontario, Canada; School of Epidemiology and Public Health, Faculty of Medicine, University of Ottawa, Ottawa, Canada

- 22 Centre for Journalology, Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, Canada; School of Epidemiology and Public Health, Faculty of Medicine, University of Ottawa, Ottawa, Canada

- Correspondence to: M J Page matthew.page{at}monash.edu

- Accepted 4 January 2021

The Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) statement, published in 2009, was designed to help systematic reviewers transparently report why the review was done, what the authors did, and what they found. Over the past decade, advances in systematic review methodology and terminology have necessitated an update to the guideline. The PRISMA 2020 statement replaces the 2009 statement and includes new reporting guidance that reflects advances in methods to identify, select, appraise, and synthesise studies. The structure and presentation of the items have been modified to facilitate implementation. In this article, we present the PRISMA 2020 27-item checklist, an expanded checklist that details reporting recommendations for each item, the PRISMA 2020 abstract checklist, and the revised flow diagrams for original and updated reviews.

Systematic reviews serve many critical roles. They can provide syntheses of the state of knowledge in a field, from which future research priorities can be identified; they can address questions that otherwise could not be answered by individual studies; they can identify problems in primary research that should be rectified in future studies; and they can generate or evaluate theories about how or why phenomena occur. Systematic reviews therefore generate various types of knowledge for different users of reviews (such as patients, healthcare providers, researchers, and policy makers). 1 2 To ensure a systematic review is valuable to users, authors should prepare a transparent, complete, and accurate account of why the review was done, what they did (such as how studies were identified and selected) and what they found (such as characteristics of contributing studies and results of meta-analyses). Up-to-date reporting guidance facilitates authors achieving this. 3

The Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) statement published in 2009 (hereafter referred to as PRISMA 2009) 4 5 6 7 8 9 10 is a reporting guideline designed to address poor reporting of systematic reviews. 11 The PRISMA 2009 statement comprised a checklist of 27 items recommended for reporting in systematic reviews and an “explanation and elaboration” paper 12 13 14 15 16 providing additional reporting guidance for each item, along with exemplars of reporting. The recommendations have been widely endorsed and adopted, as evidenced by its co-publication in multiple journals, citation in over 60 000 reports (Scopus, August 2020), endorsement from almost 200 journals and systematic review organisations, and adoption in various disciplines. Evidence from observational studies suggests that use of the PRISMA 2009 statement is associated with more complete reporting of systematic reviews, 17 18 19 20 although more could be done to improve adherence to the guideline. 21

Many innovations in the conduct of systematic reviews have occurred since publication of the PRISMA 2009 statement. For example, technological advances have enabled the use of natural language processing and machine learning to identify relevant evidence, 22 23 24 methods have been proposed to synthesise and present findings when meta-analysis is not possible or appropriate, 25 26 27 and new methods have been developed to assess the risk of bias in results of included studies. 28 29 Evidence on sources of bias in systematic reviews has accrued, culminating in the development of new tools to appraise the conduct of systematic reviews. 30 31 Terminology used to describe particular review processes has also evolved, as in the shift from assessing “quality” to assessing “certainty” in the body of evidence. 32 In addition, the publishing landscape has transformed, with multiple avenues now available for registering and disseminating systematic review protocols, 33 34 disseminating reports of systematic reviews, and sharing data and materials, such as preprint servers and publicly accessible repositories. To capture these advances in the reporting of systematic reviews necessitated an update to the PRISMA 2009 statement.

Summary points

To ensure a systematic review is valuable to users, authors should prepare a transparent, complete, and accurate account of why the review was done, what they did, and what they found

The PRISMA 2020 statement provides updated reporting guidance for systematic reviews that reflects advances in methods to identify, select, appraise, and synthesise studies

The PRISMA 2020 statement consists of a 27-item checklist, an expanded checklist that details reporting recommendations for each item, the PRISMA 2020 abstract checklist, and revised flow diagrams for original and updated reviews

We anticipate that the PRISMA 2020 statement will benefit authors, editors, and peer reviewers of systematic reviews, and different users of reviews, including guideline developers, policy makers, healthcare providers, patients, and other stakeholders

Development of PRISMA 2020

A complete description of the methods used to develop PRISMA 2020 is available elsewhere. 35 We identified PRISMA 2009 items that were often reported incompletely by examining the results of studies investigating the transparency of reporting of published reviews. 17 21 36 37 We identified possible modifications to the PRISMA 2009 statement by reviewing 60 documents providing reporting guidance for systematic reviews (including reporting guidelines, handbooks, tools, and meta-research studies). 38 These reviews of the literature were used to inform the content of a survey with suggested possible modifications to the 27 items in PRISMA 2009 and possible additional items. Respondents were asked whether they believed we should keep each PRISMA 2009 item as is, modify it, or remove it, and whether we should add each additional item. Systematic review methodologists and journal editors were invited to complete the online survey (110 of 220 invited responded). We discussed proposed content and wording of the PRISMA 2020 statement, as informed by the review and survey results, at a 21-member, two-day, in-person meeting in September 2018 in Edinburgh, Scotland. Throughout 2019 and 2020, we circulated an initial draft and five revisions of the checklist and explanation and elaboration paper to co-authors for feedback. In April 2020, we invited 22 systematic reviewers who had expressed interest in providing feedback on the PRISMA 2020 checklist to share their views (via an online survey) on the layout and terminology used in a preliminary version of the checklist. Feedback was received from 15 individuals and considered by the first author, and any revisions deemed necessary were incorporated before the final version was approved and endorsed by all co-authors.

The PRISMA 2020 statement

Scope of the guideline.

The PRISMA 2020 statement has been designed primarily for systematic reviews of studies that evaluate the effects of health interventions, irrespective of the design of the included studies. However, the checklist items are applicable to reports of systematic reviews evaluating other interventions (such as social or educational interventions), and many items are applicable to systematic reviews with objectives other than evaluating interventions (such as evaluating aetiology, prevalence, or prognosis). PRISMA 2020 is intended for use in systematic reviews that include synthesis (such as pairwise meta-analysis or other statistical synthesis methods) or do not include synthesis (for example, because only one eligible study is identified). The PRISMA 2020 items are relevant for mixed-methods systematic reviews (which include quantitative and qualitative studies), but reporting guidelines addressing the presentation and synthesis of qualitative data should also be consulted. 39 40 PRISMA 2020 can be used for original systematic reviews, updated systematic reviews, or continually updated (“living”) systematic reviews. However, for updated and living systematic reviews, there may be some additional considerations that need to be addressed. Where there is relevant content from other reporting guidelines, we reference these guidelines within the items in the explanation and elaboration paper 41 (such as PRISMA-Search 42 in items 6 and 7, Synthesis without meta-analysis (SWiM) reporting guideline 27 in item 13d). Box 1 includes a glossary of terms used throughout the PRISMA 2020 statement.

Glossary of terms

Systematic review —A review that uses explicit, systematic methods to collate and synthesise findings of studies that address a clearly formulated question 43

Statistical synthesis —The combination of quantitative results of two or more studies. This encompasses meta-analysis of effect estimates (described below) and other methods, such as combining P values, calculating the range and distribution of observed effects, and vote counting based on the direction of effect (see McKenzie and Brennan 25 for a description of each method)

Meta-analysis of effect estimates —A statistical technique used to synthesise results when study effect estimates and their variances are available, yielding a quantitative summary of results 25

Outcome —An event or measurement collected for participants in a study (such as quality of life, mortality)

Result —The combination of a point estimate (such as a mean difference, risk ratio, or proportion) and a measure of its precision (such as a confidence/credible interval) for a particular outcome

Report —A document (paper or electronic) supplying information about a particular study. It could be a journal article, preprint, conference abstract, study register entry, clinical study report, dissertation, unpublished manuscript, government report, or any other document providing relevant information

Record —The title or abstract (or both) of a report indexed in a database or website (such as a title or abstract for an article indexed in Medline). Records that refer to the same report (such as the same journal article) are “duplicates”; however, records that refer to reports that are merely similar (such as a similar abstract submitted to two different conferences) should be considered unique.

Study —An investigation, such as a clinical trial, that includes a defined group of participants and one or more interventions and outcomes. A “study” might have multiple reports. For example, reports could include the protocol, statistical analysis plan, baseline characteristics, results for the primary outcome, results for harms, results for secondary outcomes, and results for additional mediator and moderator analyses

PRISMA 2020 is not intended to guide systematic review conduct, for which comprehensive resources are available. 43 44 45 46 However, familiarity with PRISMA 2020 is useful when planning and conducting systematic reviews to ensure that all recommended information is captured. PRISMA 2020 should not be used to assess the conduct or methodological quality of systematic reviews; other tools exist for this purpose. 30 31 Furthermore, PRISMA 2020 is not intended to inform the reporting of systematic review protocols, for which a separate statement is available (PRISMA for Protocols (PRISMA-P) 2015 statement 47 48 ). Finally, extensions to the PRISMA 2009 statement have been developed to guide reporting of network meta-analyses, 49 meta-analyses of individual participant data, 50 systematic reviews of harms, 51 systematic reviews of diagnostic test accuracy studies, 52 and scoping reviews 53 ; for these types of reviews we recommend authors report their review in accordance with the recommendations in PRISMA 2020 along with the guidance specific to the extension.

How to use PRISMA 2020

The PRISMA 2020 statement (including the checklists, explanation and elaboration, and flow diagram) replaces the PRISMA 2009 statement, which should no longer be used. Box 2 summarises noteworthy changes from the PRISMA 2009 statement. The PRISMA 2020 checklist includes seven sections with 27 items, some of which include sub-items ( table 1 ). A checklist for journal and conference abstracts for systematic reviews is included in PRISMA 2020. This abstract checklist is an update of the 2013 PRISMA for Abstracts statement, 54 reflecting new and modified content in PRISMA 2020 ( table 2 ). A template PRISMA flow diagram is provided, which can be modified depending on whether the systematic review is original or updated ( fig 1 ).

Noteworthy changes to the PRISMA 2009 statement

Inclusion of the abstract reporting checklist within PRISMA 2020 (see item #2 and table 2 ).

Movement of the ‘Protocol and registration’ item from the start of the Methods section of the checklist to a new Other section, with addition of a sub-item recommending authors describe amendments to information provided at registration or in the protocol (see item #24a-24c).

Modification of the ‘Search’ item to recommend authors present full search strategies for all databases, registers and websites searched, not just at least one database (see item #7).

Modification of the ‘Study selection’ item in the Methods section to emphasise the reporting of how many reviewers screened each record and each report retrieved, whether they worked independently, and if applicable, details of automation tools used in the process (see item #8).

Addition of a sub-item to the ‘Data items’ item recommending authors report how outcomes were defined, which results were sought, and methods for selecting a subset of results from included studies (see item #10a).

Splitting of the ‘Synthesis of results’ item in the Methods section into six sub-items recommending authors describe: the processes used to decide which studies were eligible for each synthesis; any methods required to prepare the data for synthesis; any methods used to tabulate or visually display results of individual studies and syntheses; any methods used to synthesise results; any methods used to explore possible causes of heterogeneity among study results (such as subgroup analysis, meta-regression); and any sensitivity analyses used to assess robustness of the synthesised results (see item #13a-13f).

Addition of a sub-item to the ‘Study selection’ item in the Results section recommending authors cite studies that might appear to meet the inclusion criteria, but which were excluded, and explain why they were excluded (see item #16b).

Splitting of the ‘Synthesis of results’ item in the Results section into four sub-items recommending authors: briefly summarise the characteristics and risk of bias among studies contributing to the synthesis; present results of all statistical syntheses conducted; present results of any investigations of possible causes of heterogeneity among study results; and present results of any sensitivity analyses (see item #20a-20d).

Addition of new items recommending authors report methods for and results of an assessment of certainty (or confidence) in the body of evidence for an outcome (see items #15 and #22).

Addition of a new item recommending authors declare any competing interests (see item #26).

Addition of a new item recommending authors indicate whether data, analytic code and other materials used in the review are publicly available and if so, where they can be found (see item #27).

PRISMA 2020 item checklist

- View inline

PRISMA 2020 for Abstracts checklist*

PRISMA 2020 flow diagram template for systematic reviews. The new design is adapted from flow diagrams proposed by Boers, 55 Mayo-Wilson et al. 56 and Stovold et al. 57 The boxes in grey should only be completed if applicable; otherwise they should be removed from the flow diagram. Note that a “report” could be a journal article, preprint, conference abstract, study register entry, clinical study report, dissertation, unpublished manuscript, government report or any other document providing relevant information.

- Download figure

- Open in new tab

- Download powerpoint

We recommend authors refer to PRISMA 2020 early in the writing process, because prospective consideration of the items may help to ensure that all the items are addressed. To help keep track of which items have been reported, the PRISMA statement website ( http://www.prisma-statement.org/ ) includes fillable templates of the checklists to download and complete (also available in the data supplement on bmj.com). We have also created a web application that allows users to complete the checklist via a user-friendly interface 58 (available at https://prisma.shinyapps.io/checklist/ and adapted from the Transparency Checklist app 59 ). The completed checklist can be exported to Word or PDF. Editable templates of the flow diagram can also be downloaded from the PRISMA statement website.

We have prepared an updated explanation and elaboration paper, in which we explain why reporting of each item is recommended and present bullet points that detail the reporting recommendations (which we refer to as elements). 41 The bullet-point structure is new to PRISMA 2020 and has been adopted to facilitate implementation of the guidance. 60 61 An expanded checklist, which comprises an abridged version of the elements presented in the explanation and elaboration paper, with references and some examples removed, is available in the data supplement on bmj.com. Consulting the explanation and elaboration paper is recommended if further clarity or information is required.

Journals and publishers might impose word and section limits, and limits on the number of tables and figures allowed in the main report. In such cases, if the relevant information for some items already appears in a publicly accessible review protocol, referring to the protocol may suffice. Alternatively, placing detailed descriptions of the methods used or additional results (such as for less critical outcomes) in supplementary files is recommended. Ideally, supplementary files should be deposited to a general-purpose or institutional open-access repository that provides free and permanent access to the material (such as Open Science Framework, Dryad, figshare). A reference or link to the additional information should be included in the main report. Finally, although PRISMA 2020 provides a template for where information might be located, the suggested location should not be seen as prescriptive; the guiding principle is to ensure the information is reported.

Use of PRISMA 2020 has the potential to benefit many stakeholders. Complete reporting allows readers to assess the appropriateness of the methods, and therefore the trustworthiness of the findings. Presenting and summarising characteristics of studies contributing to a synthesis allows healthcare providers and policy makers to evaluate the applicability of the findings to their setting. Describing the certainty in the body of evidence for an outcome and the implications of findings should help policy makers, managers, and other decision makers formulate appropriate recommendations for practice or policy. Complete reporting of all PRISMA 2020 items also facilitates replication and review updates, as well as inclusion of systematic reviews in overviews (of systematic reviews) and guidelines, so teams can leverage work that is already done and decrease research waste. 36 62 63

We updated the PRISMA 2009 statement by adapting the EQUATOR Network’s guidance for developing health research reporting guidelines. 64 We evaluated the reporting completeness of published systematic reviews, 17 21 36 37 reviewed the items included in other documents providing guidance for systematic reviews, 38 surveyed systematic review methodologists and journal editors for their views on how to revise the original PRISMA statement, 35 discussed the findings at an in-person meeting, and prepared this document through an iterative process. Our recommendations are informed by the reviews and survey conducted before the in-person meeting, theoretical considerations about which items facilitate replication and help users assess the risk of bias and applicability of systematic reviews, and co-authors’ experience with authoring and using systematic reviews.

Various strategies to increase the use of reporting guidelines and improve reporting have been proposed. They include educators introducing reporting guidelines into graduate curricula to promote good reporting habits of early career scientists 65 ; journal editors and regulators endorsing use of reporting guidelines 18 ; peer reviewers evaluating adherence to reporting guidelines 61 66 ; journals requiring authors to indicate where in their manuscript they have adhered to each reporting item 67 ; and authors using online writing tools that prompt complete reporting at the writing stage. 60 Multi-pronged interventions, where more than one of these strategies are combined, may be more effective (such as completion of checklists coupled with editorial checks). 68 However, of 31 interventions proposed to increase adherence to reporting guidelines, the effects of only 11 have been evaluated, mostly in observational studies at high risk of bias due to confounding. 69 It is therefore unclear which strategies should be used. Future research might explore barriers and facilitators to the use of PRISMA 2020 by authors, editors, and peer reviewers, designing interventions that address the identified barriers, and evaluating those interventions using randomised trials. To inform possible revisions to the guideline, it would also be valuable to conduct think-aloud studies 70 to understand how systematic reviewers interpret the items, and reliability studies to identify items where there is varied interpretation of the items.

We encourage readers to submit evidence that informs any of the recommendations in PRISMA 2020 (via the PRISMA statement website: http://www.prisma-statement.org/ ). To enhance accessibility of PRISMA 2020, several translations of the guideline are under way (see available translations at the PRISMA statement website). We encourage journal editors and publishers to raise awareness of PRISMA 2020 (for example, by referring to it in journal “Instructions to authors”), endorsing its use, advising editors and peer reviewers to evaluate submitted systematic reviews against the PRISMA 2020 checklists, and making changes to journal policies to accommodate the new reporting recommendations. We recommend existing PRISMA extensions 47 49 50 51 52 53 71 72 be updated to reflect PRISMA 2020 and advise developers of new PRISMA extensions to use PRISMA 2020 as the foundation document.

We anticipate that the PRISMA 2020 statement will benefit authors, editors, and peer reviewers of systematic reviews, and different users of reviews, including guideline developers, policy makers, healthcare providers, patients, and other stakeholders. Ultimately, we hope that uptake of the guideline will lead to more transparent, complete, and accurate reporting of systematic reviews, thus facilitating evidence based decision making.

Acknowledgments

We dedicate this paper to the late Douglas G Altman and Alessandro Liberati, whose contributions were fundamental to the development and implementation of the original PRISMA statement.

We thank the following contributors who completed the survey to inform discussions at the development meeting: Xavier Armoiry, Edoardo Aromataris, Ana Patricia Ayala, Ethan M Balk, Virginia Barbour, Elaine Beller, Jesse A Berlin, Lisa Bero, Zhao-Xiang Bian, Jean Joel Bigna, Ferrán Catalá-López, Anna Chaimani, Mike Clarke, Tammy Clifford, Ioana A Cristea, Miranda Cumpston, Sofia Dias, Corinna Dressler, Ivan D Florez, Joel J Gagnier, Chantelle Garritty, Long Ge, Davina Ghersi, Sean Grant, Gordon Guyatt, Neal R Haddaway, Julian PT Higgins, Sally Hopewell, Brian Hutton, Jamie J Kirkham, Jos Kleijnen, Julia Koricheva, Joey SW Kwong, Toby J Lasserson, Julia H Littell, Yoon K Loke, Malcolm R Macleod, Chris G Maher, Ana Marušic, Dimitris Mavridis, Jessie McGowan, Matthew DF McInnes, Philippa Middleton, Karel G Moons, Zachary Munn, Jane Noyes, Barbara Nußbaumer-Streit, Donald L Patrick, Tatiana Pereira-Cenci, Ba’ Pham, Bob Phillips, Dawid Pieper, Michelle Pollock, Daniel S Quintana, Drummond Rennie, Melissa L Rethlefsen, Hannah R Rothstein, Maroeska M Rovers, Rebecca Ryan, Georgia Salanti, Ian J Saldanha, Margaret Sampson, Nancy Santesso, Rafael Sarkis-Onofre, Jelena Savović, Christopher H Schmid, Kenneth F Schulz, Guido Schwarzer, Beverley J Shea, Paul G Shekelle, Farhad Shokraneh, Mark Simmonds, Nicole Skoetz, Sharon E Straus, Anneliese Synnot, Emily E Tanner-Smith, Brett D Thombs, Hilary Thomson, Alexander Tsertsvadze, Peter Tugwell, Tari Turner, Lesley Uttley, Jeffrey C Valentine, Matt Vassar, Areti Angeliki Veroniki, Meera Viswanathan, Cole Wayant, Paul Whaley, and Kehu Yang. We thank the following contributors who provided feedback on a preliminary version of the PRISMA 2020 checklist: Jo Abbott, Fionn Büttner, Patricia Correia-Santos, Victoria Freeman, Emily A Hennessy, Rakibul Islam, Amalia (Emily) Karahalios, Kasper Krommes, Andreas Lundh, Dafne Port Nascimento, Davina Robson, Catherine Schenck-Yglesias, Mary M Scott, Sarah Tanveer and Pavel Zhelnov. We thank Abigail H Goben, Melissa L Rethlefsen, Tanja Rombey, Anna Scott, and Farhad Shokraneh for their helpful comments on the preprints of the PRISMA 2020 papers. We thank Edoardo Aromataris, Stephanie Chang, Toby Lasserson and David Schriger for their helpful peer review comments on the PRISMA 2020 papers.

Contributors: JEM and DM are joint senior authors. MJP, JEM, PMB, IB, TCH, CDM, LS, and DM conceived this paper and designed the literature review and survey conducted to inform the guideline content. MJP conducted the literature review, administered the survey and analysed the data for both. MJP prepared all materials for the development meeting. MJP and JEM presented proposals at the development meeting. All authors except for TCH, JMT, EAA, SEB, and LAM attended the development meeting. MJP and JEM took and consolidated notes from the development meeting. MJP and JEM led the drafting and editing of the article. JEM, PMB, IB, TCH, LS, JMT, EAA, SEB, RC, JG, AH, TL, EMW, SM, LAM, LAS, JT, ACT, PW, and DM drafted particular sections of the article. All authors were involved in revising the article critically for important intellectual content. All authors approved the final version of the article. MJP is the guarantor of this work. The corresponding author attests that all listed authors meet authorship criteria and that no others meeting the criteria have been omitted.

Funding: There was no direct funding for this research. MJP is supported by an Australian Research Council Discovery Early Career Researcher Award (DE200101618) and was previously supported by an Australian National Health and Medical Research Council (NHMRC) Early Career Fellowship (1088535) during the conduct of this research. JEM is supported by an Australian NHMRC Career Development Fellowship (1143429). TCH is supported by an Australian NHMRC Senior Research Fellowship (1154607). JMT is supported by Evidence Partners Inc. JMG is supported by a Tier 1 Canada Research Chair in Health Knowledge Transfer and Uptake. MML is supported by The Ottawa Hospital Anaesthesia Alternate Funds Association and a Faculty of Medicine Junior Research Chair. TL is supported by funding from the National Eye Institute (UG1EY020522), National Institutes of Health, United States. LAM is supported by a National Institute for Health Research Doctoral Research Fellowship (DRF-2018-11-ST2-048). ACT is supported by a Tier 2 Canada Research Chair in Knowledge Synthesis. DM is supported in part by a University Research Chair, University of Ottawa. The funders had no role in considering the study design or in the collection, analysis, interpretation of data, writing of the report, or decision to submit the article for publication.

Competing interests: All authors have completed the ICMJE uniform disclosure form at http://www.icmje.org/conflicts-of-interest/ and declare: EL is head of research for the BMJ ; MJP is an editorial board member for PLOS Medicine ; ACT is an associate editor and MJP, TL, EMW, and DM are editorial board members for the Journal of Clinical Epidemiology ; DM and LAS were editors in chief, LS, JMT, and ACT are associate editors, and JG is an editorial board member for Systematic Reviews . None of these authors were involved in the peer review process or decision to publish. TCH has received personal fees from Elsevier outside the submitted work. EMW has received personal fees from the American Journal for Public Health , for which he is the editor for systematic reviews. VW is editor in chief of the Campbell Collaboration, which produces systematic reviews, and co-convenor of the Campbell and Cochrane equity methods group. DM is chair of the EQUATOR Network, IB is adjunct director of the French EQUATOR Centre and TCH is co-director of the Australasian EQUATOR Centre, which advocates for the use of reporting guidelines to improve the quality of reporting in research articles. JMT received salary from Evidence Partners, creator of DistillerSR software for systematic reviews; Evidence Partners was not involved in the design or outcomes of the statement, and the views expressed solely represent those of the author.

Provenance and peer review: Not commissioned; externally peer reviewed.

Patient and public involvement: Patients and the public were not involved in this methodological research. We plan to disseminate the research widely, including to community participants in evidence synthesis organisations.

This is an Open Access article distributed in accordance with the terms of the Creative Commons Attribution (CC BY 4.0) license, which permits others to distribute, remix, adapt and build upon this work, for commercial use, provided the original work is properly cited. See: http://creativecommons.org/licenses/by/4.0/ .

- Gurevitch J ,

- Koricheva J ,

- Nakagawa S ,

- Liberati A ,

- Tetzlaff J ,

- Altman DG ,

- PRISMA Group

- Tricco AC ,

- Sampson M ,

- Shamseer L ,

- Leoncini E ,

- de Belvis G ,

- Ricciardi W ,

- Fowler AJ ,

- Leclercq V ,

- Beaudart C ,

- Ajamieh S ,

- Rabenda V ,

- Tirelli E ,

- O’Mara-Eves A ,

- McNaught J ,

- Ananiadou S

- Marshall IJ ,

- Noel-Storr A ,

- Higgins JPT ,

- Chandler J ,

- McKenzie JE ,

- López-López JA ,

- Becker BJ ,

- Campbell M ,

- Sterne JAC ,

- Savović J ,

- Sterne JA ,

- Hernán MA ,

- Reeves BC ,

- Whiting P ,

- Higgins JP ,

- ROBIS group

- Hultcrantz M ,

- Stewart L ,

- Bossuyt PM ,

- Flemming K ,

- McInnes E ,

- France EF ,

- Cunningham M ,

- Rethlefsen ML ,

- Kirtley S ,

- Waffenschmidt S ,

- PRISMA-S Group

- ↵ Higgins JPT, Thomas J, Chandler J, et al, eds. Cochrane Handbook for Systematic Reviews of Interventions : Version 6.0. Cochrane, 2019. Available from https://training.cochrane.org/handbook .

- Dekkers OM ,

- Vandenbroucke JP ,

- Cevallos M ,

- Renehan AG ,

- ↵ Cooper H, Hedges LV, Valentine JV, eds. The Handbook of Research Synthesis and Meta-Analysis. Russell Sage Foundation, 2019.

- IOM (Institute of Medicine)

- PRISMA-P Group

- Salanti G ,

- Caldwell DM ,

- Stewart LA ,

- PRISMA-IPD Development Group

- Zorzela L ,

- Ioannidis JP ,

- PRISMAHarms Group

- McInnes MDF ,

- Thombs BD ,

- and the PRISMA-DTA Group

- Beller EM ,

- Glasziou PP ,

- PRISMA for Abstracts Group

- Mayo-Wilson E ,

- Dickersin K ,

- MUDS investigators

- Stovold E ,

- Beecher D ,

- Noel-Storr A

- McGuinness LA

- Sarafoglou A ,

- Boutron I ,

- Giraudeau B ,

- Porcher R ,

- Chauvin A ,

- Schulz KF ,

- Schroter S ,

- Stevens A ,

- Weinstein E ,

- Macleod MR ,

- IICARus Collaboration

- Kirkham JJ ,

- Petticrew M ,

- Tugwell P ,

- PRISMA-Equity Bellagio group

- UNC Libraries

- HSL Academic Process

- Systematic Reviews

- Step 8: Write the Review

Systematic Reviews: Step 8: Write the Review

Created by health science librarians.

- Step 1: Complete Pre-Review Tasks

- Step 2: Develop a Protocol

- Step 3: Conduct Literature Searches

- Step 4: Manage Citations

- Step 5: Screen Citations

- Step 6: Assess Quality of Included Studies

- Step 7: Extract Data from Included Studies

About Step 8: Write the Review

Write your review, report your review with prisma, review sections, plain language summaries for systematic reviews, writing the review- webinars.

- Writing the Review FAQs

Check our FAQ's

Email us

Chat with us (during business hours)

Call (919) 962-0800

Make an appointment with a librarian

Request a systematic or scoping review consultation

Search the FAQs

In Step 8, you will write an article or a paper about your systematic review. It will likely have five sections: introduction, methods, results, discussion, and conclusion. You will:

- Review the reporting standards you will use, such as PRISMA.

- Gather your completed data tables and PRISMA chart.

- Write the Introduction to the topic and your study, Methods of your research, Results of your research, and Discussion of your results.

- Write an Abstract describing your study and a Conclusion summarizing your paper.

- Cite the studies included in your systematic review and any other articles you may have used in your paper.

- If you wish to publish your work, choose a target journal for your article.

The PRISMA Checklist will help you report the details of your systematic review. Your paper will also include a PRISMA chart that is an image of your research process.

Click an item below to see how it applies to Step 8: Write the Review.

Reporting your review with PRISMA

To write your review, you will need the data from your PRISMA flow diagram . Review the PRISMA checklist to see which items you should report in your methods section.

Managing your review with Covidence

When you screen in Covidence, it will record the numbers you need for your PRISMA flow diagram from duplicate removal through inclusion of studies. You may need to add additional information, such as the number of references from each database, citations you find through grey literature or other searching methods, or the number of studies found in your previous work if you are updating a systematic review.

How a librarian can help with Step 8

A librarian can advise you on the process of organizing and writing up your systematic review, including:

- Applying the PRISMA reporting templates and the level of detail to include for each element

- How to report a systematic review search strategy and your review methodology in the completed review

- How to use prior published reviews to guide you in organizing your manuscript

Reporting standards & guidelines

Be sure to reference reporting standards when writing your review. This helps ensure that you communicate essential components of your methods, results, and conclusions. There are a number of tools that can be used to ensure compliance with reporting guidelines. A few review-writing resources are listed below.

- Cochrane Handbook - Chapter 15: Interpreting results and drawing conclusions

- JBI Manual for Evidence Synthesis - Chapter 12.3 The systematic review

- PRISMA 2020 (Preferred Reporting Items for Systematic Reviews and Meta-Analysis) The aim of the PRISMA Statement is to help authors improve the reporting of systematic reviews and meta-analyses.

Tools for writing your review

- RevMan (Cochrane Training)

- Methods Wizard (Systematic Review Accelerator) The Methods Wizard is part of the Systematic Review Accelerator created by Bond University and the Institute for Evidence-Based Healthcare.

- UNC HSL Systematic Review Manuscript Template Systematic review manuscript template(.doc) adapted from the PRISMA 2020 checklist. This document provides authors with template for writing about their systematic review. Each table contains a PRISMA checklist item that should be written about in that section, the matching PRISMA Item number, and a box where authors can indicate if an item has been completed. Once text has been added, delete any remaining instructions and the PRISMA checklist tables from the end of each section.

- The PRISMA 2020 statement: an updated guideline for reporting systematic reviews The PRISMA 2020 statement replaces the 2009 statement and includes new reporting guidance that reflects advances in methods to identify, select, appraise, and synthesise studies.

- PRISMA 2020 explanation and elaboration: updated guidance and exemplars for reporting systematic reviews This document is intended to enhance the use, understanding and dissemination of the PRISMA 2020 Statement. Through examples and explanations, the meaning and rationale for each checklist item are presented.

The PRISMA checklist

The Preferred Reporting Items for Systematic Reviews and Meta-Analysis (PRISMA) is a 27-item checklist used to improve transparency in systematic reviews. These items cover all aspects of the manuscript, including title, abstract, introduction, methods, results, discussion, and funding. The PRISMA checklist can be downloaded in PDF or Word files.

- PRISMA 2020 Checklists Download the 2020 PRISMA Checklists in Word or PDF formats or download the expanded checklist (PDF).

The PRISMA flow diagram

The PRISMA Flow Diagram visually depicts the flow of studies through each phase of the review process. The PRISMA Flow Diagram can be downloaded in Word files.

- PRISMA 2020 Flow Diagrams The flow diagram depicts the flow of information through the different phases of a systematic review. It maps out the number of records identified, included and excluded, and the reasons for exclusions. Different templates are available depending on the type of review (new or updated) and sources used to identify studies.

Documenting grey literature and/or hand searches

If you have also searched additional sources, such as professional organization websites, cited or citing references, etc., document your grey literature search using the flow diagram template version 1 PRISMA 2020 flow diagram for new systematic reviews which included searches of databases, registers and other sources or the version 2 PRISMA 2020 flow diagram for updated systematic reviews which included searches of databases, registers and other sources .

Complete the boxes documenting your database searches, Identification of studies via databases and registers, according to the PRISMA flow diagram instructions. Complete the boxes documenting your grey literature and/or hand searches on the right side of the template, Identification of studies via other methods, using the steps below.

Need help completing the PRISMA flow diagram?

There are different PRISMA flow diagram templates for new and updated reviews, as well as different templates for reviews with and without grey literature searches. Be sure you download the correct template to match your review methods, then follow the steps below for each portion of the diagram you have available.

View the step-by-step explanation of the PRISMA flow diagram

Step 1: Preparation Download the flow diagram template version 1 PRISMA 2020 flow diagram for new systematic reviews which included searches of databases and registers only or the version 2 PRISMA 2020 flow diagram for updated systematic reviews which included searches of databases and registers only .

View the step-by-step explanation of the grey literature & hand searching portion of the PRISMA flow diagram

Step 1: Preparation Download the flow diagram template version 1 PRISMA 2020 flow diagram for new systematic reviews which included searches of databases, registers and other sources or the version 2 PRISMA 2020 flow diagram for updated systematic reviews which included searches of databases, registers and other sources .

View the step-by-step explanation of review update portion of the PRISMA flow diagram

Step 1: Preparation Download the flow diagram template version 2 PRISMA 2020 flow diagram for updated systematic reviews which included searches of databases and registers only or the version 2 PRISMA 2020 flow diagram for updated systematic reviews which included searches of databases, registers and other sources .

For more information about updating your systematic review, see the box Updating Your Review? on the Step 3: Conduct Literature Searches page of the guide.

Sections of a Scientific Manuscript

Scientific articles often follow the IMRaD format: Introduction, Methods, Results, and Discussion. You will also need a title and an abstract to summarize your research.

You can read more about scientific writing through the library guides below.

- Structure of Scholarly Articles & Peer Review • Explains the standard parts of a medical research article • Compares scholarly journals, professional trade journals, and magazines • Explains peer review and how to find peer reviewed articles and journals

- Writing in the Health Sciences (For Students and Instructors)

- Citing & Writing Tools & Guides Includes links to guides for popular citation managers such as EndNote, Sciwheel, Zotero; copyright basics; APA & AMA Style guides; Plagiarism & Citing Sources; Citing & Writing: How to Write Scientific Papers

Sections of a Systematic Review Manuscript

Systematic reviews follow the same structure as original research articles, but you will need to report on your search instead of on details like the participants or sampling. Sections of your manuscript are shown as bold headings in the PRISMA checklist.

Refer to the PRISMA checklist for more information.

Consider including a Plain Language Summary (PLS) when you publish your systematic review. Like an abstract, a PLS gives an overview of your study, but is specifically written and formatted to be easy for non-experts to understand.

Tips for writing a PLS:

- Use clear headings e.g. "why did we do this study?"; "what did we do?"; "what did we find?"

- Use active voice e.g. "we searched for articles in 5 databases instead of "5 databases were searched"

- Consider need-to-know vs. nice-to-know: what is most important for readers to understand about your study? Be sure to provide the most important points without misrepresenting your study or misleading the reader.

- Keep it short: Many journals recommend keeping your plain language summary less than 250 words.

- Check journal guidelines: Your journal may have specific guidelines about the format of your plain language summary and when you can publish it. Look at journal guidelines before submitting your article.

Learn more about Plain Language Summaries:

- Rosenberg, A., Baróniková, S., & Feighery, L. (2021). Open Pharma recommendations for plain language summaries of peer-reviewed medical journal publications. Current Medical Research and Opinion, 37(11), 2015–2016. https://doi.org/10.1080/03007995.2021.1971185

- Lobban, D., Gardner, J., & Matheis, R. (2021). Plain language summaries of publications of company-sponsored medical research: what key questions do we need to address? Current Medical Research and Opinion, 1–12. https://doi.org/10.1080/03007995.2021.1997221

- Cochrane Community. (2022, March 21). Updated template and guidance for writing Plain Language Summaries in Cochrane Reviews now available. https://community.cochrane.org/news/updated-template-and-guidance-writing-plain-language-summaries-cochrane-reviews-now-available

- You can also look at our Health Literacy LibGuide: https://guides.lib.unc.edu/healthliteracy

How to Approach Writing a Background Section

What Makes a Good Discussion Section

Writing Up Risk of Bias

Developing Your Implications for Research Section

- << Previous: Step 7: Extract Data from Included Studies

- Next: FAQs >>

- Last Updated: Mar 28, 2024 9:43 AM

- URL: https://guides.lib.unc.edu/systematic-reviews

Search & Find

- E-Research by Discipline

- More Search & Find

Places & Spaces

- Places to Study

- Book a Study Room

- Printers, Scanners, & Computers

- More Places & Spaces

- Borrowing & Circulation

- Request a Title for Purchase

- Schedule Instruction Session

- More Services

Support & Guides

- Course Reserves

- Research Guides

- Citing & Writing

- More Support & Guides

- Mission Statement

- Diversity Statement

- Staff Directory

- Job Opportunities

- Give to the Libraries

- News & Exhibits

- Reckoning Initiative

- More About Us

- Search This Site

- Privacy Policy

- Accessibility

- Give Us Your Feedback

- 208 Raleigh Street CB #3916

- Chapel Hill, NC 27515-8890

- 919-962-1053

Systematic Review

- Library Help

- What is a Systematic Review (SR)?

Steps of a Systematic Review

- Framing a Research Question

- Developing a Search Strategy

- Searching the Literature

- Managing the Process

- Meta-analysis

- Publishing your Systematic Review

Forms and templates

Image: David Parmenter's Shop

- PICO Template

- Inclusion/Exclusion Criteria

- Database Search Log

- Review Matrix

- Cochrane Tool for Assessing Risk of Bias in Included Studies

• PRISMA Flow Diagram - Record the numbers of retrieved references and included/excluded studies. You can use the Create Flow Diagram tool to automate the process.

• PRISMA Checklist - Checklist of items to include when reporting a systematic review or meta-analysis

PRISMA 2020 and PRISMA-S: Common Questions on Tracking Records and the Flow Diagram

- PROSPERO Template

- Manuscript Template

- Steps of SR (text)

- Steps of SR (visual)

- Steps of SR (PIECES)

Adapted from A Guide to Conducting Systematic Reviews: Steps in a Systematic Review by Cornell University Library

Source: Cochrane Consumers and Communications (infographics are free to use and licensed under Creative Commons )

Check the following visual resources titled " What Are Systematic Reviews?"

- Video with closed captions available

- Animated Storyboard

- << Previous: What is a Systematic Review (SR)?

- Next: Framing a Research Question >>

- Last Updated: Mar 4, 2024 12:09 PM

- URL: https://lib.guides.umd.edu/SR

Jump to navigation

Cochrane Training

Chapter iii: reporting the review.

Miranda Cumpston, Toby Lasserson, Ella Flemyng, Matthew J Page

Key Points:

- Clear reporting of a systematic review allows readers to evaluate the rigour of the methods applied, and to interpret the findings appropriately. Transparency can facilitate attempts to verify or reproduce the results, and make the review more usable for health care decision makers.

- The target audience for Cochrane Reviews is people making decisions about health care, including healthcare professionals, consumers and policy makers. Cochrane Reviews should be written so that they are easy to read and understand by someone with a basic sense of the topic who may not necessarily be an expert in the area.

- Cochrane Protocols and Reviews should comply with the PRISMA 2020 and PRISMA for Protocols reporting guidelines.

- Guidance on the composition of plain language summaries of Cochrane Reviews is also available to help review authors specify the key messages in terms that are accessible to consumers and non-expert readers.

- Review authors should ensure that reporting of objectives, important outcomes, results, caveats and conclusions is consistent across the main text, the abstract, and any other summary versions of the review (e.g. plain language summary).

This chapter should be cited as: Cumpston M, Lasserson T, Flemyng E, Page MJ. Chapter III: Reporting the review. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA (editors). Cochrane Handbook for Systematic Reviews of Interventions version 6.4 (updated August 2023). Cochrane, 2023. Available from www.training.cochrane.org/handbook .

III.1 Introduction

The effort of undertaking a systematic review is wasted if review authors do not report clearly what they did and what they found ( Glasziou et al 2014 ). Clear reporting enables others to replicate the methods used in the review, which can facilitate attempts to verify or reproduce the results ( Page et al 2018 ). Transparency can also make the review more usable for healthcare decision makers. For example, clearly describing the interventions assigned in the included studies can help users determine how best to deliver effective interventions in practice ( Hoffmann et al 2017 ). Also, comprehensively describing the eligibility criteria applied, sources consulted, analyses conducted, and post-hoc decisions made, can reduce uncertainties in assessments of risk of bias in the review findings ( Whiting et al 2016 ). For these reasons, transparent reporting is an essential component of all systematic reviews.

Surveys of the transparency of published systematic reviews suggest that many elements of systematic reviews could be reported better. For example, Nguyen and colleagues evaluated a random sample of 300 systematic reviews of interventions indexed in bibliographic databases in November 2020 ( Nguyen et al 2022 ). They found that in at least 20% of the reviews there was no information about the years of coverage of the search, the methods used to collect data and appraise studies, or the funding source of the review. Less than half of the reviews provided information on a protocol or registration record for the review. However, Cochrane Reviews, which accounted for 3% of the sample, had more complete reporting than all other types of systematic reviews.

Possible reasons why more complete reporting of Cochrane Reviews has been observed include the use of software (RevMan, https://training.cochrane.org/online-learning/core-software-cochrane-reviews/revman ) and strategies in the editorial process that promote good reporting. RevMan includes many standard headings and subheadings which are designed to prompt Cochrane Review authors to document their methods and results clearly.

Cochrane Reviews of interventions should adhere to the PRISMA 2020 (Preferred Reporting Items for Systematic reviews and Meta-Analysis) reporting guideline; see http://www.prisma-statement.org/ . PRISMA is an evidence-based, minimum set of items for reporting systematic reviews and meta-analyses to ensure the highest possible standard for reporting is met. Extensions to PRISMA and additional reporting guidelines for specific areas of methods are cited in the relevant sections below.

Cochrane’s Methodological Expectations of Cochrane Intervention Reviews (MECIR) detail standards for the conduct of Cochrane Reviews of interventions. They provide expectations for the general methodological approach to be followed from designing the review up to interpreting the findings at the end. There is a good reason to distinguish between conduct (MECIR) and reporting (PRISMA): good conduct does not necessarily lead to good reporting, good reporting cannot improve poor conduct, and poor reporting can obscure good or poor conduct of a review. The MECIR expectations of conduct are embedded in the relevant chapters of this Handbook and authors should adhere to MECIR throughout the development of their systematic review. MECIR conduct guidance for updates of Cochrane Reviews of interventions are presented in Chapter IV . For the latest version of all MECIR conduct guidance, readers should consult the MECIR web pages, available at https://methods.cochrane.org/mecir .

This chapter is built on reporting guidance from PRISMA 2020 ( Page et al 2021a , Page et al 2021b ) and is divided into sections for Cochrane Review protocols ( Section III.2) and new Cochrane Reviews ( Section III.3 ). Many of the standard headings recommended for use in Cochrane Reviews are referred to in this chapter, although the precise headings available in RevMan may be amended as new versions are released. New headings can be added and some standard headings can be deactivated; if the latter is done, review authors should ensure that all information expected (as outlined in PRISMA 2020) is still reported somewhere in the review.

III.2 Reporting of protocols of new Cochrane Reviews