Healthcare and Life Sciences

The combination of increasingly powerful computers and AI makes it possible to detect, diagnose, and cure diseases like never before. At IBM Research, we’re working on creating software and AI systems that can convert reams of health data into usable information for clinicians the world over.

IBM and Cleveland Clinic unveil the first quantum computer dedicated to healthcare research

- Accelerated Discovery

- Quantum Network

- Quantum Systems

IBM Research and JDRF continue to advance biomarker discovery research

- Life Sciences

Accelerating discoveries in immunotherapy and disease treatment

Why now is the time to accelerate discoveries in health care.

IBM is partnering with the Oxford Pandemic Sciences Institute

Computer simulations identify new ways to boost the skin’s natural protectors

Materials discovery.

- Physical Sciences


Publications

- Niharika S. D’Souza

- Hongzhi Wang

- Medical Image Analysis

- Vaishnavi Subramanian

- Tanveer Syeda-Mahmood

- Artificial Intelligence in Medicine

- Simona Rabinovici-Cohen

- Neomi Fridman

- Shai Ginsburg

- Patil Korkeen

- Jiaoyan Chen

Research leads IBM’s response to COVID-19

To meet the global challenge of COVID-19, the world must come together. IBM has resources to share — like supercomputing power, virus tracking systems, and an AI assistant to answer citizens’ questions.

Related topics

Conversational AI, fairness, accountability, transparency, explainable AI.

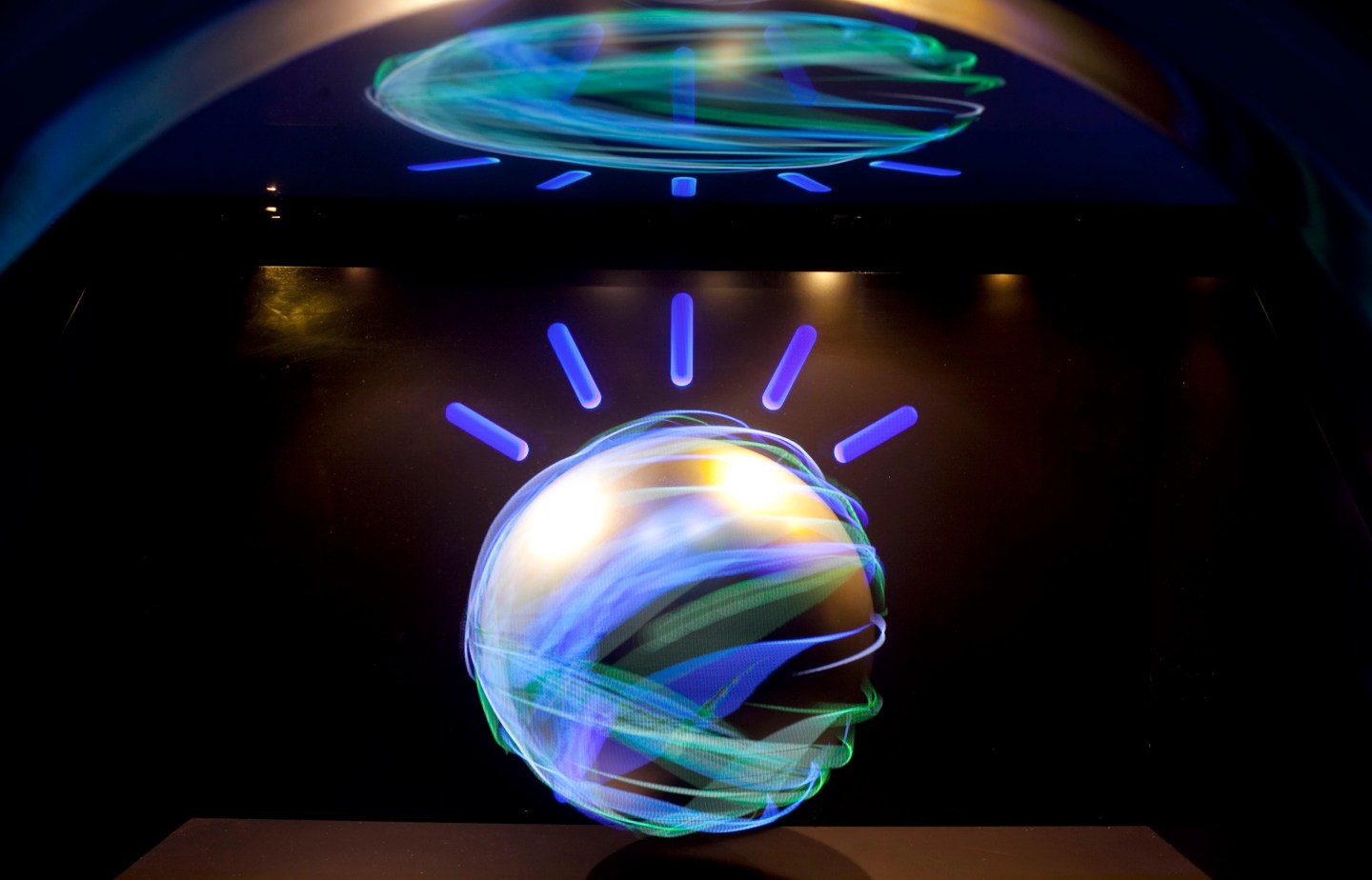

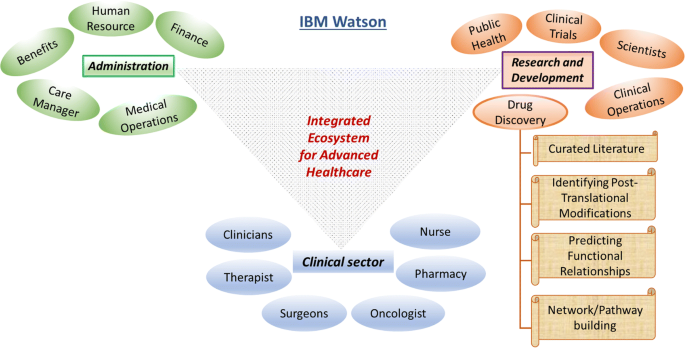

Here’s How IBM Watson Health Is Transforming the Health Care Industry

Imagine if you had a rare, undiagnosed disease that’s stumped doctor after doctor. What if there were a single, secure database that could read your symptoms then run through thousands of clinical studies, similar patient records, and medical textbooks to present a risk-matched list of potential diseases?

Just one year after its launch, IBM Watson Health is already starting to make this seemingly impossible task a reality, thanks to its powerful cognitive computing platform and a wide-reaching partnership strategy.

Watson’s vision is to enable better care by surfacing insights from the massive amounts of personal and academic health data that’s being generated every day, but IBM (IBM) needs partners within the medical, pharmaceutical, and hospital fields to make that relevant to on-the-ground practitioners. It’s institutions and companies like the Mayo Clinic, CVS Health (CVS), and Memorial Sloan Kettering Cancer Center that are adapting the innovative new technology to real-life applications.

“No one company is big enough to transform an industry on its own,” says Kathy McGroddy, vice president of IBM Watson Health. “It takes a village to change.”

One of IBM’s tentpole programs within health care is the Watson for Oncology application, developed in partnership with New York’s Memorial Sloan Kettering Cancer Center (MSK).

Some MSK oncologists have a highly specific expertise in certain cancers. By training Watson to think like they do, that knowledge expands from one specialist to any doctor who is querying Watson. That means that a patient can get the same top-tier care as if they traveled directly to the center’s offices in Manhattan. IBM’s Watson provides the framework to learn, connect, and store the data, while MSK is imparting its knowledge to train the computer.

The app, which can be run on an iPad or other tablet, is able to pack in all the expertise of MSK oncologists into one place so that any doctor anywhere is able to provide elite cancer care. This is significant for patients who live in areas without world-class medical services, like lower-income countries or rural America.

“The handwriting was on the wall. This kind of a concept was not an ‘if’ question but a ‘when’ question,” said Mark Kris, a medical oncologist at MSK and the lead physician for the institution’s IBM Watson collaboration. “We knew we wanted to be part of the team that developed it.”

IBM and MSK have been closely linked for many years, making their partnership a natural evolution. Both IBM CEO Ginni Rometty and former IBM chairman Lou Gerstner sit on MSK’s board. However, it was MSK that first approached IBM about using Watson after watching the computer defeat two past grand champions on the Jeopardy! TV quiz show, as David Kerr, director of corporate strategy at IBM, wrote in an article for the Huffington Post in 2012.

“I credit leaders at Memorial Sloan-Kettering for envisioning a way to have a huge impact on cancer treatment worldwide,” wrote Kerr.

Revolutionizing Access

On the ground, the partnership means that any doctor anywhere can query Watson for Oncology on the iPad app, if the hospital has licensed the program.

For example, say a patient has a rare, genetically linked form of lung cancer. A generalized cancer doctor likely hasn’t had the time to keep up with the latest in specific lung cancer treatments. In the last year alone, there have been at least seven new lung cancer drugs approved by the FDA. That doctor may not be aware of how best to use those drugs or even if they apply to this patient.

Meanwhile, Watson for Oncology has been fed previous case studies on patients like this by lung cancer specialists at MSK, so it understands the case and will spit out a list of potential treatments for the doctor, with a percentage rank of certitude and risk next to each option. The doctor then reviews the list and makes the final treatment decision in consult with the patient.

It’s this level of specificity that is transformative for both doctors and patients, taking centralized expertise and fanning it out across areas as far as India and Thailand, where Watson for Oncology is already being used in select hospitals.

“If it doesn’t get to people who benefit, it’s just irrelevant,” said Kris. “This isn’t meant to be theoretical.”

IBM and MSK didn’t release details of the joint venture but there is a shared revenue agreement in place, Kris said, similar to how licensing agreements are struck between pharmaceutical companies for new drugs.

Beyond Cancer

While Watson Health collaborates with more than a dozen cancer institutes to find new ways to treat the disease using genomic data, its partnerships also expand well beyond cancer care. It is working with CVS Health to use predictive analytics to transform care management for patients with chronic diseases, an important way to extend ongoing medical care beyond the standard doctor’s office.

Other Watson Health partners include:

- Medtronic (MDT): Predicting hypoglycemic episodes in diabetic patients nearly three hours before their onset, preventing devastating seizures.

- Apple (AAPL): Storing and analyzing ResearchKit data.

- Johnson & Johnson (JNJ): Analyzing scientific papers to find new connections for drug development.

- Under Armour (UA): Powering a “Cognitive Coaching System” that provides athletes coaching around sleep, fitness, activity and nutrition.

Each of these programs is an equal partnership between IBM and the other company. The two work hand-in-hand to train Watson and establish a functional platform to query the supercomputer, and each has its own unique business model.

“What’s happening in health care is new things, new ideas, and new models. There’s an opportunity to really look at different ways to create and share value together,” says McGroddy, who declined to release details of those business arrangements.

On August 6, 2015, IBM announced that Watson will gain the ability to “see” by bringing together Watson’s advanced image analytics and cognitive capabilities with data and images obtained from Merge Healthcare Incorporated’s medical imaging management platform.

The Watson Health Ecosystem

IBM also opens up its platform to allow other companies to tinker or develop their own programs. Watson makes its capabilities available as an API (application program interface), so companies of all sizes can use cognitive computing to meet their needs. Developers, entrepreneurs, data hobbyists, and students have built more than 7,000 applications through the Watson Ecosystem.

Welltok is one of those examples. The company, which IBM has invested in, works directly with self-insured companies and insurers to find ways to engage customers so they choose healthier behaviors. Its CafeWell Concierge app uses Watson’s cognitive computing capabilities to provide customized insights to patients based on their personal profiles, helping them with things like finding a healthy restaurant nearby or answering detailed questions about their health.

Ultimately, these large and small partnerships are meant to give IBM’s Watson Health the breadth to help patients and doctors make better decisions. The goal is to eventually deliver more effective care, whether through quicker drug development, personalized health recommendations, or uncovering new genetically-linked treatments.

“These are huge benefits,” says Rob Merkel, vice president of IBM Watson’s health group. “This isn’t like a traditional business reengineering process where you’re cutting a few points of inefficiencies and saving money. You’re talking about fundamentally changing people’s lives.”

Transforming Big Blue

Watson is also intended to be an engine of transformation within IBM itself, a 104-year-old company that has been in the Fortune 500 for the past 21 years. The company has been honing its cognitive computing division across subject areas—from financial services to artificial intelligence to health care—and Watson’s role in Big Blue is becoming more vital.

“The vision for Watson Health is to serve as a catalyst to save and improve lives around the world and lower costs through cognitive computing,” says McGroddy. “Overall, from a business standpoint, we need to get this very big, very fast. It’s not just about transforming health care, it’s about the transformation of IBM, as well.”

Rometty has continually stressed the importance of cognitive computing at large, calling it “the dawn of a new era.” IBM launched the Watson Group in 2014, followed by the health care-dedicated division a year later.


Rometty has devoted more than $4 billion to build out Watson Health’s platform via acquisitions, indicating just how important this business is to the company. Most recently, Big Blue paid $2.6 billion for Truven Health Analytics, which will bring its total database to 300 million patients; the deal will also double IBM Watson’s size to nearly 5,000 employees.

However, in terms of Watson’s profit potential, IBM hasn’t said exactly how much it contributes to the company’s bottom line. Mike Rhodin, a senior vice president of the IBM Watson Group, told Fortune in September that Watson “is a big part of our growth in overall analytics which was $17 billion last year.”

There is no question that technology and big data have the potential to transform health care, in particular. One patient’s electronic health record holds an average of about 400 gigabytes worth of information. If you add in a patient’s genetic information, that ups a person’s total health data to 6 terabytes, says Merkel. Then, think about cross referencing an individual’s total data with existing medical literature or even thousands of other similar patients.

“It’s beyond human cognition to read that much information,” says Merkel. “We’re trying to provide insight across these two realms: knowledge and data. Ultimately, we want to impact people’s lives by providing new levels of insight.”


The Future of IBM’s Watson Health – A Case Study of Pioneering

- September 23, 2019

- Posted by: TreehillPartners

- In: Leadership & Restructuring , Strategy & Corporate Development

IBM is known as one of the world’s computing juggernauts. So when IBM first announced its artificial intelligence (AI) question-answer based project Watson (named after IBM’s first CEO, Thomas J. Watson) in 2010, there was a lot of interest in potential practical uses for the technology, specifically in the health sector. According to IBM, “The goal is to have computers start to interact in natural human terms across a range of applications and processes, understanding the questions that humans ask and providing answers that humans can understand and justify.”

In 2011, Watson wowed the public relations crowd by competing on the legendary US television quiz show Jeopardy!. Over three matches, IBM Watson won Jeopardy!’s coveted 1M USD prize. IBM’s Christopher Padilla said of the match, “The technology behind Watson represents a major advancement in computing. In the data-intensive environment of government, this type of technology can help organizations make better decisions and improve how government helps its citizens.”

Since Jeopardy!, Watson has scored numerous other public relations wins, announcing several health industry partnerships.

Despite Watson’s seemingly early success, nearly a decade has passed without any major practical Watson successes at scale. As the public noticed a growing gap between Watson’s promise of “ushering in system wide healthcare innovation through the use of big data” and actual progress, the company decided to make leadership changes. Enter Paul Roma, newly appointed general manager of IBM’s Watson Health in late July 2019.

“Going forward, the word is focus,” says Roma. Reading between the lines, it seems that many of IBM Watson’s health projects were not as successful as anticipated. “We’re going to double down on what’s working, and we’re going to get super focused on execution… On a back-to-basics level, if we can execute on what’s in front of us with the clients that we have now, we’re going to be really successful,” Roma went on to say.

Perhaps IBM’s Watson does have a prosperous future ahead of it, given that healthcare still has all the unmet needs Watson promised to address years ago. Roma comes to his new role as health GM for Watson after a successful tenure at Ciox, an information technology company currently handling medical records data for over 3,000 hospitals across the United States. Before Ciox, Roma was Deloitte’s global consultancy practice analytics chief.

What the industry in general, and Watson specifically, has so far failed to do is truly understand and use the value of health data, and the resulting possibilities to improve, at scale, the tools doctors use in the field and to yield the best solutions for patient care. Roma comments, “…it is my belief that we’re at a point in time, both in technology and medicine, where the technology needs to do better at helping doctors and patients interpret all this information at the right time, and give them a better way to approach their healthcare.” Some others say we are still decades away from achieving this goal, and so far it has remained hard to prove them wrong.

“It is clear to me that the challenges in front of us require an integrated platform,” IBM’s Roma says. “You have to have a deep bench, not just in technology experience, but also in the ability to productize it and bring it to the market at scale.”

While pharmaceutical product development is a lengthy process of identifying and measuring patient benefit, innovating an entire industry through complex, integrated technology solutions that span different types of value chain participants is likewise not something that can often be achieved single-handedly.

We will see the success or failure of this pioneer program and continue working with clients to build strategies, partnerships and financings to take forward their own innovations.


IBM Cloud Case Study

I chose this IBM case study because it provides a clear overview of how legacy systems can be modernized through cloud adoption. The consulting firm Deloitte partnered with IBM to improve the Medicaid Management Information Systems (MMIS). The collaboration resulted in an innovative solution for state healthcare agencies to align with CMS guidelines and adopt a more modular approach to enterprise IT (Deloitte Consulting | Ibm, 2024, p. 2). As the case study outlines, the MES platform was specifically designed to help state healthcare agencies transition away from the old cumbersome Medicaid Management Information Systems.

State healthcare agencies can reduce costs and save time by implementing the new MES platform. State agencies can update individual components as needed using the MES platform’s modular architecture, thus avoiding full-scale system overhauls (Deloitte Consulting | Ibm, 2024, p. 2). Moreover, the cloud-based deployment model allows agencies to rapidly scale resources while maintaining robust security controls (Deloitte Consulting | Ibm, 2024, p. 2). According to Brian Erdahl, Principal and Market Offering Leader for Deloitte, “The beauty of MES is that if an agency ends up not liking the functionality of a particular provider module, the organization can pull it and easily deploy a new module without disrupting what’s going on in claims processing or other modules that have been implemented,” (Deloitte Consulting | Ibm, 2024, p. 2). This flexibility enables state healthcare agencies to optimize their systems continuously without facing operational disruptions.
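To illustrate the modular idea for myself, I wrote the minimal sketch below. It is my own illustration and not taken from the case study: the `ModuleRegistry` class, the module names, and the vendor labels are all hypothetical, and the real MES platform will certainly look different. The sketch only shows the principle that one module can be replaced while another keeps working unchanged.

```python
from typing import Callable, Dict

# Hypothetical handler type: takes a request dict, returns a status string.
Handler = Callable[[dict], str]


class ModuleRegistry:
    """Toy stand-in for a modular platform: each capability is a named module."""

    def __init__(self) -> None:
        self._modules: Dict[str, Handler] = {}

    def deploy(self, name: str, handler: Handler) -> None:
        # Deploying under an existing name replaces only that module.
        self._modules[name] = handler

    def handle(self, name: str, request: dict) -> str:
        return self._modules[name](request)


def claims_v1(request: dict) -> str:
    return f"claim {request['id']} processed"


def provider_vendor_a(request: dict) -> str:
    return f"provider {request['id']} enrolled (vendor A)"


def provider_vendor_b(request: dict) -> str:
    return f"provider {request['id']} enrolled (vendor B)"


registry = ModuleRegistry()
registry.deploy("claims", claims_v1)
registry.deploy("provider", provider_vendor_a)

claims_before = registry.handle("claims", {"id": 7})

# Swap out the provider module; the claims module is not touched.
registry.deploy("provider", provider_vendor_b)

claims_after = registry.handle("claims", {"id": 7})
provider_after = registry.handle("provider", {"id": 1})
```

In this toy version, claims processing returns the same result before and after the provider module is swapped, which is the behavior Erdahl describes in the quote above.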

Despite the numerous benefits offered by cloud computing, companies must also be mindful of its challenges and drawbacks. One concern is the reliance on internet connectivity, which is vital for the seamless operation of cloud-based systems. Because a simple internet outage could lead to productivity losses, customers must ensure they have a backup plan in place. Another primary concern when implementing cloud-based technology is security, which means IBM must uphold stringent cybersecurity standards to protect its customers’ data.

I believe Deloitte made a great decision to partner with IBM to develop a cloud-based solution for the Medicaid Management Information System. By utilizing cloud computing, state healthcare agencies can achieve significant cost savings and operational efficiencies. Despite the risks associated with cloud computing, “the VMware Cloud Foundation on the IBM Cloud platform offers firewall services, virtual SAN components, and other virtual resources for the rapid deployment of the MES solution” (Deloitte Consulting | Ibm, 2024, p. 2). Hence, the benefits of the IBM Cloud solution, along with the combined expertise of Deloitte and IBM, outweigh the potential challenges, making it a strategic choice for modernizing Medicaid Management Information Systems.

Deloitte consulting | ibm. (2024, February 1). https://www.ibm.com/case-studies/deloitte-consulting


Case Study: IBM - i3ARCHIVE

- Deployment Country

- Pennsylvania - USA

- Healthcare, Life Sciences

- Business-to-Business, Business-to-Consumer, Business Performance Transformation, Business Resiliency, Content Management, Data Warehouse, Database Management, Digital Media, Grid Computing, Innovation that matters.

- i3ARCHIVE needed to give healthcare providers a means of managing and transporting digital medical images that fit seamlessly into their operations.

- Business need:

- Rising costs require healthcare providers to seek flexible, cost-effective solutions.

- Using grid technology, i3ARCHIVE created a system that enables providers and researchers to "plug in" to its base of two million images for more accurate testing and diagnosis.

- Fifty percent reduction in a hospital's overall medical imaging transport costs, resulting in savings of up to US$1M annually for larger hospitals.

"Hospitals are yearning for more on demand services so they can focus less on IT and more on healthcare ... With IBM's help and technology, we're giving them the services and information they need to change the way they provide care."-- Derek Danois, CEO, i3ARCHIVE.

On Demand Business defined

“An enterprise whose business processes—integrated end-to-end across the company and with key partners, suppliers and customers—can respond with speed to any customer demand, market opportunity or external threat.”

- Why Become an On Demand Business?

- Key Benefits

In recent years, the healthcare industry has witnessed a dramatic growth in its information technology investments. Driven by necessity and enabled by innovative new technologies and approaches, healthcare institutions are increasingly looking to IT as the cornerstone of a new way of delivering healthcare services. Clinicians and researchers see powerful processing resources combined with sophisticated analytical tools as a way to uncover better ways of diagnosing and treating illnesses.

This infusion of IT into clinical activities has already produced a surge of dramatic results and even higher expectations for the future. Put simply, the tools are within reach for clinicians and researchers to find and understand the underlying patterns of disease like they never could before.

On Demand Business Benefits

- Fifty percent reduction in a hospital’s overall medical imaging transport costs, resulting in savings of up to US$1 million annually for larger hospitals

- Reduction in time to deliver medical files to physicians’ offices from days to minutes

- Improved diagnostic accuracy

- Faster FDA approval of imaging devices by leveraging NDMA data

- Ability of patients to access personal health information on demand and the inherent cost savings associated with self-management

- More efficient allocation of investment resources by hospitals through the avoidance of IT expenditures and support costs

Learning to share

Despite their widening embrace of technology, healthcare institutions, perhaps more than ever, don't want to be in the information technology business. Despite a clear need for advanced IT capabilities, hospitals and clinics have been compelled by rapidly rising healthcare costs to focus their resources on their core mission. As a result, hospital executives are under growing pressure to avoid investments in costly IT infrastructure and the staff required to support it.

However, this presents a paradox and a challenge for healthcare providers seeking to achieve the vision of information-based medicine outlined above. In the effort to glean insights from patterns within clinical data, the biggest payoff naturally comes from aggregating the same type of data (e.g., test results) from different sources (e.g., hospitals). With clinicians and researchers trying to discern the subtle patterns within the "big picture," the more data points -- or "pixels" -- they can add to the picture, the better their chances of finding these patterns. Medical imaging illustrates this well. While many hospitals are embracing digital imaging technology (including the PACS, or picture archiving and communication systems, that enable them to share imaging files within a given hospital), most hospitals lack the ability to share their medical imaging data with other hospitals. This means the power of pooled data goes largely untapped. With few if any hospitals ready to face the technical and economic challenges that bridging this gulf entailed, innovation was needed to fundamentally change the equation for hospitals. That's just what i3ARCHIVE did.

“What we’re doing fits into a trend of hospitals wanting to get out of the IT business. If you talk to hospital CFOs and CEOs, they will tell you the thing they don’t want to be doing is spending lots of money to build up their own IT infrastructures.” – Derek Danois

Based in Berwyn, Pa., i3ARCHIVE originated as a federally funded research project led by the University of Pennsylvania whose goal was to create an open, secure and nationwide repository for digital medical images and data designed to encourage collaboration among hospitals, clinics and researchers. To succeed, i3 had to meet stiff challenges on two levels. First, it needed to create a powerful, flexible and resilient infrastructure capable of handling enormous transaction volumes as large numbers of healthcare facilities across the U.S. added and accessed large digital medical image files to and from the system. An even greater challenge was to deliver this industrial-strength processing capability in a way that was available on demand, completely seamless to the clinicians and researchers using it, and imposed no additional infrastructure or support burdens on facilities that adopted it. The company was able to create an innovative shared-services infrastructure known as the National Digital Medical Archive (NDMA) that puts the power, as well as the management requirement, in the backend. For the front end of the solution, i3 created an interface it calls the WallPlug™ that enables healthcare facilities to procure NDMA-based services by essentially plugging into the enormous, real-time archive running invisibly in the background. The NDMA currently houses over two million patient images and is steadily growing every day.

Plugging into information power

For users, one of the biggest values of i3's approach is that it offers a fundamental change in the way they access leading-edge technology. Most hospitals would agree that they have enough servers, workstations, monitors and software to support and, if given the choice, would prefer to invest in things that generate revenue -- such as a new kind of testing device. i3's offering does this by making the NDMA a seamlessly connected service in a hospital's existing clinical information network. The key is its support for DICOM (Digital Imaging and Communications in Medicine), an industry standard for networked imaging devices in the healthcare industry. Accessing the NDMA's services like a utility, clinicians can either upload their patients' medical images such as X-rays, MRIs and CT scans into the NDMA for storage, or download select files of patients from around the country in order to perform comparisons and make a more accurate diagnosis. Requests are handled by a highly flexible grid-based infrastructure that dynamically shifts processing to any of 64 nodes distributed across three strategically placed hosting locations. For security, a pair of servers within each hospital acts as a buffer between the hospital's internal systems and the backend grid; encrypted data is passed across this link. To enable the service's key value proposition -- that it imposes no support burden on hospital staff or operations -- i3 relied on IBM eServer xSeries servers based on their powerful remote monitoring and management capability. xSeries servers, running IBM DB2 Universal Database Extended Enterprise Edition to perform high-volume parallel processing and DB2 Content Manager to manage the solution's content, also constitute the core of the grid.
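The dynamic shifting of requests across the grid can be pictured with a short sketch. This is an illustration only, not i3's implementation: the source gives the figures of 64 nodes and three hosting locations, but the least-loaded selection rule, the even spread of nodes across sites, and all names (`Node`, `Grid`, `site-a`, and so on) are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Node:
    node_id: int
    site: str                 # hypothetical hosting-location label
    active_requests: int = 0  # requests currently being processed


class Grid:
    """Toy dispatcher: send each request to the least-loaded node."""

    def __init__(self, sites: List[str], nodes_total: int = 64) -> None:
        # Assumption: nodes are spread round-robin across the hosting sites.
        self.nodes = [Node(i, sites[i % len(sites)]) for i in range(nodes_total)]

    def dispatch(self) -> Node:
        # Fewest active requests wins; ties go to the lowest node_id.
        node = min(self.nodes, key=lambda n: (n.active_requests, n.node_id))
        node.active_requests += 1
        return node

    def complete(self, node: Node) -> None:
        # Mark one request on this node as finished.
        node.active_requests -= 1


grid = Grid(["site-a", "site-b", "site-c"])
first = grid.dispatch()    # goes to node 0 at site-a
second = grid.dispatch()   # node 0 is now busier, so node 1 at site-b is chosen
grid.complete(first)
third = grid.dispatch()    # node 0 is idle again and has the lowest id
```

A production grid would also weigh factors such as network distance and node health; the sketch captures only the idea that no single node or site is tied to a given hospital's requests.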

Key Components

- IBM DB2® Universal Database™ Extended Enterprise Edition

- IBM DB2 Content Manager

- IBM eServer™ xSeries® server

- IBM EXP300 STOR Expansion Units

- IBM Global Services e-business Hosting™

- Upfront development: one year

- Expansion/deployment: ongoing

A study in leverage

Having set out to develop a flexible and versatile solution, i3 has exceeded its own initial expectations. One true hallmark of a solution's flexibility is the ability to leverage it -- to use it as a cost-effective base for adding new services or capabilities. Since i3 launched its core NDMA service, examples of such ROI-enhancing leverage have become abundant. A good example is the company's Storage, Disaster Recovery and Business Continuity offerings, which directly leverage the archive and require no added investments in storage media by hospitals. By creating a mirrored image of their PACS system within the archive, hospitals can use the NDMA for real-time failover if their internal systems go down.

Another example of "pure" leverage is i3's recently introduced communications services. Because the NDMA is an open platform, multiple hospitals can plug into it and use it as a conduit to send digital medical image files to and from their PACS systems. Through this service, one hospital expects to save US$1 million annually in processing and courier costs. But perhaps the greatest expression of the NDMA's inherent versatility is its ability to support an entirely new business model. In late 2005, i3 created a standalone business unit known as MyNDMA.com, which gives patients the ability to manage their own digital health records. The portal-based service also enables physicians to access their patients' records electronically, thus sparing patients the burden of physically retrieving and transporting their files to a new doctor in the event of a referral, second opinion or change in carrier. In addition to the obvious improvement in clinical efficiency, myNDMA gives patients an unprecedented degree of accessibility to their medical records and images, as well as the truly groundbreaking ability to participate in the management and security of their personal health records. Like all of its services, MyNDMA was created to meet the concrete demands of hospitals, physicians and patients. The openness and flexibility of the underlying archive enabled i3 to meet these needs rapidly and cost-effectively.

Why it matters

As a rule, clinicians can do a better job of diagnosing, tracking and treating illness when they have more clinical information at their disposal. However, constraints in both IT budgets and support staff have limited their ability to make the investments necessary to gain easier access to it. i3's innovation was to create a shared, on demand service that plugged directly into a hospital's existing medical information networks, thus shielding it from the complexity, costs and support requirements of a new IT infrastructure.

Finally, there's the issue of scale. With medical imaging data spread across unconnected islands, seeing the important patterns that can improve care is effectively impossible. In one of its earliest breakthrough applications, i3 was able to help medical imaging equipment vendors detect subtle gradations in the performance of certain devices over time such as the effect of heat and varying radiation output on image quality -- all with existing, real-time archive data. This gave vendors a solid basis to change the way their machines are configured to give more accurate results.

Overall, CEO Derek Danois sees i3's NDMA as a catalyst to transformation across the healthcare industry. "Hospitals are yearning for more on demand services so they can focus less on IT and more on healthcare, which requires a more efficient IT infrastructure. The NDMA resolves this paradox by delivering state-of-the-art information management services to its customers, built on the reliability and power of IBM technology."

Products and Services Used

IBM products and services that were used in this case study.

- xSeries Servers

- DB2 Universal Database Enterprise Server Edition, DB2 Content Manager

- IBM e-business Hosting, IBM Global Services

© Copyright IBM Corporation 2006. Produced in the United States of America 6-06.

- Survey paper

- Open access

- Published: 19 June 2019

Big data in healthcare: management, analysis and future prospects

- Sabyasachi Dash

- Sushil Kumar Shakyawar

- Mohit Sharma

- Sandeep Kaushik

Journal of Big Data, volume 6, Article number: 54 (2019)

‘Big data’ refers to massive amounts of information that can work wonders. It has been a topic of special interest for the past two decades because of the great potential hidden in it. Various public and private sector industries generate, store, and analyze big data with the aim of improving the services they provide. In the healthcare industry, sources of big data include hospital records, medical records of patients, results of medical examinations, and devices that are part of the internet of things. Biomedical research also generates a significant portion of big data relevant to public healthcare. This data requires proper management and analysis in order to derive meaningful information. Otherwise, seeking a solution by analyzing big data quickly becomes comparable to finding a needle in a haystack. There are various challenges associated with each step of handling big data, which can only be overcome by using high-end computing solutions for big data analysis. That is why, to provide relevant solutions for improving public health, healthcare providers need to be fully equipped with appropriate infrastructure to systematically generate and analyze big data. Efficient management, analysis, and interpretation of big data can change the game by opening new avenues for modern healthcare. That is exactly why various industries, including the healthcare industry, are taking vigorous steps to convert this potential into better services and financial advantages. With a strong integration of biomedical and healthcare data, modern healthcare organizations can possibly revolutionize medical therapies and personalized medicine.

Introduction

Information has been the key to better organization and new developments. The more information we have, the more optimally we can organize ourselves to deliver the best outcomes. That is why data collection is an important part of every organization. We can also use this data to predict current trends of certain parameters and future events. As we have become more aware of this, we have started producing and collecting more data about almost everything by introducing technological developments in this direction. Today, we are flooded with data from every aspect of our lives, such as social activities, science, work, and health. In a way, we can compare the present situation to a data deluge. Technological advances have helped us generate more and more data, even to a level where it has become unmanageable with currently available technologies. This has led to the creation of the term ‘big data’ to describe data that is large and unmanageable. In order to meet our present and future social needs, we need to develop new strategies to organize this data and derive meaningful information. One such social need is healthcare. Like every other industry, healthcare organizations are producing data at a tremendous rate, which presents many advantages and challenges at the same time. In this review, we discuss the basics of big data, including its management, analysis and future prospects, especially in the healthcare sector.

The data overload

Every day, people working with various organizations around the world generate a massive amount of data. The term “digital universe” quantitatively describes the massive amount of data created, replicated, and consumed in a single year. International Data Corporation (IDC) estimated the approximate size of the digital universe in 2005 to be 130 exabytes (EB). The digital universe in 2017 expanded to about 16,000 EB or 16 zettabytes (ZB). IDC predicted that the digital universe would expand to 40,000 EB by the year 2020. To imagine this size, we would have to assign about 5200 gigabytes (GB) of data to every individual. This exemplifies the phenomenal speed at which the digital universe is expanding. The internet giants, like Google and Facebook, have been collecting and storing massive amounts of data. For instance, depending on our preferences, Google may store a variety of information including user location, advertisement preferences, list of applications used, internet browsing history, contacts, bookmarks, emails, and other necessary information associated with the user. Similarly, Facebook stores and analyzes more than 30 petabytes (PB) of user-generated data. Such large amounts of data constitute ‘big data’. Over the past decade, the IT industry has successfully used big data to derive critical information that can generate significant revenue.
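The figures quoted above can be cross-checked with a few lines of arithmetic. The sketch below assumes decimal (SI) units, which the text does not state explicitly, and simply derives the population implied by the 5200 GB per-person share; the variable names are illustrative only.

```python
# Decimal (SI) units are an assumption; the text does not say which it uses.
GB = 10**9
EB = 10**18
ZB = 10**21

universe_2005_eb = 130       # IDC estimate for 2005
universe_2017_eb = 16_000    # size quoted for 2017
universe_2020_eb = 40_000    # IDC projection for 2020
per_person_gb = 5_200        # per-person share quoted in the text

total_bytes = universe_2020_eb * EB
total_zb = total_bytes / ZB

# Number of people among whom 40,000 EB yields 5200 GB each.
implied_population = total_bytes / (per_person_gb * GB)

# Growth between the 2005 and 2017 figures.
growth_factor = universe_2017_eb / universe_2005_eb

print(f"2020 projection: {total_zb:.0f} ZB")
print(f"implied population: {implied_population / 1e9:.2f} billion")
print(f"2005 to 2017 growth: about {growth_factor:.0f}x")
```

Under these assumptions the per-person share corresponds to a population of roughly 7.7 billion, so the 5200 GB figure is consistent with the 40,000 EB projection.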

These observations have become so conspicuous that they have eventually led to the birth of a new field of science termed ‘Data Science’. Data science deals with various aspects of data, including management and analysis, to extract deeper insights for improving the functionality or services of a system (for example, healthcare and transport systems). Additionally, with the availability of some of the most creative and meaningful ways to visualize big data post-analysis, it has become easier to understand the functioning of any complex system. As a large section of society is becoming aware of, and involved in generating, big data, it has become necessary to define what big data is. Therefore, in this review, we attempt to provide details on the impact of big data on the transformation of the global healthcare sector and on our daily lives.

Defining big data

As the name suggests, ‘big data’ represents large amounts of data that are unmanageable using traditional software or internet-based platforms. It exceeds the storage, processing and analytical power traditionally used. Even though a number of definitions of big data exist, the most popular and well-accepted definition was given by Douglas Laney. Laney observed that (big) data was growing in three different dimensions, namely volume, velocity and variety (known as the 3 Vs) [ 1 ]. The ‘big’ part of big data is indicative of its large volume. In addition to volume, the description of big data also includes velocity and variety. Velocity indicates the speed or rate at which data is collected and made accessible for further analysis, while variety refers to the different types of organized and unorganized data that any firm or system can collect, such as transaction-level data, video, audio, text or log files. These three Vs have become the standard definition of big data. Although other people have added several other Vs to this definition [ 2 ], the most accepted fourth V remains ‘veracity’.

The term “big data” has become extremely popular across the globe in recent years. Almost every sector of research, whether in industry or academia, is generating and analyzing big data for various purposes. The most challenging task regarding this huge heap of data, which can be organized or unorganized, is its management. Given that big data is unmanageable using traditional software, we need technically advanced applications and software that can utilize fast and cost-efficient high-end computational power for such tasks. Implementation of artificial intelligence (AI) algorithms and novel fusion algorithms would be necessary to make sense of this large amount of data. Indeed, it would be a great feat to achieve automated decision-making by implementing machine learning (ML) methods like neural networks and other AI techniques. However, in the absence of appropriate software and hardware support, big data can be quite hazy. We need to develop better techniques to handle this ‘endless sea’ of data and smart web applications for efficient analysis to gain workable insights. With proper storage and analytical tools in hand, the information and insights derived from big data can make critical social infrastructure components and services (like healthcare, safety or transportation) more aware, interactive and efficient [ 3 ]. In addition, visualization of big data in a user-friendly manner will be a critical factor for societal development.

Healthcare as a big-data repository

Healthcare is a multi-dimensional system established with the sole aim of preventing, diagnosing, and treating health-related issues or impairments in human beings. The major components of a healthcare system are the health professionals (physicians or nurses), health facilities (clinics, hospitals for delivering medicines and other diagnosis or treatment technologies), and a financing institution supporting the former two. The health professionals belong to various health sectors like dentistry, medicine, midwifery, nursing, psychology, physiotherapy, and many others. Healthcare is required at several levels depending on the urgency of the situation. Professionals provide it as the first point of consultation (primary care), acute care requiring skilled professionals (secondary care), advanced medical investigation and treatment (tertiary care) and highly uncommon diagnostic or surgical procedures (quaternary care). At all these levels, the health professionals are responsible for different kinds of information, such as the patient’s medical history (diagnosis and prescription-related data), medical and clinical data (like data from imaging and laboratory examinations), and other private or personal medical data. Previously, the common practice was to store such medical records for a patient in the form of either handwritten notes or typed reports [ 4 ]. Even the results of a medical examination were stored in a paper file system. In fact, this practice is very old, with the oldest case reports existing on a papyrus text from Egypt that dates back to 1600 BC [ 5 ]. In Stanley Reiser’s words, the clinical case records “freeze the episode of illness as a story in which patient, family and the doctor are a part of the plot” [ 6 ].

With the advent of computer systems and their potential, the digitization of all clinical exams and medical records in healthcare systems has become a standard and widely adopted practice. In 2003, a division of the National Academies of Sciences, Engineering, and Medicine known as the Institute of Medicine chose the term “electronic health records” to represent records maintained for improving the health care sector for the benefit of patients and clinicians. Electronic health records (EHR), as defined by Murphy, Hanken and Waters, are computerized medical records for patients: “any information relating to the past, present or future physical/mental health or condition of an individual which resides in electronic system(s) used to capture, transmit, receive, store, retrieve, link and manipulate multimedia data for the primary purpose of providing healthcare and health-related services” [ 7 ].

Electronic health records

It is important to note that the National Institutes of Health (NIH) recently announced the “All of Us” initiative ( https://allofus.nih.gov/ ), which aims to collect data from one million or more patients, such as EHRs, medical imaging, and socio-behavioral and environmental data, over the next few years. EHRs have introduced many advantages for handling modern healthcare-related data. Below, we describe some of the characteristic advantages of using EHRs. The first advantage of EHRs is that healthcare professionals have improved access to the entire medical history of a patient. The information includes medical diagnoses, prescriptions, data related to known allergies, demographics, clinical narratives, and the results obtained from various laboratory tests. The recognition and treatment of medical conditions is thus more time efficient, owing to a reduction in the lag time for retrieving previous test results. Over time, we have observed a significant decrease in redundant and additional examinations, lost orders and ambiguities caused by illegible handwriting, and improved care coordination between multiple healthcare providers. Overcoming such logistical errors has led to a reduction in the number of drug allergies by reducing errors in medication dose and frequency. Healthcare professionals have also found that access through web-based and electronic platforms improves their medical practices significantly, using automatic reminders and prompts regarding vaccinations, abnormal laboratory results, cancer screening, and other periodic checkups. EHRs can provide greater continuity of care and timely interventions by facilitating communication among multiple healthcare providers and patients. They can be linked to electronic authorization and immediate insurance approvals, owing to less paperwork. EHRs enable faster data retrieval and facilitate reporting of key healthcare quality indicators to organizations, and also improve public health surveillance through immediate reporting of disease outbreaks. EHRs also provide relevant data regarding the quality of care for the beneficiaries of employee health insurance programs and can help control the increasing costs of health insurance benefits. Finally, EHRs can reduce or even eliminate delays and confusion in billing and claims management. Together, EHRs and the internet help provide access to a vast amount of health-related medical information critical for patient life.

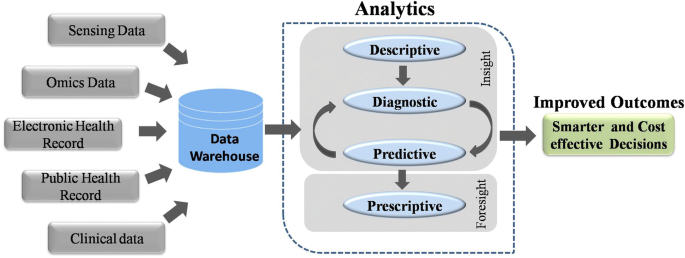

Digitization of healthcare and big data

Similar to an EHR, an electronic medical record (EMR) stores the standard medical and clinical data gathered from patients. EHRs, EMRs, personal health records (PHR), medical practice management software (MPM), and many other healthcare data components collectively have the potential to improve the quality, service efficiency, and costs of healthcare while reducing medical errors. Big data in healthcare includes healthcare payer-provider data (such as EMRs, pharmacy prescriptions, and insurance records) along with genomics-driven experiments (such as genotyping and gene expression data) and other data acquired from the smart web of the internet of things (IoT) (Fig. 1). The adoption of EHRs was slow at the beginning of the 21st century; however, it has grown substantially since 2009 [ 7 , 8 ]. The management and usage of such healthcare data have become increasingly dependent on information technology. The development and usage of wellness monitoring devices and related software that can generate alerts and share a patient’s health-related data with the respective healthcare providers has gained momentum, especially in establishing real-time biomedical and health monitoring systems. These devices generate a huge amount of data that can be analyzed to provide real-time clinical or medical care [ 9 ]. The use of big data from healthcare shows promise for improving health outcomes and controlling costs.

Fig. 1 Workflow of big data analytics. Data warehouses store massive amounts of data generated from various sources. This data is processed using analytic pipelines to obtain smarter and affordable healthcare options

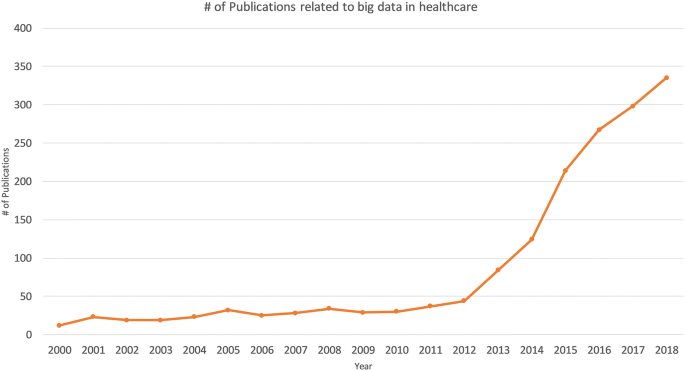

Big data in biomedical research

A biological system, such as a human cell, exhibits a complex interplay of molecular and physical events. In order to understand the interdependencies of the various components and events of such a complex system, a biomedical or biological experiment usually gathers data on a smaller and/or simpler component. Consequently, multiple simplified experiments are required to generate a wide map of a given biological phenomenon of interest. This indicates that the more data we have, the better we understand the biological processes. With this idea, modern techniques have evolved at a great pace. For instance, one can imagine the amount of data generated since the integration of efficient technologies like next-generation sequencing (NGS) and genome-wide association studies (GWAS) to decode human genetics. NGS-based data provides information at depths that were previously inaccessible and takes the experimental scenario to a completely new dimension. It has increased the resolution at which we observe or record biological events associated with specific diseases in real time. The idea that large amounts of data can provide information that often remains unidentified or hidden in smaller experiments has ushered in the ‘-omics’ era. The ‘omics’ disciplines have witnessed significant progress: instead of studying a single ‘gene’, scientists can now study the whole ‘genome’ of an organism in ‘genomics’ studies within a given amount of time. Similarly, instead of studying the expression or ‘transcription’ of a single gene, we can now study the expression of all the genes, or the entire ‘transcriptome’ of an organism, in ‘transcriptomics’ studies. Each of these individual experiments generates a large amount of data with more depth of information than ever before. Yet, this depth and resolution might be insufficient to provide all the details required to explain a particular mechanism or event. Therefore, one usually finds oneself analyzing a large amount of data obtained from multiple experiments to gain novel insights. This is supported by a continuous rise in the number of publications regarding big data in healthcare (Fig. 2). Analysis of such big data from medical and healthcare systems can be of immense help in providing novel strategies for healthcare. The latest technological developments in data generation, collection and analysis have raised expectations of a revolution in the field of personalized medicine in the near future.

Fig. 2 Publications associated with big data in healthcare. The numbers of publications in PubMed are plotted by year

Big data from omics studies

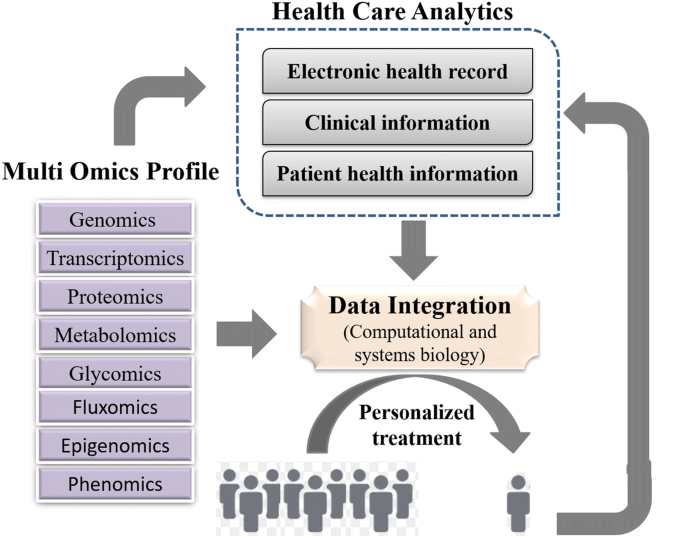

NGS has greatly simplified sequencing and decreased the cost of generating whole genome sequence data. The cost of complete genome sequencing has fallen from millions of dollars to a couple of thousand dollars [ 10 ]. NGS technology has resulted in an increased volume of biomedical data from genomic and transcriptomic studies. According to one estimate, the number of human genomes sequenced by 2025 could be between 100 million and 2 billion [ 11 ]. Combining genomic and transcriptomic data with proteomic and metabolomic data can greatly enhance our knowledge of the individual profile of a patient, an approach often described as “individual, personalized or precision health care”. Systematic and integrative analysis of omics data in conjunction with healthcare analytics can help design better treatment strategies towards precision and personalized medicine (Fig. 3). Genomics-driven experiments, e.g., genotyping, gene expression, and NGS-based studies, are the major source of big data in biomedical healthcare, along with EMRs, pharmacy prescription information, and insurance records. Healthcare requires a strong integration of such biomedical data from various sources to provide better treatments and patient care. These prospects are so exciting that, even though genomic data from patients has many variables to be accounted for, commercial organizations are already using human genome data to help providers make personalized medical decisions. This might turn out to be a game-changer in future medicine and health.

Fig. 3 A framework for integrating omics data and health care analytics to promote personalized treatment

Internet of Things (IoT)

The healthcare industry has not been as quick as other industries to adapt to the big data movement. Therefore, big data usage in the healthcare sector is still in its infancy. For example, healthcare and biomedical big data have not yet converged to enhance healthcare data with molecular pathology. Such convergence can help unravel various mechanisms of action or other aspects of predictive biology. Therefore, to assess an individual’s health status, biomolecular and clinical datasets need to be married. One such source of clinical data in healthcare is the ‘internet of things’ (IoT).

In fact, IoT is another big player implemented in a number of other industries including healthcare. Until recently, the objects of common use such as cars, watches, refrigerators and health-monitoring devices, did not usually produce or handle data and lacked internet connectivity. However, furnishing such objects with computer chips and sensors that enable data collection and transmission over internet has opened new avenues. The device technologies such as Radio Frequency IDentification (RFID) tags and readers, and Near Field Communication (NFC) devices, that can not only gather information but interact physically, are being increasingly used as the information and communication systems [ 3 ]. This enables objects with RFID or NFC to communicate and function as a web of smart things. The analysis of data collected from these chips or sensors may reveal critical information that might be beneficial in improving lifestyle, establishing measures for energy conservation, improving transportation, and healthcare. In fact, IoT has become a rising movement in the field of healthcare. IoT devices create a continuous stream of data while monitoring the health of people (or patients) which makes these devices a major contributor to big data in healthcare. Such resources can interconnect various devices to provide a reliable, effective and smart healthcare service to the elderly and patients with a chronic illness [ 12 ].

Advantages of IoT in healthcare

Using the web of IoT devices, a doctor can measure and monitor various parameters of his or her patients in their respective locations, for example at home or in the office. Through early intervention and treatment, a patient might not need hospitalization or even a visit to the doctor, resulting in a significant reduction in healthcare expenses. Some examples of IoT devices used in healthcare include fitness or health-tracking wearable devices, biosensors, clinical devices for monitoring vital signs, and other types of devices or clinical instruments. Such IoT devices generate a large amount of health-related data. If we can integrate this data with other existing healthcare data like EMRs or PHRs, we can predict a patient’s health status and its progression from a subclinical to a pathological state [ 9 ]. In fact, big data generated from IoT has been quite advantageous in several areas, offering better investigation and predictions. On a larger scale, the data from such devices can help in personal health monitoring, modelling the spread of a disease and finding ways to contain a particular disease outbreak.

Because of its specific nature, the analysis of data from IoT would require updated operating software along with advanced hardware and software applications. We would need to manage the data inflow from IoT instruments in real time and analyze it by the minute. Stakeholders in the healthcare system are trying to trim costs and improve the quality of care by applying advanced analytics to both internally and externally generated data.
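To make the idea of analyzing an IoT data inflow by the minute more concrete, here is a minimal sketch that groups timestamped readings into one-minute buckets and flags minutes whose average exceeds a threshold. The readings, the heart-rate interpretation, and the `ALERT_BPM` threshold are all invented for illustration, and a fixed list stands in for what would be a live stream in practice.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical readings: (seconds since monitoring started, heart rate in bpm).
READINGS = [(5, 72), (30, 75), (59, 78), (61, 110), (90, 120), (125, 80)]

# Assumed alert threshold, chosen only for this illustration.
ALERT_BPM = 100


def per_minute_means(readings):
    """Group readings into one-minute buckets and average each bucket."""
    buckets = defaultdict(list)
    for timestamp, bpm in readings:
        buckets[timestamp // 60].append(bpm)
    return {minute: mean(values) for minute, values in sorted(buckets.items())}


summary = per_minute_means(READINGS)

# Minutes whose average reading exceeds the assumed threshold.
alerts = [minute for minute, average in summary.items() if average > ALERT_BPM]

print(summary)
print("minutes needing attention:", alerts)
```

A production system would process readings incrementally as they arrive rather than in one batch, but the bucketing and thresholding logic would look similar.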

Mobile computing and mobile health (mHealth)

In today’s digital world, every individual seems to be obsessed with tracking their fitness and health statistics using the built-in pedometers of their portable and wearable devices, such as smartphones, smartwatches, fitness dashboards or tablets. With an increasingly mobile society in almost all aspects of life, the healthcare infrastructure needs remodeling to accommodate mobile devices [ 13 ]. The practice of medicine and public health using mobile devices, known as mHealth or mobile health, pervades different degrees of health care, especially for chronic diseases such as diabetes and cancer [ 14 ]. Healthcare organizations are increasingly using mobile health and wellness services to implement novel and innovative ways to provide care and coordinate health as well as wellness. Mobile platforms can improve healthcare by accelerating interactive communication between patients and healthcare providers. In fact, Apple and Google have developed dedicated platforms, like Apple’s ResearchKit and Google Fit, for developing research applications for fitness and health statistics [ 15 ]. These applications support seamless interaction with various consumer devices and embedded sensors for data integration. These apps give doctors direct access to a user’s overall health data, so both users and their doctors know the real-time status of the user’s body. These apps and smart devices also help by improving wellness planning and encouraging healthy lifestyles. Users or patients can become advocates for their own health.

Nature of the big data in healthcare

EHRs can enable advanced analytics and support clinical decision-making by providing enormous amounts of data. However, a large proportion of this data is currently unstructured. Unstructured data is information that does not adhere to a pre-defined model or organizational framework. One reason for this may simply be that we can record it in a myriad of formats. Another reason for opting for an unstructured format is that the structured input options (drop-down menus, radio buttons, and check boxes) often fall short in capturing data of a complex nature. For example, we cannot record non-standard data regarding a patient’s clinical suspicions, socioeconomic data, patient preferences, key lifestyle factors, and other related information in any way other than an unstructured format. It is difficult to group such varied, yet critical, sources of information into an intuitive or unified data format for further analysis using algorithms to understand and improve patient care. Nonetheless, the healthcare industry is required to utilize the full potential of these rich streams of information to enhance the patient experience. In the healthcare sector, this could materialize in the form of better management, better care and low-cost treatments. We are miles away from realizing the benefits of big data in a meaningful way and harnessing the insights that come from it. In order to achieve these goals, we need to manage and analyze big data in a systematic manner.
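The contrast between structured fields and free-text notes can be illustrated with a small sketch. The record layout, the field names, and the note text below are hypothetical, and the regular expression is a deliberately simple stand-in for the far more robust text-mining methods a real system would need.

```python
import re

# Hypothetical EHR entry: the structured field is empty, while the
# lifestyle information was typed into a free-text clinical note.
record = {
    "patient_id": "p1",
    "smoker": None,
    "note": "Pt reports smoking 10 cigarettes/day; lives alone; "
            "prefers evening appointments.",
}


def cigarettes_per_day(note):
    """Pull a daily cigarette count out of free text, if one is stated."""
    match = re.search(r"(\d+)\s*cigarettes?\s*/\s*day", note, flags=re.IGNORECASE)
    return int(match.group(1)) if match else None


count = cigarettes_per_day(record["note"])
print(count)  # 10
```

The pattern breaks as soon as a clinician phrases the note differently (for example "half a pack daily"), which is one reason unstructured data is hard to fold into a unified format.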

Management and analysis of big data

Big data refers to huge amounts of varied data generated at a rapid rate. The data gathered from various sources is mostly required for optimizing consumer services rather than consumer consumption. This is also true for big data from biomedical research and healthcare. The major challenge with big data is how to handle this large volume of information. To make it available to the scientific community, the data needs to be stored in a file format that is easily accessible and readable for efficient analysis. In the context of healthcare data, another major challenge is the implementation of high-end computing tools, protocols and hardware in the clinical setting. Experts from diverse backgrounds, including biology, information technology, statistics, and mathematics, are required to work together to achieve this goal. The data collected using sensors can be made available on a storage cloud with pre-installed software tools developed by analytic tool developers. These tools would have data mining and ML functions developed by AI experts to convert the information stored as data into knowledge. Upon implementation, this would enhance the efficiency of acquiring, storing, analyzing, and visualizing big data from healthcare. The main task is to annotate, integrate, and present this complex data in an appropriate manner for better understanding. In the absence of such relevant information, the (healthcare) data remains quite cloudy and may not lead biomedical researchers any further. Finally, visualization tools developed by computer graphics designers can efficiently display this newly gained knowledge.

Heterogeneity of data is another challenge in big data analysis. The huge size and highly heterogeneous nature of big data in healthcare render it relatively less informative when using conventional technologies. The most common platforms for operating the software frameworks that assist big data analysis are high-power computing clusters accessed via grid computing infrastructures. Cloud computing is one such system; it has virtualized storage technologies and provides reliable services. It offers high reliability, scalability and autonomy along with ubiquitous access, dynamic resource discovery and composability. Such platforms can act as a receiver of data from ubiquitous sensors, as a computer to analyze and interpret the data, and as a provider of easy-to-understand web-based visualization for the user. In IoT, big data processing and analytics can be performed closer to the data source using the services of mobile edge computing cloudlets and fog computing. Advanced algorithms are required to implement ML and AI approaches for big data analysis on computing clusters. A programming language suitable for working with big data (e.g. Python, R or other languages) could be used to write such algorithms or software. Therefore, a good knowledge of both biology and IT is required to handle big data from biomedical research. Such a combination of skills is typically found in bioinformaticians. The most common platforms used for working with big data include Hadoop and Apache Spark. We briefly introduce these platforms below.

Hadoop

Loading large amounts of (big) data into the memory of even the most powerful computing clusters is not an efficient way to work with big data. Therefore, the most logical approach for analyzing huge volumes of complex big data is to distribute and process it in parallel on multiple nodes. However, the size of the data is usually so large that thousands of computing machines are required to distribute and finish processing in a reasonable amount of time. When working with hundreds or thousands of nodes, one has to handle issues like how to parallelize the computation, distribute the data, and handle failures. One of the most popular open-source distributed applications for this purpose is Hadoop [ 16 ]. Hadoop implements the MapReduce algorithm for processing and generating large datasets. MapReduce uses map and reduce primitives: the map operation turns each logical ‘record’ in the input into a set of intermediate key/value pairs, and the reduce operation combines all the values that share the same key [ 17 ]. It efficiently parallelizes the computation, handles failures, and schedules inter-machine communication across large-scale clusters of machines. The Hadoop Distributed File System (HDFS) is the file system component that provides scalable, efficient, and replica-based storage of data at the various nodes that form part of a cluster [ 16 ]. Hadoop has other tools that enhance the storage and processing components; therefore, many large companies like Yahoo, Facebook, and others have rapidly adopted it. Hadoop has enabled researchers to use data sets that would otherwise be impossible to handle. Many large projects, such as determining a correlation between air quality data and asthma admissions, drug development using genomic and proteomic data, and other such aspects of healthcare, are implementing Hadoop. Therefore, with the implementation of the Hadoop system, healthcare analytics will not be held back.
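The map and reduce primitives described above can be sketched on a single machine in plain Python. This is only an illustration of the programming model, not Hadoop's API: the admission records are invented, and the `shuffle` step stands in for the grouping that a real framework performs across nodes.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

# Hypothetical input: one admission record per line, "patient_id,diagnosis".
RECORDS = [
    "p1,asthma",
    "p2,diabetes",
    "p3,asthma",
    "p4,asthma",
    "p5,diabetes",
]


def map_record(record: str) -> Iterator[Tuple[str, int]]:
    """Map: turn one logical record into intermediate (key, value) pairs."""
    _patient_id, diagnosis = record.split(",")
    yield diagnosis, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group intermediate values by key (the framework does this in practice)."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_values(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: combine all values that share the same key."""
    return key, sum(values)


def map_reduce(records: Iterable[str]) -> Dict[str, int]:
    intermediate = (pair for record in records for pair in map_record(record))
    return dict(reduce_values(k, v) for k, v in shuffle(intermediate).items())


counts = map_reduce(RECORDS)
print(counts)  # {'asthma': 3, 'diabetes': 2}
```

Because each call to `map_record` depends on only one record and each call to `reduce_values` on only one key, both phases can be spread over many machines, which is what makes the model suitable for cluster-scale data.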

Apache Spark

Apache Spark is an open-source alternative to Hadoop. It is a unified engine for distributed data processing that includes higher-level libraries supporting SQL queries (Spark SQL), streaming data (Spark Streaming), machine learning (MLlib) and graph processing (GraphX) [ 18 ]. These libraries increase developer productivity because the programming interface requires less coding effort, and they can be seamlessly combined to build more complex computations. By implementing Resilient Distributed Datasets (RDDs), Spark supports in-memory processing of data, which can make it about 100× faster than Hadoop in multi-pass analytics (on smaller datasets) [ 19 , 20 ]. The advantage is greatest when the data size is smaller than the available memory [ 21 ]. Processing really big data with Apache Spark would therefore require a large amount of memory. Since memory costs more than hard drive storage, MapReduce is expected to be more cost-effective than Apache Spark for large datasets. Similarly, Apache Storm was developed to provide a real-time framework for data stream processing. This platform supports most programming languages. Additionally, it offers good horizontal scalability and built-in fault tolerance for big data analysis.
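The advantage of in-memory processing for multi-pass analytics can be seen by counting how often the input has to be re-read. The sketch below is a plain-Python simulation, not Spark code: the `DiskDataset` class, its read counter, and the three-pass computation are hypothetical stand-ins for a disk-backed dataset and an iterative algorithm.

```python
class DiskDataset:
    """Hypothetical stand-in for a dataset stored on disk; counts full scans."""

    def __init__(self, values):
        self._values = list(values)
        self.disk_reads = 0

    def scan(self):
        """Simulate one full read of the dataset from disk."""
        self.disk_reads += 1
        return list(self._values)


def three_pass_stats(load):
    """Run three passes (mean, variance, maximum), calling load() before each."""
    data = load()
    mean = sum(data) / len(data)
    data = load()
    variance = sum((x - mean) ** 2 for x in data) / len(data)
    data = load()
    return mean, variance, max(data)


values = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]

# Disk-based style: every pass re-reads the input from disk.
on_disk = DiskDataset(values)
disk_result = three_pass_stats(on_disk.scan)

# In-memory style: read once, keep the data cached, reuse it for every pass.
cached_source = DiskDataset(values)
cached = cached_source.scan()
memory_result = three_pass_stats(lambda: cached)

print("full scans with re-reading:", on_disk.disk_reads)
print("full scans with caching:", cached_source.disk_reads)
```

Both variants compute the same statistics, but the cached variant only works if the whole dataset fits in memory, which is the cost trade-off described above.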

Machine learning for information extraction, data analysis and predictions

In healthcare, patient data contain recorded signals, for instance electrocardiograms (ECG), as well as images and videos. Healthcare providers have barely managed to convert such healthcare data into EHRs. Efforts are underway to digitize patient histories from pre-EHR era notes and to supplement the standardization process by turning static images into machine-readable text. For example, optical character recognition (OCR) software can recognize handwriting as well as computer fonts and so push digitization forward. Such unstructured and structured healthcare datasets hold an untapped wealth of information that can be harnessed using advanced AI programs to draw critical, actionable insights in the context of patient care. In fact, AI has emerged as the method of choice for big data applications in medicine, and it has quickly found its niche in the decision-making process for the diagnosis of diseases. Healthcare professionals analyze such data for targeted abnormalities using appropriate ML approaches, and ML can extract structured information from such raw data.

Extracting information from EHR datasets

Emerging ML- or AI-based strategies are helping to refine the healthcare industry’s information processing capabilities. For example, natural language processing (NLP) is a rapidly developing area of machine learning that can identify key syntactic structures in free text, help in speech recognition and extract the meaning behind a narrative. NLP tools can help generate new documents, such as a clinical visit summary, or support the dictation of clinical notes. The unique content and complexity of clinical documentation can be challenging for many NLP developers. Nonetheless, approaches such as NLP should make it possible to extract relevant information from healthcare data.
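As a rough illustration of turning free text into structured records, the following is a minimal rule-based sketch using regular expressions. It is far simpler than a real clinical NLP system: the note text, the pattern, and the field names are all invented for illustration and would not cover the variety of real clinical documentation.

```python
import re

# Hypothetical free-text clinical note, invented for illustration.
NOTE = (
    "Patient reports chest pain. Started metoprolol 50 mg twice daily. "
    "Continue aspirin 81 mg daily. Follow up in 2 weeks."
)

# Assumed pattern: a drug name, a numeric dose, a unit, and a simple frequency.
MEDICATION_PATTERN = re.compile(
    r"\b(?P<drug>[A-Za-z]+)\s+"
    r"(?P<dose>\d+(?:\.\d+)?)\s*"
    r"(?P<unit>mg|mcg|g|ml)\s+"
    r"(?P<frequency>(?:once|twice)\s+daily|daily)",
    re.IGNORECASE,
)


def extract_medications(text):
    """Return one structured record per medication mention found in the text."""
    records = []
    for match in MEDICATION_PATTERN.finditer(text):
        records.append(
            {
                "drug": match.group("drug").lower(),
                "dose": float(match.group("dose")),
                "unit": match.group("unit").lower(),
                "frequency": match.group("frequency").lower(),
            }
        )
    return records


medications = extract_medications(NOTE)
for record in medications:
    print(record)
```

Hand-written rules like this break quickly on abbreviations, misspellings, and negation ("stopped aspirin"), which is one reason clinical documentation is described above as challenging for NLP developers.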

AI has also been used to provide predictive capabilities to healthcare big data. For example, ML algorithms can turn the diagnostic interpretation of medical images into an automated decision-making process. Although healthcare professionals are unlikely to be replaced by machines in the near future, AI can assist physicians in making better clinical decisions or even replace human judgment in certain functional areas of healthcare.

Image analytics

Some of the most widely used imaging techniques in healthcare include computed tomography (CT), magnetic resonance imaging (MRI), X-ray, molecular imaging, ultrasound, photo-acoustic imaging, functional MRI (fMRI), positron emission tomography (PET), electroencephalography (EEG), and mammography. These techniques capture high-definition medical images (patient data) of large sizes. Healthcare professionals such as radiologists and doctors do an excellent job of analyzing these image files for targeted abnormalities. However, it is also important to acknowledge the lack of specialized professionals for many diseases. To compensate for this dearth of professionals, efficient systems such as the Picture Archiving and Communication System (PACS) have been developed for storing medical images and reports and providing convenient access to them [ 22 ]. PACSs are popular for delivering images to local workstations, which is accomplished by protocols such as Digital Imaging and Communications in Medicine (DICOM). However, data exchange with a PACS relies on structured data to retrieve medical images, which by nature misses the unstructured information contained in some biomedical images. Moreover, additional information about a patient’s health status that is present in these images or similar data can be missed: a professional focused on diagnosing an unrelated condition might not observe it, especially when the condition is still emerging. To help in such situations, image analytics is making an impact on healthcare by actively extracting disease biomarkers from biomedical images. This approach uses ML and pattern recognition techniques to draw insights from massive volumes of clinical image data to transform the diagnosis, treatment and monitoring of patients. It focuses on enhancing the diagnostic capability of medical imaging for clinical decision-making.

A number of software tools have been developed to perform medical image analysis and dig out this hidden information, grouped by functionality (generic, registration, segmentation, visualization, reconstruction, simulation and diffusion). For example, the Visualization Toolkit is freely available software that allows powerful processing and analysis of 3D images from medical tests [ 23 ], while SPM can process and analyze five different types of brain images (MRI, fMRI, PET, CT scan and EEG) [ 24 ]. Other software such as GIMIAS, Elastix, and MITK supports all types of images. Various other widely used tools in this domain and their features are listed in Table 1 . Such bioinformatics-based big data analysis may extract greater insights and value from imaging data to boost and support precision medicine projects, clinical decision support tools, and other modes of healthcare. For example, it can also be used to monitor new targeted treatments for cancer.
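Segmentation, one of the functionalities mentioned above, can be sketched in its simplest form as intensity thresholding followed by a measurement on the segmented region. The example below is a toy under stated assumptions: the 6x6 pixel grid and the threshold value are invented, and it does not reflect how any of the named tools work internally.

```python
# Hypothetical 6x6 grayscale "image" with a bright region on a dark background.
IMAGE = [
    [10, 12, 11, 13, 10, 12],
    [11, 90, 95, 92, 12, 10],
    [12, 93, 99, 96, 11, 13],
    [10, 91, 94, 12, 10, 11],
    [13, 11, 12, 10, 12, 10],
    [11, 10, 13, 11, 10, 12],
]

THRESHOLD = 50  # Assumed cut-off separating the bright region from background.


def segment(image, threshold):
    """Return a binary mask: 1 where intensity exceeds the threshold, else 0."""
    return [[1 if pixel > threshold else 0 for pixel in row] for row in image]


def region_stats(image, mask):
    """Compute area (pixel count) and mean intensity of the masked region."""
    pixels = [
        image[r][c]
        for r in range(len(image))
        for c in range(len(image[r]))
        if mask[r][c]
    ]
    area = len(pixels)
    mean_intensity = sum(pixels) / area if area else 0.0
    return area, mean_intensity


mask = segment(IMAGE, THRESHOLD)
area, mean_intensity = region_stats(IMAGE, mask)
print("region area (pixels):", area)
print("mean intensity:", mean_intensity)
```

Simple quantities such as the area and mean intensity of a segmented region are the kind of measurement that can be tracked over time, for example when monitoring a lesion during treatment.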

Big data from omics

Big data from “omics” studies poses a new kind of challenge for bioinformaticians. Robust algorithms are required to analyze such complex data from biological systems. The ultimate goal is to convert this huge volume of data into an informative knowledge base. The application of bioinformatics approaches to transform biomedical and genomics data into predictive and preventive health is known as translational bioinformatics, and it is at the forefront of data-driven healthcare. Various kinds of quantitative data in healthcare, for example laboratory measurements, medication data and genomic profiles, can be combined and used to identify new meta-data that can help precision therapies [ 25 ]. This is why new technologies are required to help analyze this digital wealth. In fact, highly ambitious multimillion-dollar projects such as the “Big Data Research and Development Initiative” have been launched to enhance the quality of big data tools and techniques for better organization, efficient access and smart analysis of big data. Many advantages are anticipated from processing the “omics” data from the large-scale Human Genome Project and other population sequencing projects. In population sequencing projects such as 1000 Genomes, researchers will have access to an enormous amount of raw data. Similarly, the Encyclopedia of DNA Elements (ENCODE) project, which builds on the Human Genome Project, aimed to determine all functional elements in the human genome using bioinformatics approaches. Here, we list some of the widely used bioinformatics-based tools for big data analytics on omics data.

SparkSeq is an efficient, cloud-ready platform based on the Apache Spark framework and the Hadoop library that is used for interactive analysis of genomic data with nucleotide precision.

SAMQA identifies errors and ensures the quality of large-scale genomic data. This tool was originally built for the National Institutes of Health Cancer Genome Atlas project to identify and report errors, including Sequence Alignment/Map (SAM) format errors and empty reads.

ART can simulate profiles of read errors and read lengths for data obtained using high throughput sequencing platforms including SOLiD and Illumina platforms.

DistMap is another toolkit for distributed short-read mapping on a Hadoop cluster that aims to cover a wider range of sequencing applications. For instance, one of its supported mappers, BWA, can map 500 million read pairs in about 6 h, approximately 13 times faster than a conventional single-node mapper.

SeqWare is a query engine based on the Apache HBase database system that enables access to large-scale whole-genome datasets by integrating genome browsers and tools.

CloudBurst is a parallel computing model utilized in genome mapping experiments to improve the scalability of reading large sequencing data.

Hydra uses the Hadoop-distributed computing framework for processing large peptide and spectra databases for proteomics datasets. This specific tool is capable of performing 27 billion peptide scorings in less than 60 min on a Hadoop cluster.

BlueSNP is an R package based on the Hadoop platform that is used for genome-wide association studies (GWAS) analysis, aiming primarily at statistical readouts to obtain significant associations in genotype–phenotype datasets. The tool is estimated to analyze 1000 phenotypes on 10^6 SNPs in 10^4 individuals in about half an hour.

Myrna, a cloud-based pipeline, provides information on differences in gene expression levels, including read alignments, data normalization, and statistical modeling.
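The performance figures quoted for DistMap, Hydra, and BlueSNP are easier to compare when converted to rough per-second rates. The short calculation below uses only the numbers stated in the list above. Treating the BlueSNP workload as one association test per phenotype-SNP pair is an assumption made here for illustration, and all results are order-of-magnitude estimates rather than benchmarks.

```python
SECONDS_PER_HOUR = 3600

# DistMap (BWA mapper): 500 million read pairs in about 6 h, about 13x faster
# than a conventional single-node mapper.
distmap_pairs_per_second = 500e6 / (6 * SECONDS_PER_HOUR)
single_node_hours = 6 * 13  # implied single-node time for the same job

# Hydra: 27 billion peptide scorings in under 60 min, so this is a lower bound.
hydra_scorings_per_second = 27e9 / (60 * 60)

# BlueSNP: 1000 phenotypes x 10^6 SNPs, assumed here to mean one test per
# phenotype-SNP pair, analyzed in about half an hour.
bluesnp_tests = 1000 * 10**6
bluesnp_tests_per_second = bluesnp_tests / (30 * 60)

print(f"DistMap: ~{distmap_pairs_per_second:,.0f} read pairs/s "
      f"(implied single-node time: ~{single_node_hours} h)")
print(f"Hydra: at least ~{hydra_scorings_per_second:,.0f} peptide scorings/s")
print(f"BlueSNP: ~{bluesnp_tests_per_second:,.0f} association tests/s")
```

Seen this way, the 13-fold speed-up quoted for DistMap corresponds to reducing a job of roughly three days on a single node to about a quarter of a day on the cluster.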

The past few years have witnessed a tremendous increase in disease-specific datasets from omics platforms. For example, the ArrayExpress Archive of Functional Genomics data repository contains information from approximately 30,000 experiments and more than one million functional assays. The growing amount of data demands better and more efficient bioinformatics-driven packages to analyze and interpret the information obtained. This has also led to the birth of specific tools to analyze such massive amounts of data. Below, we mention some of the most popular commercial platforms for big data analytics.

Commercial platforms for healthcare data analytics

To tackle big data challenges and perform smoother analytics, various companies have implemented AI to analyze published results, textual data, and image data to obtain meaningful outcomes. IBM Corporation is one of the biggest and most experienced players providing healthcare analytics services commercially. IBM’s Watson Health is an AI platform for sharing and analyzing health data among hospitals, providers and researchers. Similarly, Flatiron Health provides technology-oriented services in healthcare analytics, with a particular focus on cancer research. Other big companies such as Oracle Corporation and Google Inc. are also focusing on developing cloud-based storage and distributed computing platforms. Interestingly, in the past few years, several companies and start-ups have also emerged to provide healthcare analytics and solutions. Some of the vendors in the healthcare sector are listed in Table 2 . Below we discuss a few of these commercial solutions.

Ayasdi is one such big vendor. It focuses on ML-based methodologies and primarily provides a machine intelligence platform along with an application framework with tried and tested enterprise scalability. It provides various applications for healthcare analytics, for example to understand and manage clinical variation and to transform clinical care costs. It is also capable of analyzing and managing how hospitals are organized, conversations between doctors, risk-oriented treatment decisions by doctors, and the care they deliver to patients. It also provides an application for the assessment and management of population health, a proactive strategy that goes beyond traditional risk analysis methodologies. It uses ML intelligence to predict future risk trajectories, identify risk drivers, and provide solutions for the best outcomes. A strategic illustration of the company’s methodology for analytics is provided in Fig. 4 .

Illustration of application of “Intelligent Application Suite” provided by AYASDI for various analyses such as clinical variation, population health, and risk management in healthcare sector

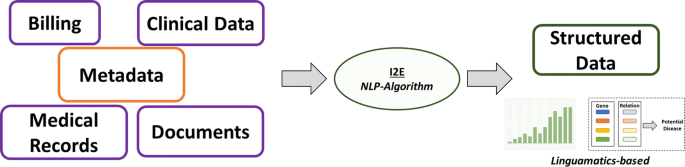

Linguamatics

It is an NLP-based approach that relies on an interactive text mining algorithm (I2E). I2E can extract and analyze a wide array of information. Results are obtained tenfold faster with this technique than with other tools, and it does not require expert knowledge for data interpretation. This approach can provide information on genetic relationships and facts from unstructured data. Classical ML requires well-curated data as input to generate clean and filtered results. However, NLP, when integrated into EHRs or clinical records, facilitates the extraction of clean and structured information that often remains hidden in unstructured input data (Fig. 5 ).

Schematic representation for the working principle of NLP-based AI system used in massive data retention and analysis in Linguamatics