Introduction

- Make an observation.

- Ask a question.

- Form a hypothesis, or testable explanation.

- Make a prediction based on the hypothesis.

- Test the prediction.

- Iterate: use the results to make new hypotheses or predictions.

Scientific method example: Failure to toast

1. Make an observation.

- Observation: the toaster won't toast.

2. Ask a question.

- Question: Why won't my toaster toast?

3. Propose a hypothesis.

- Hypothesis: Maybe the outlet is broken.

4. Make predictions.

- Prediction: If I plug the toaster into a different outlet, then it will toast the bread.

5. Test the predictions.

- Test of prediction: Plug the toaster into a different outlet and try again.

- If the toaster does toast, then the hypothesis is supported—likely correct.

- If the toaster doesn't toast, then the hypothesis is not supported—likely wrong.

6. Iterate.

- Iteration time!

- If the hypothesis was supported, we might do additional tests to confirm it, or revise it to be more specific. For instance, we might investigate why the outlet is broken.

- If the hypothesis was not supported, we would come up with a new hypothesis. For instance, the next hypothesis might be that there's a broken wire in the toaster.
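The loop of hypothesis, prediction, test, and iteration can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `world` dictionary, the `toasts` helper, and the "replace the wire" test are all hypothetical and do not come from the example above.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical state of the kitchen, invented for this sketch.
world = {"kitchen_outlet_ok": True, "spare_outlet_ok": True, "wire_ok": False}


def toasts(outlet_ok: bool, wire_ok: bool) -> bool:
    """Assumed model: the toaster works only if the outlet and its wiring are fine."""
    return outlet_ok and wire_ok


@dataclass
class Hypothesis:
    explanation: str
    prediction: str
    test: Callable[[], bool]  # returns True if the prediction held


def investigate(hypotheses: List[Hypothesis]) -> Optional[Hypothesis]:
    """Test each hypothesis in turn; return the first one the evidence supports."""
    for h in hypotheses:
        if h.test():
            return h  # supported: likely correct, worth further tests
        # not supported: iterate with the next hypothesis
    return None


hypotheses = [
    Hypothesis(
        explanation="The outlet is broken.",
        prediction="In a different outlet, the toaster will toast.",
        test=lambda: toasts(world["spare_outlet_ok"], world["wire_ok"]),
    ),
    Hypothesis(
        explanation="There is a broken wire in the toaster.",
        prediction="With the wire replaced, the toaster will toast.",
        test=lambda: toasts(world["kitchen_outlet_ok"], True),
    ),
]

supported = investigate(hypotheses)
print(supported.explanation if supported else "No hypothesis supported yet.")
```

In this made-up world the first hypothesis is not supported, so the loop moves on to the second. A supported hypothesis is still only "likely correct"; further tests would be needed to build confidence in it.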

Using the Scientific Method to Solve Problems

How the scientific method and reasoning can help simplify processes and solve problems.

By the Mind Tools Content Team

The processes of problem-solving and decision-making can be complicated and drawn out. In this article we look at how the scientific method, along with deductive and inductive reasoning, can help simplify these processes.

‘It is a capital mistake to theorize before one has data. Insensibly one begins to twist facts to suit theories, instead of theories to suit facts.’ Sherlock Holmes

The Scientific Method

The scientific method is a process used to explore observations and answer questions. Originally used by scientists looking to prove new theories, its use has spread into many other areas, including that of problem-solving and decision-making.

The scientific method is designed to eliminate the influences of bias, prejudice and personal beliefs when testing a hypothesis or theory. It has developed alongside science itself, with origins going back to the 13th century. The scientific method is generally described as a series of steps.

- observations/theory

- explanation/conclusion

The first step is to develop a theory about the particular area of interest. A theory, in the context of logic or problem-solving, is a conjecture or speculation about something that is not necessarily fact, often based on a series of observations.

Once a theory has been devised, it can be questioned and refined into more specific hypotheses that can be tested. The hypotheses are potential explanations for the theory.

The testing, and subsequent analysis, of these hypotheses will eventually lead to a conclusion which can prove or disprove the original theory.

Applying the Scientific Method to Problem-Solving

How can the scientific method be used to solve a problem, such as a color printer that is not working?

1. Use observations to develop a theory.

In order to solve the problem, it must first be clear what the problem is. Observations made about the problem should be used to develop a theory. In this particular problem the theory might be that the color printer has run out of ink. This theory is developed as the result of observing the increasingly faded output from the printer.

2. Form a hypothesis.

Note down all the possible reasons for the problem. In this situation they might include:

- The printer is set up as the default printer for all 40 people in the department and so is used more frequently than necessary.

- There has been increased usage of the printer due to non-work related printing.

- In an attempt to reduce costs, poor quality ink cartridges with limited amounts of ink in them have been purchased.

- The printer is faulty.

All these possible reasons are hypotheses.

3. Test the hypothesis.

Once as many hypotheses (or reasons) as possible have been thought of, then each one can be tested to discern if it is the cause of the problem. An appropriate test needs to be devised for each hypothesis. For example, it is fairly quick to ask everyone to check the default settings of the printer on each PC, or to check if the cartridge supplier has changed.

4. Analyze the test results.

Once all the hypotheses have been tested, the results can be analyzed. The type and depth of analysis will be dependent on each individual problem, and the tests appropriate to it. In many cases the analysis will be a very quick thought process. In others, where considerable information has been collated, a more structured approach, such as the use of graphs, tables or spreadsheets, may be required.

5. Draw a conclusion.

Based on the results of the tests, a conclusion can then be drawn about exactly what is causing the problem. The appropriate remedial action can then be taken, such as asking everyone to amend their default print settings, or changing the cartridge supplier.
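Steps 3 to 5 can be sketched as a small table of test results followed by a filter. The outcomes and the remedy for each cause below are hypothetical values chosen for illustration; a real investigation would fill them in from the actual checks.

```python
from typing import Dict, List

# Hypothetical outcomes of step 3: True means the test confirmed the hypothesis.
test_results: Dict[str, bool] = {
    "Printer is the default for all 40 people": True,
    "Increased non-work printing": False,
    "Poor quality cartridges were purchased": True,
    "Printer is faulty": False,
}

# Possible remedial actions; the first and third echo the examples in step 5,
# the other two are assumptions added for this sketch.
remedies: Dict[str, str] = {
    "Printer is the default for all 40 people": "ask everyone to amend their default print settings",
    "Increased non-work printing": "remind staff of the printing policy",
    "Poor quality cartridges were purchased": "change the cartridge supplier",
    "Printer is faulty": "arrange a repair",
}


def draw_conclusion(results: Dict[str, bool]) -> List[str]:
    """Steps 4 and 5: keep only the hypotheses whose tests confirmed them."""
    return [hypothesis for hypothesis, confirmed in results.items() if confirmed]


causes = draw_conclusion(test_results)
for cause in causes:
    print(f"{cause} -> {remedies[cause]}")
```

Keeping every hypothesis in the table, including the ones that were ruled out, leaves a record that all options were considered.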

Inductive and Deductive Reasoning

The scientific method involves the use of two basic types of reasoning, inductive and deductive.

Inductive reasoning makes a conclusion based on a set of empirical results. Empirical results are the product of the collection of evidence from observations. For example:

‘Every time it rains the pavement gets wet, therefore rain must be water’.

The hypothesis that rain is water has not been scientifically determined; it is based purely on observation. The formation of a hypothesis in this manner is sometimes referred to as an educated guess. An educated guess, whilst not based on hard facts, must still be plausible, and consistent with what we already know, in order to present a reasonable argument.

Deductive reasoning can be thought of most simply in terms of ‘If A and B, then C’. For example:

- if the window is above the desk, and

- the desk is above the floor, then

- the window must be above the floor

It works by building on a series of conclusions, which results in one final answer.
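The window, desk and floor example can be written as a short chaining routine. This is a minimal sketch that assumes "is above" is transitive; the function name `is_above` and the tuple representation of facts are choices made for this illustration.

```python
from typing import Optional, Set, Tuple

Fact = Tuple[str, str]  # (upper, lower) means "upper is above lower"


def is_above(facts: Set[Fact], upper: str, lower: str,
             _seen: Optional[Set[str]] = None) -> bool:
    """Return True if `upper` is above `lower`, directly or by chaining facts."""
    seen = set() if _seen is None else _seen
    if (upper, lower) in facts:
        return True
    seen.add(upper)  # avoid revisiting an object if the facts contain a cycle
    for a, b in facts:
        if a == upper and b not in seen and is_above(facts, b, lower, seen):
            return True
    return False


facts = {("window", "desk"), ("desk", "floor")}
print(is_above(facts, "window", "floor"))  # True
print(is_above(facts, "floor", "window"))  # False
```

Each recursive call is one intermediate conclusion, and the chain of those conclusions produces the final answer.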

Social Sciences and the Scientific Method

The scientific method can be used to address any situation or problem where a theory can be developed. Although more often associated with natural sciences, it can also be used to develop theories in social sciences (such as psychology, sociology and linguistics), using both quantitative and qualitative methods.

Quantitative information is information that can be measured, and tends to focus on numbers and frequencies. Typically quantitative information might be gathered by experiments, questionnaires or psychometric tests. Qualitative information, on the other hand, is based on information describing meaning, such as human behavior, and the reasons behind it. Qualitative information is gathered by way of interviews and case studies, which are possibly not as statistically accurate as quantitative methods, but provide a more in-depth and rich description.

The resultant information can then be used to prove, or disprove, a hypothesis. Using a mix of quantitative and qualitative information is more likely to produce a rounded result based on the factual, quantitative information enriched and backed up by actual experience and qualitative information.

In terms of problem-solving or decision-making, for example, the qualitative information is that gained by looking at the ‘how’ and ‘why’, whereas quantitative information would come from the ‘where’, ‘what’ and ‘when’.

It may seem easy to come up with a brilliant idea, or to suspect what the cause of a problem may be. However, things can get more complicated when the idea needs to be evaluated, or when there may be more than one potential cause of a problem. In these situations, the use of the scientific method, and its associated reasoning, can help the user come to a decision, or reach a solution, secure in the knowledge that all options have been considered.

The 6 Scientific Method Steps and How to Use Them

When you’re faced with a scientific problem, solving it can seem like an impossible prospect. There are so many possible explanations for everything we see and experience—how can you possibly make sense of them all? Science has a simple answer: the scientific method.

The scientific method is a method of asking and answering questions about the world. These guiding principles give scientists a model to work through when trying to understand the world, but where did that model come from, and how does it work?

In this article, we’ll define the scientific method, discuss its long history, and cover each of the scientific method steps in detail.

What Is the Scientific Method?

At its most basic, the scientific method is a procedure for conducting scientific experiments. It’s a set model that scientists in a variety of fields can follow, going from initial observation to conclusion in a loose but concrete format.

The number of steps varies, but the process begins with an observation, progresses through an experiment, and concludes with analysis and sharing data. One of the most important pieces of the scientific method is skepticism: the goal is to find truth, not to confirm a particular thought. That requires reevaluation and repeated experimentation, as well as examining your thinking through rigorous study.

There are in fact multiple scientific methods, as the basic structure can be easily modified. The one we typically learn about in school is the basic method, based in logic and problem solving, typically used in “hard” science fields like biology, chemistry, and physics. It may vary in other fields, such as psychology, but the basic premise of making observations, testing, and continuing to improve a theory from the results remains the same.

The History of the Scientific Method

The scientific method as we know it today is based on thousands of years of scientific study. Its development goes all the way back to ancient Mesopotamia, Greece, and India.

The Ancient World

In ancient Greece, Aristotle devised an inductive-deductive process, which weighs broad generalizations from data against conclusions reached by narrowing down possibilities from a general statement. However, he favored deductive reasoning, as it identifies causes, which he saw as more important.

Aristotle wrote a great deal about logic and many of his ideas about reasoning echo those found in the modern scientific method, such as ignoring circular evidence and limiting the number of middle terms between the beginning of an experiment and the end. Though his model isn’t the one that we use today, the reliance on logic and thorough testing are still key parts of science today.

The Middle Ages

The next big step toward the development of the modern scientific method came in the Middle Ages, particularly in the Islamic world. Ibn al-Haytham, a physicist from what we now know as Iraq, developed a method of testing, observing, and deducing for his research on vision. Ibn al-Haytham was critical of Aristotle’s lack of inductive reasoning, which played an important role in his own research.

Other scientists, including Abū Rayhān al-Bīrūnī, Ibn Sina, and Robert Grosseteste also developed models of scientific reasoning to test their own theories. Though they frequently disagreed with one another and Aristotle, those disagreements and refinements of their methods led to the scientific method we have today.

Following those major developments, particularly Grosseteste’s work, Roger Bacon developed his own cycle of observation (seeing that something occurs), hypothesis (making a guess about why that thing occurs), experimentation (testing that the thing occurs), and verification (an outside person ensuring that the result of the experiment is consistent).

After joining the Franciscan Order, Bacon was granted a special commission to write about science; typically, Friars were not allowed to write books or pamphlets. With this commission, Bacon outlined important tenets of the scientific method, including causes of error, methods of knowledge, and the differences between speculative and experimental science. He also used his own principles to investigate the causes of a rainbow, demonstrating the method’s effectiveness.

Scientific Revolution

Throughout the Renaissance, more great thinkers became involved in devising a thorough, rigorous method of scientific study. Francis Bacon brought inductive reasoning further into the method, whereas Descartes argued that the laws of the universe meant that deductive reasoning was sufficient. Galileo’s research was also inductive reasoning-heavy, as he believed that researchers could not account for every possible variable; therefore, repetition was necessary to eliminate faulty hypotheses and experiments.

All of this led to the birth of the Scientific Revolution, which took place during the sixteenth and seventeenth centuries. In 1660, a group of philosophers and physicians joined together to work on scientific advancement. After approval from England’s crown, the group became known as the Royal Society, which helped create a thriving scientific community and an early academic journal to help introduce rigorous study and peer review.

Previous generations of scientists had touched on the importance of induction and deduction, but Sir Isaac Newton proposed that both were equally important. This contribution helped establish the importance of multiple kinds of reasoning, leading to more rigorous study.

As science began to splinter into separate areas of study, it became necessary to define different methods for different fields. Karl Popper was a leader in this area—he established that science could be subject to error, sometimes intentionally. This was particularly tricky for “soft” sciences like psychology and social sciences, which require different methods. Popper’s theories furthered the divide between sciences like psychology and “hard” sciences like chemistry or physics.

Paul Feyerabend argued that Popper’s methods were too restrictive for certain fields, and followed a less restrictive method hinged on “anything goes,” as great scientists had made discoveries without the Scientific Method. Feyerabend suggested that throughout history scientists had adapted their methods as necessary, and that sometimes it would be necessary to break the rules. This approach suited social and behavioral scientists particularly well, leading to a more diverse range of models for scientists in multiple fields to use.

The Scientific Method Steps

Though different fields may have variations on the model, the basic scientific method is as follows:

#1: Make Observations

Notice something, such as the air temperature during the winter, what happens when ice cream melts, or how your plants behave when you forget to water them.

#2: Ask a Question

Turn your observation into a question. Why is the temperature lower during the winter? Why does my ice cream melt? Why does my toast always fall butter-side down?

This step can also include doing some research. You may be able to find answers to these questions already, but you can still test them!

#3: Make a Hypothesis

A hypothesis is an educated guess of the answer to your question. Why does your toast always fall butter-side down? Maybe it’s because the butter makes that side of the bread heavier.

A good hypothesis leads to a prediction that you can test, phrased as an if/then statement. In this case, we can pick something like, “If toast is buttered, then it will hit the ground butter-first.”

#4: Experiment

Your experiment is designed to test whether your prediction about what will happen is true. A good experiment will test one variable at a time. For example, we’re trying to test whether butter weighs down one side of toast, making it more likely to hit the ground first.

The unbuttered toast is our control. If we determine the chance that a slice of unbuttered toast, marked with a dot, will hit the ground on a particular side, we can compare those results to our buttered toast to see if there’s a correlation between the presence of butter and which way the toast falls.

If we decided not to toast the bread, that would be introducing a new question—whether or not toasting the bread has any impact on how it falls. Since that’s not part of our test, we’ll stick with determining whether the presence of butter has any impact on which side hits the ground first.

#5: Analyze Data

After our experiment, we discover that both buttered toast and unbuttered toast have a 50/50 chance of hitting the ground on the buttered or marked side when dropped from a consistent height, straight down. It looks like our hypothesis was incorrect—it’s not the butter that makes the toast hit the ground in a particular way, so it must be something else.
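One way to judge whether a tally is consistent with a 50/50 chance is an exact two-sided binomial test. The sketch below is not part of the experiment as described; the counts (52 butter-side landings in 100 drops) are hypothetical, and the helper name `binomial_two_sided_p` is an assumption made for this illustration.

```python
from math import comb


def binomial_two_sided_p(successes: int, trials: int, p: float = 0.5) -> float:
    """Exact two-sided p-value: total probability of every outcome that is
    no more likely than the observed one, assuming success probability p."""
    pmf = [comb(trials, k) * p**k * (1 - p) ** (trials - k)
           for k in range(trials + 1)]
    observed = pmf[successes]
    # The small tolerance guards against floating-point ties.
    total = sum(q for q in pmf if q <= observed * (1 + 1e-9))
    return min(1.0, total)


# Hypothetical tally: buttered toast landed butter-side down 52 times in 100 drops.
buttered_down, drops = 52, 100
p_value = binomial_two_sided_p(buttered_down, drops)
print(f"p-value = {p_value:.3f}")
```

A large p-value means a result like this is unremarkable if the true chance is 50/50, which matches the conclusion that butter is not what decides how the toast lands. A very small p-value would suggest the opposite and would be worth a repeat experiment.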

Since we didn’t get the desired result, it’s back to the drawing board. Our hypothesis wasn’t correct, so we’ll need to start fresh. Now that you think about it, your toast seems to hit the ground butter-first when it slides off your plate, not when you drop it from a consistent height. That can be the basis for your new experiment.

#6: Communicate Your Results

Good science needs verification. Your experiment should be replicable by other people, so you can put together a report about how you ran your experiment to see if other people’s findings are consistent with yours.

This may be useful for class or a science fair. Professional scientists may publish their findings in scientific journals, where other scientists can read and attempt their own versions of the same experiments. Being part of a scientific community helps your experiments be stronger because other people can see if there are flaws in your approach—such as if you tested with different kinds of bread, or sometimes used peanut butter instead of butter—that can lead you closer to a good answer.

A Scientific Method Example: Falling Toast

We’ve run through a quick recap of the scientific method steps, but let’s look a little deeper by trying again to figure out why toast so often falls butter side down.

#1: Make Observations

At the end of our last experiment, where we learned that butter doesn’t actually make toast more likely to hit the ground on that side, we remembered that the times when our toast hits the ground butter side first are usually when it’s falling off a plate.

The easiest question we can ask is, “Why is that?”

We can actually search this online and find a pretty detailed answer as to why this is true. But we’re budding scientists—we want to see it in action and verify it for ourselves! After all, good science should be replicable, and we have all the tools we need to test out what’s really going on.

Why do we think that buttered toast hits the ground butter-first? We know it’s not because it’s heavier, so we can strike that out. Maybe it’s because of the shape of our plate?

That’s something we can test. We’ll phrase our hypothesis as, “If my toast slides off my plate, then it will fall butter-side down.”

Just seeing that toast falls off a plate butter-side down isn’t enough for us. We want to know why, so we’re going to take things a step further—we’ll set up a slow-motion camera to capture what happens as the toast slides off the plate.

We’ll run the test ten times, each time tilting the same plate until the toast slides off. We’ll make note of each time the butter side lands first and see what’s happening on the video so we can see what’s going on.

When we review the footage, we’ll likely notice that the bread starts to flip when it slides off the edge, changing how it falls in a way that didn’t happen when we dropped it ourselves.

That answers our question, but it’s not the complete picture. How do other plates affect how often toast hits the ground butter-first? What if the toast is already butter-side down when it falls? These are things we can test in further experiments with new hypotheses!

Now that we have results, we can share them with others who can verify our results. As mentioned above, being part of the scientific community can lead to better results. If your results were wildly different from the established thinking about buttered toast, that might be cause for reevaluation. If they’re the same, they might lead others to make new discoveries about buttered toast. At the very least, you have a cool experiment you can share with your friends!

Key Scientific Method Tips

Though science can be complex, the benefit of the scientific method is that it gives you an easy-to-follow means of thinking about why and how things happen. To use it effectively, keep these things in mind!

Don’t Worry About Proving Your Hypothesis

One of the important things to remember about the scientific method is that it’s not necessarily meant to prove your hypothesis right. It’s great if you do manage to guess the reason for something right the first time, but the ultimate goal of an experiment is to find the true reason for your observation to occur, not to prove your hypothesis right.

Good science sometimes means that you’re wrong. That’s not a bad thing: a well-designed experiment with an unanticipated result can be just as revealing as, if not more revealing than, an experiment that confirms your hypothesis.

Be Prepared to Try Again

If the data from your experiment doesn’t match your hypothesis, that’s not a bad thing. You’ve eliminated one possible explanation, which brings you one step closer to discovering the truth.

The scientific method isn’t something you’re meant to do exactly once to prove a point. It’s meant to be repeated and adapted to bring you closer to a solution. Even if you can demonstrate truth in your hypothesis, a good scientist will run an experiment again to be sure that the results are replicable. You can even tweak a successful hypothesis to test another factor, such as if we redid our buttered toast experiment to find out whether different kinds of plates affect whether or not the toast falls butter-first. The more we test our hypothesis, the stronger it becomes!

Melissa Brinks graduated from the University of Washington in 2014 with a Bachelor's in English with a creative writing emphasis. She has spent several years tutoring K-12 students in many subjects, including in SAT prep, to help them prepare for their college education.

1.3: The Scientific Method - How Chemists Think

Learning Objectives

- Identify the components of the scientific method.

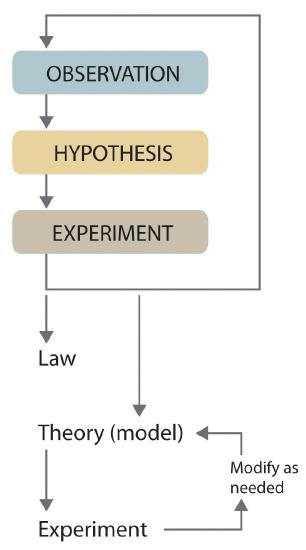

Scientists search for answers to questions and solutions to problems by using a procedure called the scientific method. This procedure consists of making observations, formulating hypotheses, and designing experiments, which in turn lead to additional observations, hypotheses, and experiments in repeated cycles (Figure 1).

Step 1: Make observations

Observations can be qualitative or quantitative. Qualitative observations describe properties or occurrences in ways that do not rely on numbers. Examples of qualitative observations include the following: "the outside air temperature is cooler during the winter season," "table salt is a crystalline solid," "sulfur crystals are yellow," and "dissolving a penny in dilute nitric acid forms a blue solution and a brown gas." Quantitative observations are measurements, which by definition consist of both a number and a unit. Examples of quantitative observations include the following: "the melting point of crystalline sulfur is 115.21° Celsius," and "35.9 grams of table salt—the chemical name of which is sodium chloride—dissolve in 100 grams of water at 20° Celsius." For the question of the dinosaurs’ extinction, the initial observation was quantitative: iridium concentrations in sediments dating to 66 million years ago were 20–160 times higher than normal.

Step 2: Formulate a hypothesis

After deciding to learn more about an observation or a set of observations, scientists generally begin an investigation by forming a hypothesis, a tentative explanation for the observation(s). The hypothesis may not be correct, but it puts the scientist’s understanding of the system being studied into a form that can be tested. For example, the observation that we experience alternating periods of light and darkness corresponding to observed movements of the sun, moon, clouds, and shadows is consistent with either one of two hypotheses:

- Earth rotates on its axis every 24 hours, alternately exposing one side to the sun.

- The sun revolves around Earth every 24 hours.

Suitable experiments can be designed to choose between these two alternatives. For the disappearance of the dinosaurs, the hypothesis was that the impact of a large extraterrestrial object caused their extinction. Unfortunately (or perhaps fortunately), this hypothesis does not lend itself to direct testing by any obvious experiment, but scientists can collect additional data that either support or refute it.

Step 3: Design and perform experiments

After a hypothesis has been formed, scientists conduct experiments to test its validity. Experiments are systematic observations or measurements, preferably made under controlled conditions, that is, under conditions in which a single variable changes.

Step 4: Accept or modify the hypothesis

A properly designed and executed experiment enables a scientist to determine whether or not the original hypothesis is valid. If the hypothesis is valid, the scientist can proceed to step 5. In other cases, experiments often demonstrate that the hypothesis is incorrect or that it must be modified and requires further experimentation.

Step 5: Development into a law and/or theory

More experimental data are then collected and analyzed, at which point a scientist may begin to think that the results are sufficiently reproducible (i.e., dependable) to merit being summarized in a law, a verbal or mathematical description of a phenomenon that allows for general predictions. A law simply states what happens; it does not address the question of why.

One example of a law, the law of definite proportions, which was discovered by the French scientist Joseph Proust (1754–1826), states that a chemical substance always contains the same proportions of elements by mass. Thus, sodium chloride (table salt) always contains the same proportion by mass of sodium to chlorine, in this case 39.34% sodium and 60.66% chlorine by mass, and sucrose (table sugar) is always 42.11% carbon, 6.48% hydrogen, and 51.41% oxygen by mass.
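The percentages quoted for sodium chloride and sucrose can be checked with a short calculation. This sketch assumes rounded standard atomic masses and the formulas NaCl and C12H22O11, neither of which is given in the text; the function name `mass_percent` is also an assumption made for this illustration.

```python
from typing import Dict

# Rounded standard atomic masses in g/mol (assumed values, not from the text).
ATOMIC_MASS: Dict[str, float] = {
    "H": 1.008, "C": 12.011, "O": 15.999, "Na": 22.990, "Cl": 35.45,
}


def mass_percent(formula: Dict[str, int]) -> Dict[str, float]:
    """Return the percent by mass of each element in a compound.

    `formula` maps an element symbol to the number of atoms per formula unit.
    """
    total = sum(ATOMIC_MASS[el] * n for el, n in formula.items())
    return {el: 100 * ATOMIC_MASS[el] * n / total for el, n in formula.items()}


sodium_chloride = mass_percent({"Na": 1, "Cl": 1})
sucrose = mass_percent({"C": 12, "H": 22, "O": 11})

for name, result in [("NaCl", sodium_chloride), ("sucrose", sucrose)]:
    print(name, {el: round(pct, 2) for el, pct in result.items()})
```

Because the result depends only on the formula and the atomic masses, any pure sample of the same compound gives the same proportions, which is what the law states.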

Whereas a law states only what happens, a theory attempts to explain why nature behaves as it does. Laws are unlikely to change greatly over time unless a major experimental error is discovered. In contrast, a theory, by definition, is incomplete and imperfect, evolving with time to explain new facts as they are discovered.

Because scientists can enter the cycle shown in Figure 1 at any point, the actual application of the scientific method to different topics can take many different forms. For example, a scientist may start with a hypothesis formed by reading about work done by others in the field, rather than by making direct observations.

Example 1

Classify each statement as a law, a theory, an experiment, a hypothesis, or an observation.

- Ice always floats on liquid water.

- Birds evolved from dinosaurs.

- Hot air is less dense than cold air, probably because the components of hot air are moving more rapidly.

- When 10 g of ice were added to 100 mL of water at 25°C, the temperature of the water decreased to 15.5°C after the ice melted.

- The ingredients of Ivory soap were analyzed to see whether it really is 99.44% pure, as advertised.

Solution

- This is a general statement of a relationship between the properties of liquid and solid water, so it is a law.

- This is a possible explanation for the origin of birds, so it is a hypothesis.

- This is a statement that tries to explain the relationship between the temperature and the density of air based on fundamental principles, so it is a theory.

- The temperature is measured before and after a change is made in a system, so these are observations.

- This is an analysis designed to test a hypothesis (in this case, the manufacturer’s claim of purity), so it is an experiment.

Exercise 1

Classify each statement as a law, a theory, an experiment, a hypothesis, a qualitative observation, or a quantitative observation.

- Measured amounts of acid were added to a Rolaids tablet to see whether it really “consumes 47 times its weight in excess stomach acid.”

- Heat always flows from hot objects to cooler ones, not in the opposite direction.

- The universe was formed by a massive explosion that propelled matter into a vacuum.

- Michael Jordan is the greatest pure shooter to ever play professional basketball.

- Limestone is relatively insoluble in water, but dissolves readily in dilute acid with the evolution of a gas.

The scientific method is a method of investigation involving experimentation and observation to acquire new knowledge, solve problems, and answer questions. The key steps in the scientific method include the following:

- Step 1: Make observations.

- Step 2: Formulate a hypothesis.

- Step 3: Test the hypothesis through experimentation.

- Step 4: Accept or modify the hypothesis.

- Step 5: Develop into a law and/or a theory.

A Problem-Solving Experiment

Using Beer’s Law to Find the Concentration of Tartrazine

The Science Teacher—January/February 2022 (Volume 89, Issue 3)

By Kevin Mason, Steve Schieffer, Tara Rose, and Greg Matthias

A problem-solving experiment is a learning activity that uses experimental design to solve an authentic problem. It combines two evidence-based teaching strategies: problem-based learning and inquiry-based learning. The use of problem-based learning and scientific inquiry as an effective pedagogical tool in the science classroom has been well established and strongly supported by research (Akinoglu and Tandogan 2007; Areepattamannil 2012; Furtak, Seidel, and Iverson 2012; Inel and Balim 2010; Merritt et al. 2017; Panasan and Nuangchalerm 2010; Wilson, Taylor, and Kowalski 2010).

Floyd James Rutherford, the founder of the American Association for the Advancement of Science (AAAS) Project 2061, once stated, “To separate conceptually scientific content from scientific inquiry is to make it highly probable that the student will properly understand neither” (1964, p. 84). A more recent study using randomized controlled trials showed that teachers who used an inquiry and problem-based pedagogy for seven months improved student performance in math and science (Bando, Nashlund-Hadley, and Gertler 2019). A problem-solving experiment uses problem-based learning by posing an authentic or meaningful problem for students to solve and inquiry-based learning by requiring students to design an experiment to collect and analyze data to solve the problem.

In the problem-solving experiment described in this article, students used Beer’s Law to collect and analyze data to determine if a person consumed a hazardous amount of tartrazine (Yellow Dye #5) for their body weight. The students used their knowledge of solutions, molarity, dilutions, and Beer’s Law to design their own experiment and calculate the amount of tartrazine in a yellow sports drink (or citrus-flavored soda).

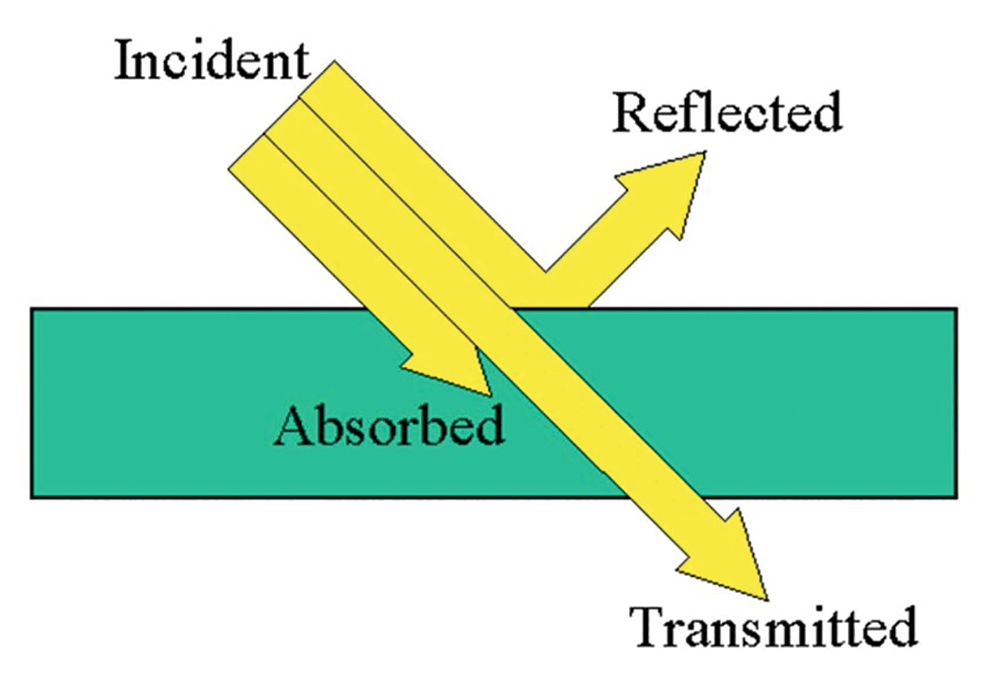

According to the Next Generation Science Standards, energy is defined as “a quantitative property of a system that depends on the motion and interactions of matter and radiation with that system” ( NGSS Lead States 2013 ). Interactions of matter and radiation can be some of the most challenging for students to observe, investigate, and conceptually understand. As a result, students need opportunities to observe and investigate the interactions of matter and radiation. Light is one example of radiation that interacts with matter.

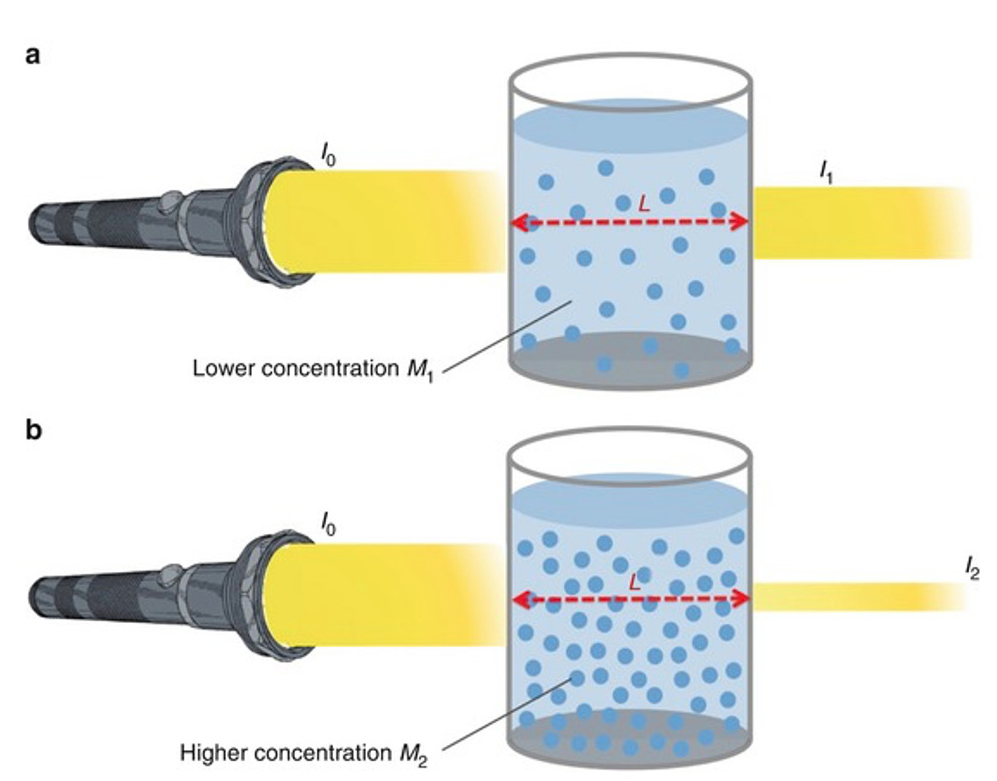

Light is electromagnetic radiation that is detectable to the human eye and exhibits properties of both a wave and a particle. When light interacts with matter, light can be reflected at the surface, absorbed by the matter, or transmitted through the matter (Figure 1). When a single beam of light enters a substance perpendicularly (at a 90° angle to the surface), the amount of reflection is minimal. Therefore, the light will either be absorbed by the substance or be transmitted through the substance. When a given wavelength of light shines into a solution, the amount of light that is absorbed will depend on the identity of the substance, the thickness of the container, and the concentration of the solution.

Light interacting with matter.

(Retrieved from https://etorgerson.files.wordpress.com/2011/05/light-reflect-refract-absorb-label.jpg ).

Beer’s Law states the amount of light absorbed is directly proportional to the thickness and concentration of a solution. Beer’s Law is also sometimes known as the Beer-Lambert Law. A solution of a higher concentration will absorb more light and transmit less light ( Figure 2 ). Similarly, if the solution is placed in a thicker container that requires the light to pass through a greater distance, then the solution will absorb more light and transmit less light.

Light transmitted through a solution.

(Retrieved from https://media.springernature.com/original/springer-static/image/chp%3A10.1007%2F978-3-319-57330-4_13/MediaObjects/432946_1_En_13_Fig4_HTML.jpg ).

Definitions of key terms.

Absorbance (A) – the process of light energy being captured by a substance

Beer’s Law (Beer-Lambert Law) – the absorbance (A) of light is directly proportional to the molar absorptivity (ε), thickness (b), and concentration (C) of the solution (A = εbC)

Concentration (C) – the amount of solute dissolved per amount of solution

Cuvette – a container used to hold a sample to be tested in a spectrophotometer

Energy (E) – a quantitative property of a system that depends on motion and interactions of matter and radiation with that system (NGSS Lead States 2013).

Intensity (I) – the amount or brightness of light

Light – electromagnetic radiation that is detectable to the human eye and exhibits properties of both a wave and a particle

Molar Absorptivity (ε) – a property that represents the amount of light absorbed by a given substance per molarity of the solution and per centimeter of thickness (M⁻¹ cm⁻¹)

Molarity (M) – the number of moles of solute per liter of solution (mol/L)

Reflection – the process of light energy bouncing off the surface of a substance

Spectrophotometer – a device used to measure the absorbance of light by a substance

Tartrazine – widely used food and liquid dye

Transmittance (T) – the process of light energy passing through a substance

The amount of light absorbed by a solution can be measured using a spectrophotometer. The solution of a given concentration is placed in a small container called a cuvette. The cuvette has a known thickness that can be held constant during the experiment. It is also possible to obtain cuvettes of different thicknesses to study the effect of thickness on the absorption of light. The key definitions of the terms related to Beer’s Law and the learning activity presented in this article are provided in Figure 3 .
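The relationship A = εbC defined above can be expressed in a few lines of Python. This is only an illustrative sketch: the function names are introduced here, and the numerical values are hypothetical, chosen to show proportionality rather than taken from any measurement.

```python
def absorbance(molar_absorptivity, path_length_cm, concentration_molar):
    """Beer's Law: A = epsilon * b * C."""
    return molar_absorptivity * path_length_cm * concentration_molar


def concentration_from_absorbance(a, molar_absorptivity, path_length_cm):
    """Rearranged Beer's Law: C = A / (epsilon * b)."""
    return a / (molar_absorptivity * path_length_cm)


# Hypothetical values, not measured data for any particular dye.
epsilon = 2.0e4  # M^-1 cm^-1 (assumed)
b = 1.0          # cm (assumed cuvette thickness)
c = 2.0e-5       # M (assumed concentration)

a_single = absorbance(epsilon, b, c)
a_double_conc = absorbance(epsilon, b, 2 * c)   # twice the concentration
a_double_path = absorbance(epsilon, 2 * b, c)   # twice the thickness
recovered_c = concentration_from_absorbance(a_single, epsilon, b)

print(a_single, a_double_conc, a_double_path, recovered_c)
```

Doubling either the concentration or the thickness doubles the absorbance, which is why the cuvette thickness is held constant when the goal is to compare concentrations.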

Overview of the problem-solving experiment

In the problem presented to students, a 140-pound athlete drinks two bottles of yellow sports drink every day ( Figure 4 ; see Online Connections). When she starts to notice a rash on her skin, she reads the label of the sports drink and notices that it contains a yellow dye known as tartrazine. While tartrazine is safe to drink, it may produce some potential side effects in large amounts, including rashes, hives, or swelling. The students must design an experiment to determine the concentration of tartrazine in the yellow sports drink and the number of milligrams of tartrazine in two bottles of the sports drink.

While a sports drink may have many ingredients, the vast majority of ingredients—such as sugar or electrolytes—are colorless when dissolved in water solution. The dyes added to the sports drink are responsible for the color of the sports drink. Food manufacturers may use different dyes to color sports drinks to the desired color. Red dye #40 (allura red), blue dye #1 (brilliant blue), yellow dye #5 (tartrazine), and yellow dye #6 (sunset yellow) are the four most common dyes or colorants in sports drinks and many other commercial food products ( Stevens et al. 2015 ). The concentration of the dye in the sports drink affects the amount of light absorbed.

In this problem-solving experiment, the students used the previously studied concept of Beer’s Law—using serial dilutions and absorbance—to find the concentration (molarity) of tartrazine in the sports drink. Based on the evidence, the students then determined if the person had exceeded the maximum recommended daily allowance of tartrazine, given in mg/kg of body mass. The learning targets for this problem-solving experiment are shown in Figure 5 (see Online Connections).

Pre-laboratory experiences

A problem-solving experiment is a form of guided inquiry, which will generally require some prerequisite knowledge and experience. In this activity, the students needed prior knowledge and experience with Beer’s Law and the techniques in using Beer’s Law to determine an unknown concentration. Prior to the activity, students learned how Beer’s Law is used to relate absorbance to concentration as well as how to use the equation M₁V₁ = M₂V₂ to determine concentrations of dilutions. The students had a general understanding of molarity and using dimensional analysis to change units in measurements.

The techniques for using Beer’s Law were introduced in part through a laboratory experiment using various concentrations of copper sulfate. A known concentration of copper sulfate was provided and the students followed a procedure to prepare dilutions. Students learned the technique for choosing the wavelength that provided the maximum absorbance for the solution to be tested (λmax), which is important for Beer’s Law to create a linear relationship between absorbance and solution concentration. Students graphed the absorbance of each concentration in a spreadsheet as a scatterplot and added a linear trend line. Through class discussion, the teacher checked for understanding in using the equation of the line to determine the concentration of an unknown copper sulfate solution.

After the students graphed the data, they discussed how the R² value related to the data set used to construct the graph. After completing this experiment, the students were comfortable making dilutions from a stock solution, calculating concentrations, and using the spectrophotometer to use Beer’s Law to determine an unknown concentration.

Introducing the problem

After the initial experiment on Beer’s Law, the problem-solving experiment was introduced. The problem presented to students is shown in Figure 4 (see Online Connections). A problem-solving experiment provides students with a valuable opportunity to collaborate with other students in designing an experiment and solving a problem. For this activity, the students were assigned to heterogeneous or mixed-ability laboratory groups. Groups should be diversified based on gender; research has shown that gender diversity among groups improves academic performance, while racial diversity has no significant effect ( Hansen, Owan, and Pan 2015 ). It is also important to support students with special needs when assigning groups. The mixed-ability groups were assigned intentionally to place students with special needs with a peer who has the academic ability and disposition to provide support. In addition, some students may need additional accommodations or modifications for this learning activity, such as an outlined lab report, a shortened lab report format, or extended time to complete the analysis. All students were required to wear chemical-splash goggles and gloves, and use caution when handling solutions and glass apparatuses.

Designing the experiment

During this activity, students worked in lab groups to design their own experiment to solve a problem. The teacher used small-group and whole-class discussions to help students understand the problem. Students discussed what information was provided and what they needed to know and do to solve the problem. In planning the experiment, the teacher did not provide a procedure and intentionally provided only minimal support to the students as needed. The students designed their own experimental procedure, which encouraged critical thinking and problem solving. The students needed to be allowed to struggle to some extent. The teacher provided some direction and guidance by posing questions for students to consider and answer for themselves. Students were also frequently reminded to review their notes and the previous experiment on Beer’s Law to help them better use their resources to solve the problem. The use of heterogeneous or mixed-ability groups also helped each group be more self-sufficient and successful in designing and conducting the experiment.

Students created a procedure for their experiment with the teacher providing suggestions or posing questions to enhance the experimental design, if needed. Safety was addressed during this consultation to correct safety concerns in the experimental design or provide safety precautions for the experiment. Students needed to wear splash-proof goggles and gloves throughout the experiment. In a few cases, students realized some opportunities to improve their experimental design during the experiment. This was allowed with the teacher’s approval, and the changes to the procedure were documented for the final lab report.

Conducting the experiment

A sample of the sports drink and a 0.01 M stock solution of tartrazine were provided to the students. There are many choices of sports drinks available, but it is recommended that the ingredients are checked to verify that tartrazine (yellow dye #5) is the only colorant added. This will prevent other colorants from affecting the spectroscopy results in the experiment. A citrus-flavored soda could also be used as an alternative because many sodas have tartrazine added as well. It is important to note that tartrazine is considered safe to drink, but it may produce some potential side effects in large amounts, including rashes, hives, or swelling. A list of the materials needed for this problem-solving experiment is shown in Figure 6 (see Online Connections).

This problem-solving experiment required students to create dilutions of known concentrations of tartrazine as a reference to determine the unknown concentration of tartrazine in a sports drink. To create the dilutions, the students were provided with a 0.01 M stock solution of tartrazine. The teacher purchased powdered tartrazine, available from numerous vendors, to create the stock solution. The 0.01 M stock solution was prepared by weighing 0.534 g of tartrazine and dissolving it in enough distilled water to make 100 mL of solution. Yellow food coloring could be used as an alternative, but it would take some research to determine its concentration. Since students have previously explored the experimental techniques, they should know to prepare dilutions that are somewhat darker and somewhat lighter in color than the yellow sports drink sample. Students should use five dilutions for best results.
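The stock-solution recipe can be checked with a short calculation. The sketch below is illustrative only: it assumes a molar mass of 534.36 g/mol for tartrazine (the trisodium salt, C16H9N4Na3O9S2), a value that is not stated in the article, and the function names are introduced here for the example.

```python
# Assumed molar mass of tartrazine (trisodium salt, C16H9N4Na3O9S2), in g/mol.
TARTRAZINE_MOLAR_MASS = 534.36


def grams_needed(molarity, volume_l, molar_mass):
    """Mass of solute required: mol/L * L * g/mol."""
    return molarity * volume_l * molar_mass


def molarity_from_mass(mass_g, volume_l, molar_mass):
    """Molarity obtained from a weighed mass dissolved to a final volume."""
    return mass_g / molar_mass / volume_l


# Mass required for 100 mL (0.100 L) of 0.01 M solution.
required_mass = grams_needed(0.01, 0.100, TARTRAZINE_MOLAR_MASS)

# Molarity actually obtained from the 0.534 g quoted in the text.
stock_molarity = molarity_from_mass(0.534, 0.100, TARTRAZINE_MOLAR_MASS)

print(f"required mass: {required_mass:.3f} g")
print(f"stock molarity: {stock_molarity:.4f} M")
```

Under that assumed molar mass, the 0.534 g quoted above corresponds to a concentration within about 0.1% of the nominal 0.01 M.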

Typically, a good range for the yellow sports drink is standard dilutions ranging from 1 × 10⁻³ M to 1 × 10⁻⁵ M. The teacher may need to caution the students that a dilution that is too dark will not yield good results and will lower the R² value. Students who used very dark dilutions often realized that eliminating that data point created a better linear trendline, as long as it didn’t reduce the number of data points to fewer than four. Some students even tried to use the 0.01 M stock solution without any dilution. This was much too dark. The students needed to do substantial dilutions to get the solutions in the range of the sports drink.
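A dilution plan across that range follows directly from M₁V₁ = M₂V₂. The sketch below is a hypothetical example rather than the students' procedure: the five target concentrations and the 100 mL final volume are assumptions chosen for illustration.

```python
def stock_volume_ml(stock_molarity, target_molarity, final_volume_ml):
    """Solve M1 * V1 = M2 * V2 for V1, the volume of stock to dilute."""
    if target_molarity > stock_molarity:
        raise ValueError("a dilution cannot be more concentrated than its stock")
    return target_molarity * final_volume_ml / stock_molarity


STOCK_MOLARITY = 0.01     # M, the stock solution described in the article
FINAL_VOLUME_ML = 100.0   # assumed final volume of each dilution

# Hypothetical set of five targets spanning 1e-3 M to 1e-5 M.
targets = [1e-3, 5e-4, 1e-4, 5e-5, 1e-5]
plan = {m: stock_volume_ml(STOCK_MOLARITY, m, FINAL_VOLUME_ML) for m in targets}

for molarity, volume in plan.items():
    print(f"{molarity:.0e} M: {volume:.2f} mL of stock, diluted to {FINAL_VOLUME_ML:.0f} mL")
```

The lowest concentrations call for very small volumes of stock (0.1 mL here), so preparing them by serial dilution from an intermediate solution may be more practical than measuring them directly.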

After the dilutions were created, the absorbance of each dilution was measured using a spectrophotometer. A Vernier SpectroVis (~$400) spectrophotometer was used to measure the absorbance of the prepared dilutions with known concentrations. The students adjusted the spectrophotometer to use different wavelengths of light and selected the wavelength with the highest absorbance reading. The same wavelength was then used for each measurement of absorbance. A wavelength of 650 nanometers (nm) provided an accurate measurement and good linear relationship. After measuring the absorbance of the dilutions of known concentrations, the students measured the absorbance of the sports drink with an unknown concentration of tartrazine using the spectrophotometer at the same wavelength. If a spectrophotometer is not available, a color comparison can be used as a low-cost alternative for completing this problem-solving experiment (Figure 7; see Online Connections).

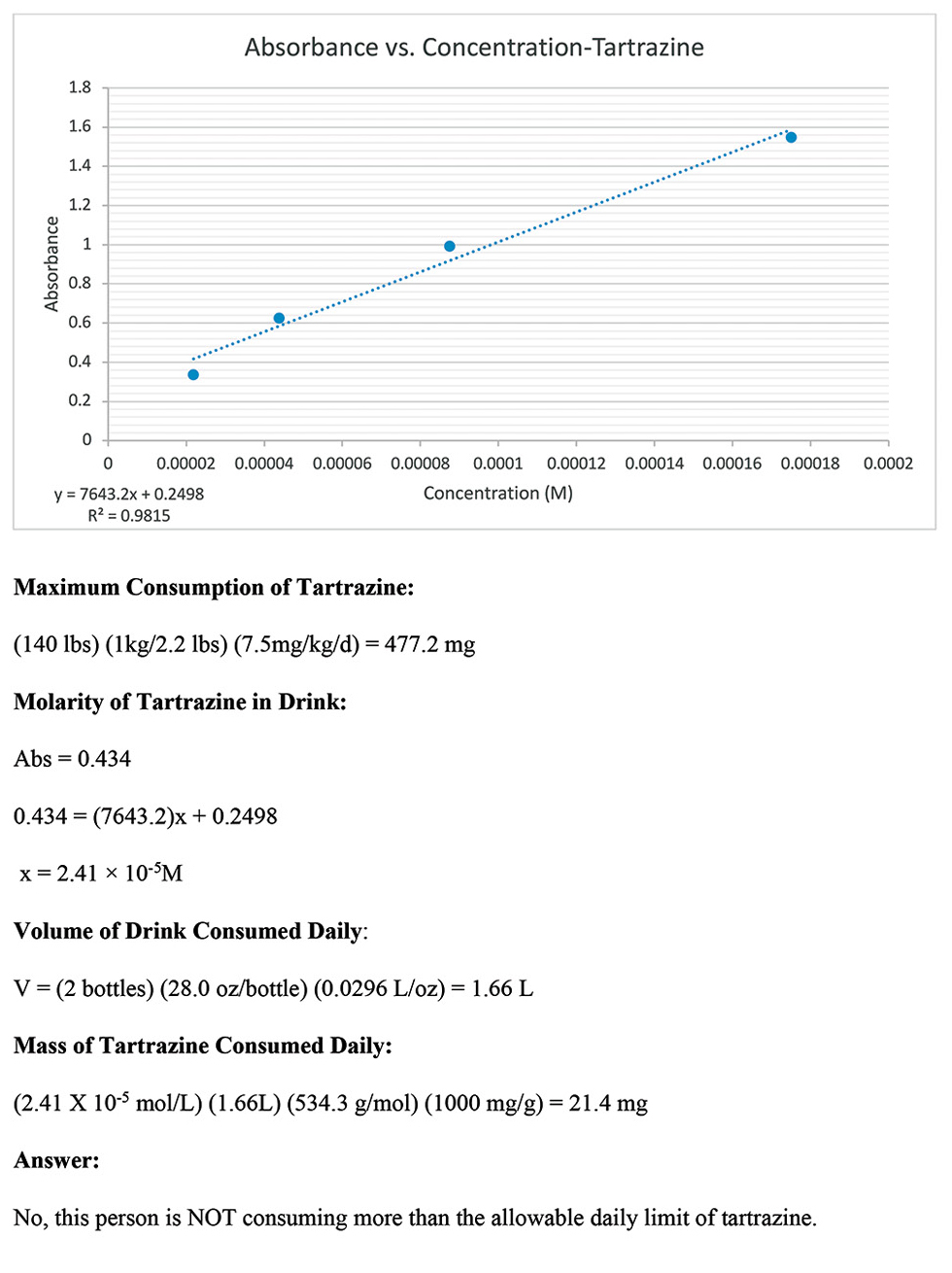

Analyzing the results

After completing the experiment, the students graphed the absorbance and known tartrazine concentrations of the dilutions on a scatterplot to create a linear trendline. In this experiment, absorbance was the dependent variable, which should be graphed on the y-axis. Some students mistakenly reversed the axes on the scatterplot. Next, the students used the graph to find the equation for the line. Then, the students solved for the unknown concentration (molarity) of tartrazine in the sports drink given the linear equation and the absorbance of the sports drink measured experimentally.
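The spreadsheet trendline can be reproduced with an ordinary least-squares fit. The sketch below uses made-up calibration data and a made-up sample absorbance, not the students' results, and the helper `linear_fit` is introduced here only for illustration.

```python
def linear_fit(xs, ys):
    """Least-squares line y = slope * x + intercept, plus the R^2 of the fit."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return slope, intercept, 1 - ss_res / ss_tot


# Hypothetical calibration data: concentration (M) on x, absorbance on y.
concentrations = [1e-5, 2e-5, 3e-5, 4e-5, 5e-5]
absorbances = [0.21, 0.39, 0.62, 0.80, 1.01]

slope, intercept, r_squared = linear_fit(concentrations, absorbances)

# Hypothetical absorbance reading for the sports drink sample.
sample_absorbance = 0.50
unknown_concentration = (sample_absorbance - intercept) / slope

print(f"A = {slope:.0f} * C + {intercept:.3f}, R^2 = {r_squared:.4f}")
print(f"unknown concentration: {unknown_concentration:.2e} M")
```

Keeping absorbance on the y-axis means the fitted equation has the same form as Beer's Law, so the unknown concentration is found by solving the line for x.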

To answer the question posed in the problem, the students also calculated the maximum amount of tartrazine that could be safely consumed by a 140-pound person, using the information given in the problem. A common error in solving the problem was not converting the units of volume given in the problem from ounces to liters. With the molarity and volume in liters, the students then calculated the mass of tartrazine consumed per day in milligrams. A sample of the graph and calculations from one student group are shown in Figure 8. Finally, based on their calculations, the students answered the question posed in the original problem and determined if the person’s daily consumption of tartrazine exceeded the threshold for safe consumption. In this case, the students concluded that the person did NOT consume more than the allowable daily limit of tartrazine.
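The unit conversions in this step can be laid out as follows. Every input in this sketch is an assumption made for illustration: the molarity, the bottle size, the molar mass of tartrazine (534.36 g/mol), and the per-kilogram limit are placeholders, since the actual figures appear in the problem statement rather than in this text.

```python
LITERS_PER_US_FL_OZ = 0.0295735
KG_PER_LB = 0.45359237
TARTRAZINE_MOLAR_MASS = 534.36  # g/mol, assumed value for the trisodium salt


def daily_intake_mg(molarity, volume_fl_oz, molar_mass):
    """Convert ounces to liters, then mol/L * L * g/mol * 1000 mg/g."""
    liters = volume_fl_oz * LITERS_PER_US_FL_OZ
    return molarity * liters * molar_mass * 1000


def daily_limit_mg(body_weight_lb, limit_mg_per_kg):
    """Convert pounds to kilograms, then scale the per-kilogram limit."""
    return body_weight_lb * KG_PER_LB * limit_mg_per_kg


# Hypothetical inputs: replace with measured and given values.
molarity = 2.5e-5          # M, e.g. from the calibration line
volume_fl_oz = 2 * 20.0    # two bottles, assumed 20 fl oz each
limit_mg_per_kg = 7.5      # placeholder for the limit given in the problem

intake = daily_intake_mg(molarity, volume_fl_oz, TARTRAZINE_MOLAR_MASS)
limit = daily_limit_mg(140, limit_mg_per_kg)
exceeds = intake > limit

print(f"intake: {intake:.1f} mg/day, limit: {limit:.1f} mg/day, exceeds: {exceeds}")
```

Writing the ounce-to-liter conversion as its own step makes the most common error noted above harder to overlook.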

Sample graph and calculations from a student group.

Communicating the results

After conducting the experiment, students reported their results in a written laboratory report that included the following sections: title, purpose, introduction, hypothesis, materials and methods, data and calculations, conclusion, and discussion. The laboratory report was assessed using the scoring rubric shown in Figure 9 (see Online Connections). In general, the students did very well on this problem-solving experiment. Students typically scored a three or higher on each criterion of the rubric. Throughout the activity, the students successfully demonstrated their ability to design an experiment, collect data, perform calculations, solve a problem, and effectively communicate those results.

This activity is authentic problem-based learning in science as the true concentration of tartrazine in the sports drink was not provided by the teacher or known by the students. The students were generally somewhat biased as they assumed the experiment would result in exceeding the recommended maximum consumption of tartrazine. Some students struggled with reporting that the recommended limit was far higher than the two sports drinks consumed by the person each day. This allows for a great discussion about the use of scientific methods and evidence to provide unbiased answers to meaningful questions and problems.

The most common errors in this problem-solving experiment were calculation errors, with the most common being calculating the concentrations of the dilutions (perhaps due to the use of very small concentrations). There were also several common errors in communicating the results in the laboratory report. In some cases, students did not provide enough background information in the introduction of the report. When the students communicated the results, some students also failed to reference specific data from the experiment. Finally, in the discussion section, some students expressed concern or doubts in the results, not because there was an obvious error, but because they did not believe the level consumed could be so much less than the recommended consumption limit of tartrazine.

The scientific study and investigation of energy and matter are salient topics addressed in the Next Generation Science Standards ( Figure 10 ; see Online Connections). In a chemistry classroom, students should have multiple opportunities to observe and investigate the interaction of energy and matter. In this problem-solving experiment students used Beer’s Law to collect and analyze data to determine if a person consumed an amount of tartrazine that exceeded the maximum recommended daily allowance. The students correctly concluded that the person in the problem did not consume more than the recommended daily amount of tartrazine for their body weight.

In this activity students learned to work collaboratively to design an experiment, collect and analyze data, and solve a problem. These skills extend beyond any one science subject or class. Through this activity, students had the opportunity to do real-world science to solve a problem without a previously known result. The process of designing an experiment may be difficult for students who are accustomed to being given an experimental procedure in their previous science classroom experiences. However, because students sometimes struggled to design their own experiment and perform the calculations, students also learned to persevere in collecting and analyzing data to solve a problem, which is a valuable life lesson for all students.

Online Connections

The Beer-Lambert Law at Chemistry LibreTexts: https://bit.ly/3lNpPEi

Beer’s Law – Theoretical Principles: https://teaching.shu.ac.uk/hwb/chemistry/tutorials/molspec/beers1.htm

Beer’s Law at Illustrated Glossary of Organic Chemistry: http://www.chem.ucla.edu/~harding/IGOC/B/beers_law.html

Beer Lambert Law at Edinburgh Instruments: https://www.edinst.com/blog/the-beer-lambert-law/

Beer’s Law Lab at PhET Interactive Simulations: https://phet.colorado.edu/en/simulation/beers-law-lab

Figure 4. Problem-solving experiment problem statement: https://bit.ly/3pAYHtj

Figure 5. Learning targets: https://bit.ly/307BHtb

Figure 6. Materials list: https://bit.ly/308a57h

Figure 7. The use of color comparison as a low-cost alternative: https://bit.ly/3du1uyO

Figure 9. Summative performance-based assessment rubric: https://bit.ly/31KoZRj

Figure 10. Connecting to the Next Generation Science Standards : https://bit.ly/3GlJnY0

Kevin Mason is Professor of Education at the University of Wisconsin–Stout, Menomonie, WI; Steve Schieffer is a chemistry teacher at Amery High School, Amery, WI; Tara Rose is a chemistry teacher at Amery High School, Amery, WI; and Greg Matthias is Assistant Professor of Education at the University of Wisconsin–Stout, Menomonie, WI.

Akinoglu, O., and R. Tandogan. 2007. The effects of problem-based active learning in science education on students’ academic achievement, attitude and concept learning. Eurasia Journal of Mathematics, Science, and Technology Education 3 (1): 77–81.

Areepattamannil, S. 2012. Effects of inquiry-based science instruction on science achievement and interest in science: Evidence from Qatar. The Journal of Educational Research 105 (2): 134–146.

Bando, R., E. Nashlund-Hadley, and P. Gertler. 2019. Effect of inquiry and problem-based pedagogy on learning: Evidence from 10 field experiments in four countries. The National Bureau of Economic Research 26280.

Furtak, E., T. Seidel, and H. Iverson. 2012. Experimental and quasi-experimental studies of inquiry-based science teaching: A meta-analysis. Review of Educational Research 82 (3): 300–329.

Hansen, Z., H. Owan, and J. Pan. 2015. The impact of group diversity on class performance. Education Economics 23 (2): 238–258.

Inel, D., and A. Balim. 2010. The effects of using problem-based learning in science and technology teaching upon students’ academic achievement and levels of structuring concepts. Pacific Forum on Science Learning and Teaching 11 (2): 1–23.

Merritt, J., M. Lee, P. Rillero, and B. Kinach. 2017. Problem-based learning in K–8 mathematics and science education: A literature review. The Interdisciplinary Journal of Problem-based Learning 11 (2).

NGSS Lead States. 2013. Next Generation Science Standards: For states, by states. Washington, DC: National Academies Press.

Panasan, M., and P. Nuangchalerm. 2010. Learning outcomes of project-based and inquiry-based learning activities. Journal of Social Sciences 6 (2): 252–255.

Rutherford, F.J. 1964. The role of inquiry in science teaching. Journal of Research in Science Teaching 2 (2): 80–84.

Stevens, L.J., J.R. Burgess, M.A. Stochelski, and T. Kuczek. 2015. Amounts of artificial food dyes and added sugars in foods and sweets commonly consumed by children. Clinical Pediatrics 54 (4): 309–321.

Wilson, C., J. Taylor, and S. Kowalski. 2010. The relative effects and equity of inquiry-based and commonplace science teaching on students’ knowledge, reasoning, and argumentation. Journal of Research in Science Teaching 47 (3): 276–301.

Module 7: Exponents

Problem Solving With Scientific Notation

Learning Outcome

- Solve application problems involving scientific notation

A water molecule.

Solve Application Problems

Learning rules for exponents seems pointless without context, so let us explore some examples of using scientific notation that involve real problems. First, let us look at an example of how scientific notation can be used to describe real measurements.

Think About It

Match each length in the table with the appropriate number of meters described in scientific notation below. Write your ideas in the textboxes provided before you look at the solution.

Several red blood cells.

One of the most important parts of solving a “real-world” problem is translating the words into appropriate mathematical terms and recognizing when a well known formula may help. Here is an example that requires you to find the density of a cell given its mass and volume. Cells are not visible to the naked eye, so their measurements, as described with scientific notation, involve negative exponents.

Human cells come in a wide variety of shapes and sizes. The mass of an average human cell is about [latex]2\times10^{-11}[/latex] grams. [1] Red blood cells are one of the smallest types of cells [2] , clocking in at a volume of approximately [latex]10^{-6}\text{ meters }^3[/latex]. [3] Biologists have recently discovered how to use the density of some types of cells to indicate the presence of disorders such as sickle cell anemia or leukemia. [4] Density is calculated as [latex]\frac{\text{ mass }}{\text{ volume }}[/latex]. Calculate the density of an average human cell.

Read and Understand: We are given an average cellular mass and volume as well as the formula for density. We are looking for the density of an average human cell.

Define and Translate: [latex]m=\text{mass}=2\times10^{-11}\text{ grams}[/latex], [latex]v=\text{volume}=10^{-6}\text{ meters}^3[/latex], [latex]\text{density}=\frac{\text{ mass }}{\text{ volume }}[/latex]

Write and Solve: Use the quotient rule to simplify the ratio.

[latex]\begin{array}{rcl}\text{density}&=&\frac{2\times10^{-11}\text{ grams}}{10^{-6}\text{ meter}^3}\\&=&2\times10^{-11-\left(-6\right)}\frac{\text{ grams }}{\text{ meter }^3}\\&=&2\times10^{-5}\frac{\text{ grams }}{\text{ meter }^3}\end{array}[/latex]

If scientists know the density of healthy cells, they can compare it with the density of a sick person’s cells to test for, or rule out, disorders or diseases that may affect cellular density.

The average density of a human cell is [latex]2\times10^{-5}\frac{\text{ grams }}{\text{ meter }^3}[/latex].
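The quotient-rule step in this example can be checked with a short Python sketch. It assumes each number is stored as a (coefficient, exponent) pair; the function name `divide_sci` is hypothetical and chosen only for illustration.

```python
def divide_sci(a, m, b, n):
    """Divide (a x 10^m) by (b x 10^n).

    The coefficients are divided as ordinary numbers, and the
    quotient rule for exponents gives 10^m / 10^n = 10^(m - n).
    """
    return a / b, m - n


# Mass: 2 x 10^-11 grams. Volume: 1 x 10^-6 cubic meters (values from the example).
coefficient, exponent = divide_sci(2, -11, 1, -6)
print(f"density = {coefficient} x 10^{exponent} grams per cubic meter")
```

The exponent works out to -11 - (-6) = -5, which matches the hand calculation.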

The following video provides an example of how to find the number of operations a computer can perform in a very short amount of time.

Light traveling from the sun to the earth.

In the next example, you will use another well-known formula, [latex]d=r\cdot{t}[/latex], to find how long it takes light to travel from the sun to Earth. Unlike the previous example, the distance between Earth and the sun is massive, so the numbers you will work with have positive exponents.

The speed of light is [latex]3\times10^{8}\frac{\text{ meters }}{\text{ second }}[/latex]. If the sun is [latex]1.5\times10^{11}[/latex] meters from earth, how many seconds does it take for sunlight to reach the earth? Write your answer in scientific notation.

Read and Understand: We are looking for how long—an amount of time. We are given a rate which has units of meters per second and a distance in meters. This is a [latex]d=r\cdot{t}[/latex] problem.

Define and Translate:

[latex]\begin{array}{l}d=1.5\times10^{11}\text{ meters }\\r=3\times10^{8}\frac{\text{ meters }}{\text{ second }}\\t=\text{ ? }\end{array}[/latex]

Write and Solve: Substitute the values we are given into the [latex]d=r\cdot{t}[/latex] equation. We will work without units to make it easier. Often, scientists will work with units to make sure they have made correct calculations.

[latex]\begin{array}{c}d=r\cdot{t}\\1.5\times10^{11}=3\times10^{8}\cdot{t}\end{array}[/latex]

Divide both sides of the equation by [latex]3\times10^{8}[/latex] to isolate t.

[latex]\begin{array}{c}\frac{1.5\times10^{11}}{3\times10^{8}}=\frac{3\times10^{8}}{3\times10^{8}}\cdot{t}\end{array}[/latex]

On the left side, you will need to use the quotient rule of exponents to simplify, and on the right, you are left with t.

[latex]\begin{array}{c}\text{ }\\\left(\frac{1.5}{3}\right)\times\left(\frac{10^{11}}{10^{8}}\right)=t\\\text{ }\\\left(0.5\right)\times\left(10^{11-8}\right)=t\\0.5\times10^3=t\end{array}[/latex]

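This answer is not in scientific notation because [latex]0.5[/latex] is less than [latex]1[/latex]. Moving the decimal point one place to the right multiplies the first factor by [latex]10[/latex], so to keep the value unchanged we subtract [latex]1[/latex] from the exponent of [latex]10[/latex].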

[latex]0.5\times10^3=5.0\times10^2=t[/latex]

The time it takes light to travel from the sun to Earth is [latex]5.0\times10^2[/latex] seconds, or in standard notation, [latex]500[/latex] seconds. That is not bad considering how far it has to travel!
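The same steps (divide the coefficients, subtract the exponents, then shift the decimal point) can be sketched in Python. This is a minimal illustration under the assumption that each value is a (coefficient, exponent) pair; `normalize` and `travel_time` are hypothetical helper names, not part of any library.

```python
def normalize(a, n):
    """Shift the decimal point until 1 <= |a| < 10, adjusting the exponent to compensate."""
    if a == 0:
        return 0.0, 0
    while abs(a) >= 10:   # coefficient too large: move the decimal left, add 1 to the exponent
        a /= 10
        n += 1
    while abs(a) < 1:     # coefficient too small: move the decimal right, subtract 1
        a *= 10
        n -= 1
    return a, n


def travel_time(d_coef, d_exp, r_coef, r_exp):
    """Solve d = r * t for t, with d and r given in scientific notation."""
    return normalize(d_coef / r_coef, d_exp - r_exp)


# d = 1.5 x 10^11 meters, r = 3 x 10^8 meters per second
coef, exp = travel_time(1.5, 11, 3, 8)
print(f"t = {coef} x 10^{exp} seconds")
```

Before normalization the quotient is 0.5 x 10^3; the second loop in `normalize` performs the single decimal shift described above.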

Scientific notation was developed to assist mathematicians, scientists, and others when expressing and working with very large and very small numbers. Scientific notation follows a very specific format in which a number is expressed as the product of two factors: a number greater than or equal to one and less than ten, and a power of [latex]10[/latex]. The format is written [latex]a\times10^{n}[/latex], where [latex]1\leq{a}<10[/latex] and [latex]n[/latex] is an integer. To multiply or divide numbers in scientific notation, you can use the commutative and associative properties to group the exponential terms together and apply the rules of exponents.
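As a rough illustration of the format rule and of multiplication by grouping, here is a small Python sketch. It assumes positive numbers stored as (coefficient, exponent) pairs; `is_scientific` and `multiply_sci` are hypothetical names used only for this example.

```python
def is_scientific(a, n):
    """Check the format a x 10^n with 1 <= a < 10 and n an integer."""
    return 1 <= a < 10 and isinstance(n, int)


def multiply_sci(x, y):
    """Multiply two positive numbers given as (coefficient, exponent) pairs.

    Grouping the factors gives (a * b) x 10^(m + n). Because both
    coefficients are below 10, their product is below 100, so at most
    one decimal shift is needed to restore the format.
    """
    (a, m), (b, n) = x, y
    coef, exp = a * b, m + n
    if coef >= 10:
        coef, exp = coef / 10, exp + 1
    return coef, exp


# Check the sunlight example: rate x time should give back the distance.
rate = (3, 8)        # 3 x 10^8 meters per second
time = (5.0, 2)      # 5.0 x 10^2 seconds
distance = multiply_sci(rate, time)
print(distance, is_scientific(*distance))
```

Multiplying the rate by the time recovers the distance of 1.5 x 10^11 meters, which is a quick way to confirm the division in the previous example.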

- Orders of magnitude (mass). (n.d.). Retrieved May 26, 2016, from https://en.wikipedia.org/wiki/Orders_of_magnitude_(mass)

- How Big is a Human Cell?

- How big is a human cell? - Weizmann Institute of Science. (n.d.). Retrieved May 26, 2016, from http://www.weizmann.ac.il/plants/Milo/images/humanCellSize120116Clean.pdf

- Grover, W. H., Bryan, A. K., Diez-Silva, M., Suresh, S., Higgins, J. M., & Manalis, S. R. (2011). Measuring single-cell density. Proceedings of the National Academy of Sciences, 108(27), 10992-10996. doi:10.1073/pnas.1104651108

- Revision and Adaptation. Provided by : Lumen Learning. License : CC BY: Attribution

- Application of Scientific Notation - Quotient 1 (Number of Times Around the Earth). Authored by : James Sousa (Mathispower4u.com) for Lumen Learning. Located at : https://youtu.be/san2avgwu6k . License : CC BY: Attribution

- Application of Scientific Notation - Quotient 2 (Time for Computer Operations). Authored by : James Sousa (Mathispower4u.com) for Lumen Learning. Located at : https://youtu.be/Cbm6ejEbu-o . License : CC BY: Attribution

- Unit 11: Exponents and Polynomials, from Developmental Math: An Open Program. Provided by : Monterey Institute of Technology and Education. Located at : http://nrocnetwork.org/dm-opentext . License : CC BY: Attribution

Encyclopedia of Science Education, pp. 786–790

Problem Solving in Science Learning

- Edit Yerushalmi

- Bat-Sheva Eylon

- Reference work entry

- First Online: 01 January 2015


Introduction: Problem Solving in the Science Classroom

Problem solving plays a central role in school science, serving both as a learning goal and as an instructional tool. As a learning goal, problem-solving expertise is considered as a means of promoting both proficiency in solving practice problems and competency in tackling novel problems, a hallmark of successful scientists and engineers. As an instructional tool, problem solving attempts to situate the learning of scientific ideas and practices in an applicative context, thus providing an opportunity to transform science learning into an active, relevant, and motivating experience. Problem solving is also frequently a central strategy in the assessment of students’ performance on various measures (e.g., mastery of procedural skills, conceptual understanding, as well as scientific and learning practices).

A problem is often defined as an unfamiliar task that requires one to make judicious decisions when searching through a problem space with the intent of devising a sequence of actions to reach a certain goal. Problem space is defined as “an individual’s representation of the objects in the problem situation, the goal of the problem, and the actions that can be performed in working on the problem” (Greeno and Simon 1984, p. 591). This exploratory nature of problem solving mandates an innovative, iterative, and adaptive process. In contrast, in an exercise the solvers apply a preset procedure, with which they are acquainted, to reach the problem’s goal, and therefore the solvers’ choices are limited (Reif 2008). Whether a task serves as a problem or an exercise for a particular solver depends on the prior knowledge of the solver.

School science problems share common traits that stem from their being grounded in a scientific knowledge domain: prediction is derived from well-specified premises and requires precision; real-world phenomena are simplified and their description is reduced to a few variables. Where appropriate, school science problems require the use of formal, explicit rules that are part of a scientific theory. These rules must be interpreted unambiguously, as compared with the plausible, implicit knowledge structures that serve in everyday reasoning. However, the problems typically used in science classrooms vary greatly along dimensions such as authenticity (i.e., abstract vs. real-world context), specifications of known and target variables (i.e., well vs. ill structured), duration (a few minutes to several months), complexity (i.e., the number of intermediate variables), and ownership (i.e., the problem defined by the teacher or by the student). At one end of the problem spectrum, one can find the abstract, well-structured, short, and simple problems that are commonly found in traditional textbooks. At the other end, one can find design or inquiry projects that introduce a real-world context, are ill structured and complex, and have the students themselves define the project goals and engage in extended investigations whose direction they help determine.

Domain-Specific Knowledge and Problem Solving

The relevance of research on problem solving to science instruction increased in the 1970s, when researchers began studying problems anchored in specific knowledge domains (e.g., chess, physics, and medicine). Some of this research focused on short and well-structured problems encountered in science classrooms, all of which require well-defined problem-solving procedures. This research originally took an information processing perspective. It involved two central methodologies: the analysis of “think aloud” protocols of both more and less experienced subjects (described in this research as “experts vs. novices”) in a specific domain to determine how each group approached problem solving and the construction of computer programs to simulate and validate theories of human behavior in solving problems. Research on experts and novices has underscored the importance of domain-specific knowledge in problem solving, as well as the role of problem solving in developing domain-specific knowledge and general problem-solving methods.

Experts’ and Novices’ Approaches to Problem Solving

One aspect in which experts and novices in a certain domain differ is their prior knowledge structures and how they use them. When approaching problems, both experts and novices rely on specific cues (e.g., keywords, idealized objects, and previously encountered contexts) in the problem situation to automatically retrieve domain-relevant information. However, experts store in their memory hierarchical structures (schemas) of domain-specific knowledge (declarative and procedural) that allow them to use cues in problems to quickly encode information (chunking) and to reliably retrieve schemas. Experts’ schemas revolve around in-depth features (e.g., underlying physics principles), in comparison with novices’ problem schemas, which rely on surface features (e.g., “pulleys”) and are fragmented. However, other researchers (Smith et al. 1993) who have taken a constructivist, “knowledge-in-pieces” view of learning argue that novices abstract deep structures of intuitive ideas rather than representations organized around concrete surface features.

When solving problems, both experts and novices were found to use heuristics, that is, general but weak methods that enhance their search process. One example is means-ends analysis: starting from the problem’s goal and working backward to the given problem situation, the solver recursively identifies the gap between the goal and the current state and takes actions to bridge this gap. However, unlike novices, in describing a problem, experts devote considerable time to qualitative analysis of the problem situation before turning to a quantitative representation (Reif 2008). More specifically, experts often make simplifying assumptions, construct a pictorial or diagrammatic representation, and map the problem situation to appropriate theoretical models by retrieving effective representations that derive from the experts’ larger and better organized knowledge base. In addition, experts differ from novices in their approach towards constructing the solution: while novices approach problems in a haphazard manner (e.g., plugging numbers into formulae), experts devote sufficient time to effectively plan a strategy for constructing a solution by devising useful subproblems. Experts also have better metacognitive skills and spend more time than do novices in monitoring their progress: they evaluate their solutions in light of task constraints, reflect on their former analysis and decisions, and revise their choices as necessary.

Problem Solving as a Learning Process

Problem solving may trigger a process of conceptual change, leading learners to develop scientifically acceptable domain-specific declarative knowledge. Namely, learners can refine and elaborate their conceptual understanding by engaging in problem solving in a deliberate manner: articulating how they apply domain concepts and principles, acknowledging conflicts between their ideas and counterevidence, and searching for mechanisms to resolve these conflicts.