Lexicon

by Olga Batiukova, James Pustejovsky

Last modified: 25 September 2023

DOI: 10.1093/obo/9780199772810-0310

Introduction

The lexicon is a collection of words. In theoretical linguistics, the term mental lexicon refers to the component of the grammar that contains the knowledge that speakers and hearers have about the words of a language. This typically covers the acoustic or graphic makeup of the word, its meaning, and its contextual or syntactic properties, which are encoded as part of the lexical entry in linguistics and lexicography (see Properties of the Lexical Entry and Structure of the Lexical Entry and Lexical Structures). Until relatively recently, the lexicon was viewed as the most passive module of grammar, in the service of other, more dynamic and genuinely generative components, such as syntax and morphology. In the last fifty years, different linguistic theories have progressively increased the amount and, more importantly, the complexity of information associated with lexical items. Many of them explicitly argue that the lexicon is a dynamic module of grammar, which incorporates as well as dictates essential components of syntactic and semantic interpretation.

One of the main issues facing present-day lexical research is that, in spite of the growing interest in lexical issues and the significant progress achieved by different frameworks, there is no unified theory of the lexicon, but rather many different, often compatible and even overlapping, partial models of the lexicon. This is the result of each framework (or family of frameworks) defending its own vision of the lexicon, and also of the inherently intricate nature of the word, which involves other components of grammar: semantics, syntax, morphology, and phonology.

The lexicon and the studies dealing with its different facets cannot be covered in full in a review article like the present one. To narrow its scope, we chose the most prominent research issues and frameworks of the last few decades. This article focuses on the literature dealing with the interaction of the lexicon with syntax (cf. Lexicon in Syntactic Frameworks and Compositionality in the Mapping from the Lexicon to Syntax) and semantics (cf. Lexicon in Semantic Frameworks), on the structure and properties of the lexical entry (Properties of the Lexical Entry and Structure of the Lexical Entry and Lexical Structures), and on the general structure of the lexicon (in General Architecture of the Lexicon). It also includes basic pointers to references dealing with computational lexicons (in Lexical Databases and Computational Lexicons). This entry uses adapted materials from the authors’ joint book, Pustejovsky and Batiukova 2019, cited under General Overviews and Textbooks.
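As a rough illustration of the kind of information a lexical entry is said to bundle (form, meaning, and syntactic properties), the following Python sketch models an entry as a small data structure. The class names, field names, and the sample entry for "give" are assumptions made for this illustration only; they are not drawn from any of the frameworks reviewed in this article, each of which represents lexical entries in its own formalism.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Sense:
    """One meaning of a word plus the participants it expects (hypothetical)."""
    gloss: str                        # informal paraphrase of the meaning
    arguments: Tuple[str, ...] = ()   # illustrative argument-structure labels


@dataclass
class LexicalEntry:
    """Hypothetical lexical entry: form, syntactic category, and meanings."""
    orthography: str                  # graphic makeup of the word
    phonology: str                    # acoustic makeup, as a transcription
    category: str                     # syntactic category, e.g. "verb"
    senses: List[Sense] = field(default_factory=list)


# A toy lexicon: a mapping from written forms to their entries.
lexicon: Dict[str, LexicalEntry] = {}


def add_entry(entry: LexicalEntry) -> None:
    """Store an entry under its written form."""
    lexicon[entry.orthography] = entry


add_entry(
    LexicalEntry(
        orthography="give",
        phonology="/ɡɪv/",
        category="verb",
        senses=[
            Sense(
                gloss="transfer possession of something to someone",
                arguments=("agent", "theme", "recipient"),
            )
        ],
    )
)

entry = lexicon["give"]
print(entry.category, [sense.arguments for sense in entry.senses])
```

A flat record like this treats the lexicon as a passive list. The more dynamic views described above would add structure and generative mechanisms that this sketch does not attempt to capture.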

General Overviews and Textbooks

There are very few broadly themed collections and anthologies that are focused on the lexicon specifically, and all of them are fairly recent: see Hanks 2008; Cruse, et al. 2002; Taylor 2015; and Pirrelli, et al. 2020. Some of the comprehensive treatments of lexical structure and design have been published in textbook series, such as Aitchison 2012, Singleton 2012, De Miguel 2009, Ježek 2016, and Pustejovsky and Batiukova 2019.

Aitchison, Jean. 2012. Words in the mind: An introduction to the mental lexicon. 4th ed. Chichester, UK: John Wiley & Sons.

Cognitively oriented basic-level introduction to the organization of the mental lexicon. Entertaining and profusely illustrated with real-text excerpts and graphic materials (diagrams, schemes, and drawings).

Cruse, David Alan, Franz Hundsnurscher, Michael Job, and Peter Rolf Lutzeier, eds. 2002. Lexikologie: Ein internationales Handbuch zur Natur und Struktur von Wörtern und Wortschätzen. 2 vols. Berlin: De Gruyter.

This two-volume collective handbook includes 251 chapters (written in English and German) covering all the significant areas of the study of the lexicon: wordhood (chaps. 7–13, 229–232), form and content of the words (chaps. 25–45), lexical-semantic structures and relations (chaps. 57–71), general architecture of the lexicon (chaps. 72–116), methodology of lexical research (chaps. 117–120), lexical change (chaps. 184–187), relation between the lexicon and other components of grammar (chaps. 218–228), etc.

De Miguel, Elena, ed. 2009. Panorama de la lexicología. Barcelona: Ariel.

A collective volume that offers the state of the art on the study of the lexicon. Structured in four parts focused on the basic units of study and the notion of word; word meaning, meaning change, and meaning variation; theoretical frameworks of the study of the lexicon; and experimental and applied perspectives on the lexicon.

Hanks, Patrick W., ed. 2008. Lexicology: Critical concepts in linguistics. London: Routledge.

Six-volume collection of milestone contributions (ranging from Aristotle to contemporary frameworks) to the understanding of different aspects of the lexicon, including its philosophical and cognitive underpinnings, lexical structures and primitives, polysemy, contextual properties of lexical items, and its role in first language acquisition, among many others.

Ježek, Elisabetta. 2016. The Lexicon: An introduction. Oxford: Oxford Univ. Press.

Introduction covering a wide range of topics related to the internal structure of the lexicon and its usage. Structured in two parts: Part I covers the properties of individual words, and Part II the general structure of the lexicon.

Pirrelli, Vito, Ingo Plag, and Wolfgang U. Dressler, eds. 2020. Word knowledge and word usage. Berlin and Boston: De Gruyter.

This open access collective volume is authored by teams of experts from different fields of study, who offer a cross-disciplinary overview of the research methods and topics related to the mental lexicon. It is structured in three parts: “Technologies, Tools and Data,” “Topical Issues,” and “Words in Usage.”

Pustejovsky, James, and Olga Batiukova. 2019. The lexicon. Cambridge, UK: Cambridge Univ. Press.

DOI: 10.1017/9780511982378

In-depth introduction to the theory and structure of the lexicon in linguistics and linguistic theory. Offers a comprehensive treatment of lexical structure and design, the relation of the lexicon to grammar as a whole (in particular to syntax and semantics), and to methods of interpretation driven by the lexicon.

Singleton, David. 2012. Language and the lexicon: An introduction. London and New York: Routledge.

Broad descriptive introduction focused on issues typically dealt with in lexicological studies: word meaning, lexical variation and change, collocational properties of words, relation of the lexicon with syntax and morphology, etc.

Taylor, John R., ed. 2015. The Oxford handbook of the word. Oxford: Oxford Univ. Press.

Collective volume dealing with the word as a linguistic unit. Topics covered include the notion of wordhood and its role in linguistic analysis, word structure and meaning, properties of the mental lexicon, words in first and second language acquisition, etc.



Tips on Using Lexicon in a Sentence

The word lexicon has a number of closely related meanings, which can easily lead to confusion and may cause the word to be used awkwardly. Lexicon can refer to a general dictionary of a language (as in "a lexicon of the Hebrew language") and also to a narrower printed compilation of words within some sphere (as in "a medical lexicon" or "a lexicon of the German in Finnegans Wake").

Similarly, lexicon can refer either to the vocabulary of a specific group of people ("the lexicon of French") or to the general language used by an unspecified group of people ("a word that has not entered the general lexicon yet"). It is also often found in reference to the vocabulary employed by a particular speaker ("'Failure' is not a word in my lexicon").


Word History

Etymology: Late Greek lexikon, from neuter of lexikos "of words," from Greek lexis "word, speech," from legein "to say" (more at legend)

First known use: 1580, in the meaning defined at sense 1


Source: “Lexicon.” Merriam-Webster.com Dictionary, Merriam-Webster, https://www.merriam-webster.com/dictionary/lexicon. Accessed 11 Apr. 2024.


Natural Language Annotation for Machine Learning by James Pustejovsky, Amber Stubbs


Chapter 1. The Basics

It seems as though every day there are new and exciting problems that people have taught computers to solve, from how to win at chess or Jeopardy to determining shortest-path driving directions. But there are still many tasks that computers cannot perform, particularly in the realm of understanding human language. Statistical methods have proven to be an effective way to approach these problems, but machine learning (ML) techniques often work better when the algorithms are provided with pointers to what is relevant about a dataset, rather than just massive amounts of data. When discussing natural language, these pointers often come in the form of annotations—metadata that provides additional information about the text. However, in order to teach a computer effectively, it’s important to give it the right data, and for it to have enough data to learn from. The purpose of this book is to provide you with the tools to create good data for your own ML task. In this chapter we will cover:

- Why annotation is an important tool for linguists and computer scientists alike

- How corpus linguistics became the field that it is today

- The different areas of linguistics and how they relate to annotation and ML tasks

- What a corpus is, and what makes a corpus balanced

- How some classic ML problems are represented with annotations

- The basics of the annotation development cycle

The Importance of Language Annotation

Everyone knows that the Internet is an amazing resource for all sorts of information that can teach you just about anything: juggling, programming, playing an instrument, and so on. However, there is another layer of information that the Internet contains, and that is how all those lessons (and blogs, forums, tweets, etc.) are being communicated. The Web contains information in all forms of media—including texts, images, movies, and sounds—and language is the communication medium that allows people to understand the content, and to link the content to other media. However, while computers are excellent at delivering this information to interested users, they are much less adept at understanding language itself.

Theoretical and computational linguistics are focused on unraveling the deeper nature of language and capturing the computational properties of linguistic structures. Human language technologies (HLTs) attempt to adopt these insights and algorithms and turn them into functioning, high-performance programs that can impact the ways we interact with computers using language. With more and more people using the Internet every day, the amount of linguistic data available to researchers has increased significantly, allowing linguistic modeling problems to be viewed as ML tasks, rather than limited to the relatively small amounts of data that humans are able to process on their own.

However, it is not enough to simply provide a computer with a large amount of data and expect it to learn to speak—the data has to be prepared in such a way that the computer can more easily find patterns and inferences. This is usually done by adding relevant metadata to a dataset. Any metadata tag used to mark up elements of the dataset is called an annotation over the input. However, in order for the algorithms to learn efficiently and effectively, the annotation done on the data must be accurate, and relevant to the task the machine is being asked to perform. For this reason, the discipline of language annotation is a critical link in developing intelligent human language technologies.

Giving an ML algorithm too much information can slow it down and lead to inaccurate results, or result in the algorithm being so molded to the training data that it becomes “overfit” and provides less accurate results than it might otherwise on new data. It’s important to think carefully about what you are trying to accomplish, and what information is most relevant to that goal. Later in the book we will give examples of how to find that information, and how to determine how well your algorithm is performing at the task you’ve set for it.

Datasets of natural language are referred to as corpora, and a single set of data annotated with the same specification is called an annotated corpus. Annotated corpora can be used to train ML algorithms. In this chapter we will define what a corpus is, explain what is meant by an annotation, and describe the methodology used for enriching a linguistic data collection with annotations for machine learning.
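To make the idea of "metadata tags over the input" a little more concrete, here is a minimal Python sketch of one common way to represent annotations: as labeled character spans stored alongside the unmodified text. The tag set, class names, and example sentence are all assumptions invented for this illustration; they are not the specification or format used later in the book.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical annotation specification: the only tags annotators may use.
SPEC_TAGS = {"PERSON", "LOCATION"}


@dataclass(frozen=True)
class Annotation:
    start: int  # offset of the first character of the span
    end: int    # offset one past the last character of the span
    tag: str    # label drawn from the specification


@dataclass
class AnnotatedDocument:
    text: str
    annotations: List[Annotation]

    def validate(self) -> None:
        """Reject tags outside the specification and spans outside the text."""
        for ann in self.annotations:
            if ann.tag not in SPEC_TAGS:
                raise ValueError(f"tag {ann.tag!r} is not in the specification")
            if not (0 <= ann.start < ann.end <= len(self.text)):
                raise ValueError(f"span {ann.start}-{ann.end} is out of range")

    def tagged_spans(self) -> List[Tuple[str, str]]:
        """Return (covered text, tag) pairs for every annotation."""
        return [(self.text[a.start:a.end], a.tag) for a in self.annotations]


doc = AnnotatedDocument(
    text="Alice flew to Paris.",
    annotations=[Annotation(0, 5, "PERSON"), Annotation(14, 19, "LOCATION")],
)

# An annotated corpus, in this sketch, is a list of documents that all
# validate against the same specification.
corpus = [doc]
for document in corpus:
    document.validate()

print(doc.tagged_spans())
```

Keeping the annotations separate from the text means the same document can carry several independent layers of tags without the source being altered, which is one reason this layout is a reasonable starting point.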

The Layers of Linguistic Description

While it is not necessary to have formal linguistic training in order to create an annotated corpus, we will be drawing on examples of many different types of annotation tasks, and you will find this book more helpful if you have a basic understanding of the different aspects of language that are studied and used for annotations. Grammar is the name typically given to the mechanisms responsible for creating well-formed structures in language. Most linguists view grammar as itself consisting of distinct modules or systems, either by cognitive design or for descriptive convenience. These areas usually include syntax, semantics, morphology, phonology (and phonetics), and the lexicon. Areas beyond grammar that relate to how language is embedded in human activity include discourse, pragmatics, and text theory. The following list provides more detailed descriptions of these areas:

- Syntax: The study of how words are combined to form sentences. This includes examining parts of speech and how they combine to make larger constructions.

- Semantics: The study of meaning in language. Semantics examines the relations between words and what they are being used to represent.

- Morphology: The study of units of meaning in a language. A morpheme is the smallest unit of language that has meaning or function, a definition that includes words, affixes (such as prefixes and suffixes), and other word structures that impart meaning.

- Phonology: The study of the sound patterns of a particular language. Aspects of study include determining which phones are significant and distinguish meaning (i.e., the phonemes); how syllables are structured and combined; and what features are needed to describe the discrete units (segments) in the language, and how they are interpreted.

- Phonetics: The study of the sounds of human speech, and how they are made and perceived. A phone is the term for an individual speech sound, and is essentially the smallest unit of human speech.

- Lexicon: The study of the words and phrases used in a language, that is, a language’s vocabulary.

- Discourse: The study of exchanges of information, usually in the form of conversations, and particularly the flow of information across sentence boundaries.

- Pragmatics: The study of how the context of text affects the meaning of an expression, and what information is necessary to infer a hidden or presupposed meaning.

- Text theory: The study of how narratives and other textual styles are constructed to make larger textual compositions.

Throughout this book we will present examples of annotation projects that make use of various combinations of the different concepts outlined in the preceding list.

What Is Natural Language Processing?

Natural Language Processing (NLP) is a field of computer science and engineering that has developed from the study of language and computational linguistics within the field of Artificial Intelligence. The goals of NLP are to design and build applications that facilitate human interaction with machines and other devices through the use of natural language. Some of the major areas of NLP include:

- Question answering: Imagine being able to actually ask your computer or your phone what time your favorite restaurant in New York stops serving dinner on Friday nights. Rather than typing a (still) clumsy set of keywords into a search window, you could simply ask in plain, natural language (your own, whether it’s English, Mandarin, or Spanish). While systems such as Siri for the iPhone are a good start to this process, it’s clear that Siri doesn’t fully understand all of natural language, just a subset of key phrases.

- Summarization: This area includes applications that can take a collection of documents or emails and produce a coherent summary of their content. Such programs also aim to provide snap “elevator summaries” of longer documents, and possibly even turn them into slide presentations.

- Machine translation: The holy grail of NLP applications, this was the first major area of research and engineering in the field. Programs such as Google Translate are getting better and better, but the real killer app will be the BabelFish that translates in real time when you’re looking for the right train to catch in Beijing.

- Speech recognition: This is one of the most difficult problems in NLP. There has been great progress in building models that can be used on your phone or computer to recognize spoken language utterances that are questions and commands. Unfortunately, while these Automatic Speech Recognition (ASR) systems are ubiquitous, they work best in narrowly defined domains and don’t allow the speaker to stray from the expected scripted input (“Please say or type your card number now”).

- Document classification: This is one of the most successful areas of NLP, wherein the task is to identify in which category (or bin) a document should be placed. This has proved to be enormously useful for applications such as spam filtering, news article classification, and movie reviews, among others. One reason this has had such a big impact is the relative simplicity of the learning models needed for training the algorithms that do the classification.

As we mentioned in the Preface, the Natural Language Toolkit (NLTK), described in the O’Reilly book Natural Language Processing with Python, is a wonderful introduction to the techniques necessary to build many of the applications described in the preceding list. One of the goals of this book is to give you the knowledge to build specialized language corpora (i.e., training and test datasets) that are necessary for developing such applications.
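The remark above about the relative simplicity of the models used for document classification can be illustrated with a small sketch. The code below trains a multinomial naive Bayes classifier with add-one smoothing on a handful of labeled texts, using only the Python standard library. Naive Bayes is chosen here as one familiar example of a simple model, not as the method the book prescribes, and the four training sentences and the labels "spam" and "ham" are invented for the illustration.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase and split on whitespace; a deliberately crude tokenizer."""
    return text.lower().split()


def train(labeled_docs):
    """Count documents per label and words per label from (text, label) pairs."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in labeled_docs:
        label_counts[label] += 1
        for word in tokenize(text):
            word_counts[label][word] += 1
            vocab.add(word)
    return label_counts, word_counts, vocab


def classify(text, model):
    """Return the label with the highest log-probability for the text."""
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    scores = {}
    for label in label_counts:
        score = math.log(label_counts[label] / total_docs)  # log prior
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            if word in vocab:  # words never seen in training are ignored
                # Add-one smoothing avoids zero probabilities.
                score += math.log(
                    (word_counts[label][word] + 1) / (total_words + len(vocab))
                )
        scores[label] = score
    return max(scores, key=scores.get)


# Invented toy training set; the labels play the role of annotations.
TRAINING = [
    ("win cash prize now", "spam"),
    ("cheap prize offer now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday with the team", "ham"),
]

model = train(TRAINING)
print(classify("claim your cash prize", model))
print(classify("agenda for the team meeting", model))
```

Note that the labels attached to each training text are themselves a very simple form of annotation: the classifier can only be as good as the labeled data it is given.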

A Brief History of Corpus Linguistics

In the mid-20th century, linguistics was practiced primarily as a descriptive field, used to study structural properties within a language and typological variations between languages. This work resulted in fairly sophisticated models of the different informational components comprising linguistic utterances. As in the other social sciences, the collection and analysis of data was also being subjected to quantitative techniques from statistics. In the 1940s, linguists such as Bloomfield were starting to think that language could be explained in probabilistic and behaviorist terms. Empirical and statistical methods became popular in the 1950s, and Shannon’s information-theoretic view of language analysis appeared to provide a solid quantitative approach for modeling qualitative descriptions of linguistic structure.

Unfortunately, the development of statistical and quantitative methods for linguistic analysis hit a brick wall in the 1950s. This was due primarily to two factors. First, there was the problem of data availability. One of the problems with applying statistical methods to the language data at the time was that the datasets were generally so small that it was not possible to make interesting statistical generalizations over large numbers of linguistic phenomena. Second, and perhaps more important, there was a general shift in the social sciences from data-oriented descriptions of human behavior to introspective modeling of cognitive functions.

As part of this new attitude toward human activity, the linguist Noam Chomsky focused on both a formal methodology and a theory of linguistics that not only ignored quantitative language data, but also claimed that it was misleading for formulating models of language behavior (Chomsky 1957).

This view was very influential throughout the 1960s and 1970s, largely because the formal approach was able to develop extremely sophisticated rule-based language models using mostly introspective (or self-generated) data. This was a very attractive alternative to trying to create statistical language models on the basis of still relatively small datasets of linguistic utterances from the existing corpora in the field. Formal modeling and rule-based generalizations, in fact, have always been an integral step in theory formation, and in this respect, Chomsky’s approach to doing linguistics has yielded rich and elaborate models of language.

Here’s a quick overview of some of the milestones in the field, leading up to where we are now.

1950s : Descriptive linguists compile collections of spoken and written utterances of various languages from field research. Literary researchers begin compiling systematic collections of the complete works of different authors. Key Word in Context (KWIC) is invented as a means of indexing documents and creating concordances.

1960s : Kucera and Francis publish A Standard Corpus of Present-Day American English (the Brown Corpus ), the first broadly available large corpus of language texts. Work in Information Retrieval (IR) develops techniques for statistical similarity of document content.

1970s : Stochastic models developed from speech corpora make Speech Recognition systems possible. The vector space model is developed for document indexing. The London-Lund Corpus (LLC) is developed through the work of the Survey of English Usage .

1980s : The Lancaster-Oslo-Bergen (LOB) Corpus, designed to match the Brown Corpus in terms of size and genres, is compiled. The COBUILD (Collins Birmingham University International Language Database) dictionary is published, the first based on examining usage from a large English corpus, the Bank of English. The Survey of English Usage Corpus inspires the creation of a comprehensive corpus-based grammar, Grammar of English . The Child Language Data Exchange System (CHILDES) Corpus is released as a repository for first language acquisition data.

1990s : The Penn TreeBank is released. This is a corpus of tagged and parsed sentences of naturally occurring English (4.5 million words). The British National Corpus (BNC) is compiled and released as the largest corpus of English to date (100 million words). The Text Encoding Initiative (TEI) is established to develop and maintain a standard for the representation of texts in digital form.

2000s: As the World Wide Web grows, more data is available for statistical models for Machine Translation and other applications. The American National Corpus (ANC) project releases a 22-million-word subcorpus, and the Corpus of Contemporary American English (COCA) is released (400 million words). Google releases its Google N-gram Corpus of 1 trillion word tokens from public web pages. The corpus holds n-grams of up to five words, along with their frequencies.

2010s : International standards organizations, such as ISO, begin to recognize and co-develop text encoding formats that are being used for corpus annotation efforts. The Web continues to make enough data available to build models for a whole new range of linguistic phenomena. Entirely new forms of text corpora, such as Twitter , Facebook , and blogs , become available as a resource.

Theory construction, however, also involves testing and evaluating your hypotheses against observed phenomena. As more linguistic data has gradually become available, something significant has changed in the way linguists look at data. The phenomena are now observable in millions of texts and billions of sentences over the Web, and this has left little doubt that quantitative techniques can be meaningfully applied to both test and create the language models correlated with the datasets. This has given rise to the modern age of corpus linguistics. As a result, the corpus is the entry point from which all linguistic analysis will be done in the future.

You gotta have data! As philosopher of science Thomas Kuhn said: “When measurement departs from theory, it is likely to yield mere numbers, and their very neutrality makes them particularly sterile as a source of remedial suggestions. But numbers register the departure from theory with an authority and finesse that no qualitative technique can duplicate, and that departure is often enough to start a search” ( Kuhn 1961 ).

The assembly and collection of texts into more coherent datasets that we can call corpora started in the 1960s.

Some of the most important corpora are listed in Table 1-1 .

What Is a Corpus?

A corpus is a collection of machine-readable texts that have been produced in a natural communicative setting. The texts have been sampled to be representative and balanced with respect to particular factors; for example, by genre: newspaper articles, literary fiction, spoken speech, blogs and diaries, and legal documents. A corpus is said to be “representative of a language variety” if the content of the corpus can be generalized to that variety (Leech 1991).

This is not as circular as it may sound. Basically, if the content of the corpus, defined by specifications of linguistic phenomena examined or studied, reflects that of the larger population from which it is taken, then we can say that it “represents that language variety.”

The notion of a corpus being balanced is an idea that has been around since the 1980s, but it is still a rather fuzzy notion and difficult to define strictly. Atkins and Ostler (1992) propose a formulation of attributes that can be used to define the types of text, and thereby contribute to creating a balanced corpus.

Two well-known corpora can be compared for their effort to balance the content of the texts. The Penn TreeBank ( Marcus et al. 1993 ) is a 4.5-million-word corpus that contains texts from four sources: the Wall Street Journal , the Brown Corpus, ATIS, and the Switchboard Corpus. By contrast, the BNC is a 100-million-word corpus that contains texts from a broad range of genres, domains, and media.

The most diverse subcorpus within the Penn TreeBank is the Brown Corpus, which is a 1-million-word corpus consisting of 500 English text samples, each one approximately 2,000 words. It was collected and compiled by Henry Kucera and W. Nelson Francis of Brown University (hence its name) from a broad range of contemporary American English in 1961. In 1967, they released a fairly extensive statistical analysis of the word frequencies and behavior within the corpus, the first of its kind in print, as well as the Brown Corpus Manual ( Francis and Kucera 1964 ).

There has never been any doubt that all linguistic analysis must be grounded on specific datasets. What has recently emerged is the realization that all linguistics will be bound to corpus-oriented techniques, one way or the other. Corpora are becoming the standard data exchange format for discussing linguistic observations and theoretical generalizations, and certainly for evaluation of systems, both statistical and rule-based.

Table 1-2 shows how the Brown Corpus compares to other corpora that are also still in use.

Looking at the way the files of the Brown Corpus can be categorized gives us an idea of what sorts of data were used to represent the English language. The top two general data categories are informative, with 374 samples, and imaginative, with 126 samples.

These two domains are further distinguished into the following topic areas:

Informative: Press: reportage (44), Press: editorial (27), Press: reviews (17), Religion (17), Skills and Hobbies (36), Popular Lore (48), Belles Lettres, Biography, Memoirs (75), Miscellaneous (30), Natural Sciences (12), Medicine (5), Mathematics (4), Social and Behavioral Sciences (14), Political Science, Law, Education (15), Humanities (18), Technology and Engineering (12)

Imaginative: General Fiction (29), Mystery and Detective Fiction (24), Science Fiction (6), Adventure and Western Fiction (29), Romance and Love Story (29), Humor (9)

Similarly, the BNC can be categorized into informative and imaginative prose, and further into subdomains such as educational , public , business , and so on. A further discussion of how the BNC can be categorized can be found in Distributions Within Corpora .

As you can see from the numbers given for the Brown Corpus, not every category is equally represented, which seems to be a violation of the rule of “representative and balanced” that we discussed before. However, these corpora were not assembled with a specific task in mind; rather, they were meant to represent written and spoken language as a whole. Because of this, they attempt to embody a large cross section of existing texts, though whether they succeed in representing percentages of texts in the world is debatable (but also not terribly important).

For your own corpus, you may find yourself wanting to cover a wide variety of text, but it is likely that you will have a more specific task domain, and so your potential corpus will not need to include the full range of human expression. The Switchboard Corpus is an example of a corpus that was collected for a very specific purpose—Speech Recognition for phone operation—and so was balanced and representative of the different sexes and all different dialects in the United States.

Early Use of Corpora

One of the most common uses of corpora from the early days was the construction of concordances . These are alphabetical listings of the words in an article or text collection with references given to the passages in which they occur. Concordances position a word within its context, and thereby make it much easier to study how it is used in a language, both syntactically and semantically. In the 1950s and 1960s, programs were written to automatically create concordances for the contents of a collection, and the results of these automatically created indexes were called “Key Word in Context” indexes, or KWIC indexes . A KWIC index is an index created by sorting the words in an article or a larger collection such as a corpus, and aligning them in a format so that they can be searched alphabetically in the index. This was a relatively efficient means for searching a collection before full-text document search became available.

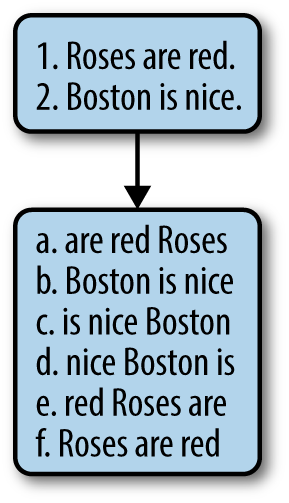

The way a KWIC index works is as follows. The input to a KWIC system is a file or collection structured as a sequence of lines. The output is a sequence of lines, circularly shifted and presented in alphabetical order of the first word. For an example, consider a short article of two sentences, shown in Figure 1-1 with the KWIC index output that is generated.
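The circular-shift procedure just described can be sketched in a few lines of Python. This is a minimal illustration rather than a reconstruction of any historical KWIC program; the function name `kwic_index` and the two-line sample "article" are assumptions made for the example, and sorting is case-insensitive by choice.

```python
def kwic_index(lines):
    """Return every circular shift of every line, sorted by first word."""
    shifts = []
    for line in lines:
        words = line.split()
        for i in range(len(words)):
            # Rotate the line so that word i comes first.
            shifts.append(" ".join(words[i:] + words[:i]))
    # Case-insensitive alphabetical order (an assumption of this sketch).
    return sorted(shifts, key=str.lower)


# Hypothetical two-line input, standing in for a short article.
article = ["The cat sat", "Dogs bark"]
for entry in kwic_index(article):
    print(entry)
```

Each word of the input becomes the first word of exactly one output line, so every word can be looked up alphabetically while still being followed by the rest of its line.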

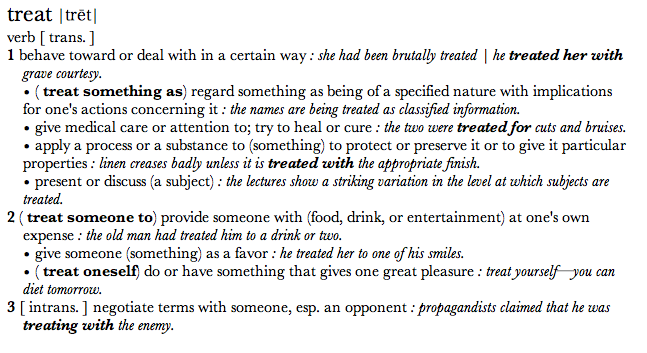

Another benefit of concordancing is that, by displaying the keyword in its context, you can visually inspect how the word is being used in a given sentence. To take a specific example, consider the different meanings of the English verb treat . Specifically, let’s look at the first two senses within sense (1) from the dictionary entry shown in Figure 1-2 .

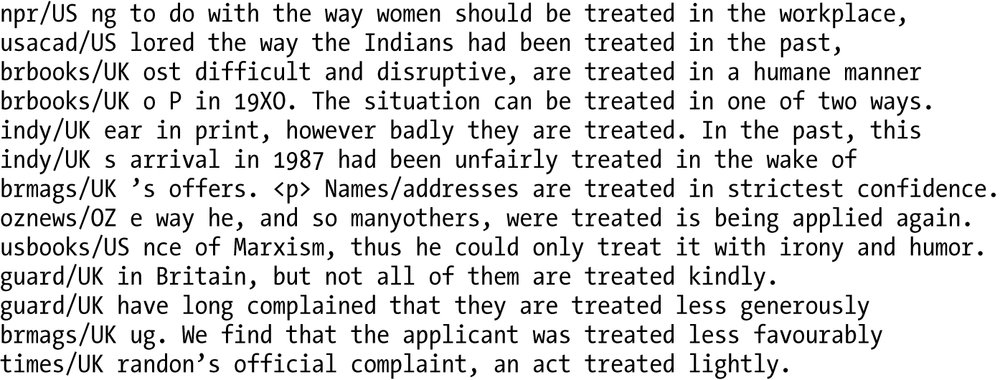

Now let’s look at the concordances compiled for this verb from the BNC, as differentiated by these two senses.

These concordances were compiled using the Word Sketch Engine , by the lexicographer Patrick Hanks, and are part of a large resource of sentence patterns using a technique called Corpus Pattern Analysis ( Pustejovsky et al. 2004 ; Hanks and Pustejovsky 2005 ).

What is striking when one examines the concordance entries for each of these senses is the fact that the contexts are so distinct. These are presented in Figures 1-3 and 1-4 .

The NLTK provides functionality for creating concordances. The easiest way to make a concordance is to simply load the preprocessed texts into the NLTK and then use the concordance function, like this:

If you have your own set of data for which you would like to create a concordance, then the process is a little more involved: you will need to read in your files and use the NLTK functions to process them before you can create your own concordance. Here is some sample code for a corpus of text files (replace the directory location with your own folder of text files):

You can see if the files were read by checking what file IDs are present:

Next, process the words in the files and then use the concordance function to examine the data:
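To make concrete what a concordance routine computes, here is a minimal sketch that uses only the Python standard library. It is not the NLTK implementation: the function name `concordance`, its `width` parameter, and the sample text are all assumptions made for illustration.

```python
import re


def concordance(text, keyword, width=30):
    """Return one line per occurrence of keyword, with `width`
    characters of context on each side."""
    lines = []
    pattern = re.compile(r"\b" + re.escape(keyword) + r"\b", re.IGNORECASE)
    for match in pattern.finditer(text):
        left = text[max(0, match.start() - width):match.start()]
        right = text[match.end():match.end() + width]
        # Right-align the left context so the keywords line up in a column.
        lines.append(f"{left:>{width}} [{match.group()}] {right}")
    return lines


# Hypothetical sample text; in practice this would be read from your files.
sample = ("Doctors treat patients with care. "
          "Managers should treat employees as partners.")
for line in concordance(sample, "treat", width=20):
    print(line)
```

Because the keyword always starts in the same column, differences in the surrounding context (here, who is treated and how) are easy to spot by eye.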

Corpora Today

When did researchers start to actually use corpora for modeling language phenomena and training algorithms? Beginning in the 1980s, researchers in Speech Recognition began to compile enough spoken language data to create language models (from transcriptions using n-grams and Hidden Markov Models [HMMs]) that worked well enough to recognize a limited vocabulary of words in a very narrow domain. In the 1990s, work in Machine Translation began to see the influence of larger and larger datasets, and with this, the rise of statistical language modeling for translation.

Eventually, both memory and computer hardware became sophisticated enough to collect and analyze increasingly larger datasets of language fragments. This entailed being able to create statistical language models that actually performed with some reasonable accuracy for different natural language tasks.

As one example of the increasing availability of data, Google has recently released the Google Ngram Corpus . The Google Ngram dataset allows users to search for single words (unigrams) or collocations of up to five words (5-grams). The dataset is available for download from the Linguistic Data Consortium, and directly from Google . It is also viewable online through the Google Ngram Viewer . The Ngram dataset consists of more than one trillion tokens (words, numbers, etc.) taken from publicly available websites and sorted by year, making it easy to view trends in language use. In addition to English, Google provides n-grams for Chinese, French, German, Hebrew, Russian, and Spanish, as well as subsets of the English corpus such as American English and English Fiction.

N-grams are sets of items (often words, but they can be letters, phonemes, etc.) that are part of a sequence. By examining how often the items occur together we can learn about their usage in a language, and predict what would likely follow a given sequence (using n-grams for this purpose is called n-gram modeling ).

N-grams are applied in a variety of ways every day, such as in websites that provide search suggestions once a few letters are typed in, and for determining likely substitutions for spelling errors. They are also used in speech disambiguation—if a person speaks unclearly but utters a sequence that does not commonly (or ever) occur in the language being spoken, an n-gram model can help recognize that problem and find the words that the speaker probably intended to say.
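As a small illustration of n-gram modeling, the following sketch extracts n-grams from a token list and uses bigram counts to predict the most likely next word. The function names and the ten-word sample are assumptions made for the example; a real model would be trained on a far larger corpus and would normally apply smoothing.

```python
from collections import Counter, defaultdict


def ngrams(tokens, n):
    """Return the list of n-grams (as tuples) in a token sequence."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def train_bigram_model(tokens):
    """Map each word to a Counter of the words observed right after it."""
    followers = defaultdict(Counter)
    for first, second in ngrams(tokens, 2):
        followers[first][second] += 1
    return followers


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    if word not in model:
        return None
    return model[word].most_common(1)[0][0]


# Hypothetical toy corpus.
tokens = "the cat sat on the mat and the cat slept".split()
model = train_bigram_model(tokens)
print(ngrams(tokens, 3)[:2])
print(predict_next(model, "the"))
```

In this toy corpus "the" is followed by "cat" twice and "mat" once, so the model proposes "cat"; search suggestion and spelling correction rely on the same idea at a much larger scale.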

Another modern corpus is ClueWeb09 ( http://lemurproject.org/clueweb09.php/ ), a dataset “created to support research on information retrieval and related human language technologies. It consists of about 1 billion web pages in ten languages that were collected in January and February 2009.” This corpus is too large to use for an annotation project (it’s about 25 terabytes uncompressed), but some projects have taken parts of the dataset (such as a subset of the English websites) and used them for research ( Pomikálek et al. 2012 ). Data collection from the Internet is an increasingly common way to create corpora, as new and varied content is always being created.

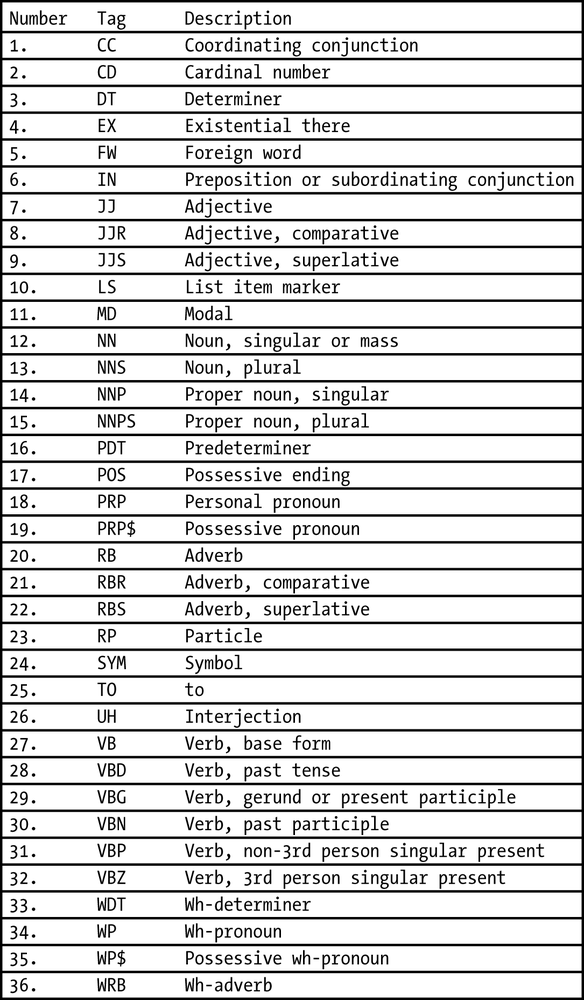

Kinds of Annotation

Consider the different parts of a language’s syntax that can be annotated. These include part of speech (POS) , phrase structure , and dependency structure . Table 1-3 shows examples of each of these. There are many different tagsets for the parts of speech of a language that you can choose from.

The tagset in Figure 1-5 is taken from the Penn TreeBank, and is the basis for all subsequent annotation over that corpus.

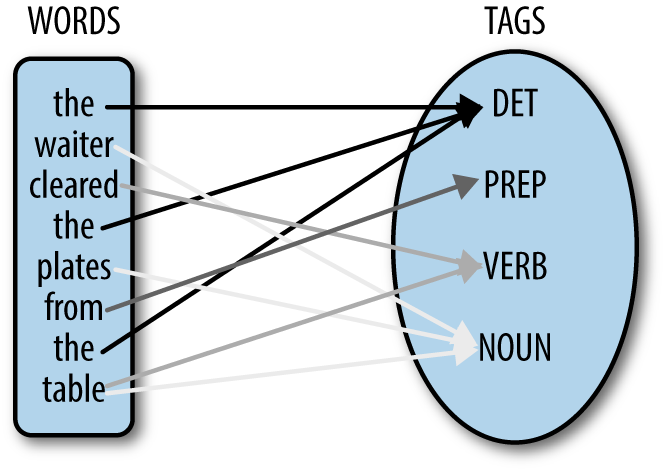

The POS tagging process involves assigning the right lexical class marker(s) to all the words in a sentence (or corpus). This is illustrated in a simple example, “The waiter cleared the plates from the table.” (See Figure 1-6 .)

POS tagging is a critical step in many NLP applications, since it is important to know what category a word is assigned to in order to perform subsequent analysis on it, such as the following:

Is the word a noun or a verb? Examples include object , overflow , insult , and suspect . Without context, each of these words could be either a noun or a verb.

You need POS tags in order to make larger syntactic units. For example, in the sentences “Clean dishes are in the cabinet.” and “Clean dishes before going to work!”, is “clean dishes” a noun phrase or an imperative verb phrase?

Getting the POS tags and the subsequent parse right makes all the difference when translating the expressions in the preceding list item into another language, such as French: “Des assiettes propres” (Clean dishes) versus “Fais la vaisselle!” (Clean the dishes!).

Consider how these tags are used in the following sentence, from the Penn TreeBank ( Marcus et al. 1993 ):

Identifying the correct parts of speech in a sentence is a necessary step in building many natural language applications, such as parsers, Named Entity Recognizers, Question Answering Systems (QAS), and Machine Translation systems. It is also an important step toward identifying larger structural units such as phrase structure.

Use the NLTK tagger to assign POS tags to the example sentence shown here, and then try it on other sentences that might be more ambiguous:

Look for places where the tagger doesn’t work, and think about what rules might be causing these errors. For example, what happens when you try “Clean dishes are in the cabinet.” and “Clean dishes before going to work!”?

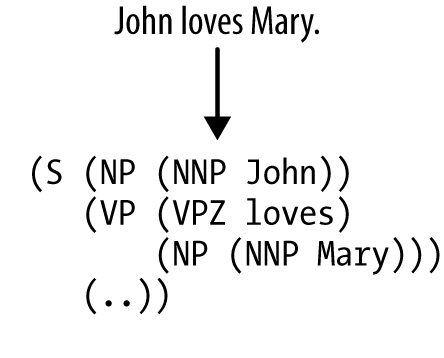

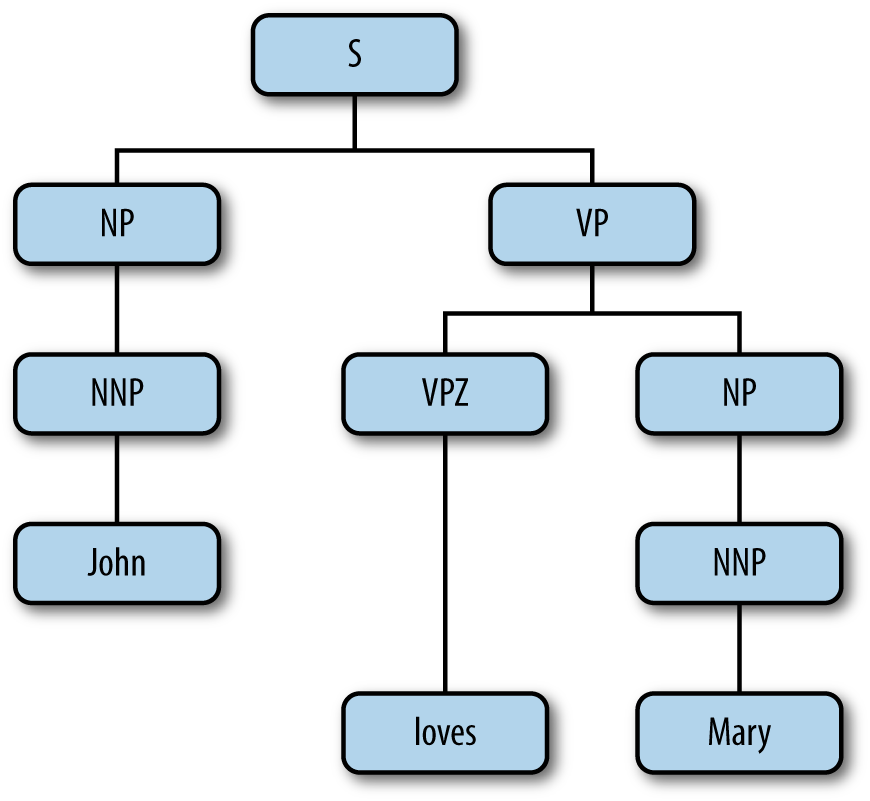

While words have labels associated with them (the POS tags mentioned earlier), specific sequences of words also have labels that can be associated with them. This is called syntactic bracketing (or labeling) and is the structure that organizes all the words we hear into coherent phrases. As mentioned earlier, syntax is the name given to the structure associated with a sentence. The Penn TreeBank is an annotated corpus with syntactic bracketing explicitly marked over the text. An example annotation is shown in Figure 1-7 .

This is a bracketed representation of the syntactic tree structure, which is shown in Figure 1-8 .

Notice that syntactic bracketing introduces two relations between the words in a sentence: order (precedence) and hierarchy (dominance). For example, the tree structure in Figure 1-8 encodes these relations by the very nature of a tree as a directed acyclic graph (DAG). In a very compact form, the tree captures the precedence and dominance relations given in the following list:

{Dom(NNP1,John), Dom(VPZ,loves), Dom(NNP2,Mary), Dom(NP1,NNP1), Dom(NP2,NNP2), Dom(S,NP1), Dom(VP,VPZ), Dom(VP,NP2), Dom(S,VP), Prec(NP1,VP), Prec(VPZ,NP2)}
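The dominance and precedence relations can be read off a tree mechanically. The sketch below assumes a tree encoded as nested `(label, children)` pairs with plain strings as leaves; that encoding, the function name `relations`, and the tree itself (reconstructed from the relation list above, using its node labels as given) are assumptions made for illustration.

```python
def relations(tree):
    """Collect immediate-dominance and sibling-precedence pairs.

    A tree is a (label, children) pair; a leaf is a plain string.
    """
    dom, prec = set(), set()

    def label_of(node):
        return node if isinstance(node, str) else node[0]

    def walk(node):
        if isinstance(node, str):
            return
        label, children = node
        for child in children:
            # A node immediately dominates each of its children.
            dom.add((label, label_of(child)))
            walk(child)
        # Adjacent siblings stand in the precedence relation.
        for left, right in zip(children, children[1:]):
            prec.add((label_of(left), label_of(right)))

    walk(tree)
    return dom, prec


# Tree for "John loves Mary", with labels copied from the list above.
tree = ("S", [
    ("NP1", [("NNP1", ["John"])]),
    ("VP", [("VPZ", ["loves"]),
            ("NP2", [("NNP2", ["Mary"])])]),
])
dom, prec = relations(tree)
print(sorted(dom))
print(sorted(prec))
```

The nine dominance pairs and two precedence pairs produced here are exactly those in the list, which shows how compactly the tree notation encodes them.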

Any sophisticated natural language application requires some level of syntactic analysis, including Machine Translation. If the resources for full parsing (such as that shown earlier) are not available, then some sort of shallow parsing can be used. This is when partial syntactic bracketing is applied to sequences of words, without worrying about the details of the structure inside a phrase. We will return to this idea in later chapters.

In addition to POS tagging and syntactic bracketing, it is useful to annotate texts in a corpus for their semantic value, that is, what the words mean in the sentence. We can distinguish two kinds of annotation for semantic content within a sentence: what something is , and what role something plays. Here is a more detailed explanation of each:

Semantic typing: A word or phrase in the sentence is labeled with a type identifier (from a reserved vocabulary or ontology), indicating what it denotes.

Semantic role labeling: A word or phrase in the sentence is identified as playing a specific semantic role relative to a role assigner, such as a verb.

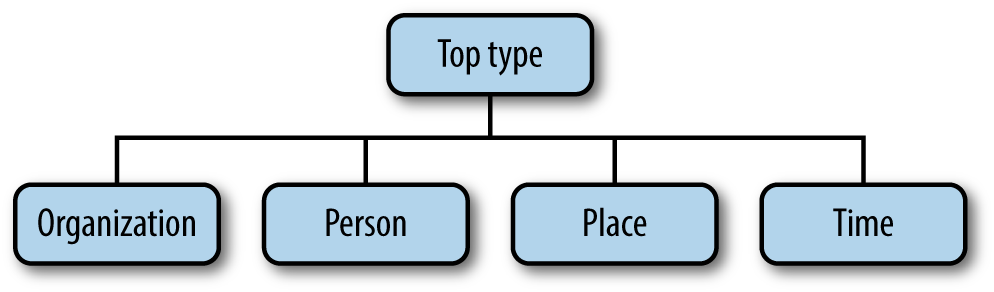

Let’s consider what annotation using these two strategies would look like, starting with semantic types. Types are commonly defined using an ontology, such as that shown in Figure 1-9 .

The word ontology has its roots in philosophy, but ontologies also have a place in computational linguistics, where they are used to create categorized hierarchies that group similar concepts and objects. By assigning words semantic types in an ontology, we can create relationships between different branches of the ontology, and determine whether linguistic rules hold true when applied to all the words in a category.

The ontology in Figure 1-9 is rather simple, with a small set of categories. However, even this small ontology can be used to illustrate some interesting features of language. Consider the following example, with semantic types marked:

[Ms. Ramirez] Person of [QBC Productions] Organization visited [Boston] Place on [Saturday] Time , where she had lunch with [Mr. Harris] Person of [STU Enterprises] Organization at [1:15 pm] Time .

From this small example, we can start to make observations about how these objects interact with one another. People can visit places, people have “of” relationships with organizations, and lunch can happen on Saturday at 1:15 p.m. Given a large enough corpus of similarly labeled sentences, we can start to detect patterns in usage that will tell us more about how these labels do and do not interact.

A corpus of these examples can also tell us where our categories might need to be expanded. There are two “times” in this sentence: Saturday and 1:15 p.m. We can see that events can occur “on” Saturday, but “at” 1:15 p.m. A larger corpus would show that this pattern remains true with other days of the week and hour designations—there is a difference in usage here that cannot be inferred from the semantic types. However, not all ontologies will capture all information—the applications of the ontology will determine whether it is important to capture the difference between Saturday and 1:15 p.m.
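Once spans carry type labels, observations like these can be collected automatically. The sketch below assumes an inline notation of the form `[text]_Type` (an invented convention for this example, not a standard annotation format) and uses regular expressions to pull out the typed spans and the preposition that introduces each Time expression.

```python
import re

# Assumed inline notation: [text]_Type, e.g. "[Boston]_Place".
SPAN = re.compile(r"\[([^\]]+)\]_(\w+)")
# The word immediately before a Time span (e.g. "on", "at").
TIME_PREP = re.compile(r"(\w+) \[([^\]]+)\]_Time")

sentence = (
    "[Ms. Ramirez]_Person of [QBC Productions]_Organization visited "
    "[Boston]_Place on [Saturday]_Time, where she had lunch with "
    "[Mr. Harris]_Person of [STU Enterprises]_Organization at "
    "[1:15 pm]_Time."
)

# (text, type) pairs for every annotated span.
entities = SPAN.findall(sentence)
# (preposition, time expression) pairs.
time_preps = TIME_PREP.findall(sentence)

print(entities)
print(time_preps)
```

Run over a large annotated corpus, counts of such preposition and time pairs would expose the "on" versus "at" distinction even though both expressions share the single type Time.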

The annotation strategy we just described marks up what a linguistic expression refers to. But let’s say we want to know the basics for Question Answering , namely, the who , what , where , and when of a sentence. This involves identifying what are called the semantic role labels associated with a verb. What are semantic roles? Although there is no complete agreement on what roles exist in language (there rarely is with linguists), the following list is a fair representation of the kinds of semantic labels associated with different verbs:

Agent: The event participant that is doing or causing the event to occur

Theme/figure: The event participant who undergoes a change in position or state

Experiencer: The event participant who experiences or perceives something

Source: The location or place from which the motion begins; the person from whom the theme is given

Goal: The location or place to which the motion is directed or terminates

Recipient: The person who comes into possession of the theme

Patient: The event participant who is affected by the event

Instrument: The event participant used by the agent to do or cause the event

Location/ground: The location or place associated with the event itself

The annotated data that results explicitly identifies entity extents and the target relations between the entities:

[The man] agent painted [the wall] patient with [a paint brush] instrument .

[Mary] figure walked to [the cafe] goal from [her house] source .

[John] agent gave [his mother] recipient [a necklace] theme .

[My brother] theme lives in [Milwaukee] location .

Language Data and Machine Learning

Now that we have reviewed the methodology of language annotation along with some examples of annotation formats over linguistic data, we will describe the computational framework within which such annotated corpora are used, namely, that of machine learning. Machine learning is the name given to the area of Artificial Intelligence concerned with the development of algorithms that learn or improve their performance from experience or previous encounters with data. They are said to learn (or generate) a function that maps particular input data to the desired output. For our purposes, the “data” that an ML algorithm encounters is natural language, most often in the form of text, and typically annotated with tags that highlight the specific features that are relevant to the learning task. As we will see, the annotation schemas discussed earlier, for example, provide rich starting points as the input data source for the ML process (the training phase).

When working with annotated datasets in NLP, three major types of ML algorithms are typically used:

Supervised learning: Any technique that generates a function mapping from inputs to a fixed set of labels (the desired output). The labels are typically metadata tags provided by humans who annotate the corpus for training purposes.

Unsupervised learning: Any technique that tries to find structure from an input set of unlabeled data.

Semi-supervised learning: Any technique that generates a function mapping from inputs of both labeled data and unlabeled data; a combination of both supervised and unsupervised learning.

Table 1-4 shows a general overview of ML algorithms and some of the annotation tasks they are frequently used to emulate. We’ll talk more about why these algorithms are used for these different tasks in Chapter 7 .

You’ll notice that some of the tasks appear with more than one algorithm. That’s because different approaches have been tried successfully for different types of annotation tasks, and depending on the most relevant features of your own corpus, different algorithms may prove to be more or less effective. Just to give you an idea of what the algorithms listed in that table mean, the rest of this section gives an overview of the main types of ML algorithms.

Classification

Classification is the task of identifying the labeling for a single entity from a set of data. For example, in order to distinguish spam from not-spam in your email inbox, an algorithm called a classifier is trained on a set of labeled data, where individual emails have been assigned the label [+spam] or [-spam]. It is the presence of certain (known) words or phrases in an email that helps to identify an email as spam. These words are essentially treated as features that the classifier will use to model the positive instances of spam as compared to not-spam. Another example of a classification problem is patient diagnosis, from the presence of known symptoms and other attributes. Here we would identify a patient as having a particular disease, A, and label the patient record as [+disease-A] or [-disease-A], based on specific features from the record or text. This might include blood pressure, weight, gender, age, existence of symptoms, and so forth. The most common algorithms used in classification tasks are Maximum Entropy (MaxEnt), Naïve Bayes, decision trees, and Support Vector Machines (SVMs).
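To show how word features drive a spam decision, here is a minimal Naïve Bayes sketch with add-one smoothing, written with the standard library only. The four training emails, the function names, and the whitespace tokenization are all assumptions made for the example; a practical classifier would be trained on thousands of labeled messages.

```python
import math
from collections import Counter


def train_naive_bayes(examples):
    """examples: list of (text, label) pairs.
    Returns per-label word counts, per-label document counts, vocabulary."""
    word_counts = {}
    doc_counts = Counter()
    vocab = set()
    for text, label in examples:
        doc_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in text.lower().split():
            counts[word] += 1
            vocab.add(word)
    return word_counts, doc_counts, vocab


def classify(text, model):
    """Return the label with the highest posterior log-probability."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    best_label, best_score = None, -math.inf
    for label in doc_counts:
        # log P(label) + sum of log P(word | label), add-one smoothed.
        score = math.log(doc_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            count = word_counts[label][word]
            score += math.log((count + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical labeled training data.
training = [
    ("win cash prize now", "+spam"),
    ("cheap prize offer win", "+spam"),
    ("meeting agenda for monday", "-spam"),
    ("lunch on monday with the team", "-spam"),
]
model = train_naive_bayes(training)
print(classify("win a cash prize", model))
print(classify("agenda for the team lunch", model))
```

The labels supplied by a human annotator are the only supervision the classifier receives; everything else is counted from the words in each message.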

Clustering

Clustering is the name given to ML algorithms that find natural groupings and patterns in the input data, without any labeling or training at all. The problem is generally viewed as an unsupervised learning task, where either the dataset is unlabeled or the labels are ignored in the process of making clusters. The objects within a cluster are “similar in some respect,” and they are “dissimilar to the objects” in other clusters. Some of the more common algorithms used for this task include k-means, hierarchical clustering, Kernel Principal Component Analysis, and Fuzzy C-Means (FCM).
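The k-means procedure can be sketched compactly for one-dimensional data. In this minimal example the data (document lengths in words), the initial centroids, and the function name `k_means` are assumptions chosen for illustration; real uses typically cluster high-dimensional feature vectors and pick initial centroids at random.

```python
def k_means(points, centroids, iterations=10):
    """Cluster 1-D points around the given initial centroids."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        # Assignment step: each point joins its nearest centroid.
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters


# Hypothetical data: document lengths in words.
lengths = [90, 100, 110, 950, 1000, 1050]
centroids, clusters = k_means(lengths, centroids=[0, 500])
print(centroids)
print(clusters)
```

No labels are involved at any point: the short and long documents separate purely because of their distance from each other.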

Structured Pattern Induction

Structured pattern induction involves learning more than the label or category of a single entity: it involves learning a sequence of labels, or other structural dependencies between the labeled items. For example, a sequence of labels might be a stream of phonemes in a speech signal (in Speech Recognition); a sequence of POS tags in a sentence corresponding to a syntactic unit (phrase); a sequence of dialog moves in a phone conversation; or steps in a task such as parsing, coreference resolution, or grammar induction. Algorithms used for such problems include Hidden Markov Models (HMMs), Conditional Random Fields (CRFs), and Maximum Entropy Markov Models (MEMMs).
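As an illustration of sequence labeling with an HMM, the sketch below uses Viterbi decoding to find the most probable tag sequence for a short sentence. The three-tag tagset and every probability in the tables are invented for the example; in practice these parameters would be estimated from an annotated corpus.

```python
def viterbi(words, tags, start_p, trans_p, emit_p):
    """Return the most probable tag sequence for `words` under an HMM."""
    # best[i][tag] = (probability of the best path ending in tag, previous tag)
    best = [{t: (start_p[t] * emit_p[t].get(words[0], 0.0), None)
             for t in tags}]
    for word in words[1:]:
        column = {}
        for t in tags:
            column[t] = max(
                (best[-1][p][0] * trans_p[p][t] * emit_p[t].get(word, 0.0), p)
                for p in tags
            )
        best.append(column)
    # Trace back from the most probable final tag.
    tag = max(tags, key=lambda t: best[-1][t][0])
    path = [tag]
    for column in reversed(best[1:]):
        tag = column[tag][1]
        path.append(tag)
    return list(reversed(path))


# Hypothetical toy parameters (not estimated from any corpus).
tags = ["DT", "NN", "VB"]
start_p = {"DT": 0.8, "NN": 0.1, "VB": 0.1}
trans_p = {
    "DT": {"DT": 0.0, "NN": 0.9, "VB": 0.1},
    "NN": {"DT": 0.1, "NN": 0.2, "VB": 0.7},
    "VB": {"DT": 0.6, "NN": 0.3, "VB": 0.1},
}
emit_p = {
    "DT": {"the": 1.0},
    "NN": {"waiter": 0.5, "plates": 0.4, "cleared": 0.1},
    "VB": {"cleared": 0.8, "plates": 0.2},
}
words = "the waiter cleared the plates".split()
print(viterbi(words, tags, start_p, trans_p, emit_p))
```

Unlike a classifier that labels each word in isolation, the decoder scores whole sequences, so the tag chosen for "plates" depends on the tags chosen for the words before it.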

We will return to these approaches in more detail when we discuss machine learning in greater depth in Chapter 7 .

The Annotation Development Cycle

The features we use for encoding a specific linguistic phenomenon must be rich enough to capture the desired behavior in the algorithm that we are training. These linguistic descriptions are typically distilled from extensive theoretical modeling of the phenomenon. The descriptions in turn form the basis for the annotation values of the specification language, which are themselves the features used in a development cycle for training and testing an identification or labeling algorithm over text. Finally, based on an analysis and evaluation of the performance of a system, the model of the phenomenon may be revised for retraining and testing.

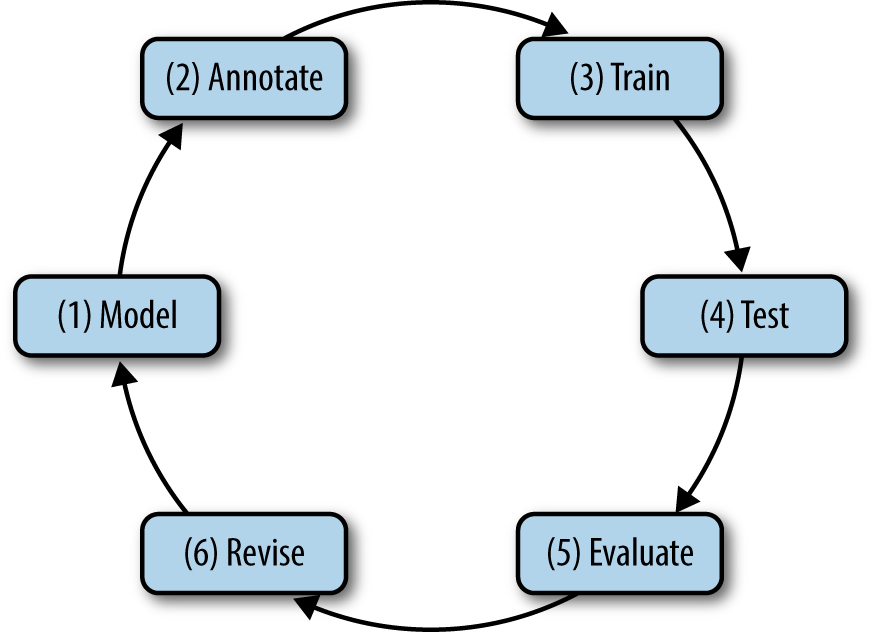

We call this particular cycle of development the MATTER methodology, as detailed here and shown in Figure 1-10 ( Pustejovsky 2006 ):

Model: Structural descriptions provide theoretically informed attributes derived from empirical observations over the data.

Annotate: An annotation scheme assumes a feature set that encodes specific structural descriptions and properties of the input data.

Train: The algorithm is trained over a corpus annotated with the target feature set.

Test: The algorithm is tested against held-out data.

Evaluate: A standardized evaluation of results is conducted.

Revise: The model and the annotation specification are revisited in order to make the annotation more robust and reliable with use in the algorithm.

We assume some particular problem or phenomenon has sparked your interest, for which you will need to label natural language data for training for machine learning. Consider two kinds of problems. First imagine a direct text classification task. It might be that you are interested in classifying your email according to its content or with a particular interest in filtering out spam. Or perhaps you are interested in rating your incoming mail on a scale of what emotional content is being expressed in the message.

Now let’s consider a more involved task, performed over this same email corpus: identifying what are known as Named Entities (NEs) . These are references to everyday things in our world that have proper names associated with them; for example, people, countries, products, holidays, companies, sports, religions, and so on.

Finally, imagine an even more complicated task, that of identifying all the different events that have been mentioned in your mail (birthdays, parties, concerts, classes, airline reservations, upcoming meetings, etc.). Once this has been done, you will need to “timestamp” them and order them, that is, identify when they happened, if in fact they did happen. This is called the temporal awareness problem , and is one of the most difficult in the field.

We will use these different tasks throughout this section to help us clarify what is involved with the different steps in the annotation development cycle.

Model the Phenomenon

The first step in the MATTER development cycle is “Model the Phenomenon.” The steps involved in modeling, however, vary greatly, depending on the nature of the task you have defined for yourself. In this section, we will look at what modeling entails and how you know when you have an adequate first approximation of a model for your task.

The parameters associated with creating a model are quite diverse, and it is difficult to get different communities to agree on just what a model is. In this section we will be pragmatic and discuss a number of approaches to modeling and show how they provide the basis from which to create annotated datasets. Briefly, a model is a characterization of a certain phenomenon in terms that are more abstract than the elements in the domain being modeled. For the following discussion, we will define a model as consisting of a vocabulary of terms, T, the relations between these terms, R, and their interpretation, I. So, a model, M, can be seen as a triple, M = <T,R,I>. To better understand this notion of a model, let us consider the scenarios introduced earlier. For spam detection, we can treat it as a binary text classification task, requiring the simplest model with the categories (terms) spam and not-spam associated with the entire email document. Hence, our model is simply:

T = {Document_type, Spam, Not-Spam}

R = {Document_type ::= Spam | Not-Spam}

I = {Spam = “something we don’t want!”, Not-Spam = “something we do want!”}

The document itself is labeled as being a member of one of these categories. This is called document annotation and is the simplest (and most coarse-grained) annotation possible. Now, when we say that the model contains only the label names for the categories (e.g., sports, finance, news, editorials, fashion, etc.), this means there is no other annotation involved. This does not mean the content of the files is not subject to further scrutiny, however. A document that is labeled as a category, A, for example, is actually analyzed as a large feature vector containing at least the words in the document. A more fine-grained annotation for the same task would be to identify specific words or phrases in the document and label them as also being associated with the category directly. We’ll return to this strategy in Chapter 4. Essentially, a good model of the phenomenon (task) is the starting point for designing the features that go into your learning algorithm. The better the features, the better the performance of the ML algorithm!
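The triple M = <T,R,I> can be written down directly as a small data structure. The following is a minimal sketch in Python, not a prescribed format: the `Model` class and the `annotate_document` helper are hypothetical names introduced here for illustration, and the relations are stored as a simple mapping from a term to its alternatives.

```python
from dataclasses import dataclass


@dataclass
class Model:
    """A model M = <T, R, I>: terms, relations, and interpretations."""
    terms: frozenset
    relations: dict       # maps a term to the terms it can be rewritten as
    interpretation: dict  # maps a term to a human-readable meaning


# The spam model from the text, encoded as a triple.
SPAM_MODEL = Model(
    terms=frozenset({"Document_type", "Spam", "Not-Spam"}),
    relations={"Document_type": ("Spam", "Not-Spam")},
    interpretation={
        "Spam": "something we don't want!",
        "Not-Spam": "something we do want!",
    },
)


def annotate_document(model, doc_id, label):
    """Document annotation: attach one category label to a whole document."""
    allowed = model.relations["Document_type"]
    if label not in allowed:
        raise ValueError(f"{label!r} is not one of {allowed}")
    return {"doc_id": doc_id, "Document_type": label}


# Hypothetical document identifier, used only for the example.
example = annotate_document(SPAM_MODEL, "email_001", "Spam")
```

Rejecting labels that the relation R does not license is one simple way to keep annotations consistent with the model.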

Preparing a corpus with annotations of NEs, as mentioned earlier, involves a richer model than the spam-filter application just discussed. We introduced a four-category ontology for NEs in the previous section, and this will be the basis for our model to identify NEs in text. The model is illustrated as follows:

T = {Named_Entity, Organization, Person, Place, Time}

R = {Named_Entity ::= Organization | Person | Place | Time}

I = {Organization = “list of organizations in a database”, Person = “list of people in a database”, Place = “list of countries, geographic locations, etc.”, Time = “all possible dates on the calendar”}

This model is necessarily more detailed, because we are actually annotating spans of natural language text, rather than simply labeling documents (e.g., emails) as spam or not-spam. That is, within the document, we are recognizing mentions of companies, actors, countries, and dates.
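To make the difference between document annotation and span annotation concrete, here is a minimal sketch that records each NE mention by its character offsets in the text. The `SpanTag` class, the `tag_mention` helper, and the example sentence are assumptions made for illustration; real annotation tools use their own storage formats.

```python
from dataclasses import dataclass

# The four categories from the NE model above.
NE_TYPES = ("Organization", "Person", "Place", "Time")


@dataclass(frozen=True)
class SpanTag:
    start: int  # offset of the first character of the mention
    end: int    # offset one past the last character of the mention
    label: str  # one of NE_TYPES


def tag_mention(text, mention, label):
    """Tag the first occurrence of `mention` in `text` with `label`."""
    if label not in NE_TYPES:
        raise ValueError(f"unknown NE type: {label!r}")
    start = text.find(mention)
    if start == -1:
        raise ValueError(f"{mention!r} does not occur in the text")
    return SpanTag(start, start + len(mention), label)


# Hypothetical sentence, used only for the example.
text = "Jill flew to France on Monday."
tags = [
    tag_mention(text, "Jill", "Person"),
    tag_mention(text, "France", "Place"),
    tag_mention(text, "Monday", "Time"),
]
```

Each tag points back into the text rather than labeling the document as a whole, which is what makes this model richer than the spam model.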

Finally, what about an even more involved task, that of recognizing all temporal information in a document? That is, questions such as the following:

When did that meeting take place?

How long was John on vacation?

Did Jill get promoted before or after she went on maternity leave?

We won’t go into the full model for this domain, but let’s see what is minimally necessary in order to create annotation features to understand such questions. First we need to distinguish between Time expressions (“yesterday,” “January 27,” “Monday”), Events (“promoted,” “meeting,” “vacation”), and Temporal relations (“before,” “after,” “during”). Because our model is so much more detailed, let’s divide the descriptive content by domain:

Time_Expression ::= TIME | DATE | DURATION | SET

TIME: 10:15 a.m., 3 o’clock, etc.

DATE: Monday, April 2011

DURATION: 30 minutes, two years, four days

SET: every hour, every other month

Event: Meeting, vacation, promotion, maternity leave, etc.

Temporal_Relations ::= BEFORE | AFTER | DURING | EQUAL | OVERLAP | ...

We will come back to this problem in a later chapter, when we discuss the impact of the initial model on the subsequent performance of the algorithms you are trying to train over your labeled data.

In later chapters, we’ll see that there are actually several models that might be appropriate for describing a phenomenon, each providing a different view of the data. We’ll call this multimodel annotation of the phenomenon. A common scenario for multimodel annotation involves annotators who have domain expertise in an area (such as biomedical knowledge). They are told to identify specific entities, events, attributes, or facts from documents, given their knowledge and interpretation of a specific area. From this annotation, nonexperts can be used to mark up the structural (syntactic) aspects of these same phenomena, making it possible to gain domain expert understanding without forcing the domain experts to learn linguistic theory as well.

Once you have an initial model for the phenomena associated with the problem task you are trying to solve, you effectively have the first tag specification, or spec, for the annotation. This is the document from which you will create the blueprint for how to annotate the corpus with the features in the model. That blueprint is called the annotation guideline, and we talk about it in the next section.

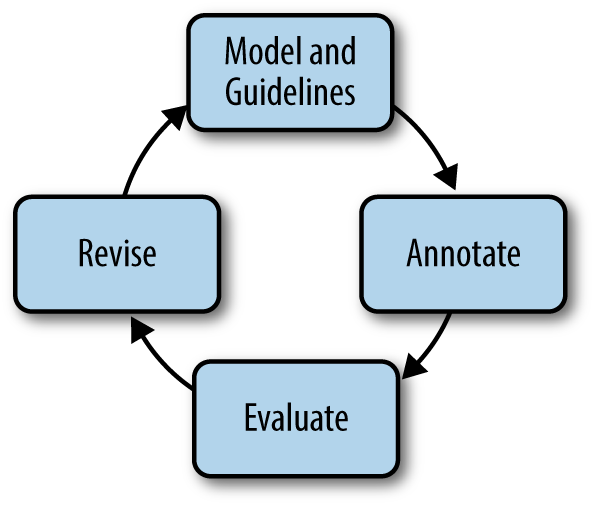

Annotate with the Specification

Now that you have a model of the phenomenon encoded as a specification document, you will need to train human annotators to mark up the dataset according to the tags that are important to you. This is easier said than done, and in fact often requires multiple iterations of modeling and annotating, as shown in Figure 1-11. This process is called the MAMA (Model-Annotate-Model-Annotate) cycle, or the “babeling” phase of MATTER. The annotation guideline helps direct the annotators in the task of identifying the elements and then associating the appropriate features with them, when they are identified.

Two kinds of tags will concern us when annotating natural language data: consuming tags and nonconsuming tags. A consuming tag refers to a metadata tag that has real content from the dataset associated with it (e.g., it “consumes” some text); a nonconsuming tag, on the other hand, is a metadata tag that is inserted into the file but is not associated with any actual part of the text. An example will help make this distinction clear. Say that we want to annotate text for temporal information, as discussed earlier. Namely, we want to annotate for three kinds of tags: times (called Timex tags), temporal relations (TempRels), and Events. In the first sentence in the following example, each tag is expressed directly as real text. That is, they are all consuming tags (“promoted” is marked as an Event, “before” is marked as a TempRel, and “the summer” is marked as a Timex). Notice, however, that in the second sentence, there is no explicit temporal relation in the text, even though we know that it’s something like “on”. So, we actually insert a TempRel with the value of “on” in our corpus, but the tag is flagged as a “nonconsuming” tag.

John was [promoted] Event [before] TempRel [the summer] Timex.

John was [promoted] Event [Monday] Timex.
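One way to picture the difference between the two kinds of tags is to store character offsets only for consuming tags. The sketch below encodes the second sentence this way; the `Tag` class and its field names are hypothetical and are not taken from any particular annotation tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tag:
    label: str                   # "Event", "TempRel", or "Timex"
    start: Optional[int] = None  # character offsets; None for a nonconsuming tag
    end: Optional[int] = None
    value: Optional[str] = None  # value supplied by the annotator, e.g. "on"

    @property
    def consuming(self):
        """A tag is consuming when it is anchored to a stretch of text."""
        return self.start is not None and self.end is not None


def text_of(tag, sentence):
    """Return the text a consuming tag covers, or None for a nonconsuming tag."""
    return sentence[tag.start:tag.end] if tag.consuming else None


sentence = "John was promoted Monday."
tags = [
    Tag("Event", 9, 17),         # consumes "promoted"
    Tag("Timex", 18, 24),        # consumes "Monday"
    Tag("TempRel", value="on"),  # nonconsuming: no text span, only a value
]
```

The TempRel tag still carries information (the value “on”), but nothing in the sentence is consumed by it.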

An important factor when creating an annotated corpus of your text is, of course, consistency in the way the annotators mark up the text with the different tags. One of the most seemingly trivial problems turns out to be one of the most troublesome when comparing annotations: namely, the extent or the span of the tag. Compare the three annotations that follow. In the first, the Organization tag spans “QBC Productions,” leaving out the company identifier “Inc.” and the location “of East Anglia,” while these are included in varying spans in the next two annotations.

[QBC Productions] Organization Inc. of East Anglia

[QBC Productions Inc.] Organization of East Anglia

[QBC Productions Inc. of East Anglia] Organization

Each of these might look correct to an annotator, but only one actually corresponds to the correct markup in the annotation guideline. How are these compared and resolved?
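Before turning to agreement scores, it can help to see why extent matters when annotations are compared mechanically. The following sketch represents each of the three annotations as a pair of character offsets and compares them under two criteria, exact match and overlap. Both criteria and the helper names are assumptions made for illustration; the criterion you actually use should come from your annotation guideline.

```python
def span_of(text, mention):
    """Return (start, end) character offsets of the first occurrence of mention."""
    start = text.index(mention)
    return (start, start + len(mention))


def exact_match(a, b):
    """Strict criterion: both annotators chose identical extents."""
    return a == b


def overlaps(a, b):
    """Lenient criterion: the two extents share at least one character."""
    return a[0] < b[1] and b[0] < a[1]


text = "QBC Productions Inc. of East Anglia"
annotations = [
    span_of(text, "QBC Productions"),
    span_of(text, "QBC Productions Inc."),
    span_of(text, "QBC Productions Inc. of East Anglia"),
]

# Compare every pair of annotations under both criteria.
pairs = [(a, b) for i, a in enumerate(annotations) for b in annotations[i + 1:]]
any_exact = any(exact_match(a, b) for a, b in pairs)
all_overlap = all(overlaps(a, b) for a, b in pairs)
```

Under the strict criterion the three annotators disagree completely, while under the lenient one they agree completely, which is why the guideline has to say explicitly where a tag begins and ends.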

In order to assess how well an annotation task is defined, we use Inter-Annotator Agreement (IAA) scores to show how individual annotators compare to one another. If an IAA score is high, that is an indication that the task is well defined and other annotators will be able to continue the work. Agreement is typically measured using a statistical measure called a Kappa Statistic. For comparing two annotations against each other, the Cohen Kappa is usually used, while when comparing more than two annotations, a Fleiss Kappa measure is used. These will be defined in Chapter 8.
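As a preview, here is a minimal sketch of Cohen's Kappa for two annotators who have each assigned one label per item. It uses the standard formulation, kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance; the function name and the toy labels are assumptions for illustration only.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item list")
    n = len(labels_a)

    # Observed agreement: fraction of items given the same label by both.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: for each category, the product of the two
    # annotators' individual rates of using that category.
    count_a = Counter(labels_a)
    count_b = Counter(labels_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)

    if expected == 1.0:
        # Both annotators used a single identical label throughout;
        # returning 1.0 here is a convention chosen for this sketch.
        return 1.0
    return (observed - expected) / (1 - expected)


# Toy annotations, invented for the example.
annotator_1 = ["Spam", "Spam", "Not-Spam", "Not-Spam"]
annotator_2 = ["Spam", "Not-Spam", "Not-Spam", "Not-Spam"]
kappa = cohen_kappa(annotator_1, annotator_2)
```

Here the annotators agree on three of four items, but half of that agreement would be expected by chance, so the Kappa score is noticeably lower than the raw agreement rate.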

Note that having a high IAA score doesn’t necessarily mean the annotations are correct; it simply means the annotators are all interpreting your instructions in the same way. Your task may still need to be revised even if your IAA scores are high. This will be discussed further in Chapter 9.

Once you have your corpus annotated by at least two people (more is preferable, but not always practical), it’s time to create the gold standard corpus. The gold standard is the final version of your annotated data. It uses the most up-to-date specification that you created during the annotation process, and it has everything tagged correctly according to the most recent guidelines. This is the corpus that you will use for machine learning, and it is created through the process of adjudication. At this point in the process, you (or someone equally familiar with all the tasks) will compare the annotations and determine which tags in the annotations are correct and should be included in the gold standard.

Train and Test the Algorithms over the Corpus

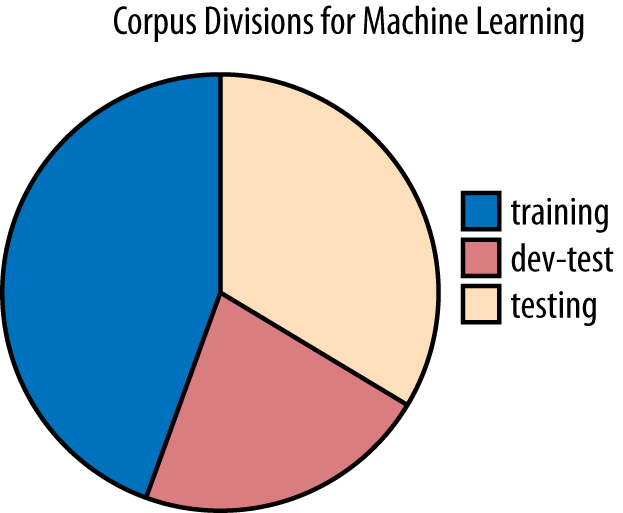

Now that you have adjudicated your corpus, you can use your newly created gold standard for machine learning. The most common way to do this is to divide your corpus into two parts: the development corpus and the test corpus. The development corpus is then further divided into two parts: the training set and the development-test set. Figure 1-12 shows a standard breakdown of a corpus, though different distributions might be used for different tasks. The files are usually assigned to the different sets at random.
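The two-stage division described here can be sketched in a few lines. The proportions used below (90% of the files for development, and 80% of those for training) are assumptions chosen for the example rather than the breakdown shown in the figure, and `split_corpus` is a hypothetical helper name.

```python
import random


def split_corpus(files, dev_fraction=0.9, train_fraction=0.8, seed=0):
    """Randomly split files into training, dev-test, and held-out test sets.

    dev_fraction:   share of all files that go to the development corpus
    train_fraction: share of the development corpus used for training
    """
    shuffled = list(files)
    # A fixed seed makes the random assignment reproducible.
    random.Random(seed).shuffle(shuffled)

    # First cut: development corpus versus held-out test corpus.
    n_dev = round(len(shuffled) * dev_fraction)
    development, test = shuffled[:n_dev], shuffled[n_dev:]

    # Second cut: training set versus development-test set.
    n_train = round(len(development) * train_fraction)
    return {
        "train": development[:n_train],
        "dev_test": development[n_train:],
        "test": test,
    }


# Hypothetical file names, used only for the example.
files = [f"doc_{i:03d}.xml" for i in range(100)]
sets = split_corpus(files)
```

Splitting at the level of whole files, rather than individual tags, keeps every annotation from one document in the same set.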

The training set is used to train the algorithm that you will use for your task. The development-test (dev-test) set is used for error analysis. Once the algorithm is trained, it is run on the dev-test set and a list of errors can be generated to find where the algorithm is failing to correctly label the corpus. Once sources of error are found, the algorithm can be adjusted and retrained, then tested against the dev-test set again. This procedure can be repeated until satisfactory results are obtained.

Once the training portion is completed, the algorithm is run against the held-out test corpus, which until this point has not been involved in training or dev-testing. By holding out the data, we can show how well the algorithm will perform on new data, which gives an expectation of how it would perform on data that someone else creates as well. Figure 1-13 shows the “TTER” portion of the MATTER cycle, with the different corpus divisions and steps.

Evaluate the Results

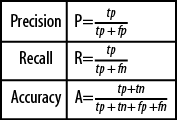

The most common method for evaluating the performance of your algorithm is to calculate how accurately it labels your dataset. This can be done by measuring the fraction of the results from the dataset that are labeled correctly using a standard technique of “relevance judgment” called the Precision and Recall metric.

Here’s how it works. For each label you are using to identify elements in the data, the dataset is divided into two subsets: one that is labeled “relevant” to the label, and one that is not relevant. Precision is a metric that is computed as the fraction of correct instances among those that the algorithm labeled as being in the relevant subset. Recall is computed as the fraction of correct items among those that actually belong to the relevant subset. The following confusion matrix helps illustrate how this works:

| | Predicted: relevant | Predicted: not relevant |
| --- | --- | --- |
| Gold: relevant | true positive (tp) | false negative (fn) |
| Gold: not relevant | false positive (fp) | true negative (tn) |

Given this matrix, we can define both precision and recall as shown in Figure 1-14, along with a conventional definition of accuracy.

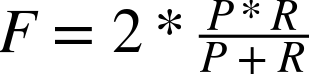
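The same definitions can be sketched in code. The example below counts the four cells of a confusion matrix for a single label, written here as tp, fp, fn, and tn (true/false positives and negatives), and then applies the standard formulas: precision = tp / (tp + fp), recall = tp / (tp + fn), and accuracy = (tp + tn) / (tp + fp + fn + tn). The function names and the toy labels are assumptions for illustration.

```python
def confusion_counts(gold, predicted, label):
    """Count tp, fp, fn, tn for one label, given gold and predicted labels."""
    tp = fp = fn = tn = 0
    for g, p in zip(gold, predicted):
        if p == label and g == label:
            tp += 1  # predicted relevant, and it is relevant
        elif p == label:
            fp += 1  # predicted relevant, but it is not
        elif g == label:
            fn += 1  # relevant, but the algorithm missed it
        else:
            tn += 1  # correctly left out of the relevant subset
    return tp, fp, fn, tn


def precision(tp, fp):
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    return tp / (tp + fn) if (tp + fn) else 0.0


def accuracy(tp, fp, fn, tn):
    total = tp + fp + fn + tn
    return (tp + tn) / total if total else 0.0


# Toy data, invented for the example: S = Spam, N = Not-Spam.
gold = ["S", "S", "S", "N", "N", "N", "N", "N"]
predicted = ["S", "S", "N", "S", "N", "N", "N", "N"]
tp, fp, fn, tn = confusion_counts(gold, predicted, "S")
```

Returning 0.0 when a denominator is zero is a convention adopted for this sketch; some toolkits report such cases as undefined instead.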

The values of P and R are typically combined into a single metric called the F-measure, which is the harmonic mean of the two: F = 2 × P × R / (P + R).

This creates an overall score used for evaluation where precision and recall are weighted equally, though depending on the purpose of your corpus and algorithm, a variation of this measure, such as one that rates precision higher than recall, may be more useful to you. We will give more detail about how these equations are used for evaluation in Chapter 8.
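One common variation is the weighted F-measure, usually written F-beta, where a beta parameter below 1 favors precision and a value above 1 favors recall. The sketch below uses the standard formula, F = (1 + beta²) × P × R / (beta² × P + R), which reduces to the harmonic mean when beta is 1; the function name and the sample values are assumptions for illustration.

```python
def f_measure(precision, recall, beta=1.0):
    """Weighted harmonic mean of precision and recall (F-beta).

    beta = 1 weights the two equally; beta < 1 favors precision,
    beta > 1 favors recall.
    """
    if precision == 0 and recall == 0:
        return 0.0  # convention for this sketch: no correct items at all
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Invented scores: the algorithm finds everything but is often wrong.
p, r = 0.5, 1.0
f1 = f_measure(p, r)                # equal weighting
f_half = f_measure(p, r, beta=0.5)  # precision counts more
```

Because precision is the weaker of the two scores in this example, the precision-weighted variant gives a lower overall score than the balanced one.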

Revise the Model and Algorithms