ENCYCLOPEDIC ENTRY

The Y2K bug was a computer flaw, or bug, that may have caused problems when dealing with dates beyond December 31, 1999

Geography, Human Geography, Social Studies, World History

The Y2K bug was a computer flaw, or bug, that may have caused problems when dealing with dates beyond December 31, 1999. The flaw, faced by computer programmers and users all over the world on January 1, 2000, is also known as the "millennium bug." (The letter K, which stands for kilo (a unit of 1000), is commonly used to represent the number 1,000. So, Y2K stands for Year 2000.) Many skeptics believe it was barely a problem at all.

When complicated computer programs were being written during the 1960s through the 1980s, computer engineers used a two-digit code for the year. The "19" was left out. Instead of a date reading 1970, it read 70. Engineers shortened the date because data storage in computers was costly and took up a lot of space.

As the year 2000 approached, computer programmers realized that computers might not interpret 00 as 2000, but as 1900. Activities that were programmed on a daily or yearly basis would be damaged or flawed. As December 31, 1999, turned into January 1, 2000, computers might interpret December 31, 1999, turning into January 1, 1900.

Banks, which calculate interest rates on a daily basis, faced real problems. Interest rates are the amount of money a lender, such as a bank, charges a customer, such as an individual or business, for a loan. Instead of the rate of interest for one day, the computer would calculate a rate of interest for minus almost 100 years!
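The interest example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `expand_year_legacy`, `days_of_interest`, and `simple_interest` are hypothetical names, and the simple daily interest formula is a stand-in, not how any particular bank computed interest.

```python
from datetime import date

def expand_year_legacy(yy):
    """Assumed legacy rule: every two-digit year belongs to the 1900s."""
    return 1900 + yy

def days_of_interest(start, end):
    """Days between two (yy, month, day) tuples after legacy expansion."""
    start_date = date(expand_year_legacy(start[0]), start[1], start[2])
    end_date = date(expand_year_legacy(end[0]), end[1], end[2])
    return (end_date - start_date).days

def simple_interest(principal, annual_rate, days):
    """Hypothetical simple-interest formula on a 365-day basis."""
    return principal * annual_rate * days / 365

# One day should pass between December 31, '99 and January 1, '00,
# but the legacy rule reads '00 as 1900, so the span comes out negative.
days = days_of_interest((99, 12, 31), (0, 1, 1))
interest = simple_interest(1000.0, 0.05, days)
print(days, interest)
```

With these assumptions the day count is a large negative number (almost 100 years' worth), so the computed interest is negative as well.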

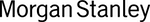

Centers of technology, such as power plants, were also threatened by the Y2K bug. Power plants depend on routine computer maintenance for safety checks, such as water pressure or radiation levels. Not having the correct date would throw off these calculations and possibly put nearby residents at risk.

Transportation also depends on the correct time and date. Airlines in particular were put at risk, as computers with records of all scheduled flights would be threatened; after all, there were very few airline flights in 1900.

Y2K was both a software and hardware problem. Software refers to the electronic programs used to tell the computer what to do. Hardware is the machinery of the computer itself. Software and hardware companies raced to fix the bug and provided "Y2K compliant" programs to help. The simplest solution was the best: The date was simply expanded to a four-digit number. Governments, especially in the United States and the United Kingdom, worked to address the problem.

In the end, there were very few problems. A nuclear energy facility in Ishikawa, Japan, had some of its radiation equipment fail, but backup facilities ensured there was no threat to the public. The U.S. detected missile launches in Russia and attributed that to the Y2K bug. But the missile launches were planned ahead of time as part of Russia's conflict in its republic of Chechnya. There was no computer malfunction.

Countries such as Italy, Russia, and South Korea had done little to prepare for Y2K. They had no more technological problems than those countries, like the U.S., that spent millions of dollars to combat the problem.

Due to the lack of results, many people dismissed the Y2K bug as a hoax or an end-of-the-world cult.

Better Safe Than Sorry

Australia invested millions of dollars in preparing for the Y2K bug. Russia invested nearly none. Australia recalled almost its entire embassy staff from Russia prior to January 1, 2000, over fears of what might happen if communications or transportation networks broke down. Nothing happened.

Last updated: October 19, 2023

How-To Geek

What Was the Y2K Bug, and Why Did It Terrify the World?

Remember Y2K? This millennium bug was supposed to cause catastrophic problems on January 1, 2000. So, what really happened?

Billions of dollars were spent addressing the Y2K bug. Government, military, and corporate systems were all at risk, yet we made it through, more or less, unscathed. So, was the threat even real?

In the 1950s and '60s, representing years with two digits became the norm. One reason for this was to save space. The earliest computers had small storage capacities, and only a fraction of the RAM of modern machines. Programs had to be as compact and efficient as possible. Programs were read from punched cards, which had an obvious finite width (typically, 80 columns). You couldn’t type past the end of the line on a punched card.

Wherever space could be saved, it was. An easy---and, therefore, common---trick was to store year values as two digits. For example, someone would punch in 66 instead of 1966. Because the software treated all dates as occurring in the 20th century, it was understood that 66 meant 1966.

Eventually, hardware capabilities improved. There were faster processors, more RAM, and computer terminals replaced punched cards and tapes. Magnetic media, such as tapes and hard drives, were used to store data and programs. However, by this time there was a large body of existing data.

Computer technology was moving on, but the functions of the departments that used these systems remained the same. Even when software was renewed or replaced, the data format remained unchanged. Software continued to use and expect two-digit years. As more data accumulated, the problem was compounded. The body of data was huge in some cases.

Making the data format into a sacred cow was another reason. All new software had to pander to the data, which was never converted to use four-digit years.

Storage and memory limitations arise in contemporary systems, too. For example, embedded systems, such as firmware in routers and firewalls, are obviously constrained by space limitations.

Programmable logic controllers (PLCs), automated machinery, robotic production lines, and industrial control systems were all programmed to use a data representation that was as compact as possible.

Trimming four digits down to two is quite a space saver---it's a quick way to cut your storage requirement in half. Plus, the more dates you have to deal with, the bigger the benefit.

If you only use two digits for year values, you can’t differentiate between dates in different centuries. The software was written to treat all dates as though they were in the 20th century. This gives false results when you hit the next century. The year 2000 would be stored as 00. Therefore, the program would interpret it as 1900, 2015 would be treated as 1915, and so on.
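As a rough sketch of that behavior, the following Python function mimics software that assumes every stored year belongs to the 20th century. The name `interpret_year_legacy` is hypothetical and only illustrates the rule described above.

```python
def interpret_year_legacy(yy):
    """Mimic software that treats every two-digit year as 19yy."""
    if not 0 <= yy <= 99:
        raise ValueError("expected a two-digit year between 0 and 99")
    return 1900 + yy

# 66 and 99 come out as intended; 00 and 15 land a century early.
for yy in (66, 99, 0, 15):
    print(f"{yy:02d} -> {interpret_year_legacy(yy)}")
```

The stored value itself is not wrong. The information about the century was simply never recorded, so the program has nothing to go on except its built-in assumption.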

At the stroke of midnight on Dec. 31, 1999, every computer---and every device with a microprocessor and embedded software---that stored and processed dates as two digits would face this problem. Perhaps the software would accept the wrong date and carry on, producing garbage output. Or, perhaps it would throw an error and carry on---or, completely choke and crash.

This didn’t just apply to mainframes, minicomputers, networks, and desktops. Microprocessors were running in aircraft, factories, power stations, missile control systems, and communication satellites. Practically everything that was automated, electronic, or configurable had some code in it. The scale of the issue was monumental.

What would happen if all these systems flicked from 1999 one second to 1900 the next?

Typically, some quarters predicted the end of days and the fall of society. In scenes that will resonate with many in the current pandemic, some took to stockpiling essential supplies. Others called the whole thing a hoax, but, undeniably, it was big news. It became known as the "millennium," "Year 2000," and "Y2K" bug.

There were other, secondary, concerns. The year 2000 was a leap year, and many computers---even leap-year savvy systems---didn't take this into account. If a year is divisible by four, it's a leap year; if it's divisible by 100, it isn't.

According to another (not so widely known) rule, if a year is divisible by 400, it's a leap year. Much of the software that had been written hadn't applied the latter rule. Therefore, it wouldn't recognize the year 2000 as a leap year. As a result, how it would perform on Feb. 29, 2000, was unpredictable.
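The difference between the two rule sets is easy to see in code. Here is a small Python sketch; the function names are made up for illustration, with `is_leap_incomplete` standing in for software that stopped at the divisible-by-100 rule.

```python
def is_leap_incomplete(year):
    """Applies only the first two rules: divisible by 4, but not by 100."""
    return year % 4 == 0 and year % 100 != 0

def is_leap_gregorian(year):
    """Applies all three rules, including the divisible-by-400 exception."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)

for year in (1900, 1996, 2000):
    print(year, is_leap_incomplete(year), is_leap_gregorian(year))
```

The two functions agree on 1900 (not a leap year) and 1996 (a leap year), and disagree only on 2000, which is why the gap could go unnoticed for so long.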

In President Bill Clinton’s 1999 State of the Union, he said:

"We need every state and local government, every business, large and small, to work with us to make sure that [the] Y2K computer bug will be remembered as the last headache of the 20th century, not the first crisis of the 21st century."

The previous October, Clinton had signed the Year 2000 Information and Readiness Disclosure Act.

Long before 1999, governments and companies worldwide had been working hard to find fixes and implement work-arounds for Y2K.

At first, it seemed the simplest fix was to expand the date or year field to hold two more digits, add 1900 to each year value, and ta-da! You then had four-digit years. Your old data would be preserved correctly, and new data would slot in nicely.

Sadly, in many cases that solution wasn’t possible due to cost, perceived data risk, and the sheer size of the task. Where possible, it was the best thing to do. Your systems would be date-safe right up to 9999.

Of course, this just corrected the data. Software also had to be converted to handle, calculate, store, and display four-digit years. Some creative solutions appeared that removed the need to increase the storage for years. Month values can’t be higher than 12, but two digits can hold values up to 99. So, you could use the month value as a flag.

You could adopt a scheme like the following:

- For a month between 1 and 12, add 1900 to the year value.

- For a month between 41 and 52, add 2000 to the year value, and then subtract 40 from the month.

- For a month between 21 and 32, add 1800 to the year value, and then subtract 20 from the month.

You had to modify the programs to encode and decode the slightly obfuscated dates, of course. The logic in the data verification routines had to be adjusted, as well, to accept crazy values (like 44 for a month). Other schemes used variations of this approach. Encoding the dates as 14-bit, binary numbers and storing the integer representations in the date fields was a similar approach at the bit-level.
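The month-as-flag scheme listed above might be encoded and decoded along these lines. This is a sketch in Python for readability, not period code; `decode_flagged_date` and `encode_flagged_date` are hypothetical names, and the offsets are taken directly from the three rules in the list.

```python
# Offset added to the month field for each century, per the scheme above.
MONTH_OFFSETS = {1900: 0, 1800: 20, 2000: 40}

def decode_flagged_date(month_field, yy):
    """Return (four-digit year, real month) from a flagged month and two-digit year."""
    for century, offset in MONTH_OFFSETS.items():
        if 1 + offset <= month_field <= 12 + offset:
            return century + yy, month_field - offset
    raise ValueError(f"month field {month_field} matches no known century flag")

def encode_flagged_date(year, month):
    """Return (flagged month, two-digit year) for a four-digit year and real month."""
    century = (year // 100) * 100
    if century not in MONTH_OFFSETS or not 1 <= month <= 12:
        raise ValueError("date is outside the range this scheme can represent")
    return month + MONTH_OFFSETS[century], year % 100

print(decode_flagged_date(44, 5))    # month 44, year 05
print(encode_flagged_date(1999, 12))
```

A month field of 44 with a year of 05 decodes to April 2005, which shows why the validation routines had to start accepting month values well above 12.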

Another system that repurposed the six digits used to store dates dispensed with months entirely. Instead of storing the month, day, and year as two digits each, they swapped to a DDDCYY format:

- DDD: The day of the year (1 to 365, or 366 for leap years).

- C: A flag representing the century.

- YY: The year.
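A day-of-year layout like this could be handled as follows. The meaning of the century flag is not spelled out above, so this Python sketch assumes 0 means the 1900s and 1 means the 2000s; that mapping and the function names are purely illustrative.

```python
from datetime import date, timedelta

# Assumed meaning of the C flag (hypothetical): 0 = 1900s, 1 = 2000s.
CENTURY_FLAGS = {0: 1900, 1: 2000}

def encode_day_century_year(d):
    """Pack a date into six digits: day of year, century flag, two-digit year."""
    for flag, base in CENTURY_FLAGS.items():
        if base <= d.year < base + 100:
            day_of_year = d.timetuple().tm_yday
            return f"{day_of_year:03d}{flag}{d.year % 100:02d}"
    raise ValueError("year is outside the centuries this sketch supports")

def decode_day_century_year(field):
    """Unpack a six-digit field back into a date (no range checks on the day)."""
    day_of_year = int(field[:3])
    year = CENTURY_FLAGS[int(field[3])] + int(field[4:])
    return date(year, 1, 1) + timedelta(days=day_of_year - 1)

packed = encode_day_century_year(date(2000, 2, 29))
print(packed, decode_day_century_year(packed))
```

The field stays six digits wide, so record layouts do not change, but every program that reads or writes the field has to be taught the new layout.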

Work-arounds abounded, too. One method was to pick a year as a pivot year. If all your existing data was newer than 1921, you could use 1920 as the pivot year. Any dates between 00 and 20 were taken to mean 2000 to 2020. Anything from 21 to 99 meant 1921 to 1999.

These were short-term fixes, of course. They bought you a couple of decades to implement a real fix or migrate to a newer system.
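The pivot-year work-around described above comes down to a single comparison. A minimal Python sketch, using the pivot of 20 from the example and a hypothetical function name:

```python
PIVOT = 20  # two-digit years up to and including this value are read as 20yy

def expand_with_pivot(yy, pivot=PIVOT):
    """Windowing: 00..pivot maps to 2000s, pivot+1..99 maps to 1900s."""
    if not 0 <= yy <= 99:
        raise ValueError("expected a two-digit year between 0 and 99")
    return 2000 + yy if yy <= pivot else 1900 + yy

for yy in (0, 20, 21, 99):
    print(f"{yy:02d} -> {expand_with_pivot(yy)}")
```

The stored data never changes, only its interpretation. The window still spans just 100 years, so once the calendar passes the pivot, a 21 entered for the year 2021 would be read as 1921.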

Revisit working systems to update old fixes that are still running? Yeah, right! Unfortunately, society doesn't do that much---just look at all the COBOL applications that are still widely in use.

Fixing in-house systems was one thing. Fixing code, and then distributing patches to all of the customer devices out in the field was another, entirely. And what about software development tools, like software libraries? Had they jeopardized your product? Did you use development partners or suppliers for some of the code in your product? Was their code safe and Y2K compliant? Who was responsible if a customer or client had an issue?

Businesses found themselves in the middle of a paperwork storm. Companies were falling over themselves requesting legally-binding statements of compliance from software suppliers and development partners. They wanted to see your overarching Y2K Preparedness Plan, and your system-specific Y2K Code Review and Remediation reports.

They also wanted a statement verifying your code was Y2K safe, and that, in the event something bad happened on or after Jan. 1, 2000, you’d accept responsibility and they’d be absolved.

In 1999, I was working as the Development Manager of a U.K.-based software house. We made products that interfaced with business telephone systems. Our products provided the automatic call-handling professional call centers rely on daily. Our customers were major players in this field, including BT, Nortel, and Avaya. They were reselling our rebadged products to untold numbers of their customers around the globe.

On the backs of these giants, our software was running in 97 different countries. Due to different time zones, the software was also going to go through midnight on New Year's Eve, 1999, over 30 times!

Needless to say, these market leaders were feeling somewhat exposed. They wanted hard evidence that our code was compliant. They also wanted to know that the methodology of our code reviews and test suites was sound, and that the test results were repeatable. We went through the mangle, but came through it with a clean bill of health. Of course, dealing with all of this took time and money. Even though our code was compliant, we had to withstand the financial hit of proving it.

Still, we got off lighter than most. The total global cost of preparing for Y2K was estimated to be between $300 billion and $600 billion by Gartner, and $825 billion by Capgemini. The U.S. alone spent over $100 billion. It's also been calculated that thousands of man-years were devoted to addressing the Y2K bug.

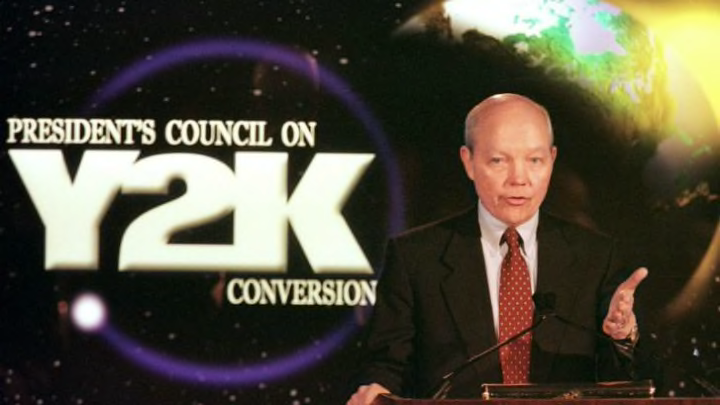

There’s nothing like putting your money where your mouth is. On New Year’s Eve, 1999, John Koskinen, chairman of the President's Council on Year 2000 Conversion, boarded a flight that would still be in the air at midnight. Koskinen wanted to demonstrate to the public his faith in the vastly expensive, multiyear remediation it had taken to get the U.S. millennium-ready. He landed safely.

It’s easy for non-techies to look back and think the millennium bug was overblown, overhyped, and just a way for people to make money. Nothing happened, right? So, what was the fuss about?

Imagine there’s a dam in the mountains, holding back a lake. Below it is a village. A shepherd announces to the village he’s seen cracks in the dam, and it won’t last more than a year. A plan is drawn up and work begins to stabilize the dam. Finally, the construction work is finished, and the predicted failure date rolls past without incident.

Some villagers might start muttering they knew there was nothing to worry about, and look, nothing's happened. It’s as if they have a blind spot for the time where the threat was identified, addressed, and eliminated.

The Y2K equivalent of the shepherd was Peter de Jager, the man credited with bringing the issue into the public consciousness in a 1993 article in Computerworld magazine. He continued to campaign until it was taken seriously.

As the new millennium dawned, de Jager was also en route on a flight from Chicago to London. And also, just like Koskinen’s, de Jager's flight arrived safely and without incident.

Despite the herculean efforts to prevent Y2K from affecting computer systems, there were cases that slipped through the net. The situation in which the world would have found itself without a net would've been unthinkable.

Planes didn’t fall from the sky and nuclear missiles didn’t self-launch, despite predictions from doom-mongers. Although personnel at a U.S. tracking station did get a slight frisson when they observed the launch of three missiles from Russia.

This, however, was a human-ordered launch of three SCUD missiles as the Russian-Chechnyan dispute continued to escalate. It did raise eyebrows and heart rates, though.

Here are some other incidents that occurred:

- Two nuclear power plants in Japan developed faults that were quickly addressed. The faults were described as minor and nonthreatening.

- The age of the first baby born in the new millennium in Denmark was registered as 100.

- Bus tickets in Australia were printed with the wrong date and rejected by ticket scanning hardware.

- Egypt’s national newswire service failed, but was reinstated quickly.

- U.S. spy satellites were knocked off-air for three days due to a faulty patch to correct the Y2K bug.

- A man returning a copy of The General's Daughter to a video store in New York was presented with a bill for $91,250 for bringing the tape back 100 years late.

- Several months into the 2000s, a health official in one region of England spotted a statistical anomaly in the number of children born with Down’s Syndrome. The ages of 154 mothers had been incorrectly calculated in January, skewing test results. The ages of these women put them in a high-risk group, but it wasn't detected. If the risks had been correctly identified, the mothers would have been offered an amniocentesis test. Four children were born with Down’s Syndrome and two pregnancies were terminated.

Remember those pivot years we mentioned? They were the work-around that bought people and companies a few decades to put in a real fix for Y2K. There are some systems that are still relying on this temporary fix and are still in service. We've already seen some in-service failures.

At the beginning of this year, parking meters in New York stopped accepting credit card payments. This was attributed to the fact that they hit the upper bounds of their pivot year. All 14,000 parking meters had to be individually visited and updated.

In other words, the big time bomb spawned a lot of little time bombs.

20 Years Later, the Y2K Bug Seems Like a Joke—Because Those Behind the Scenes Took It Seriously

In the final hours of Dec. 31, 1999, John Koskinen boarded an airplane bound for New York City. He was accompanied by a handful of reporters but few other passengers, among them a tuxedo-clad reveler who was troubled to learn that, going by the Greenwich Mean Time clock used by airlines, he too would enter the 21st century in the air.

Koskinen, however, had timed his flight that way on purpose. He was President Bill Clinton’s Y2K “czar,” and he flew that night to prove to a jittery public — and scrutinizing press — that after an extensive, multi-year effort, the country was ready for the new millennium.

The term Y2K had become shorthand for a problem stemming from the clash of the upcoming Year 2000 and the two-digit year format utilized by early coders to minimize use of computer memory, then an expensive commodity. If computers interpreted the “00” in 2000 as 1900, this could mean headaches ranging from wildly erroneous mortgage calculations to, some speculated, large-scale blackouts and infrastructure damage.

It was an issue that everyone was talking about 20 years ago, but few truly understood. “The vast majority of people have absolutely no clue how computers work. So when someone comes along and says look we have a problem…[involving] a two-digit year rather than a four-digit year, their eyes start to glaze over,” says Peter de Jager, host of the podcast “Y2K: An Autobiography.”

Yet it was hardly a new concern — technology professionals had been discussing it for years, long before Y2K entered the popular vernacular.

President Clinton had exhorted the government in mid-1998 to “put our own house in order,” and large businesses, spurred by their own testing, responded in kind, racking up an estimated expenditure of $100 billion in the United States alone. Their preparations encompassed extensive coordination on a national and local level, as well as on a global scale, with other digitally reliant nations examining their own systems.

It was as years of behind-the-scenes work culminated that public awareness peaked. Amid the uncertainty, some Americans stocked up on food, water and guns in anticipation of a computer-induced apocalypse. Ominous news reports warned of possible chaos if critical systems failed, but, behind the scenes, those tasked with avoiding the problem were — correctly — confident the new year’s beginning would not bring disaster.

“The Y2K crisis didn’t happen precisely because people started preparing for it over a decade in advance. And the general public who was busy stocking up on supplies and stuff just didn’t have a sense that the programmers were on the job,” says Paul Saffo, a futurist and adjunct professor at Stanford University.

But even among corporations that were sure in their preparations, there was sufficient doubt to hold off on declaring victory prematurely. The former IT director of a grocery chain recalls executives’ reticence to publicize their efforts for fear of embarrassing headlines about nationwide cash register outages. As Saffo notes, “better to be an anonymous success than a public failure.”

After the collective sigh of relief in the first few days of January 2000, however, Y2K morphed into a punch line as relief gave way to derision, which is so often the case when warnings appear unnecessary after they are heeded. It was called a big hoax; the effort to fix it a waste of time.

But what if no one had taken steps to address the matter? Isolated incidents illustrate the potential for adverse consequences of varying degrees of severity, ranging from a comically absurd century’s worth of late fees at a video rental store to a malfunction at a nuclear plant in Tennessee. “We had a problem. For the most part, we fixed it. The notion that nothing happened is somewhat ludicrous,” says de Jager, who was criticized for delivering dire early warnings.

“Industries and companies don’t spend $100 billion dollars or devote these personnel resources to a problem they think is not serious,” Koskinen says, looking back two decades later. “…[T]he people who knew best were the ones who were working the hardest and spending the most.”

The innumerable programmers who devoted months and years to implementing fixes received scant recognition. (One programmer recalls the reward for a five-year project at his company: lunch and a pen.) It was a tedious, unglamorous effort, hardly the stuff of heroic narratives — nor conducive to an outpouring of public gratitude, even though some of the fixes put in place in 1999 are still used today to keep the world’s computer systems running smoothly.

“There was no incentive for everybody to say, ‘we should put up a monument to the anonymous COBOL [common business language] programmer who changed two lines of code in the software at your bank.’ Because this was solved by many people in small ways,” says Saffo.

The inherent conundrum of the Y2K debate is that those on both ends of the spectrum — from naysayers to doomsayers — can claim that the outcome proved their predictions correct.

Koskinen and others in the know felt a high degree of confidence 20 years ago, but only because they were aware of the steps that had been taken — an awareness that, decades later, can still lend a bit more gravity to the idea of Y2K. “If nobody had done anything,” he says, “I wouldn’t have taken the flight.”

How the Year 2000 Problem Worked

You will be hearing about the "Year 2000" problem constantly in the news this year. And you will hear a lot of conflicting information in the process. There is also a good bit of "end of the world" rhetoric floating around on the Internet. What should you believe?

In this edition of How Stuff Works we will discuss the Year 2000 problem (also known as the Y2K problem) so that you understand exactly what is happening and what is being done about it. You can also explore a variety of links. From this information you can draw your own informed conclusions.

What Is the Y2K Problem?

The cause of the Y2K problem is pretty simple. Until recently, computer programmers have been in the habit of using two-digit placeholders for the year portion of the date in their software. For example, the expiration date for a typical insurance policy or credit card is stored in a computer file in MM/DD/YY format (e.g., 08/31/99). Programmers have done this for a variety of reasons, including:

- That's how everyone does it in their normal lives. When you write a check by hand and you use the "slash" format for the date, you write it like that.

- It takes less space to store 2 digits instead of 4 (not a big deal now because hard disks are so cheap, but it was once a big deal on older machines).

- Standards agencies did not recommend a 4-digit date format until recently.

- No one expected a lot of this software to have such a long lifetime. People writing software in 1970 had no reason to believe the software would still be in use 30 years later.

The 2-digit year format creates a problem for most programs when "00" is entered for the year. The software does not know whether to interpret "00" as "1900" or "2000". Most programs therefore default to 1900. That is, the code that most programmers wrote either prepends "19" to the front of the two-digit date, or it makes no assumption about the century and therefore, by default, it is "19". This wouldn't be a problem except that programs perform lots of calculations on dates. For example, to calculate how old you are, a program will take today's date and subtract your birthdate from it. That subtraction works fine on two-digit year dates until today's date and your birthdate are in different centuries. Then the calculation no longer works. For example, if the program thinks that today's date is 1/1/00 and your birthday is 1/1/65, then it may calculate that you are -65 years old rather than 35 years old. As a result, date calculations give erroneous output and software crashes or produces the wrong results.
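The age example can be reproduced directly. This is a minimal sketch in Python with made-up function names; it ignores months and days and only compares years, as the example above does.

```python
def age_from_two_digit_years(today_yy, birth_yy):
    """Subtract two-digit years directly, as much legacy code did."""
    return today_yy - birth_yy

def age_from_four_digit_years(today_year, birth_year):
    """The same subtraction with the century included."""
    return today_year - birth_year

print(age_from_two_digit_years(99, 65))       # 34: fine within one century
print(age_from_two_digit_years(0, 65))        # -65: breaks across the boundary
print(age_from_four_digit_years(2000, 1965))  # 35
```

The subtraction is identical in both functions. Only the width of the year changes the answer.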

The important thing to recognize is that that's it. That is the whole Year 2000 problem. Many programmers used a 2-digit format for the year in their programs, and as a result their date calculations won't produce the right answers on 1/1/2000. There is nothing more to it than that.

The solution, obviously, is to fix the programs so that they work properly. There are a couple of standard solutions:

- Recode the software so that it understands that years like 00, 01, 02, etc. really mean 2000, 2001, 2002, etc.

- "Truly fix the problem" by using 4-digit placeholders for years and recoding all the software to deal with 4-digit dates. [Interesting thought question - why use 4 digits for the year? Why not use 5, or even 6? Because most people assume that no one will be using this software 8,000 years from now, and that seems like a reasonable assumption. Now you can see how we got ourselves into the Y2K problem...]

Either of these fixes is easy to do at the conceptual level - you go into the code, find every date calculation and change it to handle things properly. It's just that there are millions of places in software that have to be fixed, and each fix has to be done by hand and then tested. For example, an insurance company might have 20 or 30 million lines of code that perform its insurance calculations. Inside the code there might be 100,000 or 200,000 date calculations. Depending on how the code was written, it may be that programmers have to go in by hand and modify each point in the program that uses a date. Then they have to test each change. The testing is the hard part in most cases - it can take a lot of time.

If you figure it takes one day to make and test each change, and there are 100,000 changes to make, and a person works 200 days a year, then that means it will take 500 people a year to make all the changes. If you also figure that most companies don't have 500 idle programmers sitting around for a year to do it and they have to go hire those people, you can see why this can become a pretty expensive problem. If you figure that a programmer costs something like $150,000 per year (once you include everything like the programmer's salary, benefits, office space, equipment, management, training, etc.), you can see that it can cost a company tens of millions of dollars to fix all of the date calculations in a large program.
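The arithmetic behind that estimate can be written out explicitly. The figures below are the illustrative ones from the paragraph above, not measured data, and the variable names are just labels for this sketch.

```python
# Illustrative figures from the estimate above.
changes_needed = 100_000
days_per_change = 1
working_days_per_year = 200
cost_per_programmer_year = 150_000  # dollars, fully loaded

# Total effort in person-years, then total cost in dollars.
person_years = changes_needed * days_per_change // working_days_per_year
total_cost = person_years * cost_per_programmer_year

print(f"{person_years} person-years, ${total_cost:,}")
```

That works out to 500 person-years and $75,000,000, which is consistent with the "tens of millions of dollars" figure.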

Although the Y2K problem came and went in January of 2000, we have saved this article as an archived edition of HowStuffWorks because of its historical value. Published at the beginning of 1999 at the height of the Y2K panic in the media, this article is noteworthy for the sentence, "In reality, nothing will happen." In retrospect, that sentence was completely correct, but in January 1999 that was definitely not the picture the mainstream media was painting. HowStuffWorks received quite a bit of flaming email for making this simple prediction.

What will happen on 1/1/2000?

On January 1, 2000, software that has not been fixed will stop working or will produce output that is incorrect. The big question is, "How big an effect will that have on the world as we know it?"

Some people are predicting that the world will end. For example, worldwide power failures, a total breakdown of the transportation infrastructure (meaning food cannot get to stores, etc.), planes falling out of the sky, and so on are the scenarios these people foresee. The prediction is that the fabric of society will collapse, people everywhere will riot and the world will burn to the ground. Of course, the people making these predictions all tend to be: A) militia members, B) survivalists and C) religious zealots. It is important to recognize the source of these predictions.

In reality, nothing will happen. There may be a week or two of inconvenience as unforeseen problems present themselves and are worked around. Otherwise there will be no effect. That is an easy prediction to make because:

- Most companies and government agencies will have their software fixed, or will have work-arounds in place, by the end of 1999. If they don't they will go out of business, and that's a strong incentive to get the job done.

- No matter how dependent we think we are on computers, most everything is run by people, not silicon. Take food for example. The tomatoes and lettuce will keep growing, and the people that pick it will keep picking, and the cannery will still can it, the truck drivers will still drive their trucks and the grocery stores will still sell it. In other words, the world will not stop even if a few computers do.

- Some companies will not have their acts together and will have problems. They will go out of business. That is normal capitalism at work. There will be a little disruption as the winners and losers sort it out, but what else is new?

Another thing to keep in mind is that we experience inconvenience all the time and it has little or no effect on us. For example, when UPS (United Parcel Service) went on strike in 1997, it shut down something like 80% of the package delivery infrastructure in the U.S. The world did not end - everyone used the Post Office and FedEx instead. On 1/3/1999 Chicago and Detroit experienced their worst snowstorms in 30 years. It shut down air travel nationwide, delayed the opening of the Detroit auto show, stranded tens of thousands of people, etc. Somehow we all managed to survive the inconvenience. On 1/1/2000 there will be some companies that have problems. But there will be lots of other companies that don't. It may create inconvenience, but that is all that it will create, and two weeks later we will have sorted it out. It's no different from a big snowstorm or a big strike - we figure out ways around the problems and life goes on.

There are many scare tactics and exaggerations used around the Year 2000 problem. In all of them there is a fairly broad assumption that people cannot do their jobs anymore. The important thing to recognize is that, even if many of the computers in the world were to suddenly shut down on 1/1/2000, the total effect would be minimal because people know what they are doing. Let me show you why:

- Let's say that every ATM in the U.S. stopped working. There are still tellers and you can still talk to a teller at the bank during normal business hours to make deposits and withdrawals.

- Let's say that every computer at UPS were to shut down. UPS is a bunch of people driving around their brown trucks, and they can all read address labels. The packages will still get delivered.

- Let's say that every barcode scanner in the stores stopped working. Cashiers can still type in the prices.

- Let's say that every computer at the FAA were to shut down, and all the automatic pilot computers in airplanes stopped working. Air traffic controllers are people, and pilots can still fly airplanes. We might not be able to land 2 planes every minute at busy airports, but planes will still fly.

- One of the biggest scare tactics used around the Y2K problem is "failure of the power grid". Let's say that there was something that went wrong somewhere. There are thousands of competent people who manage and repair the power grid - these are the same people who put the grid back together after every major hurricane, ice storm, etc. Also important to note is that the power grid is not something magical. Please read How the Power Grid Works and educate yourself. The grid is made up of passive wires and transformers. Electrons will still flow through wires on 1/1/2000.

There is an assumption among doomsdayers that somehow, on 1/1/2000, every computer will fail (which is silly), AND that every human being will somehow "fail" as well. If you think about it, you can see how untrue that is. We all know how to do our jobs, and we all want to live our lives. On 1/1/2000 we will all be the same. We will get in our cars and we will want to go buy something. The people selling the something will still want to sell it so they can make money. That is never going to change.

The Silicon Underground

David L. Farquhar, computer security professional, train hobbyist, and landlord

What was the Y2K problem and what was the solution?

Last Updated on July 8, 2022 by Dave Farquhar

Hang around enough people like me who’ve been in IT for decades and eventually the Y2K problem comes up. But what was the Y2K problem? What was the solution? And was the problem overblown?

I was in an odd position. I argued in 1999 and 2000 that any problems we had would be relatively minor. But I don’t think the efforts to fix Y2K were overblown. I may be in the minority opinion on that but I’ll explain.

What was the Y2K problem?

But first, let’s understand the problem. In the late 1990s, we had lots of computer systems and programs running that nobody expected to still be around in the year 2000. They represented dates as two-digit years to save precious memory. Yes, bytes were precious: on many systems, saving two bytes per date was significant into the 1980s. The problem was no one knew what would happen when the two-digit dates rolled over from 99 to 00. Would the computer think it was 2000? 1900? Some other goofy date?

So we spent lots of time, effort and money in the late 1990s finding these old systems and putting fixes in place. We also spent time trying to convince people it wasn’t going to be the apocalypse. But there were a lot of Chicken Littles running around who amassed hoards of food, water, supplies, batteries, and other things they thought they would need when the disaster hit.

As I recall, I withdrew about $300 from the bank, made sure I had about a week’s supply of extra groceries on hand, and filled my bathtub with water. And I stayed home that night, waiting for phone calls that never happened. I stayed sober and played video games, probably Civilization.

What was the solution?

The solution to the Y2K problem depended on the system and the software. In the event that the software was still being made, you just applied a patch, the same way we apply patches every month for security updates today. The difference in 1999 was that centralized deployment systems were relatively rare, and those early versions of SCCM (it was called SMS back then) were terrible. So we did a lot of running around and installing patches manually.

There were lots of off-the-shelf Y2K “shims” for PCs that fixed various Y2K-related problems. When you didn’t have anything specific to load, you loaded one of those on all of your PCs.

But a lot of places had custom software written on mainframes or minicomputers. If you couldn’t replace the software with off-the-shelf software, you found a programmer who could understand that old software and revised it. They had to hunt through the code and find any variables that used years and make sure they were set to use four digits. They also had to find any code that did any date-related math and revise it to handle four-digit years. I have a relative by marriage who spent several years in the late 1990s doing nothing but rewriting old computer code to handle four-digit dates.

When switching to 4-digit dates wasn’t practical, they changed the logic behind 2-digit dates, such as assuming dates from 00-20 were in the 21st century rather than the 20th. This bought 20 years, but it means some devices that were Y2K compliant weren’t year 2020 compliant. VCRs are one example, but some computers were affected as well.
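
Here is a minimal sketch of that kind of fixed-window logic in Python. The function name `expand_year` and the default pivot of 20 are assumptions taken from the 00-20 example above; real systems chose their own cutoffs.

```python
def expand_year(two_digit_year, pivot=20):
    """Expand a two-digit year using a fixed window.

    Years from 00 through `pivot` are treated as 20xx, everything else
    as 19xx. The pivot of 20 mirrors the example in the text.
    """
    if not 0 <= two_digit_year <= 99:
        raise ValueError("expected a two-digit year")
    century = 2000 if two_digit_year <= pivot else 1900
    return century + two_digit_year


print(expand_year(99))  # 1999
print(expand_year(0))   # 2000
print(expand_year(20))  # 2020
print(expand_year(21))  # 1921: the window has run out
```

The last call shows why a system patched this way stays correct only until the end of its window.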

If you’ve seen the movie Office Space, the main character was supposed to be fixing Y2K code.

Consequences of flawed year logic

There were some systems that didn’t handle the transition properly, and the date would switch to some random date in the past. Which date depended on any number of things. Many of these systems worked fine once you reset the date to January 1, 2000 or later, however.

In some cases, we just had to discard old systems because there wasn’t anything we could do to make them Y2K compliant. We had the same problem then that we do now, with 20-year-old systems lying around that nobody understood, except that some business-critical function depended on them. There were fewer of them than today. A lot of them got replaced as part of Y2K projects just in case.

Was Y2K overblown?

When I read about Y2K today, people seem to think it was overblown. They look at it as a financial disaster, because every company in the world spent large sums of money, and nothing happened.

Here’s a similar situation. About two years ago, my 14-year-old Honda Civic didn’t pass its safety inspection, so I bought a Toyota Camry. But I haven’t been in a car accident since then, so did I waste my money by buying that car?

No and no. Both cases are examples of taking precautions to keep something bad from happening. And then something bad didn’t happen. That means the precautions worked.

There was an added benefit. We replaced a lot of aged technology with new stuff that was faster and nicer. The problem was that too many people slashed their IT budgets right after Y2K and that made the dotcom bust worse than it otherwise would have been.

People forget there was a tremendous amount of pressure to fix it. People literally thought the world was going to end. There were survivalist magazines about it. It was never going to be as bad as the worst-case scenarios people were assuming. So, from that perspective, yes, it was overblown. The important lesson from Y2K was that nobody knew how big the problem would be, but the people who knew how to find and fix it did just that, and did such a good job that nothing bad happened.

The “other” Y2K problem

Of course, one reason Y2K wasn’t as big of a problem as it could have been was because there were (and still are) large numbers of systems that don’t have a Y2K problem. Unix systems never did use two-digit dates by default and couldn’t care less about 1900. Unix systems measure time by offsetting time from January 1, 1970. To Unix systems, there was nothing at all special about January 1, 2000. All you had to do was make sure that any custom software you had running under a Unix system wasn’t doing its own date work.

The problem with Unix systems is their clock is going to wrap around on January 19, 2038, and suddenly think it’s December 13, 1901.
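
The wraparound can be reproduced in Python. This sketch assumes the clock is a signed 32-bit count of seconds since January 1, 1970 UTC, which is the case on the older 32-bit systems discussed here; the helper names are made up for illustration.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1  # largest value a signed 32-bit counter can hold


def wrap_int32(value):
    """Simulate two's-complement wraparound of a signed 32-bit integer."""
    return (value + 2**31) % 2**32 - 2**31


def from_unix_seconds(seconds):
    """Convert seconds since the Unix epoch to a UTC datetime."""
    return EPOCH + timedelta(seconds=seconds)


last_good = from_unix_seconds(INT32_MAX)
after_wrap = from_unix_seconds(wrap_int32(INT32_MAX + 1))

print(last_good)   # 2038-01-19 03:14:07+00:00
print(after_wrap)  # 1901-12-13 20:45:52+00:00
```

One second after the last representable moment in 2038, the counter flips to its most negative value, which lands in December 1901.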

We have a little less than 20 years now to find and patch or replace all of the old 32-bit Unix and Linux systems that are hanging around. I hope people don’t dismiss it as fearmongering. We’ll see.

David Farquhar is a computer security professional, entrepreneur, and author. He started his career as a part-time computer technician in 1994, worked his way up to system administrator by 1997, and has specialized in vulnerability management since 2013. He invests in real estate on the side and his hobbies include O gauge trains, baseball cards, and retro computers and video games. A University of Missouri graduate, he holds CISSP and Security+ certifications. He lives in St. Louis with his family.

The term Year 2000 bug, also known as the millennium bug and abbreviated as Y2K, referred to potential computer problems which might have resulted when dates used in computer systems moved from the year 1999 to the year 2000.

In the early days of electronic computers, memory was expensive so, in order to save space, programmers abbreviated the four-digit year designation and stored only the final two digits. For example, computers recognized “98” as “1998.” How would programs interpret “00” when the date changed to the year 2000? Would “00” be translated as 0000, 1000, 1900, or 2000? (Early in 1999, Computer Chronicles host Stewart Cheifet became aware of the possible problem when he received a credit card from a major gasoline company with an expiration date of 1000.)

Mainframe computers in use in important areas such as banking, utilities, communications, insurance, manufacturing, and government were considered the most vulnerable. The problem was not only with systems running conventional software, but it extended to devices such as medical equipment, temperature-control systems, and elevators which used computer chips.

The fear was that when clocks struck midnight on January 1, 2000, affected computer systems, unsure of the year, would fail to operate and cause massive power outages, transportation systems to shut down, and banks to close. Widespread chaos would ensue.

Research firm Gartner estimated the cost of Y2K remediation to be $300 - $600 billion. Businesses and government organizations created special technology teams to ensure that all hardware and software was Y2K compliant (Y2KC). The goal was to check every system that relied on dates, before midnight December 31, 1999. In some cases, the fix was to replace outdated hardware and/or software. Other cases required time-consuming analysis of program code, replacing or rewriting code as needed, and the testing of hardware reliant on computer chips.

In October 1998, the US government passed the Year 2000 Information and Readiness Disclosure Act. The purpose of the act was to encourage companies to share information about the status of their Year 2000 compliance efforts. It also provided some protection against false compliance statements and limited liability for companies issuing Year 2000 Readiness Disclosures.

By December 1998, in response to growing uncertainty regarding the effect of Y2K on the world economy and physical infrastructure, the United Nations convened an international conference on Y2K for its members to share information and report on remediation efforts.

Donors of Y2K objects expressed how all-consuming their Y2K remediation projects were, literally overtaking every aspect of their lives. The project had no room for error and a fixed deadline that could not be extended. The doom, spread through media outlets, added to the overall fear of major system failures. Monarch Home Video, a commercial film producer, released one of the few Y2K themed products with their 1999 one-hour “family survival guide” video. Actor Leonard Nimoy narrated the show, and in a slow, controlled voice, described the disasters the world was about to face.

There were in fact some minor disruptions, mainly in small businesses, but no major end-of-the-world events or significant issues occurred at 12:00 AM on January 1, 2000. Some hailed the Y2K update efforts as an overall success, yet others remained skeptical and still considered the issue a hoax. In any case, the bug had caused no epidemic of failures.

As people plan for this year’s New Year’s Eve celebration, chances are that most will not be stocking up on canned goods, taking out large amounts of cash from the bank or purchasing a backup generator. But 20 years ago excitement for the start of the year 2000 was mixed with fear that the rollover to 2000 might cause computer systems to fail globally, with potentially apocalyptic consequences. Year dates had been entered as two digits — e.g. “99” — and the rollover to “00” might cause catastrophic failures: Would the lights stay on? Would the banks fail? Would planes fall out of the sky? These questions — the Y2K bug — overshadowed the transition to the new millennium.

But as The Washington Post announced in a Jan. 1 headline: “The Bug Didn’t Bite: Computers Pass Their Date With Destiny.” The millennium bug had been squashed.

To the extent that Y2K is remembered today, it is largely as something of a joke: a massive techno-panic stoked by the media that ultimately amounted to nothing. Yet, avoiding catastrophe was the result of serious hard work. As John Koskinen, the chairman of President Bill Clinton’s Council on Y2K, testified in the early weeks of 2000, “I don’t know of a single person working on Y2K who thinks that they did not confront and avoid a major risk of systemic failure.”

That danger was averted thanks to a group of experts recognizing a problem, bringing it to the attention of those in power and those in power actually listening to experts. Twenty years later, we are able to look at Y2K with derision, not because Y2K was a hoax, but because concerned people took the threat seriously and did something about it — a lesson for addressing myriad problems today.

The origins of the Y2K problem seem almost quaint at a time when people regularly carry hundreds of gigabytes of computing power in their pockets. But in the 1960s, computer memory was limited and expensive. Therefore, programmers chose to represent dates using six characters rather than eight, meaning the date Oct. 22, 1965, was rendered as 102265. This format worked for decades, saving valuable memory and speeding up processors. Insofar as it represented a potential risk, it was a problem for the future. And thus, even though computer scientist Robert Bemer tried to sound the alarm in 1971, it seemed like there was plenty of time to fix the two-digit date problem.

Computers assumed that the first two digits of any year were “19” — and they began running into problems once they started encountering dates that occurred after Dec. 31, 1999. These problems ranged from the seemingly comical (a 104-year-old woman being told to report to kindergarten), to the frustrating (credit cards with expiration dates of “00” were denied as having expired in 1900), to the potentially catastrophic (the risk that essential infrastructure could fail).

While computers had made important inroads into the business and government sectors in the preceding decades, the 1990s saw personal computer use increasing dramatically. The ’90s saw the launches of the first graphical Web browser (Mosaic), Microsoft’s Windows 95, Apple’s first iMac and iconic video games such as Wolfenstein 3D and Tomb Raider. As a crisis involving computers, Y2K hit at the very moment when more and more people were coming to see computers as integral to their daily lives.

By the time Peter de Jager published his attention-getting article “Doomsday 2000,” in 1993 (in Computerworld), the Y2K crisis was no longer some far-off threat — and people in power took the problem seriously. Writing to Clinton in July 1996, Sen. Daniel Patrick Moynihan (D-N.Y.) warned that a study by the Congressional Research Service had confirmed that “each line of computer code needs to be analyzed and either passed on or be rewritten.” Moynihan offered a provocative warning, “the computer has been a blessing; if we don’t act quickly, however, it could become the curse of the age.”

Luckily, in the less than four years between Moynihan’s letter and Dec. 31, 1999, a great deal was done. Members of both parties in Congress worked closely together (even in the midst of an impeachment) to monitor the compliance efforts being made by the government and industry — devoting particular attention to the work being done to ensure that utilities, as well as the financial and health-care sectors, would be ready. Clinton launched his own Council on Y2K, headed by Koskinen, to coordinate efforts in the United States, while the World Bank-backed International Y2K Cooperation Center worked to help other countries prepare for Y2K. In a 1998 speech, Clinton said that “if we act properly, we won’t look back on this as a headache, sort of the last failed challenge of the 20th century. It will be the first challenge of the 21st century successfully met.”

Nevertheless, there was widespread concern that something would go wrong. With barely a year until the rollover, a poll conducted by Time magazine and CNN found that 59 percent of respondents were either “somewhat” or “very concerned” in regards to the “Y2K bug problem.” And those foretelling doom were not only to be found on the societal fringe. In its “100 Day Report” issued on Sept. 22, 1999, the Senate’s Special Committee on the Year 2000 Technology Problem applauded the progress that had been made but couched this praise in lingering concerns.

A legion of programmers and IT professionals squashed the millennium bug by checking and rewriting millions, if not billions, of lines of code. Best practices and solutions were liberally shared between the government and businesses, and carefully constructed contingency plans were put in place just in case the bug was still lurking in some systems. The Y2K problem was solved, and the world woke up on Jan. 1 to a normal, non-apocalyptic day. But instead of registering this outcome as a challenge successfully met, the public experienced the nonevent of Y2K as a joke or even a hoax.

Two flawed understandings drove the idea that Y2K was all just an expensive hoax: that anticipated and much-hyped international failures did not happen, and that nothing went wrong. But actually, Y2K remediation efforts revealed that many other countries were not as computer reliant as the United States, which reduced the chance of failures. Furthermore, thanks to the sharing of information and expertise, other nations were able to benefit quickly from solutions to Y2K that had been devised in the United States.

And while many believed that “nothing happened,” there were actually hundreds of Y2K-related incidents. These included problems at more than a dozen nuclear power plants, delays in millions of dollars of Medicare payments, ATM issues worldwide and problems with the Defense Department’s satellite-based intelligence system. That problems were fixed quickly is largely attributable to the small army of programmers who spent the first hours of the year 2000 monitoring sensitive systems.

Y2K was a technological problem, but it revealed that as societies became heavily reliant on complex computerized systems, technological problems became a matter of concern for everyone. Faced with a potentially catastrophic problem, experts, businesses and the government mobilized the resources necessary to mitigate the risks before they could trigger a disaster. Most estimates suggest that $100 billion was spent in the United States, $8.5 billion of that by the federal government, to squash the millennium bug, and though it is impossible to say that every cent was well spent, that amount still dwarfs the estimated cost of doing nothing.

Twenty years after the successful navigation of the Y2K crisis, few of us worry that a date rollover is going to cause the world’s computer systems to crash. However, we do worry about the pervasiveness of misinformation online, the spread of corporate surveillance, the ways racist and misogynistic biases are reproduced in algorithms, about massive data breaches and about a deluge of other problems that seem to have been exacerbated as computerized devices have become ubiquitous. Y2K revealed the ways we expose ourselves to new risks as our lives and societies become entangled with complex computer systems the inner workings of which we often fail to understand, and that is a problem that is still very much with us today.

But it also showed that addressing these challenges is possible when there is close cooperation among researchers, government and businesses, both nationally and internationally. People rose to the challenge in 2000. That’s not something to laugh at, that’s something to celebrate.

June 21, 1999

What are the main problems with the Y2K computer crisis and how are people trying to solve them?

Josh Hodas, assistant professor of computer science at Harvey Mudd College, gives the following overview:

The "Y2K Problem" includes a whole range of problems that may persist for several years and result from the way some computer software and hardware represent dates--hence the name "Y2K," which stands for "Year 2000" (K is an abbreviation for "kilo," or 1,000). In short, because many computer systems store only the last two digits of the year in a date, you can't really tell in which century that date falls.

Until recently, this ambiguity hasn't mattered in most instances. Computers have manipulated dates relating to recent events, and so they have been able to treat all dates as belonging to the current century. As we get closer to the new millennium, however, many more systems will need to juggle dates from two different centuries, and they will have to be able to distinguish between them if they are to avert myriad failures.

Consider the new credit cards you might be carrying--many of which already have expiration dates in the next century. When you try to buy something, the credit card terminal has to determine whether or not the card has expired. To do so, it runs a program that checks whether the expiration date is greater than the current date. If the card expires in 2003, then obviously the answer should be yes. But if the system uses only two digits to represent the year, it will find that 03 is not greater than 99, and that your card has already expired. This kind of problem--faced already by some major credit cards--can plague any system that depends on date comparisons.
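
A small Python sketch makes the failure concrete. The two function names are hypothetical and the check is reduced to a bare year comparison; a real terminal would also compare months.

```python
def card_valid_two_digit(expiry_yy, current_yy):
    """Flawed check: compares two-digit years directly."""
    return expiry_yy > current_yy


def card_valid_four_digit(expiry_year, current_year):
    """Same comparison, but with the century included."""
    return expiry_year > current_year


# A card expiring in 2003, checked in 1999.
print(card_valid_two_digit(3, 99))        # False: card wrongly rejected
print(card_valid_four_digit(2003, 1999))  # True
```

The comparison itself is identical in both functions; only the missing century digits make the first one give the wrong answer.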

In the situation above, there is at least one person standing by who can intervene when the problem occurs: the card owner or issuer. More disturbing, perhaps, are the "silent killer" versions of the Y2K problem, which can occur with any of the millions of embedded processors used in computers, toasters, cars, power plants and many other devices.

Next, consider a system that is required to run an internal safety test once a year. The system regularly checks whether the difference between the current date and the last test date is greater than 365 days. When a last test date in this century is subtracted from a date in the next century, though, the answer is a negative number less than 365, so the system will not believe it is time to perform the test. And in fact, left as is, the system will not perform another safety check for another 100 years.
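
The same effect can be sketched in Python. The example assumes a system that expands every two-digit year as 19xx before doing date arithmetic; the dates and the names `naive_date` and `safety_check_due` are invented for illustration.

```python
from datetime import date

TEST_INTERVAL_DAYS = 365


def naive_date(yy, month, day):
    """Interpret a two-digit year the pre-Y2K way: always 19xx."""
    return date(1900 + yy, month, day)


def safety_check_due(today, last_test):
    """A check is due once more than 365 days have passed."""
    return (today - last_test).days > TEST_INTERVAL_DAYS


last_test = naive_date(99, 6, 29)   # stored as 99, read as 1999
today_buggy = naive_date(0, 7, 18)  # stored as 00, read as 1900
today_real = date(2000, 7, 18)      # what the calendar actually says

print((today_buggy - last_test).days < 0)        # True: negative interval
print(safety_check_due(today_buggy, last_test))  # False: check never fires
print(safety_check_due(today_real, last_test))   # True: check is overdue
```

Because the computed interval is a large negative number, the buggy system would keep skipping the test until its two-digit year caught up again.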

Why do these systems make such bad assumptions? In most cases, designers just never thought the programs they were writing would be in use for so long--namely into the 21st century. And there were real practical advantages to using shorter, two-digit years in dates at the time they were put in place.

There are many different ways to fix the problem. Ideally we could just rewrite all the offending programs and modify all the existing stored data. But in many cases the program is so old that the original "source code" (the form written by the programmer before it is converted into a digital form understood by the computer) is lost and it is impractical or impossible to modify the digital form.

When the source code does exist, there may be no "compilers" (the programs that convert source code to its digital form) compatible with that version of the code's language anymore. And even if the program could be successfully modified, changing all the stored data would be impractical. Many non-date-dependent programs would also need to be changed because the placement of data in the file would change when space for the additional date digits was added.

In the last case, where code can be changed but stored data cannot, it is sometimes possible to buy some time. The most popular technique is called "windowing:" it takes advantage of the fact that many systems store information only about a relatively brief period, called the window. For example, at my college, which was founded in 1955, we can safely assume that any stored graduation date in the range from 00 to 55 refers to the next century. As a result, no ambiguity about graduation dates will occur for another 55 years, and with a little programming, we can put off the larger problem until then. Of course, for recording students' birth dates, we must use a different window. For the birth dates of faculty members, there is yet a different window and so forth.
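
As a rough sketch, per-field windows might look like this in Python. Only the graduation pivot of 55 comes from the example above; the `student_birth` pivot and all names are assumptions made up for illustration.

```python
# Two-digit years at or below a field's pivot are read as 20xx,
# everything above it as 19xx.
WINDOW_PIVOTS = {
    "graduation": 55,     # from the text: 00 to 55 means the next century
    "student_birth": 20,  # hypothetical value, chosen only for this sketch
}


def expand_field_year(field, yy):
    """Expand a stored two-digit year using the window for its field."""
    pivot = WINDOW_PIVOTS[field]
    return (2000 if yy <= pivot else 1900) + yy


print(expand_field_year("graduation", 3))      # 2003
print(expand_field_year("graduation", 56))     # 1956
print(expand_field_year("student_birth", 81))  # 1981
```

Keeping the pivots in one table makes it explicit that each kind of date needs its own window, and that each window eventually expires.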

Another popular technique involves reusing the space allocated for a two-digit date in a more efficient way. Since the same space is used, other data doesn't move, and other programs that don't access the date fields don't need to be rewritten. It turns out that this is possible because in many older databases, the numbers are stored using a fairly inefficient representation, called Extended Binary Coded Decimal Interchange Code (EBCDIC, pronounced "ehb-sih-dik").
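
To see why the space can be reused, compare the two encodings in Python. This is a sketch under stated assumptions: the two-digit year is stored as two EBCDIC characters (Python's `cp037` codec is one EBCDIC code page), and the replacement is a 16-bit binary integer. The text does not say which denser representation real fixes used.

```python
import struct

# Two-digit year stored as EBCDIC text: one byte per decimal digit.
ebcdic_year = "99".encode("cp037")
print(len(ebcdic_year), ebcdic_year.hex())  # 2 f9f9

# The same two bytes, reused as an unsigned 16-bit big-endian integer,
# can hold any year from 0 to 65535.
binary_year = struct.pack(">H", 1999)
print(len(binary_year), binary_year.hex())  # 2 07cf

# Reading the field back recovers the full four-digit year.
(year,) = struct.unpack(">H", binary_year)
print(year)  # 1999
```

Because the field is still two bytes wide, every other field in the record stays at the same offset, so only programs that read the date itself need to change.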

Although there are many proposed solutions floating around, the real problem is that it is hard to imagine how all the systems that need fixing can be fixed in the necessary time frame. Moreover, in the case of the millions of embedded processors, it is unclear how the fix might be disseminated.

So, should you sell your house and move to a cabin with a 10,000-gallon fuel tank and a bunker full of food? As bad as all this sounds, there are many who feel that the doom-and-gloom predictions are really just variations on millennialist fever. Although there will certainly be bumps in the road, most experts believe that the worst problems will be avoided. Many major industries and government agencies have already run tests: They set the clocks on their computers forward to various dates in the next century to see what would happen. And in most important cases, including banks, nothing did.

A lazy fix 20 years ago means the Y2K bug is taking down computers now

By Chris Stokel-Walker

7 January 2020

Parking meters, cash registers and a professional wrestling video game have fallen foul of a computer glitch related to the Y2K bug.

The Y2020 bug, which has taken many payment and computer systems offline, is a long-lingering side effect of attempts to fix the Y2K, or millennium bug.

Both stem from the way computers store dates. Many older systems express years using two numbers – 98, for instance, for 1998 – in an effort to save memory. The Y2K bug was a fear that computers would treat 00 as 1900, rather than 2000.

Programmers wanting to avoid the Y2K bug had two broad options: entirely rewrite their code, or adopt a quick fix called “windowing”, which would treat all dates from 00 to 20 as from the 2000s, rather than the 1900s. An estimated 80 per cent of computers fixed in 1999 used the quicker, cheaper option.

“Windowing, even during Y2K, was the worst of all possible solutions because it kicked the problem down the road,” says Dylan Mulvin at the London School of Economics.


Coders chose 1920 to 2020 as the standard window because of the significance of the midpoint, 1970. “Many programming languages and systems handle dates and times as seconds from 1970/01/01, also called Unix time,” says Tatsuhiko Miyagawa, an engineer at cloud platform provider Fastly.

Unix is a widely used operating system in a variety of industries, and this “epoch time” is seen as a standard.

The theory was that these windowed systems would be outmoded by the time 2020 arrived, but many are still hanging on and in some cases the issue had been forgotten.

“Fixing bugs in old legacy systems is a nightmare: it’s spaghetti and nobody who wrote it is still around,” says Paul Lomax, who handled the Y2K bug for Vodafone. “Clearly they assumed their systems would be long out of use by 2020. Much as those in the 60s didn’t think their code would still be around in the year 2000.”

Those systems that used the quick fix have now reached the end of that window, and have rolled back to 1920. Utility company bills have reportedly been produced with the erroneous date 1920, while tens of thousands of parking meters in New York City have declined credit card transactions because of the date glitch.


Thousands of cash registers manufactured by Polish firm Novitus have been unable to print receipts due to a glitch in the register’s clock. The company is attempting to fix the machines.

WWE 2K20, a professional wrestling video game, also stopped working at midnight on 1 January 2020. Within 24 hours, the game’s developers, 2K, issued a downloadable fix.

Another piece of software, Splunk, which ironically looks for errors in computer systems, was found to be vulnerable to the Y2020 bug in November. The company rolled out a fix the same week to its users, which include 92 of the Fortune 100, the top 100 companies in the US.

Some hardware and software glitches have been incorrectly attributed to the bug. One healthcare professional claimed Y2020 hit a system developed by McKesson, which produces software for hospitals. A spokesperson for McKesson told New Scientist the firm was unaware of any outage tied to Y2020.

Exactly how long these Y2020 fixes will last is unknown, as companies haven’t disclosed details about them. If the window has simply been pushed back again, we can expect to see the same error crop up.

Another date storage problem also faces us in the year 2038. The issue again stems from Unix’s epoch time: the number of seconds since 1 January 1970 is stored as a signed 32-bit integer, which will run out of capacity at 3.14 am UTC on 19 January 2038.
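The 2038 limit can be checked with a little arithmetic. The sketch below assumes the counter is a signed 32-bit integer counting seconds from 1 January 1970 UTC; the helper `wrap_int32` is a hypothetical stand-in for how such a counter overflows in two's-complement hardware.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1  # largest value a signed 32-bit integer can hold


def wrap_int32(n: int) -> int:
    """Simulate two's-complement wraparound of a signed 32-bit integer."""
    return (n + 2**31) % 2**32 - 2**31


# Last moment representable before the counter overflows.
last_moment = EPOCH + timedelta(seconds=INT32_MAX)
print(last_moment.isoformat())  # 2038-01-19T03:14:07+00:00

# One second later the counter wraps to a large negative number,
# which corresponds to a date in December 1901.
wrapped = wrap_int32(INT32_MAX + 1)
after_wrap = EPOCH + timedelta(seconds=wrapped)
print(after_wrap.isoformat())  # 1901-12-13T20:45:52+00:00
```

Systems that store the count in a 64-bit integer do not hit this limit.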


The Truth About Y2K: What Did and Didn't Happen in the Year 2000


Katrina Ávila Munichiello is an experienced editor, writer, fact-checker, and proofreader with more than fourteen years of experience working with print and online publications.


Investopedia / Hilary Allison

Y2K is the shorthand term for "the year 2000." Y2K was commonly used to refer to a widespread computer programming shortcut that was expected to cause extensive havoc as the year changed from 1999 to 2000. Instead of allowing four digits for the year, many computer programs only allowed two digits (e.g., 99 instead of 1999). As a result, there was immense panic that computers would be unable to operate at the turn of the millennium when the date descended from "99" to "00".

Key Takeaways

- Y2K was commonly used to refer to a widespread computer programming shortcut that was expected to cause extensive havoc as the year changed from 1999 to 2000.

- The change was expected to bring down computer systems and infrastructure, such as those for banking and power plants.

- While there was a widespread outcry about the potential implications of this change, not much happened.

In the years and months leading up to the turn of the millennium, computer experts and financial analysts feared that the switch from the two-digit year '99 to '00 would wreak havoc on computer systems ranging from airline reservations to financial databases to government systems. Millions of dollars were spent in the lead-up to Y2K in IT and software development to create patches and workarounds to squash the bug.

While there were a few minor issues once Jan. 1, 2000, arrived, there were no massive malfunctions. Some people attribute the smooth transition to major efforts undertaken by businesses and government organizations to correct the Y2K bug in advance. Others say that the problem was overstated and wouldn't have caused significant problems regardless.

At the time, in the early days of the internet, there were many plausible reasons for concern about Y2K, or the Millennium bug, as it was also called. For instance, for much of financial history, financial institutions have not generally been considered cutting-edge when it comes to technology.

Knowing most big banks ran on dated computers and technologies, it wasn't irrational for depositors to worry the Y2K issue would seize the banking system up, thereby preventing people from withdrawing money or engaging in important transactions. Extended to a global scale, these worries of an epidemic-like panic had international markets holding their breath heading into the turn of the century.

The research firm Gartner estimated that the global costs to fix the bug would be between $300 billion and $600 billion. Individual companies also offered their estimates of the bug's economic impact on their top-line figures. For example, General Motors stated that it would cost $565 million to fix problems arising from the bug. Citicorp estimated that it would cost $600 million, while MCI stated that it would take $400 million.

In response, the United States government passed the Year 2000 Information and Readiness Disclosure Act to prepare for the event and formed a President's Council that consisted of senior officials from the administration and officials from agencies like the Federal Emergency Management Agency (FEMA). The council monitored efforts made by private companies to prepare their systems for the event.

In actuality, the episode came and went with little fanfare.

Y2K came about largely due to economics. At the dawn of the computer age, the programs being written required data storage that was extremely costly. Since not many anticipated the success of this new technology or the speed with which it would take over, firms were judicious in their budgets. This lack of foresight, especially given that the millennium was just about 40 years away, led to programmers being forced to use a 2-digit code instead of a 4-digit code to designate the year.

Experts feared that the switch from the two-digit year '99 to '00 would wreak havoc on computer systems ranging from airline reservations to financial databases to government systems. For instance, the banking system relied on dated computers and technologies, and it wasn't irrational for depositors to worry about being able to withdraw funds or engage in important transactions. Bankers were worried that interest might be calculated over a span of roughly a hundred years (1900 to 1999) instead of a single day.
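The interest worry can be made concrete with a small sketch. It assumes dates are stored as (two-digit year, month, day) and expanded with a fixed "19" prefix, and it uses simple daily interest; the principal, the rate, and the function names are hypothetical values chosen only for illustration.

```python
from datetime import date


def days_between_yy(start: tuple, end: tuple) -> int:
    """Days between two (yy, month, day) dates, expanding yy as 19yy.

    This mirrors the unfixed two-digit behaviour, not a correct calculation.
    """
    s = date(1900 + start[0], start[1], start[2])
    e = date(1900 + end[0], end[1], end[2])
    return (e - s).days


def simple_interest(principal: float, annual_rate: float, days: int) -> float:
    """Simple interest accrued over a number of days (365-day year assumed)."""
    return principal * annual_rate * days / 365


# Intended period: 31 December 1999 to 1 January 2000, i.e. one day.
correct_days = (date(2000, 1, 1) - date(1999, 12, 31)).days

# Two-digit storage turns the end date into 1 January 1900.
buggy_days = days_between_yy((99, 12, 31), (0, 1, 1))

print(correct_days, simple_interest(1000.0, 0.05, correct_days))
print(buggy_days, simple_interest(1000.0, 0.05, buggy_days))
```

With the two-digit dates the period comes out as a large negative number of days, so the computed interest is negative and far larger in magnitude than one day's accrual.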

The U.S. government passed the Year 2000 Information and Readiness Disclosure Act to prepare for the event. It also formed a President's Council, consisting of senior officials from the administration and officials from agencies like the Federal Emergency Management Agency (FEMA), to monitor the efforts of private companies to prepare their systems. The research firm Gartner estimated that the global costs to avoid Y2K could have been as much as $600 billion.



The Enlightened Mindset

Exploring the World of Knowledge and Understanding

Welcome to the world's first fully AI generated website!

The Y2K Problem: How It Was Solved and Who Were the Unsung Heroes

By Happy Sharer

Introduction

The Y2K problem, also known as the “year 2000 bug”, was a widespread issue that impacted computer systems worldwide in the late 1990s and early 2000s. The issue arose when software developers failed to anticipate the need for four-digit year formats when programming computers. This meant that many computer systems were unable to recognize dates beyond December 31st, 1999, leading to potential errors in data processing.

The impact of the Y2K problem was potentially devastating, with experts warning of economic collapse, power outages, and other issues if the problem was not solved in time. Fortunately, due to the hard work of many dedicated individuals, teams, and organizations, the issue was successfully addressed and resolved, allowing us to enjoy the benefits of modern technology without fear of a global disaster.

Interview with a Key Individual Involved in Solving the Y2K Problem

To gain further insight into the resolution of the Y2K problem, we spoke to Dr. Emma Smith, who was part of the team responsible for addressing the issue. Dr. Smith is an experienced software engineer and has been working in the IT industry for over 20 years.

When asked about her role in solving the Y2K problem, Dr. Smith explained: “My primary responsibility was to develop software programs that would ensure the accuracy of date calculations and prevent any errors from occurring. I worked closely with other members of the team to identify potential issues and develop solutions that could be implemented quickly and efficiently.”

Dr. Smith went on to discuss the challenges faced by the team: “One of the biggest challenges was the sheer scale of the task. We had to identify and address potential issues across all computer systems – from mainframes to personal computers – and this required a huge effort from everyone involved. In addition, we had to ensure that our solutions did not introduce any new issues or cause any disruption to existing systems.”

Ultimately, the team was successful in resolving the Y2K problem and Dr. Smith believes that this was due to their commitment and dedication: “We all worked tirelessly to ensure that the issue was addressed in time. We put in long hours and worked closely together to identify potential issues and develop appropriate solutions.”

Highlighting a Timeline of Events Leading Up to the Successful Resolution of the Y2K Problem

The Y2K problem was first identified in the late 1980s, with experts warning of the potential impact of the issue. In response to these warnings, various teams, organizations, and legal measures were established to address the issue, including the International Y2K Cooperation Center (IY2KCC), the Coordinating Committee on Multilateral Export Controls (CoCom), and the Year 2000 Information and Readiness Disclosure Act (Y2IRDA), which was a U.S. law rather than an organization.

These teams and organizations developed strategies to mitigate the risk posed by the Y2K problem, such as creating awareness campaigns, developing software solutions, and testing systems for compatibility. As the year 2000 approached, the teams worked around the clock to ensure that the issue was successfully addressed and resolved.

Fortunately, their efforts paid off. When January 1st, 2000, arrived, some minor issues were reported in certain areas, but overall the transition was smooth and there were no major disruptions to systems or services.

An Overview of the Teams and Organizations That Worked Together to Solve the Y2K Problem

As mentioned above, several teams and organizations played an important role in solving the Y2K problem. These included the IY2KCC, CoCom, and Y2IRDA, as well as numerous other government agencies, businesses, and non-profit organizations.

Each team and organization had its own role to play in addressing the issue. For example, the IY2KCC focused on raising awareness of the issue and providing advice and assistance to those affected. CoCom worked to coordinate international efforts to address the issue, while Y2IRDA, a U.S. law rather than an organization, provided a legal framework for readiness efforts.

Other teams and organizations worked to develop software solutions, test systems for compatibility, and provide technical support to those affected. All of these efforts combined to ensure that the Y2K problem was successfully resolved.

A Case Study on the Strategies Used to Successfully Solve the Y2K Problem

The successful resolution of the Y2K problem was due to a combination of strategies employed by the various teams and organizations involved. These strategies can be broken down into three key areas: creating awareness, implementing solutions, and testing systems.