7.3 Problem-Solving

Learning objectives.

By the end of this section, you will be able to:

- Describe problem-solving strategies

- Define algorithm and heuristic

- Explain some common roadblocks to effective problem solving

People face problems every day—usually, multiple problems throughout the day. Sometimes these problems are straightforward: To double a recipe for pizza dough, for example, all that is required is that each ingredient in the recipe be doubled. Sometimes, however, the problems we encounter are more complex. For example, say you have a work deadline, and you must mail a printed copy of a report to your supervisor by the end of the business day. The report is time-sensitive and must be sent overnight. You finished the report last night, but your printer will not work today. What should you do? First, you need to identify the problem and then apply a strategy for solving the problem.

The study of human and animal problem-solving processes has provided much insight into our conscious experience and has led to advances in computer science and artificial intelligence. Much of cognitive science today consists of studies of how we consciously and unconsciously make decisions and solve problems. For instance, when confronted with a large amount of information, how do we decide on the most efficient way of sorting and analyzing it in order to find what we are looking for, as in the visual search paradigms of cognitive psychology? Or, when a piece of machinery is not working properly, how do we organize our approach to the issue and work out what the cause of the problem might be? How do we order the procedures that will be needed and focus attention on what is important in order to solve problems efficiently? In this section we will discuss some of these issues and examine processes related to human, animal, and computer problem solving.

PROBLEM-SOLVING STRATEGIES

When people are presented with a problem, whether it is a complex mathematical problem or a broken printer, how do they solve it? Before a solution can be found, the problem must first be clearly identified. After that, one of many problem-solving strategies can be applied, hopefully resulting in a solution.

Problems themselves can be classified into two categories: ill-defined and well-defined problems (Schacter, 2009). Ill-defined problems do not have clear goals, solution paths, or expected solutions, whereas well-defined problems have specific goals, clearly defined solution paths, and clear expected solutions. Problem solving often incorporates pragmatics (logical reasoning) and semantics (interpretation of the meanings behind the problem), and in many cases also requires abstract thinking and creativity in order to find novel solutions. Within psychology, problem solving refers to a motivational drive for reaching a definite “goal” from a present situation or condition that is either not moving toward that goal, is distant from it, or requires more complex logical analysis to find a missing description of conditions or steps toward that goal. Processes relating to problem solving include problem finding (also known as problem analysis), problem shaping (in which the problem is organized), generating alternative strategies, implementing attempted solutions, and verifying the selected solution. Methods of studying problem solving within psychology include introspection, behavior analysis and behaviorism, simulation, computer modeling, and experimentation.

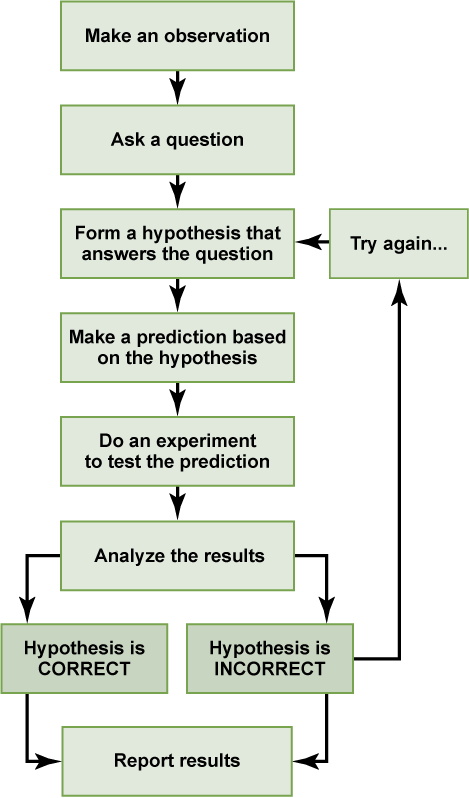

A problem-solving strategy is a plan of action used to find a solution. Different strategies have different action plans associated with them (table below). For example, a well-known strategy is trial and error. The old adage, “If at first you don’t succeed, try, try again” describes trial and error. In terms of your broken printer, you could try checking the ink levels, and if that doesn’t work, you could check to make sure the paper tray isn’t jammed. Or maybe the printer isn’t actually connected to your laptop. When using trial and error, you would continue to try different solutions until you solved your problem. Although trial and error is not typically one of the most time-efficient strategies, it is a commonly used one.

Another type of strategy is an algorithm. An algorithm is a problem-solving formula that provides you with step-by-step instructions used to achieve a desired outcome (Kahneman, 2011). You can think of an algorithm as a recipe with highly detailed instructions that produce the same result every time they are performed. Algorithms are used frequently in our everyday lives, especially in computer science. When you run a search on the Internet, search engines like Google use algorithms to decide which entries will appear first in your list of results. Facebook also uses algorithms to decide which posts to display on your newsfeed. Can you identify other situations in which algorithms are used?
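As a minimal sketch of the recipe analogy, the pizza-dough doubling from the start of this section can be written as an algorithm in Python. The function name `scale_recipe` and the ingredient amounts are illustrative assumptions, not part of the original example.

```python
def scale_recipe(recipe, factor):
    """Return a new recipe with every ingredient amount multiplied by factor."""
    return {ingredient: amount * factor for ingredient, amount in recipe.items()}

# Hypothetical pizza-dough quantities, chosen only for illustration.
pizza_dough = {"flour_g": 500, "water_ml": 325, "yeast_g": 7, "salt_g": 10}

doubled = scale_recipe(pizza_dough, 2)
print(doubled)
```

Because the steps are fixed and fully specified, running the function again on the same recipe always yields the same doubled amounts, which is the property that distinguishes an algorithm from a rule of thumb.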

A heuristic is another type of problem solving strategy. While an algorithm must be followed exactly to produce a correct result, a heuristic is a general problem-solving framework (Tversky & Kahneman, 1974). You can think of these as mental shortcuts that are used to solve problems. A “rule of thumb” is an example of a heuristic. Such a rule saves the person time and energy when making a decision, but despite its time-saving characteristics, it is not always the best method for making a rational decision. Different types of heuristics are used in different types of situations, but the impulse to use a heuristic occurs when one of five conditions is met (Pratkanis, 1989):

- When one is faced with too much information

- When the time to make a decision is limited

- When the decision to be made is unimportant

- When there is access to very little information to use in making the decision

- When an appropriate heuristic happens to come to mind in the same moment

Working backwards is a useful heuristic in which you begin solving the problem by focusing on the end result. Consider this example: You live in Washington, D.C. and have been invited to a wedding at 4 PM on Saturday in Philadelphia. Knowing that Interstate 95 tends to back up any day of the week, you need to plan your route and time your departure accordingly. If you want to be at the wedding service by 3:30 PM, and it takes 2.5 hours to get to Philadelphia without traffic, what time should you leave your house? You use the working backwards heuristic to plan the events of your day on a regular basis, probably without even thinking about it.
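The wedding example can be sketched as a small calculation that starts from the desired arrival time and subtracts what must happen before it. The function name, the calendar date, and the 45-minute traffic allowance are assumptions made for illustration; only the 3:30 PM arrival and the 2.5-hour drive come from the example.

```python
from datetime import datetime, timedelta

def latest_departure(arrival, travel_time, buffer=timedelta(0)):
    """Work backwards from the desired arrival time to the latest departure time."""
    return arrival - travel_time - buffer

# The date is arbitrary (a hypothetical Saturday); only the clock time matters.
arrival = datetime(2024, 6, 1, 15, 30)      # arrive by 3:30 PM
drive = timedelta(hours=2, minutes=30)      # 2.5 hours without traffic

no_traffic = latest_departure(arrival, drive)
with_buffer = latest_departure(arrival, drive, buffer=timedelta(minutes=45))  # assumed allowance

print(no_traffic.strftime("%H:%M"))    # 13:00, i.e. 1:00 PM
print(with_buffer.strftime("%H:%M"))   # 12:15
```

Without traffic, the latest departure is 1:00 PM; any allowance for congestion on Interstate 95 pushes the departure earlier by the same amount.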

Another useful heuristic is the practice of accomplishing a large goal or task by breaking it into a series of smaller steps. Students often use this common method to complete a large research project or long essay for school. For example, students typically brainstorm, develop a thesis or main topic, research the chosen topic, organize their information into an outline, write a rough draft, revise and edit the rough draft, develop a final draft, organize the references list, and proofread their work before turning in the project. The large task becomes less overwhelming when it is broken down into a series of small steps.

Further problem solving strategies have been identified (listed below) that incorporate flexible and creative thinking in order to reach solutions efficiently.

Additional Problem Solving Strategies:

- Abstraction – refers to solving the problem within a model of the situation before applying it to reality.

- Analogy – is using a solution that solves a similar problem.

- Brainstorming – refers to collecting and analyzing a large number of solutions, especially within a group of people, then combining and developing them until an optimal solution is reached.

- Divide and conquer – breaking down large complex problems into smaller more manageable problems.

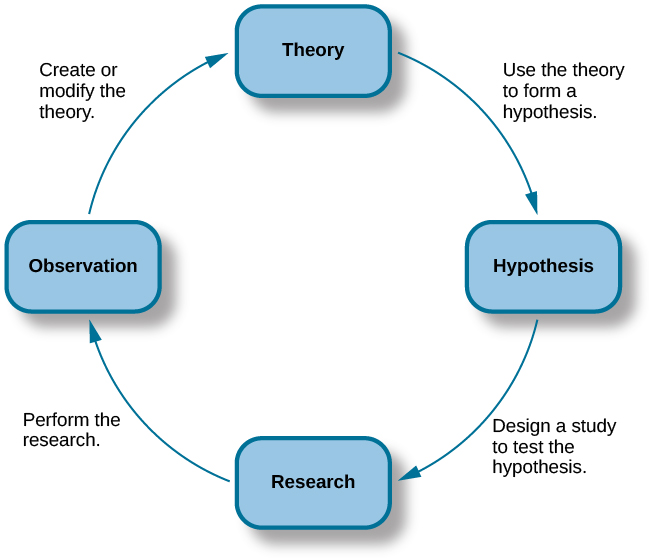

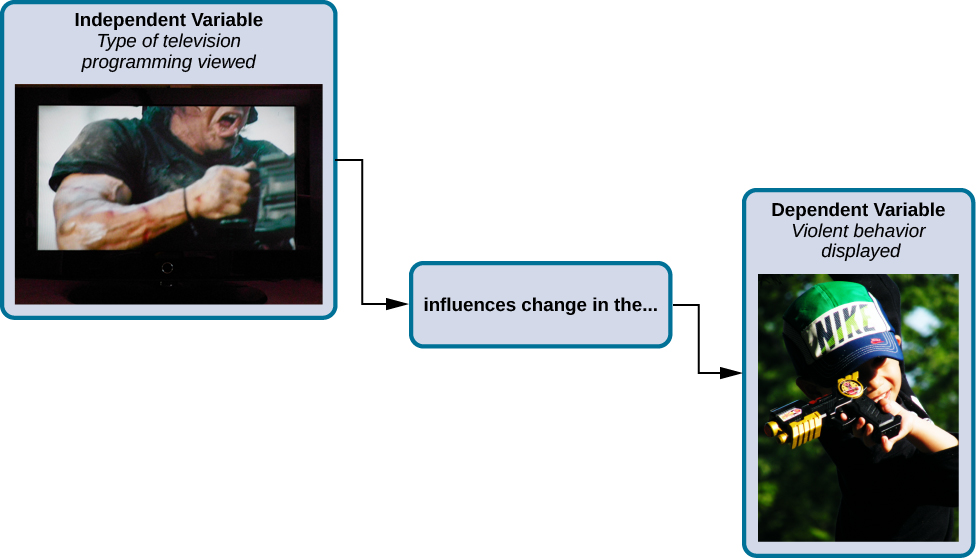

- Hypothesis testing – a method used in experimentation in which an assumption is made about what will happen in response to manipulating an independent variable, and the effects of the manipulation are analyzed and compared with the original hypothesis.

- Lateral thinking – approaching problems indirectly and creatively by viewing the problem in a new and unusual light.

- Means-ends analysis – choosing and analyzing an action at each of a series of smaller steps to move closer to the goal.

- Method of focal objects – putting seemingly non-matching characteristics of different procedures together to make something new that will get you closer to the goal.

- Morphological analysis – analyzing the outputs of and interactions of many pieces that together make up a whole system.

- Proof – trying to prove that a problem cannot be solved. The point where the proof fails becomes the starting point for solving the problem.

- Reduction – transforming the problem into a similar problem for which a solution exists.

- Research – using existing knowledge or solutions to similar problems to solve the problem.

- Root cause analysis – trying to identify the cause of the problem.

The strategies listed above offer a short summary of the methods we use in working toward solutions, and they also demonstrate how the mind works when faced with barriers that prevent goals from being reached.

One example of means-ends analysis can be found in the Tower of Hanoi paradigm. This paradigm can also be modeled as a word problem, as demonstrated by the Missionary-Cannibal Problem:

Missionary-Cannibal Problem

Three missionaries and three cannibals are on one side of a river and need to cross to the other side. The only means of crossing is a boat, and the boat can only hold two people at a time. Your goal is to devise a set of moves that will transport all six of the people across the river, bearing in mind the following constraint: The number of cannibals can never exceed the number of missionaries in any location. Remember that someone will also have to row the boat back across each time.

Hint: At one point in your solution, you will have to send more people back to the original side than you just sent to the destination.
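If you want to check your answer, the puzzle can also be searched exhaustively. The following is a minimal sketch, not a model of how people solve it: it uses breadth-first search over river states, and it assumes the usual reading of the constraint, namely that a bank with no missionaries on it is always safe. The state encoding and all names are illustrative choices.

```python
from collections import deque

MOVES = [(1, 0), (2, 0), (0, 1), (0, 2), (1, 1)]  # (missionaries, cannibals) in the boat
TOTAL = 3

def is_safe(m_left, c_left):
    """Assumed rule: a bank is safe with no missionaries, or with at least as many as cannibals."""
    m_right, c_right = TOTAL - m_left, TOTAL - c_left
    left_ok = m_left == 0 or m_left >= c_left
    right_ok = m_right == 0 or m_right >= c_right
    return left_ok and right_ok

def solve():
    """Breadth-first search; returns the shortest sequence of states from start to goal."""
    start, goal = (TOTAL, TOTAL, True), (0, 0, False)  # (missionaries left, cannibals left, boat on left)
    parents = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state == goal:
            path = []
            while state is not None:
                path.append(state)
                state = parents[state]
            return path[::-1]
        m_left, c_left, boat_left = state
        direction = -1 if boat_left else 1  # leaving the left bank removes people from it
        for dm, dc in MOVES:
            nm, nc = m_left + direction * dm, c_left + direction * dc
            nxt = (nm, nc, not boat_left)
            if 0 <= nm <= TOTAL and 0 <= nc <= TOTAL and is_safe(nm, nc) and nxt not in parents:
                parents[nxt] = state
                queue.append(nxt)
    return None

path = solve()
print(len(path) - 1, "crossings")
for m_left, c_left, boat_left in path:
    print(m_left, c_left, "boat on left" if boat_left else "boat on right")
```

Under these assumptions the search finds a solution in 11 crossings. Each printed line gives the number of missionaries and cannibals still on the starting bank.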

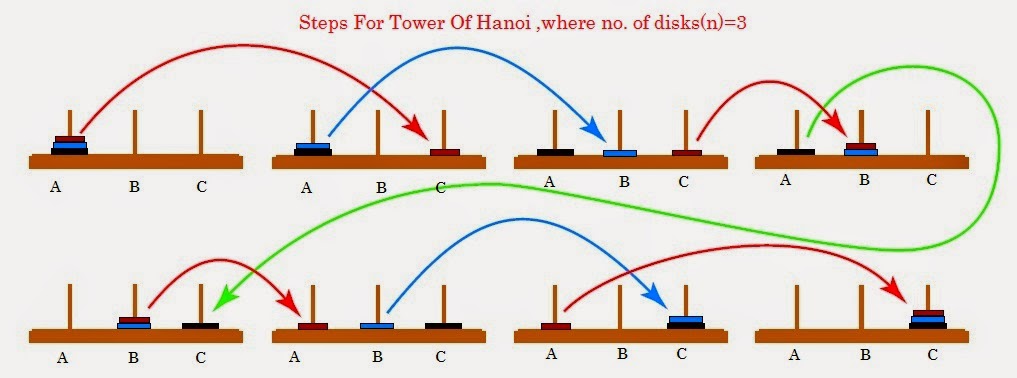

The actual Tower of Hanoi problem consists of three rods sitting vertically on a base with a number of disks of different sizes that can slide onto any rod. The puzzle starts with the disks in a neat stack in ascending order of size on one rod, the smallest at the top making a conical shape. The objective of the puzzle is to move the entire stack to another rod obeying the following rules:

- 1. Only one disk can be moved at a time.

- 2. Each move consists of taking the upper disk from one of the stacks and placing it on top of another stack or on an empty rod.

- 3. No disk may be placed on top of a smaller disk.

Figure 7.02. Steps for solving the Tower of Hanoi in the minimum number of moves when there are 3 disks.

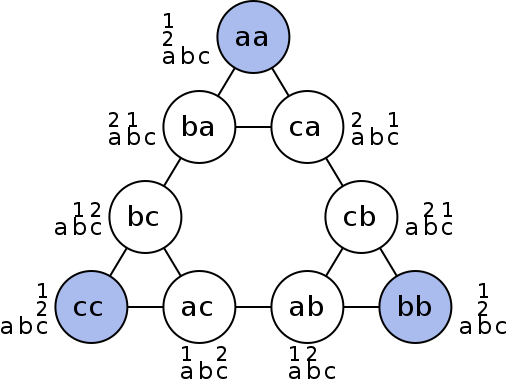

Figure 7.03. Graphical representation of nodes (circles) and moves (lines) of Tower of Hanoi.
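The minimum-move solution for three disks can be reproduced with a short recursive sketch. Each call sets a subgoal (clear the smaller disks, move the largest, restack the smaller disks), which is one way to picture the subgoal structure of the puzzle. The rod labels "A", "B", and "C" and the function name are assumptions made for illustration.

```python
def hanoi(n, source, target, spare, moves=None):
    """Collect the moves that transfer n disks from source to target, using spare."""
    if moves is None:
        moves = []
    if n == 0:
        return moves
    hanoi(n - 1, source, spare, target, moves)  # subgoal: clear the n-1 smaller disks
    moves.append((n, source, target))           # move the largest remaining disk
    hanoi(n - 1, spare, target, source, moves)  # subgoal: restack the smaller disks on it
    return moves

moves = hanoi(3, "A", "C", "B")
print(len(moves))  # 7, which equals 2**3 - 1
for disk, src, dst in moves:
    print(f"move disk {disk} from {src} to {dst}")
```

Disk 1 is the smallest. In general this procedure takes 2^n - 1 moves for n disks, so adding a single disk roughly doubles the length of the solution.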

The Tower of Hanoi is frequently used in psychology to study problem solving and procedure analysis. A variation known as the Tower of London has also been developed and has become an important tool in the neuropsychological diagnosis and treatment of executive function disorders.

GESTALT PSYCHOLOGY AND PROBLEM SOLVING

As you may recall from the sensation and perception chapter, Gestalt psychology describes whole patterns, forms, and configurations of perception and cognition, such as closure, good continuation, and figure-ground. Beyond patterns of perception, Gestalt ideas were also applied to problem solving: Wolfgang Kohler, a German Gestalt psychologist, traveled to the Spanish island of Tenerife to study animal behavior and problem solving in the anthropoid ape.

As an interesting side note to Kohler’s studies of chimp problem solving, Dr. Ronald Ley, professor of psychology at State University of New York provides evidence in his book A Whisper of Espionage (1990) suggesting that while collecting data for what would later be his book The Mentality of Apes (1925) on Tenerife in the Canary Islands between 1914 and 1920, Kohler was additionally an active spy for the German government alerting Germany to ships that were sailing around the Canary Islands. Ley suggests his investigations in England, Germany and elsewhere in Europe confirm that Kohler had served in the German military by building, maintaining and operating a concealed radio that contributed to Germany’s war effort acting as a strategic outpost in the Canary Islands that could monitor naval military activity approaching the north African coast.

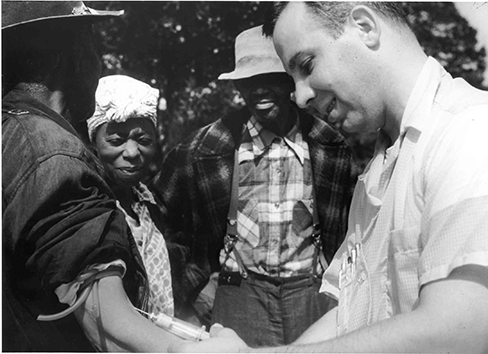

While trapped on the island over the course of World War I, Kohler applied Gestalt principles to animal perception in order to understand how animals solve problems. He recognized that the apes on the island also perceive relations between stimuli and the environment in Gestalt patterns and understand these patterns as wholes, as opposed to pieces that make up a whole. Kohler based his theories of animal intelligence on the ability to understand relations between stimuli, and he spent much of his time on the island investigating what he described as insight, the sudden perception of useful or proper relations. In order to study insight in animals, Kohler would present problems to chimpanzees by hanging bananas or some other kind of food higher than the apes could reach. Within the room, Kohler would arrange a variety of boxes, sticks, or other tools that the chimpanzees could combine or organize in a way that would allow them to obtain the food (Kohler & Winter, 1925).

While observing the chimpanzees, Kohler noticed one chimp that was more efficient at solving problems than some of the others. The chimp, named Sultan, was able to use long poles to reach through bars and organize objects in specific patterns to obtain food or other desirables that were originally out of reach. In order to study insight within these chimps, Kohler would remove objects from the room to systematically make the food more difficult to obtain. As the story goes, after many of the objects Sultan was used to using had been removed, he sat down and sulked for a while, and then suddenly got up and went over to two poles lying on the ground. Without hesitation, Sultan put one pole inside the end of the other, creating a longer pole that he could use to obtain the food, an ideal example of what Kohler described as insight. In another situation, Sultan discovered how to stand on a box to reach a banana that was suspended from the rafters, illustrating Sultan’s perception of relations and the importance of insight in problem solving.

Grande (another chimp in the group studied by Kohler) builds a three-box structure to reach the bananas, while Sultan watches from the ground. Insight, sometimes referred to as an “Ah-ha” experience, was the term Kohler used for the sudden perception of useful relations among objects during problem solving (Kohler, 1927; Radvansky & Ashcraft, 2013).

Solving puzzles.

Problem-solving abilities can improve with practice. Many people challenge themselves every day with puzzles and other mental exercises to sharpen their problem-solving skills. Sudoku puzzles appear daily in most newspapers. Typically, a sudoku puzzle is a 9×9 grid. The simple sudoku below (see figure) is a 4×4 grid. To solve the puzzle, fill in the empty boxes with a single digit: 1, 2, 3, or 4. Here are the rules: The numbers must total 10 in each bolded box, each row, and each column; however, each digit can only appear once in a bolded box, row, and column. Time yourself as you solve this puzzle and compare your time with a classmate.

How long did it take you to solve this sudoku puzzle? (You can see the answer at the end of this section.)
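The rules of the 4×4 puzzle can be written down as a short checker. This is a sketch under stated assumptions: the completed grid below is a hypothetical example, not the puzzle from the figure, and the bolded boxes are assumed to be the four 2×2 corner blocks.

```python
def is_valid_solution(grid):
    """Check a completed 4x4 grid: every row, column, and 2x2 box holds 1-4 exactly once."""
    digits = {1, 2, 3, 4}
    rows = [list(row) for row in grid]
    cols = [[grid[r][c] for r in range(4)] for c in range(4)]
    boxes = [
        [grid[r][c] for r in range(br, br + 2) for c in range(bc, bc + 2)]
        for br in (0, 2) for bc in (0, 2)
    ]
    # Four cells containing exactly the digits 1-4 necessarily total 10.
    return all(set(group) == digits and sum(group) == 10 for group in rows + cols + boxes)

# Hypothetical completed grid, used only to exercise the checker.
example = [
    [1, 2, 3, 4],
    [3, 4, 1, 2],
    [2, 1, 4, 3],
    [4, 3, 2, 1],
]
print(is_valid_solution(example))
```

The two rules in the text are linked: because each digit from 1 to 4 appears exactly once in every row, column, and box, each of those groups must total 10.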

Here is another popular type of puzzle (figure below) that challenges your spatial reasoning skills. Connect all nine dots with four connecting straight lines without lifting your pencil from the paper:

Did you figure it out? (The answer is at the end of this section.) Once you understand how to crack this puzzle, you won’t forget.

Take a look at the “Puzzling Scales” logic puzzle (figure below). Sam Loyd, a well-known puzzle master, created and refined countless puzzles throughout his lifetime (Cyclopedia of Puzzles, n.d.).

What steps did you take to solve this puzzle? You can read the solution at the end of this section.

Pitfalls to problem solving.

Not all problems are successfully solved, however. What challenges stop us from successfully solving a problem? A saying often attributed to Albert Einstein holds that “Insanity is doing the same thing over and over again and expecting a different result.” Imagine a person in a room that has four doorways. One doorway that has always been open in the past is now locked. The person, accustomed to exiting the room by that particular doorway, keeps trying to get out through the same doorway even though the other three doorways are open. The person is stuck, but she just needs to go to another doorway instead of trying to get out through the locked one. A mental set is where you persist in approaching a problem in a way that has worked in the past but is clearly not working now.

Functional fixedness is a type of mental set where you cannot perceive an object being used for something other than what it was designed for. During the Apollo 13 mission to the moon, NASA engineers at Mission Control had to overcome functional fixedness to save the lives of the astronauts aboard the spacecraft. An explosion in a module of the spacecraft damaged multiple systems. The astronauts were in danger of being poisoned by rising levels of carbon dioxide because of problems with the carbon dioxide filters. The engineers found a way for the astronauts to use spare plastic bags, tape, and air hoses to create a makeshift air filter, which saved the lives of the astronauts.

Researchers have investigated whether functional fixedness is affected by culture. In one experiment, individuals from the Shuar group in Ecuador were asked to use an object for a purpose other than that for which the object was originally intended. For example, the participants were told a story about a bear and a rabbit that were separated by a river and asked to select among various objects, including a spoon, a cup, erasers, and so on, to help the animals. The spoon was the only object long enough to span the imaginary river, but if the spoon was presented in a way that reflected its normal usage, it took participants longer to choose the spoon to solve the problem (German & Barrett, 2005). The researchers wanted to know if exposure to highly specialized tools, as occurs with individuals in industrialized nations, affects their ability to transcend functional fixedness. It was determined that functional fixedness is experienced in both industrialized and nonindustrialized cultures (German & Barrett, 2005).

In order to make good decisions, we use our knowledge and our reasoning. Often, this knowledge and reasoning is sound and solid. Sometimes, however, we are swayed by biases or by others manipulating a situation. For example, let’s say you and three friends wanted to rent a house and had a combined target budget of $1,600. The realtor shows you only very run-down houses for $1,600 and then shows you a very nice house for $2,000. Might you ask each person to pay more in rent to get the $2,000 home? Why would the realtor show you the run-down houses and the nice house? The realtor may be challenging your anchoring bias. An anchoring bias occurs when you focus on one piece of information when making a decision or solving a problem. In this case, you’re so focused on the amount of money you are willing to spend that you may not recognize what kinds of houses are available at that price point.

The confirmation bias is the tendency to focus on information that confirms your existing beliefs. For example, if you think that your professor is not very nice, you notice all of the instances of rude behavior exhibited by the professor while ignoring the countless pleasant interactions he is involved in on a daily basis. Hindsight bias leads you to believe that the event you just experienced was predictable, even though it really wasn’t. In other words, you knew all along that things would turn out the way they did. Representative bias describes a faulty way of thinking, in which you unintentionally stereotype someone or something; for example, you may assume that your professors spend their free time reading books and engaging in intellectual conversation, because the idea of them spending their time playing volleyball or visiting an amusement park does not fit in with your stereotypes of professors.

Finally, the availability heuristic is a heuristic in which you make a decision based on an example, information, or recent experience that is readily available to you, even though it may not be the best example to inform your decision. Biases tend to “preserve that which is already established—to maintain our preexisting knowledge, beliefs, attitudes, and hypotheses” (Aronson, 1995; Kahneman, 2011). These biases are summarized in the table below.

Were you able to determine how many marbles are needed to balance the scales in the figure below? You need nine. Were you able to solve the problems in the figures above? Here are the answers.

Many different strategies exist for solving problems. Typical strategies include trial and error, applying algorithms, and using heuristics. To solve a large, complicated problem, it often helps to break the problem into smaller steps that can be accomplished individually, leading to an overall solution. Roadblocks to problem solving include a mental set, functional fixedness, and various biases that can cloud decision making skills.

References:

OpenStax Psychology text by Kathryn Dumper, William Jenkins, Arlene Lacombe, Marilyn Lovett and Marion Perlmutter licensed under CC BY v4.0. https://openstax.org/details/books/psychology

Review Questions:

1. A specific formula for solving a problem is called ________.

a. an algorithm

b. a heuristic

c. a mental set

d. trial and error

2. Solving the Tower of Hanoi problem tends to utilize a ________ strategy of problem solving.

a. divide and conquer

b. means-end analysis

d. experiment

3. A mental shortcut in the form of a general problem-solving framework is called ________.

4. Which type of bias involves becoming fixated on a single trait of a problem?

a. anchoring bias

b. confirmation bias

c. representative bias

d. availability bias

5. Which type of bias involves relying on a false stereotype to make a decision?

6. Wolfgang Kohler analyzed behavior of chimpanzees by applying Gestalt principles to describe ________.

a. social adjustment

b. student loan payment options

c. emotional learning

d. insight learning

7. ________ is a type of mental set where you cannot perceive an object being used for something other than what it was designed for.

a. functional fixedness

c. working memory

Critical Thinking Questions:

1. What is functional fixedness and how can overcoming it help you solve problems?

2. How does an algorithm save you time and energy when solving a problem?

Personal Application Question:

1. Which type of bias do you recognize in your own decision-making processes? How has this bias affected how you’ve made decisions in the past, and how can you use your awareness of it to improve your decision-making skills in the future?

Answers to Exercises

algorithm: problem-solving strategy characterized by a specific set of instructions

anchoring bias: faulty heuristic in which you fixate on a single aspect of a problem to find a solution

availability heuristic: faulty heuristic in which you make a decision based on information readily available to you

confirmation bias: faulty heuristic in which you focus on information that confirms your beliefs

functional fixedness: inability to see an object as useful for any other use other than the one for which it was intended

heuristic: mental shortcut that saves time when solving a problem

hindsight bias: belief that the event just experienced was predictable, even though it really wasn’t

mental set: continually using an old solution to a problem without results

problem-solving strategy: method for solving problems

representative bias: faulty heuristic in which you stereotype someone or something without a valid basis for your judgment

trial and error: problem-solving strategy in which multiple solutions are attempted until the correct one is found

working backwards: heuristic in which you begin to solve a problem by focusing on the end result

Modularity of Mind

The concept of modularity has loomed large in philosophy of psychology since the early 1980s, following the publication of Fodor’s landmark book The Modularity of Mind (1983). In the decades since the term ‘module’ and its cognates first entered the lexicon of cognitive science, the conceptual and theoretical landscape in this area has changed dramatically. Especially noteworthy in this respect has been the development of evolutionary psychology, whose proponents adopt a less stringent conception of modularity than the one advanced by Fodor, and who argue that the architecture of the mind is more pervasively modular than Fodor claimed. Where Fodor (1983, 2000) draws the line of modularity at the relatively low-level systems underlying perception and language, post-Fodorian theorists such as Sperber (2002) and Carruthers (2006) contend that the mind is modular through and through, up to and including the high-level systems responsible for reasoning, planning, decision making, and the like. The concept of modularity has also figured in recent debates in philosophy of science, epistemology, ethics, and philosophy of language—further evidence of its utility as a tool for theorizing about mental architecture.

1. What is a mental module?

In his classic introduction to modularity, Fodor (1983) lists nine features that collectively characterize the type of system that interests him. In original order of presentation, they are:

- Domain specificity

- Mandatory operation

- Limited central accessibility

- Fast processing

- Informational encapsulation

- ‘Shallow’ outputs

- Fixed neural architecture

- Characteristic and specific breakdown patterns

- Characteristic ontogenetic pace and sequencing

A cognitive system counts as modular in Fodor’s sense if it is modular “to some interesting extent,” meaning that it has most of these features to an appreciable degree (Fodor, 1983, p. 37). This is a weighted “most,” since some marks of modularity are more important than others. Informational encapsulation, for example, is more or less essential for modularity, as well as explanatorily prior to several of the other features on the list (Fodor, 1983, 2000).

Each of the items on the list calls for explication. To streamline the exposition, we will cluster most of the features thematically and examine them on a cluster-by-cluster basis, along the lines of Prinz (2006).

Encapsulation and inaccessibility. Informational encapsulation and limited central accessibility are two sides of the same coin. Both features pertain to the character of information flow across computational mechanisms, albeit in opposite directions. Encapsulation involves restriction on the flow of information into a mechanism, whereas inaccessibility involves restriction on the flow of information out of it.

A cognitive system is informationally encapsulated to the extent that in the course of processing a given set of inputs it cannot access information stored elsewhere; all it has to go on is the information contained in those inputs plus whatever information might be stored within the system itself, for example, in a proprietary database. In the case of language, for example:

A parser for [a language] L contains a grammar of L. What it does when it does its thing is, it infers from certain acoustic properties of a token to a characterization of certain of the distal causes of the token (e.g., to the speaker’s intention that the utterance should be a token of a certain linguistic type). Premises of this inference can include whatever information about the acoustics of the token the mechanisms of sensory transduction provide, whatever information about the linguistic types in L the internally represented grammar provides, and nothing else. (Fodor, 1984, pp. 245–246; italics in original)

Similarly, in the case of perception—understood as a kind of non-demonstrative (i.e., defeasible, or non-monotonic) inference from sensory ‘premises’ to perceptual ‘conclusions’—the claim that perceptual systems are informationally encapsulated is equivalent to the claim that “the data that can bear on the confirmation of perceptual hypotheses includes, in the general case, considerably less than the organism may know” (Fodor, 1983, p. 69). The classic illustration of this property comes from the study of visual illusions, which tend to persist even after the viewer is explicitly informed about the character of the stimulus. In the Müller-Lyer illusion, for example, the two lines continue to look as if they were of unequal length even after one has convinced oneself otherwise, e.g., by measuring them with a ruler (see Figure 1, below).

Figure 1. The Müller-Lyer illusion.
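As a loose programming analogy (an illustration only, not a model proposed by Fodor), encapsulation can be pictured as a function whose signature admits the stimulus and a proprietary database but never the organism's beliefs. All names below are hypothetical, and the distortion factors are made-up numbers.

```python
# Proprietary database: the only stored information the module may consult.
# The distortion factors are made-up numbers for illustration.
FIN_DISTORTION = {"tails": 1.1, "heads": 0.9}

def visual_length_module(physical_length, fins):
    """Encapsulated: the signature admits the stimulus only, never central beliefs."""
    return physical_length * FIN_DISTORTION[fins]

# Central belief acquired by measuring both lines with a ruler.
central_beliefs = {"lines_are_equal": True}

looks_a = visual_length_module(10.0, "tails")
looks_b = visual_length_module(10.0, "heads")
print(looks_a > looks_b)  # True: the lines still look unequal
```

Nothing in `central_beliefs` can reach the function, so updating the belief leaves the apparent lengths unchanged. That is the sense in which the illusion persists after measurement.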

Informational encapsulation is related to what Pylyshyn (1984, 1999) calls cognitive impenetrability. But the two properties are not the same; instead, they are related as genus to species. Cognitive impenetrability is a matter of encapsulation relative to information stored in central memory, paradigmatically in the form of beliefs and utilities. But a system could be encapsulated in this respect without being encapsulated across the board. For example, auditory speech perception might be encapsulated relative to beliefs and utilities but unencapsulated relative to vision, as suggested by the McGurk effect (see below, §2.1). Likewise, a system could be unencapsulated relative to beliefs and utilities yet encapsulated relative to perception; it’s plausible that central systems have this character, insofar as their operations are sensitive only to post-perceptual, propositionally encoded information. Strictly speaking, then, cognitive impenetrability is a specific type of informational encapsulation, albeit a type with special architectural significance. Lacking this feature means failing the encapsulation test, the litmus test of modularity. But systems with this feature might still fail the test, due to information seepage of a different (i.e., non-central) sort.

The flip side of informational encapsulation is inaccessibility to central monitoring. A system is inaccessible in this sense if the intermediate-level representations that it computes prior to producing its output are inaccessible to consciousness, and hence unavailable for explicit report. In effect, centrally inaccessible systems are those whose internal processing is opaque to introspection. Though the outputs of such systems may be phenomenologically salient, their precursor states are not. Speech comprehension, for example, likely involves the successive elaboration of myriad representations (of various types: phonological, lexical, syntactic, etc.) of the stimulus, but of these only the final product—the representation of the meaning of what was said—is consciously available.

Mandatoriness, speed, and superficiality. In addition to being informationally encapsulated and centrally inaccessible, modular systems and processes are “fast, cheap, and out of control” (to borrow a phrase by roboticist Rodney Brooks). These features form a natural trio, as we’ll see.

The operation of a cognitive system is mandatory just in case it is automatic, that is, not under conscious control (Bargh & Chartrand, 1999). This means that, like it or not, the system’s operations are switched on by presentation of the relevant stimuli and those operations run to completion. For example, native speakers of English cannot hear the sounds of English being spoken as mere noise: if they hear those sounds at all, they hear them as English. Likewise, it’s impossible to see a 3D array of objects in space as 2D patches of color, however hard one may try.

Speed is arguably the mark of modularity that requires least in the way of explication. But speed is relative, so the best way to proceed here is by way of examples. Speech shadowing is generally considered to be very fast, with typical lag times on the order of 250 ms. Since the syllabic rate of normal speech is about 4 syllables per second, this suggests that shadowers are processing the stimulus in syllable-length bits—probably the smallest bits that can be identified in the speech stream, given that “only at the level of the syllable do we begin to find stretches of wave form whose acoustic properties are at all reliably related to their linguistic values” (Fodor, 1983, p. 62). Similarly impressive results are available for vision: in a rapid serial visual presentation task (matching picture to description), subjects were 70% accurate at 125 ms of exposure per picture and 96% accurate at 167 ms (Fodor, 1983, p. 63). In general, a cognitive process counts as fast in Fodor’s book if it takes place in a half second or less.
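The inference from shadowing lag to unit size is a one-line calculation, sketched here using only the two figures given above (a lag of roughly 250 ms and a rate of roughly 4 syllables per second):

```latex
\[
0.25\ \text{s} \;\times\; 4\ \frac{\text{syllables}}{\text{s}} \;=\; 1\ \text{syllable}
\]
```

On these approximate figures, a shadower trails the speaker by about one syllable's worth of signal.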

A further feature of modular systems is that their outputs are relatively ‘shallow’. Exactly what this means is unclear. But the depth of an output seems to be a function of at least two properties: first, how much computation is required to produce it (i.e., shallow means computationally cheap); second, how constrained or specific its informational content is (i.e., shallow means informationally general) (Fodor, 1983, p. 87). These two properties are correlated, in that outputs with more specific content tend to be more costly for a system to compute, and vice versa. Some writers have interpreted shallowness to require non-conceptual character (e.g., Carruthers, 2006, p. 4). But this conflicts with Fodor’s own gloss on the term, in which he suggests that the output of a plausibly modular system such as visual object recognition might be encoded at the level of ‘basic-level’ concepts, like DOG and CHAIR (Rosch et al., 1976). What’s ruled out here is not concepts per se, then, but highly theoretical concepts like PROTON, which are too informationally specific and too computationally expensive to meet the shallowness criterion.

All three of the features just discussed—mandatoriness, speed, and shallowness—are associated with, and to some extent explicable in terms of, informational encapsulation. In each case, less is more, informationally speaking. Mandatoriness flows from the insensitivity of the system to the organism’s utilities, which is one dimension of cognitive impenetrability. Speed depends upon the efficiency of processing, which positively correlates with encapsulation in so far as encapsulation tends to reduce the system’s informational load. Shallowness is a similar story: shallow outputs are computationally cheap, and computational expense is negatively correlated with encapsulation. In short, the more informationally encapsulated a system is, the more likely it is to be fast, cheap, and out of control.

Dissociability and localizability. To say that a system is functionally dissociable is to say that it can be selectively impaired, that is, damaged or disabled with little or no effect on the operation of other systems. As the neuropsychological record indicates, selective impairments of this sort have frequently been observed as a consequence of circumscribed brain lesions. Standard examples from the study of vision include prosopagnosia (impaired face recognition), achromatopsia (total color blindness), and akinetopsia (motion blindness); examples from the study of language include agrammatism (loss of complex syntax), jargon aphasia (loss of complex semantics), alexia (loss of object words), and dyslexia (impaired reading and writing). Each of these disorders has been found in otherwise cognitively normal individuals, suggesting that the lost capacities are subserved by functionally dissociable mechanisms.

Functional dissociability is associated with neural localizability in a strong sense. A system is strongly localized just in case it is (a) implemented in neural circuitry that is both relatively circumscribed in extent (though not necessarily in contiguous areas) and (b) dedicated to the realization of that system alone. Localization in this sense goes beyond mere implementation in local neural circuitry, since a given bit of circuitry could subserve more than one cognitive function (Anderson, 2010). Proposed candidates for strong localization include systems for color vision (V4), motion detection (MT), face recognition (fusiform gyrus), and spatial scene recognition (parahippocampal gyrus).

Domain specificity. A system is domain specific to the extent that it has a restricted subject matter, that is, the class of objects and properties that it processes information about is circumscribed in a relatively narrow way. As Fodor (1983) puts it, “domain specificity has to do with the range of questions for which a device provides answers (the range of inputs for which it computes analyses)” (p. 103): the narrower the range of inputs a system can compute, the narrower the range of problems the system can solve—and the narrower the range of such problems, the more domain specific the device. Alternatively, the degree of a system’s domain specificity can be understood as a function of the range of inputs that turn the system on, where the size of that range determines the informational reach of the system (Carruthers, 2006; Samuels, 2000).

Domains (and by extension, modules) are typically more fine-grained than sensory modalities like vision and audition. This seems clear from Fodor’s list of plausibly domain-specific mechanisms, which includes systems for color perception, visual shape analysis, sentence parsing, and face and voice recognition (Fodor, 1983, p. 47)—none of which correspond to perceptual or linguistic faculties in an intuitive sense. It also seems plausible, however, that the traditional sense modalities (vision, audition, olfaction, etc.), and the language faculty as a whole, are sufficiently domain specific to count as displaying this particular mark of modularity (McCauley & Henrich, 2006).

Innateness. The final feature of modular systems on Fodor’s roster is innateness, understood as the property of “develop[ing] according to specific, endogenously determined patterns under the impact of environmental releasers” (Fodor, 1983, p. 100). On this view, modular systems come on-line chiefly as the result of a brute-causal process like triggering, rather than an intentional-causal process like learning. (For more on this distinction, see Cowie, 1999; for an alternative analysis of innateness, based on the notion of canalization, see Ariew, 1999.) The most familiar example here is language, the acquisition of which occurs in all normal individuals in all cultures on more or less the same schedule: single words at 12 months, telegraphic speech at 18 months, complex grammar at 24 months, and so on (Stromswold, 1999). Other candidates include visual object perception (Spelke, 1994) and low-level mindreading (Scholl & Leslie, 1999).

2. Modularity, Fodor-style: A modest proposal

The hypothesis of modest modularity, as we shall call it, has two strands. The first strand of the hypothesis is positive. It says that input systems, such as systems involved in perception and language, are modular. The second strand is negative. It says that central systems, such as systems involved in belief fixation and practical reasoning, are not modular.

In this section, we assess the case for modest modularity. The next section (§3) will be devoted to discussion of the hypothesis of massive modularity, which retains the positive strand of Fodor’s hypothesis while reversing the polarity of the second strand from negative to positive—revising the concept of modularity in the process.

The positive part of the modest modularity hypothesis is that input systems are modular. By ‘input system’ Fodor (1983) means a computational mechanism that “presents the world to thought” (p. 40) by processing the outputs of sensory transducers. A sensory transducer is a device that converts the energy impinging on the body’s sensory surfaces, such as the retina and cochlea, into a computationally usable form, without adding or subtracting information. Roughly speaking, the product of sensory transduction is raw sensory data. Input processing involves non-demonstrative inferences from this raw data to hypotheses about the layout of objects in the world. These hypotheses are then passed on to central systems for the purpose of belief fixation, and those systems in turn pass their outputs to systems responsible for the production of behavior.

Fodor argues that input systems constitute a natural kind, defined as “a class of phenomena that have many scientifically interesting properties over and above whatever properties define the class” (Fodor, 1983, p. 46). He argues for this by presenting evidence that input systems are modular, where modularity is marked by a cluster of psychologically interesting properties—the most interesting and important of these being informational encapsulation, as discussed in §1. In the course of that discussion, we reviewed a representative sample of this evidence, and for present purposes that should suffice. (Readers interested in further details should consult Fodor, 1983, pp. 47–101.)

Fodor’s claim about the modularity of input systems has been disputed by a number of philosophers and psychologists (Churchland, 1988; Arbib, 1987; Marslen-Wilson & Tyler, 1987; McCauley & Henrich, 2006). The most wide-ranging philosophical critique is due to Prinz (2006), who argues that perceptual and linguistic systems rarely exhibit the features characteristic of modularity. In particular, he argues that such systems are not informationally encapsulated. To this end, Prinz adduces two types of evidence. First, there appear to be cross-modal effects in perception, which would tell against encapsulation at the level of input systems. The classic example of this, from the speech perception literature, is the McGurk effect (McGurk & MacDonald, 1976). Here, subjects watching a video of one phoneme being spoken (e.g., /ga/) dubbed with a sound recording of a different phoneme (/ba/) hear a third, altogether different phoneme (/da/). Second, he points to what look to be top-down effects on visual and linguistic processing, the existence of which would tell against cognitive impenetrability, i.e., encapsulation relative to central systems. Some of the most striking examples of such effects also come from research on speech perception. Probably the best-known is the phoneme restoration effect, as in the case where listeners ‘fill in’ a missing phoneme in a spoken sentence (The state governors met with their respective legi*latures convening in the capital city) from which that phoneme (the /s/ sound in legislatures) has been deleted and replaced with the sound of a cough (Warren, 1970). By hypothesis, this filling-in is driven by listeners’ understanding of the linguistic context.

How convincing one finds this part of Prinz’s critique, however, depends on how convincing one finds his explanation of these effects. The McGurk effect, for example, seems consistent with the claim that speech perception is an informationally encapsulated system, albeit a system that is multi-modal in character (cf. Fodor, 1983, p. 132, n. 13). If speech perception is a multi-modal system, the fact that its operations draw on both auditory and visual information need not undermine the claim that speech perception is encapsulated. Other cross-modal effects, however, resist this type of explanation. In the double flash illusion, for example, viewers shown a single flash accompanied by two beeps report seeing two flashes (Shams et al., 2000). The same goes for the rubber hand illusion, in which synchronous brushing of a hand hidden from view and a realistic-looking rubber hand seen at the usual location of the hand that was hidden gives rise to the impression that the fake hand is real (Botvinick & Cohen, 1998). With respect to phenomena of this sort, unlike the McGurk effect, there is no plausible candidate for a single, domain-specific system whose operations draw on multiple sources of sensory information.

Regarding phoneme restoration, it could be that the effect is driven by listeners’ drawing on information stored in a language-proprietary database (specifically, information about the linguistic types in the lexicon of English), rather than higher-level contextual information. Hence, it’s unclear whether the case of phoneme restoration described above counts as a top-down effect. But not all cases of phoneme restoration can be accommodated so readily, since the phenomenon also occurs when there are multiple lexical items available for filling in (Warren & Warren, 1970). For example, listeners fill the gap in the sentences “The *eel is on the axle” and “The *eel is on the orange” differently—with a /wh/ sound and a /p/ sound, respectively—suggesting that speech perception is sensitive to contextual information after all.

A further challenge to modest modularity, not addressed by Prinz (2006), comes from evidence that susceptibility to the Müller-Lyer illusion varies by both culture and age. For example, it appears that adults in Western cultures are more susceptible to the illusion than their non-Western counterparts; that adults in some non-Western cultures, such as hunter-gatherers from the Kalahari Desert, are nearly immune to the illusion; and that within (but not always across) Western and non-Western cultures, pre-adolescent children are more susceptible to the illusion than adults are (Segall, Campbell, & Herskovits, 1966). McCauley and Henrich (2006) take these findings as showing that the visual system is diachronically (as opposed to synchronically) penetrable, in that how one experiences the illusion-inducing stimulus changes as a result of one’s wider perceptual experience over an extended period of time. They also argue that the aforementioned evidence of cultural and developmental variability in perception militates against the idea that vision is an innate capacity, that is, the idea that vision is among the “endogenous features of the human cognitive system that are, if not largely fixed at birth, then, at least, genetically pre-programmed” and “triggered, rather than shaped, by the newborn’s subsequent experience” (p. 83). However, they also issue the following caveat:

[N]othing about any of the findings we have discussed establishes the synchronic cognitive penetrability of the Müller-Lyer stimuli. Nor do the Segall et al. (1966) findings provide evidence that adults’ visual input systems are diachronically penetrable. They suggest that it is only during a critical developmental stage that human beings’ susceptibility to the Müller-Lyer illusion varies considerably and that that variation substantially depends on cultural variables. (McCauley & Henrich, 2006, p. 99; italics in original)

As such, the evidence cited can be accommodated by friends of modest modularity, provided that allowance is made for the potential impact of environmental, including cultural, variables on development—something that most accounts of innateness make room for.

A useful way of making this point invokes Segal’s (1996) idea of diachronic modularity (see also Scholl & Leslie, 1999). Diachronic modules are systems that exhibit parametric variation over the course of their development. For example, in the case of language, different individuals learn to speak different languages depending on the linguistic environment in which they grew up, but they nonetheless share the same underlying linguistic competence in virtue of their (plausibly innate) knowledge of Universal Grammar. Given the observed variation in how people see the Müller-Lyer illusion, it may be that the visual system is modular in much the same way, with its development constrained by features of the visual environment. Such a possibility seems consistent with the claim that input systems are modular in Fodor’s sense.

Another source of difficulty for proponents of input-level modularity is neuroscientific evidence against the claim that perceptual and linguistic systems are strongly localized. Recall that for a system to be strongly localized, it must be realized in dedicated neural circuitry. Strong localization at the level of input systems, then, entails the existence of a one-to-one mapping between input systems and brain structures. As Anderson (2010, 2014) argues, however, there is no such mapping, since most cortical regions of any size are deployed in different tasks across different domains. For instance, the fusiform face area, once thought to be dedicated to the perception of faces, is also recruited for the perception of cars and birds (Gauthier et al., 2000). Likewise, Broca’s area, once thought to be dedicated to speech production, also plays a role in action recognition, action sequencing, and motor imagery (Tettamanti & Weniger, 2006). Functional neuroimaging studies generally suggest that cognitive systems are at best weakly localized, that is, implemented in distributed networks of the brain that overlap, rather than discrete and disjoint regions.

Arguably the most serious challenge to modularity at the level of input systems, however, comes from evidence that vision is cognitively penetrable, and hence, not informationally encapsulated. The concept of cognitive penetrability, originally introduced by Pylyshyn (1984), has been characterized in a variety of non-equivalent ways (Stokes, 2013), but the core idea is this: A perceptual system is cognitively penetrable if and only if its operations are directly causally sensitive to the agent’s beliefs, desires, intentions, or other nonperceptual states. Behavioral studies purporting to show that vision is cognitively penetrable date back to the early days of New Look psychology (Bruner & Goodman, 1947) and continue to the present day, with renewed interest in the topic emerging in the early 2000s (Firestone & Scholl, 2016). It appears, for example, that vision is influenced by an agent’s motivational states, with experimental subjects reporting that desirable objects look closer (Balcetis & Dunning, 2010) and ambiguous figures look like the interpretation associated with a more rewarding outcome (Balcetis & Dunning, 2006). In addition, vision seems to be influenced by subjects’ beliefs, with racial categorization affecting reports of the perceived skin tone of faces even when the stimuli are equiluminant (Levin & Banaji, 2006), and categorization of objects affecting reports of the perceived color of grayscale images of those objects (Hansen et al., 2006).

Skeptics of cognitive penetrability point out, however, that experimental evidence for top-down effects on perception can be explained in terms of effects of judgment, memory, and relatively peripheral forms of attention (Firestone & Scholl, 2016; Machery, 2015). Consider, for example, the claim that throwing a heavy ball (vs. a light ball) at a target makes the target look farther away, evidence for which consists of subjects’ visual estimates of the distance to the target (Witt, Proffitt, & Epstein, 2004). While it is possible that the greater effort involved in throwing the heavy ball caused the target to look farther away, it is also possible that the increased estimate of distance reflected the fact that subjects in the heavy ball condition judged the target to be farther away because they found it harder to hit (Firestone & Scholl, 2016). Indeed, reports by subjects in a follow-up study who were explicitly instructed to make their estimates on the basis of visual appearances only did not show the effect of effort, suggesting that the effect was post-perceptual (Woods, Philbeck, & Danoff, 2009). Other purported top-down effects on perception, such as the effect of golfing performance on size and distance estimates of golf holes (Witt et al., 2008), can be explained as effects of spatial attention, such as the fact that visually attended objects tend to appear larger and closer (Firestone & Scholl, 2016). These and related considerations suggest that the case for cognitive penetrability—and by extension, the case against low-level modularity—is weaker than its proponents make it out to be.

We turn now to the dark side of Fodor’s hypothesis: the claim that central systems are not modular.

Among the principal jobs of central systems is the fixation of belief, perceptual belief included, via non-demonstrative inference. Fodor (1983) argues that this sort of process cannot be realized in an informationally encapsulated system, and hence that central systems cannot be modular. Spelled out a bit further, his reasoning goes like this:

1. Central systems are responsible for belief fixation.

2. Belief fixation is isotropic and Quinean.

3. Isotropic and Quinean processes cannot be carried out by informationally encapsulated systems.

4. Belief fixation cannot be carried out by an informationally encapsulated system. [from 2 and 3]

5. Modular systems are informationally encapsulated.

6. Belief fixation is not modular. [from 4 and 5]

7. Central systems are not modular. [from 1 and 6]
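The validity of this argument can be checked mechanically. What follows is a minimal sketch in Lean 4, offered as one possible regimentation rather than as Fodor’s own formulation. All identifiers (`carriesOut`, `Global`, `beliefFixation`, and so on) are hypothetical names introduced for illustration; `Global` abbreviates “isotropic and Quinean”, and the first premise is read, by assumption, as saying that every central system carries out belief fixation. The hypotheses `p1`, `p2`, `p3`, and `p5` correspond to the first, second, third, and fifth steps of the argument; the fourth and sixth steps are intermediate conclusions and so do not appear as hypotheses.

```lean
-- Hypothetical vocabulary, introduced only for this sketch.
variable (System Process : Type)
variable (carriesOut : System → Process → Prop)
variable (Global : Process → Prop)  -- stands in for "isotropic and Quinean"
variable (Encapsulated Modular Central : System → Prop)
variable (beliefFixation : Process)

-- From the four premises, no central system is modular.
theorem central_not_modular
    (p1 : ∀ s, Central s → carriesOut s beliefFixation)
    (p2 : Global beliefFixation)
    (p3 : ∀ s p, Global p → Encapsulated s → ¬ carriesOut s p)
    (p5 : ∀ s, Modular s → Encapsulated s) :
    ∀ s, Central s → ¬ Modular s := by
  intro s hc hm
  -- p5 makes s encapsulated; p2 and p3 then bar s from carrying out
  -- belief fixation, contradicting p1.
  exact p3 s beliefFixation p2 (p5 s hm) (p1 s hc)
```

Since the derivation goes through, any resistance to the conclusion has to target one of the premises rather than the logic.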

The argument here contains two terms that call for explication, both of which relate to the notion of confirmation holism in the philosophy of science. The term ‘isotropic’ refers to the epistemic interconnectedness of beliefs in the sense that “everything that the scientist knows is, in principle, relevant to determining what else he ought to believe. In principle, our botany constrains our astronomy, if only we could think of ways to make them connect” (Fodor, 1983, p. 105). Antony (2003) presents a striking case of this sort of long-range interdisciplinary cross-talk in the sciences, between astronomy and archaeology; Carruthers (2006, pp. 356–357) furnishes another example, linking solar physics and evolutionary theory. On Fodor’s view, since scientific confirmation is akin to belief fixation, the fact that scientific confirmation is isotropic suggests that belief fixation in general has this property.

A second dimension of confirmation holism is that confirmation is ‘Quinean’, meaning that:

[T]he degree of confirmation assigned to any given hypothesis is sensitive to properties of the entire belief system … simplicity, plausibility, and conservatism are properties that theories have in virtue of their relation to the whole structure of scientific beliefs taken collectively. A measure of conservatism or simplicity would be a metric over global properties of belief systems. (Fodor, 1983, pp. 107–108; italics in original).

Here again, the analogy between scientific thinking and thinking in general underwrites the supposition that belief fixation is Quinean.

Both isotropy and Quineanness are features that preclude encapsulation, since their possession by a system would require extensive access to the contents of central memory, and hence a high degree of cognitive penetrability. Put in slightly different terms: isotropic and Quinean processes are ‘global’ rather than ‘local’, and since globality precludes encapsulation, isotropy and Quineanness preclude encapsulation as well.

By Fodor’s lights, the upshot of this argument—namely, the nonmodular character of central systems—is bad news for the scientific study of higher cognitive functions. This is neatly expressed by his “First Law of the Non-Existence of Cognitive Science,” according to which “[t]he more global (e.g., the more isotropic) a cognitive process is, the less anybody understands it” (Fodor, 1983, p. 107). His grounds for pessimism on this score are twofold. First, global systems are unlikely to be associated with local brain architecture, thereby rendering them unpromising objects of neuroscientific study:

We have seen that isotropic systems are unlikely to exhibit articulated neuroarchitecture. If, as seems plausible, neuroarchitecture is often a concomitant of constraints on information flow, then neural equipotentiality is what you would expect in systems in which every process has more or less uninhibited access to all the available data. The moral is that, to the extent that the existence of form/function correspondence is a precondition for successful neuropsychological research, there is not much to be expected in the way of a neuropsychology of thought (Fodor, 1983, p. 127).

Second, and more importantly, global processes are resistant to computational explanation, making them unpromising objects of psychological study:

The fact is that—considerations of their neural realization to one side—global systems are per se bad domains for computational models, at least of the sort that cognitive scientists are accustomed to employ. The condition for successful science (in physics, by the way, as well as psychology) is that nature should have joints to carve it at: relatively simple subsystems which can be artificially isolated and which behave, in isolation, in something like the way that they behave in situ. Modules satisfy this condition; Quinean/isotropic-wholistic-systems by definition do not. If, as I have supposed, the central cognitive processes are nonmodular, that is very bad news for cognitive science (Fodor, 1983, p. 128).

By Fodor’s lights, then, considerations that militate against high-level modularity also militate against the possibility of a robust science of higher cognition—not a happy result, as far as most cognitive scientists and philosophers of mind are concerned.

Gloomy implications aside, Fodor’s argument against high-level modularity is difficult to resist. The main sticking points are these: first, the negative correlation between globality and encapsulation; second, the positive correlation between encapsulation and modularity. Putting these points together, we get a negative correlation between globality and modularity: the more global the process, the less modular the system that executes it. As such, there seem to be only three ways to block the conclusion of the argument:

1. Deny that central processes are global.

2. Deny that globality and encapsulation are negatively correlated.

3. Deny that encapsulation and modularity are positively correlated.

Of these three options, the second seems least attractive, as it seems something like a conceptual truth that globality and encapsulation pull in opposite directions. The first option is slightly more appealing, but only slightly. The idea that central processes are relatively global, even if not as global as the process of confirmation in science suggests, is hard to deny. And that is all the argument really requires.

That leaves the third option: denying that modularity requires encapsulation. This is, in effect, the strategy pursued by Carruthers (2006). More specifically, Carruthers draws a distinction between two kinds of encapsulation: ‘narrow-scope’ and ‘wide-scope’. A system is narrow-scope encapsulated if it cannot draw on any information held outside of it in the course of its processing. This corresponds to encapsulation as Fodor uses the term. By contrast, a system that is wide-scope encapsulated can draw on exogenous information during the course of its operations—it just cannot draw on all of that information. (Compare: “No exogenous information is accessible” vs. “Some exogenous information is not accessible.”) This is encapsulation in a weaker sense of the term than Fodor’s. Indeed, Carruthers’s use of the term ‘encapsulation’ in this context is a bit misleading, insofar as wide-scope encapsulated systems count as unencapsulated in Fodor’s sense (Prinz, 2006).
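The contrast between the two readings is one of quantifier scope, and it can be written out explicitly. The predicate letters below are notation introduced here for illustration only: for a system s, E_s(x) says that x is information held outside s, and A_s(x) says that s can draw on x in the course of its processing.

```latex
\begin{align*}
\text{Narrow-scope encapsulation: } & \forall x\,\bigl(E_s(x) \rightarrow \neg A_s(x)\bigr) \\
\text{Wide-scope encapsulation: }   & \exists x\,\bigl(E_s(x) \wedge \neg A_s(x)\bigr)
\end{align*}
```

On this rendering, the wide-scope condition is compatible with the system’s drawing on some exogenous information, which is exactly what the narrow-scope condition rules out.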

Dropping the (narrow-scope) encapsulation requirement on modules raises a number of issues, not the least of which is that it reduces the power of modularity hypotheses to explain functional dissociations at the system level (Stokes & Bergeron, 2015). That said, if modularity requires only wide-scope encapsulation, then Fodor’s argument against central modularity no longer goes through. But given the importance of narrow-scope encapsulation to Fodorian modularity, all this shows is that central systems might be modular in a non-Fodorian way. The original argument that central systems are not Fodor-modular—and with it, the motivation for the negative strand of the modest modularity hypothesis—stands.

3. Post-Fodorian modularity

According to the massive modularity hypothesis, the mind is modular through and through, including the parts responsible for high-level cognitive functions like belief fixation, problem-solving, planning, and the like. Originally articulated and advocated by proponents of evolutionary psychology (Sperber, 1994, 2002; Cosmides & Tooby, 1992; Pinker, 1997; Barrett, 2005; Barrett & Kurzban, 2006), the hypothesis has received its most comprehensive and sophisticated defense at the hands of Carruthers (2006). Before proceeding to the details of that defense, however, we need to consider briefly what concept of modularity is in play.

The main thing to note here is that the operative notion of modularity differs significantly from the traditional Fodorian one. Carruthers is explicit on this point:

[If] a thesis of massive mental modularity is to be remotely plausible, then by ‘module’ we cannot mean ‘Fodor-module’. In particular, the properties of having proprietary transducers, shallow outputs, fast processing, significant innateness or innate channeling, and encapsulation will very likely have to be struck out. That leaves us with the idea that modules might be isolable function-specific processing systems, all or almost all of which are domain specific (in the content sense), whose operations aren’t subject to the will, which are associated with specific neural structures (albeit sometimes spatially dispersed ones), and whose internal operations may be inaccessible to the remainder of cognition. (Carruthers, 2006, p. 12)

Of the original set of nine features associated with Fodor-modules, then, Carruthers-modules retain at most five: dissociability, domain specificity, automaticity, neural localizability, and central inaccessibility. Conspicuously absent from the list is informational encapsulation, the feature most central to modularity in Fodor’s account. What’s more, Carruthers goes on to drop domain specificity, automaticity, and strong localizability (which rules out the sharing of parts between modules) from his initial list of five features, making his conception of modularity even more sparse (Carruthers, 2006, p. 62). Other proposals in the literature are similarly permissive in terms of the requirements a system must meet in order to count as modular (Coltheart, 1999; Barrett & Kurzban, 2006).

A second point, related to the first, is that defenders of massive modularity have chiefly been concerned to defend the modularity of central cognition, taking for granted that the mind is modular at the level of input systems. Thus, the hypothesis at issue for theorists like Carruthers might be best understood as the conjunction of two claims: first, that input systems are modular in a way that requires narrow-scope encapsulation; second, that central systems are modular, but only in a way that does not require this feature. In defending massive modularity, Carruthers focuses on the second of these claims, and so will we.

The centerpiece of Carruthers (2006) consists of three arguments for massive modularity: the Argument from Design, the Argument from Animals, and the Argument from Computational Tractability. Let’s briefly consider each of them in turn.

The Argument from Design is as follows:

- Biological systems are designed systems, constructed incrementally.

- Such systems, when complex, need to be organized in a pervasively modular way, that is, as a hierarchical assembly of separately modifiable, functionally autonomous components.

- The human mind is a biological system, and is complex.

- Therefore, the human mind is (probably) massively modular in its organization. (Carruthers, 2006, p. 25)

The crux of this argument is the idea that complex biological systems cannot evolve unless they are organized in a modular way, where modular organization entails that each component of the system (that is, each module) can be selected for change independently of the others. In other words, the evolvability of the system as a whole requires the independent evolvability of its parts. The problem with this assumption is twofold (Woodward & Cowie, 2004). First, not all biological traits are independently modifiable. Having two lungs, for example, is a trait that cannot be changed without changing other traits of an organism, because the genetic and developmental mechanisms underlying lung numerosity causally depend on the genetic and developmental mechanisms underlying bilateral symmetry. Second, there appear to be developmental constraints on neurogenesis which rule out changing the size of one brain area independently of the others. This in turn suggests that natural selection cannot modify cognitive traits in isolation from one another, given that evolving the neural circuitry for one cognitive trait is likely to result in changes to the neural circuitry for other traits.

A further worry about the Argument from Design concerns the gap between its conclusion (the claim that the mind is massively modular in organization) and the hypothesis at issue (the claim that the mind is massively modular simpliciter). The worry is this. According to Carruthers, the modularity of a system implies the possession of just two properties: functional dissociability and inaccessibility of processing to external monitoring. Suppose that a system is massively modular in organization. It follows from the definition of modular organization that the components of the system are functionally autonomous and separately modifiable. Though functional autonomy guarantees dissociability, it’s not clear why separate modifiability guarantees inaccessibility to external monitoring. According to Carruthers, the reason is that “if the internal operations of a system (e.g., the details of the algorithm being executed) were available elsewhere, then they couldn’t be altered without some corresponding alteration being made in the system to which they are accessible” (Carruthers, 2006, p. 61). But this is a questionable assumption. On the contrary, it seems plausible that the internal operations of one system could be accessible to a second system in virtue of a monitoring mechanism that functions the same way regardless of the details of the processing being monitored. At a minimum, the claim that separate modifiability entails inaccessibility to external monitoring calls for more justification than Carruthers offers.

In short, the Argument from Design is susceptible to a number of objections. Fortunately, there’s a slightly stronger argument in the vicinity of this one, due to Cosmides and Tooby (1992). It goes like this:

- The human mind is a product of natural selection.

- In order to survive and reproduce, our human ancestors had to solve a number of recurrent adaptive problems (finding food, shelter, mates, etc.).

- Since adaptive problems are solved more quickly, efficiently, and reliably by modular systems than by non-modular ones, natural selection would have favored the evolution of a massively modular architecture.

- Therefore, the human mind is (probably) massively modular.

The force of this argument depends chiefly on the strength of the third premise. Not everyone is convinced, to put it mildly (Fodor, 2000; Samuels, 2000; Woodward & Cowie, 2004). First, the premise exemplifies adaptationist reasoning, and adaptationism in the philosophy of biology has more than its share of critics. Second, it is doubtful whether adaptive problem-solving in general is easier to accomplish with a large collection of specialized problem-solving devices than with a smaller collection of general problem-solving devices with access to a library of specialized programs (Samuels, 2000). Hence, insofar as the massive modularity hypothesis postulates an architecture of the first sort—as evolutionary psychologists’ ‘Swiss Army knife’ metaphor of the mind implies (Cosmides & Tooby, 1992)—the premise seems shaky.

A related argument is the Argument from Animals. Unlike the Argument from Design, this argument is never explicitly stated in Carruthers (2006). But here is a plausible reconstruction of it, due to Wilson (2008):

- Animal minds are massively modular.

- Human minds are incremental extensions of animal minds.

- Therefore, the human mind is (probably) massively modular.

Unfortunately for friends of massive modularity, this argument, like the Argument from Design, is vulnerable to a number of objections (Wilson, 2008). We’ll mention two of them here. First, it’s not easy to motivate the claim that animal minds are massively modular in the operative sense. Though Carruthers (2006) goes to heroic lengths to do so, the evidence he cites (e.g., for the domain specificity of animal learning mechanisms, à la Gallistel, 1990) adds up to less than what’s needed. The problem is that domain specificity is not sufficient for Carruthers-style modularity; indeed, it is not even one of the central characteristics of modularity in Carruthers’ account. So the argument falters at the first step. Second, even if animal minds are massively modular, and even if single incremental extensions of the animal mind preserve that feature, it’s quite possible that a series of such extensions of animal minds might have led to its loss. In other words, as Wilson (2008) puts it, it can’t be assumed that the conservation of massive modularity is transitive. And without this assumption, the Argument from Animals can’t go through.

Finally, we have the Argument from Computational Tractability (Carruthers, 2006, pp. 44–59). For the purposes of this argument, we assume that a mental process is computationally tractable if it can be specified at the algorithmic level in such a way that the execution of the process is feasible given time, energy, and other resource constraints on human cognition (Samuels, 2005). We also assume that a system is encapsulated if in the course of its operations the system lacks access to at least some information exogenous to it. Given these assumptions, the argument runs as follows:

- The mind is computationally realized.

- All computational mental processes must be tractable.

- Tractable processing is possible only in encapsulated systems.

- Hence, the mind must consist entirely of encapsulated systems.

- Hence, the mind is (probably) massively modular.

There are two problems with this argument, however. The first problem has to do with the third premise, which states that tractability requires encapsulation, that is, the inaccessibility of at least some exogenous information to processing. What tractability actually requires is something weaker, namely, that not all information is accessed by the mechanism in the course of its operations (Samuels, 2005). In other words, it is possible for a system to have unlimited access to a database without actually accessing all of its contents. Though tractable computation rules out exhaustive search, for example, unencapsulated mechanisms need not engage in exhaustive search, so tractability does not require encapsulation. The second problem with the argument concerns the last step. Though one might reasonably suppose that modular systems must be encapsulated, the converse doesn’t follow. Indeed, Carruthers (2006) makes no mention of encapsulation in his characterization of modularity, so it’s unclear how one is supposed to get from a claim about pervasive encapsulation to a claim about pervasive modularity.
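The distinction between having access to a database and actually accessing all of it can be made concrete with a toy sketch. Everything in it is an illustrative assumption rather than anything proposed by Samuels or Carruthers: the class `BeliefStore`, the functions `exhaustive_query` and `cued_query`, and the example facts are all hypothetical. Neither query is encapsulated, since both may read any fact in the store, yet only one of them consults every fact.

```python
from collections import defaultdict


class BeliefStore:
    """A toy database that any mechanism may read in full (no encapsulation)."""

    def __init__(self, facts):
        self.facts = list(facts)            # every (topic, content) pair
        self.index = defaultdict(list)      # topic -> positions in self.facts
        for pos, (topic, _) in enumerate(self.facts):
            self.index[topic].append(pos)
        self.reads = 0                      # number of facts actually consulted

    def read(self, pos):
        self.reads += 1
        return self.facts[pos]


def exhaustive_query(store, topic):
    """Consult every fact in the store and keep the relevant ones."""
    hits = []
    for pos in range(len(store.facts)):
        fact_topic, content = store.read(pos)
        if fact_topic == topic:
            hits.append(content)
    return hits


def cued_query(store, topic):
    """Consult only the facts the topic cue points to; nothing is off limits."""
    return [store.read(pos)[1] for pos in store.index.get(topic, [])]


# Hypothetical contents: three meaningful facts plus 997 fillers.
facts = [("food", "berries are edible"),
         ("shelter", "caves stay dry"),
         ("food", "red mushrooms are toxic")]
facts += [("misc", f"filler fact {i}") for i in range(997)]

store = BeliefStore(facts)
exhaustive_result = exhaustive_query(store, "food")
exhaustive_reads = store.reads

store.reads = 0
cued_result = cued_query(store, "food")
cued_reads = store.reads

print(exhaustive_reads, cued_reads)  # 1000 reads versus 2
```

Both queries return the same answers, but the cued query touches only two facts. The sketch does assume that the store is already organized by topic (building the index reads each fact once), so it illustrates the logical point only: limiting what is consulted does not require limiting what is accessible.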

All in all, then, compelling general arguments for massive modularity are hard to come by. This is not yet to dismiss the possibility of modularity in high-level cognition, but it invites skepticism, especially given the paucity of empirical evidence directly supporting the hypothesis (Robbins, 2013). For example, it has been suggested that the capacity to think about social exchanges is subserved by a domain-specific, functionally dissociable, and innate mechanism (Stone et al., 2002; Sugiyama et al., 2002). However, it appears that deficits in social exchange reasoning do not occur in isolation, but are accompanied by other social-cognitive impairments (Prinz, 2006). Skepticism about modularity in other areas of central cognition, such as high-level mindreading, also seems to be the order of the day (Currie & Sterelny, 2000). The type of mindreading impairments characteristic of Asperger syndrome and high-functioning autism, for example, co-occur with sensory processing and executive function deficits (Frith, 2003). In general, there is little in the way of neuropsychological evidence to support the idea of high-level modularity.

Just as there are general theoretical arguments for massive modularity, there are general theoretical arguments against it. One argument takes the form of what Fodor (2000) calls the ‘Input Problem’. The problem is this. Suppose that the architecture of the mind is modular from top to bottom, and the mind consists entirely of domain-specific mechanisms. In that case, the outputs of each low-level (input) system will need to be routed to the appropriately specialized high-level (central) system for processing. But that routing can only be accomplished by a domain-general, non-modular mechanism—contradicting the initial supposition. In response to this problem, Barrett (2005) argues that processing in a massively modular architecture does not require a domain-general routing device of the sort envisaged by Fodor. An alternative solution, Barrett suggests, involves what he calls ‘enzymatic computation’. In this model, low-level systems pool their outputs together in a centrally accessible workspace where each central system is selectively activated by outputs that match its domain, in much the same way that enzymes selectively bind with substrates that match their specific templates. Like enzymes, specialized computational devices at the central level of the architecture accept a restricted range of inputs (analogous to biochemical substrates), perform specialized operations on that input (analogous to biochemical reactions), and produce outputs in a format useable by other computational devices (analogous to biochemical products). This obviates the need for a domain-general (hence, non-modular) mechanism to mediate between low-level and high-level systems.
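A minimal sketch may help to show how selective activation can stand in for routing. It is not Barrett's model, only an illustration of the general idea under simplifying assumptions; the names `Representation`, `CentralSystem`, `run_cycle`, and the example domains are hypothetical. Each central system carries its own template and checks the shared workspace itself, so no component decides where a given output should be sent.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Representation:
    domain: str      # the kind of content (plays the role of a substrate's shape)
    content: str


@dataclass
class CentralSystem:
    name: str
    template: Callable[[Representation], bool]            # what this system binds to
    operation: Callable[[Representation], Representation]  # what it does once bound

    def scan(self, workspace):
        """Bind to matching items in the workspace and return the products."""
        return [self.operation(r) for r in workspace if self.template(r)]


def run_cycle(workspace, systems):
    """Let every system scan the same pooled workspace.

    The loop is simulation scaffolding only: conceptually the systems scan
    in parallel, and nothing here assigns items to systems.
    """
    products = []
    for system in systems:
        products.extend(system.scan(workspace))
    return products


# Hypothetical outputs of low-level systems, pooled in one workspace.
workspace = [
    Representation("face", "two eyes above a mouth"),
    Representation("social-exchange", "A took the benefit without paying"),
    Representation("terrain", "steep slope ahead"),
]

systems = [
    CentralSystem(
        "face recognition",
        template=lambda r: r.domain == "face",
        operation=lambda r: Representation("identity", "familiar face: " + r.content),
    ),
    CentralSystem(
        "cheater detection",
        template=lambda r: r.domain == "social-exchange",
        operation=lambda r: Representation("social-judgment", "possible cheater: " + r.content),
    ),
]

products = run_cycle(workspace, systems)
for p in products:
    print(p.domain, "|", p.content)
```

The terrain representation is left untouched because no system's template matches it, which mirrors the way a substrate without a matching enzyme goes unprocessed. Whether templates this simple could scale to realistic cognitive domains is a further question that the sketch does not address.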

A second challenge to massive modularity is posed by the ‘Domain Integration Problem’ (Carruthers, 2006). The problem here is that reasoning, planning, decision making, and other types of high-level cognition routinely involve the production of conceptually structured representations whose content crosses domains. This means that there must be some mechanism for integrating representations from multiple domains. But such a mechanism would be domain general rather than domain specific, and hence, non-modular. Like the Input Problem, however, the Domain Integration Problem is not insurmountable. One possible solution is that the language system has the capacity to play the role of content integrator in virtue of its capacity to transform conceptual representations that have been linguistically encoded (Hermer & Spelke, 1996; Carruthers, 2002, 2006). On this view, language is the vehicle of domain-general thought.

Empirical objections to massive modularity take a variety of forms. To start with, there is neurobiological evidence of developmental plasticity, a phenomenon that tells against the idea that brain structure is innately specified (Buller, 2005; Buller & Hardcastle, 2000). However, not all proponents of massive modularity insist that modules are innately specified (Carruthers, 2006; Kurzban, Tooby, & Cosmides, 2001). Furthermore, it’s unclear to what extent the neurobiological record is at odds with nativism, given the evidence that specific genes are linked to the normal development of cortical structures in both humans and animals (Machery & Barrett, 2008; Ramus, 2006).

Another source of evidence against massive modularity comes from research on individual differences in high-level cognition (Rabaglia, Marcus, & Lane, 2011). Such differences tend to be strongly positively correlated across domains—a phenomenon known as the ‘positive manifold’—suggesting that high-level cognitive abilities are subserved by a domain-general mechanism, rather than by a suite of specialized modules. There is, however, an alternative explanation of the positive manifold. Since post-Fodorian modules are allowed to share parts (Carruthers, 2006), the correlations observed may stem from individual differences in the functioning of components spanning multiple domain-specific mechanisms.
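The alternative explanation can be illustrated with a small simulation. This is a sketch under strong simplifying assumptions, not a model drawn from the literature: each simulated person's score in a domain is simply the sum of one component shared across two mechanisms and one component specific to each mechanism, all drawn independently from a standard normal distribution. The variable names and the two domains are hypothetical.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


rng = random.Random(0)   # fixed seed so the run is repeatable
n_people = 5000

# Assumption: one part is shared by both mechanisms; the rest is domain specific.
shared_part = [rng.gauss(0, 1) for _ in range(n_people)]
spatial_part = [rng.gauss(0, 1) for _ in range(n_people)]
social_part = [rng.gauss(0, 1) for _ in range(n_people)]

spatial_score = [s + d for s, d in zip(shared_part, spatial_part)]
social_score = [s + d for s, d in zip(shared_part, social_part)]

r_with_shared_part = pearson(spatial_score, social_score)
r_specific_parts_only = pearson(spatial_part, social_part)

print(round(r_with_shared_part, 2), round(r_specific_parts_only, 2))
```

Under these assumptions the expected correlation between the two scores is 0.5, since their covariance equals the variance of the shared part (1) and each score has variance 2. The domain-specific parts on their own are uncorrelated in expectation. A positive correlation across domains is therefore compatible with two distinct mechanisms that happen to share a component, which is all the alternative explanation requires.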