
Chemistry Problems With Answers


Use chemistry problems as a tool for mastering chemistry concepts. Some of these examples show how to use formulas, while others are lists of examples.

Acids, Bases, and pH Chemistry Problems

Learn about acids and bases. See how to calculate pH, pOH, Ka, Kb, pKa, and pKb.

- Practice calculating pH.

- Get example pH, pKa, pKb, Ka, and Kb calculations.

- Get examples of amphoterism.

Atomic Structure Problems

Learn about atomic mass, the Bohr model, and the parts of the atom.

- Practice identifying atomic number, mass number, and atomic mass.

- Get examples showing ways to find atomic mass.

- Use Avogadro’s number to find the mass of a single atom.

- Review the Bohr model of the atom.

- Find the number of valence electrons of an element’s atom.

Chemical Bonds

Learn how to use electronegativity to determine whether atoms form ionic or covalent bonds. See chemistry problems drawing Lewis structures.

- Identify ionic and covalent bonds.

- Learn about ionic compounds and get examples.

- Practice identifying ionic compounds.

- Get examples of binary compounds.

- Learn about covalent compounds and their properties.

- See how to assign oxidation numbers.

- Practice drawing Lewis structures.

- Practice calculating bond energy.

Chemical Equations

Practice writing and balancing chemical equations.

- Learn the steps of balancing equations.

- Practice balancing chemical equations (practice quiz).

- Get examples finding theoretical yield.

- Practice calculating percent yield.

- Learn to recognize decomposition reactions.

- Practice recognizing synthesis reactions.

- Practice recognizing single replacement reactions.

- Recognize double replacement reactions.

- Find the mole ratio between chemical species in an equation.

Concentration and Solutions

Learn how to calculate concentration and explore chemistry problems involving properties that depend on concentration, including freezing point depression, boiling point elevation, and vapor pressure lowering.

- Get example concentration calculations in several units.

- Practice calculating normality (N).

- Practice calculating molality (m).

- Explore example molarity (M) calculations.

- Get examples of colligative properties of solutions.

- See the definition and examples of saturated solutions.

- See the definition and examples of unsaturated solutions.

- Get examples of miscible and immiscible liquids.

Error Calculations

Learn about the types of error and see worked chemistry example problems.

- See how to calculate percent.

- Practice absolute and relative error calculations.

- See how to calculate percent error.

- See how to find standard deviation.

- Calculate mean, median, and mode.

- Review the difference between accuracy and precision.

Equilibrium Chemistry Problems

Learn about Le Chatelier’s principle, reaction rates, and equilibrium.

- Solve activation energy chemistry problems.

- Review factors that affect reaction rate.

- Practice calculating the van’t Hoff factor.

Gas Laws

Practice chemistry problems using the gas laws, including Raoult’s law, Graham’s law, Boyle’s law, Charles’ law, and Dalton’s law of partial pressures.

- Calculate vapor pressure.

- Solve Avogadro’s law problems.

- Practice Boyle’s law problems.

- See Charles’ law example problems.

- Solve combined gas law problems.

- Solve Gay-Lussac’s law problems.

Some chemistry problems ask you to identify examples of states of matter and types of mixtures. While these problems involve few formulas, it’s still nice to have lists of examples.

- Practice density calculations.

- Identify intensive and extensive properties of matter.

- See examples of intrinsic and extrinsic properties of matter.

- Get the definition and examples of solids.

- Get the definition and examples of gases.

- See the definition and examples of liquids.

- Learn what melting point is and get a list of values for different substances.

- Get the azeotrope definition and see examples.

- See how to calculate specific volume of a gas.

- Get examples of physical properties of matter.

- Get examples of chemical properties of matter.

- Review the states of matter.

Molecular Structure Chemistry Problems

See chemistry problems involving writing chemical formulas. See examples of monatomic and diatomic elements.

- Practice empirical and molecular formula problems.

- Practice simplest formula problems.

- See how to calculate molecular mass.

- Get examples of the monatomic elements.

- See examples of binary compounds.

- Calculate the number of atoms and molecules in a drop of water.

Nomenclature

Practice chemistry problems naming ionic compounds, hydrocarbons, and covalent compounds.

- Practice naming covalent compounds.

- Learn hydrocarbon prefixes in organic chemistry.

Nuclear Chemistry

These chemistry problems involve isotopes, nuclear symbols, half-life, radioactive decay, fission, and fusion.

- Review the types of radioactive decay.

Periodic Table

Learn how to use a periodic table and explore periodic table trends.

- Know the trends in the periodic table.

- Review how to use a periodic table.

- Explore the difference between atomic and ionic radius and see their trends on the periodic table.

Physical Chemistry

Explore thermochemistry and physical chemistry, including enthalpy, entropy, heat of fusion, and heat of vaporization.

- Practice heat of vaporization chemistry problems.

- Practice heat of fusion chemistry problems.

- Calculate heat required to turn ice into steam.

- Practice calculating specific heat.

- Get examples of potential energy.

- Get examples of kinetic energy.

- See example activation energy calculations.

Spectroscopy and Quantum Chemistry Problems

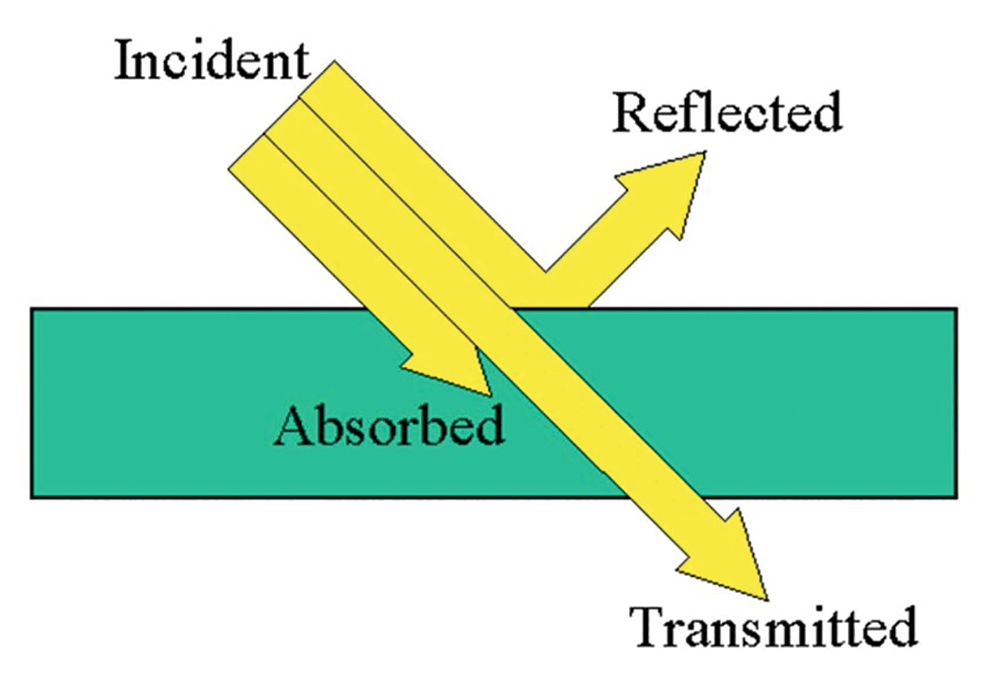

See chemistry problems involving the interaction between light and matter.

- Calculate wavelength from frequency or frequency from wavelength.

Stoichiometry Chemistry Problems

Practice chemistry problems balancing equations for mass and charge. Learn about reactants and products.

- Get example mole ratio problems.

- Calculate percent yield.

- Learn how to assign oxidation numbers.

- Get the definition and examples of reactants in chemistry.

- Get the definition and examples of products in chemical reactions.

Unit Conversions

There are so many examples of unit conversions that they have their own separate page!

Physics Problems with Solutions

Physics problems with detailed solutions and thorough explanations are presented, along with physics formulas.

- Electrostatic Problems with Solutions and Explanations

- Gravity Problems with Solutions and Explanations

- Projectile Problems with Solutions and Explanations

- Velocity and Speed: Problems

- Uniform Acceleration Motion: Problems

- Free Physics SAT and AP Practice Test Questions

Physics Formulas and Constants

- Physics Formulas Reference

- SI Prefixes Used with Units in Physics, Chemistry and Engineering

- Constants in Physics, Chemistry and Engineering


The 7 biggest problems facing science, according to 270 scientists


"Science, I had come to learn, is as political, competitive, and fierce a career as you can find, full of the temptation to find easy paths." — Paul Kalanithi, neurosurgeon and writer (1977–2015)

Science is in big trouble. Or so we’re told.

In the past several years, many scientists have become afflicted with a serious case of doubt — doubt in the very institution of science.

Explore the biggest challenges facing science, and how we can fix them:

- Academia has a huge money problem

- Too many studies are poorly designed

- Replicating results is crucial — and rare

- Peer review is broken

- Too much science is locked behind paywalls

- Science is poorly communicated

- Life as a young academic is incredibly stressful

Conclusion:

- Science is not doomed

As reporters covering medicine, psychology, climate change, and other areas of research, we wanted to understand this epidemic of doubt. So we sent scientists a survey asking this simple question: If you could change one thing about how science works today, what would it be and why?

We heard back from 270 scientists all over the world, including graduate students, senior professors, laboratory heads, and Fields Medalists. They told us that, in a variety of ways, their careers are being hijacked by perverse incentives. The result is bad science.

The scientific process, in its ideal form, is elegant: Ask a question, set up an objective test, and get an answer. Repeat. Science is rarely practiced to that ideal. But Copernicus believed in that ideal. So did the rocket scientists behind the moon landing.

But nowadays, our respondents told us, the process is riddled with conflict. Scientists say they’re forced to prioritize self-preservation over pursuing the best questions and uncovering meaningful truths.

"I feel torn between asking questions that I know will lead to statistical significance and asking questions that matter," says Kathryn Bradshaw, a 27-year-old graduate student of counseling at the University of North Dakota.

Today, scientists' success often isn't measured by the quality of their questions or the rigor of their methods. It's instead measured by how much grant money they win, the number of studies they publish, and how they spin their findings to appeal to the public.

Scientists often learn more from studies that fail. But failed studies can mean career death. So instead, they’re incentivized to generate positive results they can publish. And the phrase "publish or perish" hangs over nearly every decision. It’s a nagging whisper, like a Jedi’s path to the dark side.

"Over time the most successful people will be those who can best exploit the system," Paul Smaldino, a cognitive science professor at University of California Merced, says.

To Smaldino, the selection pressures in science have favored less-than-ideal research: "As long as things like publication quantity, and publishing flashy results in fancy journals are incentivized, and people who can do that are rewarded … they’ll be successful, and pass on their successful methods to others."

Many scientists have had enough. They want to break this cycle of perverse incentives and rewards. They are going through a period of introspection, hopeful that the end result will yield stronger scientific institutions. In our survey and interviews, they offered a wide variety of ideas for improving the scientific process and bringing it closer to its ideal form.

Before we jump in, some caveats to keep in mind: Our survey was not a scientific poll. For one, the respondents disproportionately hailed from the biomedical and social sciences and English-speaking communities.

Many of the responses did, however, vividly illustrate the challenges and perverse incentives that scientists across fields face. And they are a valuable starting point for a deeper look at dysfunction in science today.

The place to begin is right where the perverse incentives first start to creep in: the money.


(1) Academia has a huge money problem

To do most any kind of research, scientists need money: to run studies, to subsidize lab equipment, to pay their assistants and even their own salaries. Our respondents told us that getting — and sustaining — that funding is a perennial obstacle.

Their gripe isn’t just with the quantity, which, in many fields, is shrinking. It’s the way money is handed out that puts pressure on labs to publish a lot of papers, breeds conflicts of interest, and encourages scientists to overhype their work.

In the United States, academic researchers in the sciences generally cannot rely on university funding alone to pay for their salaries, assistants, and lab costs. Instead, they have to seek outside grants. "In many cases the expectations were and often still are that faculty should cover at least 75 percent of the salary on grants," writes John Chatham, a professor of medicine studying cardiovascular disease at the University of Alabama at Birmingham.

Grants also usually expire after three or so years, which pushes scientists away from long-term projects. Yet as John Pooley, a neurobiology postdoc at the University of Bristol, points out, the biggest discoveries usually take decades to uncover and are unlikely to occur under short-term funding schemes.

Outside grants are also in increasingly short supply. In the US, the largest source of funding is the federal government, and that pool of money has been plateauing for years, while young scientists enter the workforce at a faster rate than older scientists retire.


Take the National Institutes of Health, a major funding source. Its budget rose at a fast clip through the 1990s, stalled in the 2000s, and then dipped with sequestration budget cuts in 2013. All the while, rising costs for conducting science meant that each NIH dollar purchased less and less. Last year, Congress approved the biggest NIH spending hike in a decade. But it won’t erase the shortfall.

The consequences are striking: In 2000, more than 30 percent of NIH grant applications got approved. Today, it’s closer to 17 percent. "It's because of what's happened in the last 12 years that young scientists in particular are feeling such a squeeze," NIH Director Francis Collins said at the Milken Global Conference in May.


Truly novel research takes longer to produce, and it doesn’t always pay off. A National Bureau of Economic Research working paper found that, on the whole, truly unconventional papers tend to be less consistently cited in the literature. So scientists and funders increasingly shy away from them, preferring short-turnaround, safer papers. But everyone suffers from that: the NBER report found that novel papers also occasionally lead to big hits that inspire high-impact, follow-up studies.

"I think because you have to publish to keep your job and keep funding agencies happy, there are a lot of (mediocre) scientific papers out there ... with not much new science presented," writes Kaitlyn Suski, a chemistry and atmospheric science postdoc at Colorado State University.

Another worry: When independent, government, or university funding sources dry up, scientists may feel compelled to turn to industry or interest groups eager to generate studies to support their agendas.

Finally, all of this grant writing is a huge time suck, taking resources away from the actual scientific work. Tyler Josephson, an engineering graduate student at the University of Delaware, writes that many professors he knows spend 50 percent of their time writing grant proposals. "Imagine," he asks, "what they could do with more time to devote to teaching and research?"

It’s easy to see how these problems in funding kick off a vicious cycle. To be more competitive for grants, scientists have to have published work. To have published work, they need positive (i.e., statistically significant) results. That puts pressure on scientists to pick "safe" topics that will yield a publishable conclusion or, worse, it may bias their research toward significant results.

"When funding and pay structures are stacked against academic scientists," writes Alison Bernstein, a neuroscience postdoc at Emory University, "these problems are all exacerbated."

Fixes for science's funding woes

Right now there are arguably too many researchers chasing too few grants. Or, as a 2014 piece in the Proceedings of the National Academy of Sciences put it: "The current system is in perpetual disequilibrium, because it will inevitably generate an ever-increasing supply of scientists vying for a finite set of research resources and employment opportunities."

"As it stands, too much of the research funding is going to too few of the researchers," writes Gordon Pennycook, a PhD candidate in cognitive psychology at the University of Waterloo. "This creates a culture that rewards fast, sexy (and probably wrong) results."

One straightforward way to ameliorate these problems would be for governments to simply increase the amount of money available for science. (Or, more controversially, decrease the number of PhDs, but we’ll get to that later.) If Congress boosted funding for the NIH and National Science Foundation, that would take some of the competitive pressure off researchers.

But that only goes so far. Funding will always be finite, and researchers will never get blank checks to fund the risky science projects of their dreams. So other reforms will also prove necessary.

One suggestion: Bring more stability and predictability into the funding process. "The NIH and NSF budgets are subject to changing congressional whims that make it impossible for agencies (and researchers) to make long term plans and commitments," M. Paul Murphy, a neurobiology professor at the University of Kentucky, writes. "The obvious solution is to simply make [scientific funding] a stable program, with an annual rate of increase tied in some manner to inflation."

Another idea would be to change how grants are awarded: Foundations and agencies could fund specific people and labs for a period of time rather than individual project proposals. (The Howard Hughes Medical Institute already does this.) A system like this would give scientists greater freedom to take risks with their work.

Alternatively, researchers in the journal mBio recently called for a lottery-style system. Proposals would be measured on their merits, but then a computer would randomly choose which get funded.
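The following minimal Python sketch roughly illustrates how a two-stage scheme like this could work. It is not the mBio authors' specification. The `Proposal` class, the 0-10 merit scale, the `merit_threshold` cutoff, and the example scores are all hypothetical. Reviewers' scores decide which proposals are eligible, and a random draw then picks the winners from among them.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Proposal:
    title: str
    merit_score: float  # hypothetical peer-review score on a 0-10 scale


def fund_by_lottery(proposals, merit_threshold, n_awards, seed=None):
    """Two-stage allocation: screen proposals on merit, then draw winners at random.

    The threshold and scoring scale are illustrative assumptions only.
    """
    # Stage 1: reviewers' judgment decides who is eligible.
    eligible = [p for p in proposals if p.merit_score >= merit_threshold]
    # If the budget covers every eligible proposal, no draw is needed.
    if len(eligible) <= n_awards:
        return eligible
    # Stage 2: uniform random draw without replacement among eligible proposals.
    rng = random.Random(seed)
    return rng.sample(eligible, n_awards)


if __name__ == "__main__":
    scores = [9.1, 7.4, 8.8, 5.2, 8.0, 6.9]  # hypothetical scores
    pool = [Proposal(f"Proposal {i}", s) for i, s in enumerate(scores)]
    winners = fund_by_lottery(pool, merit_threshold=7.0, n_awards=2, seed=1)
    for w in winners:
        print(w.title, w.merit_score)
```

Passing a fixed `seed` makes a draw reproducible. Omitting it gives a fresh random draw each time.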

"Although we recognize that some scientists will cringe at the thought of allocating funds by lottery," the authors of the mBio piece write, "the available evidence suggests that the system is already in essence a lottery without the benefits of being random." Pure randomness would at least reduce some of the perverse incentives at play in jockeying for money.

There are also some ideas out there to minimize conflicts of interest from industry funding. Recently, in PLOS Medicine, Stanford epidemiologist John Ioannidis suggested that pharmaceutical companies ought to pool the money they use to fund drug research, to be allocated to scientists who then have no exchange with industry during study design and execution. This way, scientists could still get funding for work crucial for drug approvals, but without the pressures that can skew results.

These solutions are by no means complete, and they may not make sense for every scientific discipline. The daily incentives facing biomedical scientists to bring new drugs to market are different from the incentives facing geologists trying to map out new rock layers. But based on our survey, funding appears to be at the root of many of the problems facing scientists, and it’s one that deserves more careful discussion.


(2) Too many studies are poorly designed. Blame bad incentives.

Scientists are ultimately judged by the research they publish. And the pressure to publish pushes scientists to come up with splashy results, of the sort that get them into prestigious journals. "Exciting, novel results are more publishable than other kinds," says Brian Nosek, who co-founded the Center for Open Science at the University of Virginia.

The problem here is that truly groundbreaking findings simply don’t occur very often, which means scientists face pressure to game their studies so they turn out to be a little more "revolutionary." (Caveat: Many of the respondents who focused on this particular issue hailed from the biomedical and social sciences.)

Some of this bias can creep into decisions that are made early on: choosing whether or not to randomize participants, including a control group for comparison, or controlling for certain confounding factors but not others.

Many of our survey respondents noted that perverse incentives can also push scientists to cut corners in how they analyze their data.

"I have incredible amounts of stress that maybe once I finish analyzing the data, it will not look significant enough for me to defend," writes Jess Kautz, a PhD student at the University of Arizona. "And if I get back mediocre results, there's going to be incredible pressure to present it as a good result so they can get me out the door. At this moment, with all this in my mind, it is making me wonder whether I could give an intellectually honest assessment of my own work."

Increasingly, meta-researchers (who conduct research on research) are realizing that scientists often do find little ways to hype up their own results — and they’re not always doing it consciously. Among the most famous examples is a technique called "p-hacking," in which researchers test their data against many hypotheses and only report those that have statistically significant results.
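The following small Python simulation makes the mechanism concrete. It is a sketch under stated assumptions, not anything taken from the studies discussed here. It models a hypothetical study that compares two groups on 20 outcome measures, where there is no true effect on any of them. Each outcome is checked with a simple two-sample z-test, assuming a known standard deviation, and only outcomes with p < 0.05 are "reported." Because each test has a 5 percent false-positive rate, the chance that at least one of 20 independent tests comes out "significant" is 1 − 0.95^20, or about 64 percent.

```python
import math
import random


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a standard normal statistic: 2 * (1 - Phi(|z|))."""
    return math.erfc(abs(z) / math.sqrt(2))


def z_test(a: list[float], b: list[float], sigma: float = 1.0) -> float:
    """Two-sample z-test for a difference in means, assuming a known sd sigma."""
    se = sigma * math.sqrt(1 / len(a) + 1 / len(b))
    z = (sum(a) / len(a) - sum(b) / len(b)) / se
    return two_sided_p(z)


def p_hacked_study(rng: random.Random, n_outcomes: int = 20,
                   n_per_group: int = 20, alpha: float = 0.05) -> list[tuple[int, float]]:
    """Simulate one hypothetical study in which NO outcome has a true effect.

    Every outcome is tested, but only the 'significant' ones are reported.
    Returns the (outcome_index, p_value) pairs that would make it into a paper.
    """
    reported = []
    for k in range(n_outcomes):
        # Both groups come from the same distribution, so the null hypothesis is true.
        treatment = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        p = z_test(treatment, control)
        if p < alpha:
            reported.append((k, p))  # the selective-reporting step
    return reported


if __name__ == "__main__":
    rng = random.Random(42)
    studies = [p_hacked_study(rng) for _ in range(500)]
    share = sum(1 for s in studies if s) / len(studies)
    print(f"Simulated studies with at least one 'significant' result: {share:.0%}")
    print(f"Expected by chance alone: {1 - 0.95 ** 20:.0%}")
```

The simulated share should land near that 64 percent figure, even though every difference in the simulated data is pure noise.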

In a recent study, which tracked the misuse of p-values in biomedical journals, meta-researchers found "an epidemic" of statistical significance: 96 percent of the papers that included a p-value in their abstracts boasted statistically significant results.

That seems awfully suspicious. It suggests the biomedical community has been chasing statistical significance, potentially giving dubious results the appearance of validity through techniques like p-hacking, or simply suppressing important results that don't look significant enough. Fewer studies share effect sizes (which arguably give a better indication of how meaningful a result might be) or discuss measures of uncertainty.
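A few lines of Python can also illustrate the gap between statistical significance and effect size. The example is illustrative only. It uses hypothetical data in which a treatment shifts an outcome by just 0.08 standard deviations, with 20,000 participants per group. With a sample that large, a normal-approximation test returns a very small p-value. Cohen's d (the difference in means divided by the pooled standard deviation) and the 95 percent confidence interval, by contrast, show how small the effect actually is.

```python
import math
import random
import statistics


def cohens_d(a: list[float], b: list[float]) -> float:
    """Standardized mean difference using the pooled sample standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    return (statistics.fmean(a) - statistics.fmean(b)) / math.sqrt(pooled_var)


def large_sample_summary(a: list[float], b: list[float]):
    """Mean difference, 95% CI, and two-sided p-value via a normal approximation."""
    diff = statistics.fmean(a) - statistics.fmean(b)
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    p = math.erfc(abs(diff / se) / math.sqrt(2))
    ci = (diff - 1.96 * se, diff + 1.96 * se)
    return diff, ci, p


if __name__ == "__main__":
    rng = random.Random(7)
    n = 20_000
    # Hypothetical data: the true shift is only 0.08 standard deviations.
    treated = [rng.gauss(0.08, 1.0) for _ in range(n)]
    control = [rng.gauss(0.0, 1.0) for _ in range(n)]
    diff, (lo, hi), p = large_sample_summary(treated, control)
    print(f"p-value: {p:.1e}")
    print(f"Cohen's d: {cohens_d(treated, control):.3f}")
    print(f"95% CI for the mean difference: [{lo:.3f}, {hi:.3f}]")
```

Here the p-value alone would suggest a strong finding. A d of roughly 0.08, however, falls well below the 0.2 conventionally labeled a "small" effect.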

"The current system has done too much to reward results," says Joseph Hilgard, a postdoctoral research fellow at the Annenberg Public Policy Center. "This causes a conflict of interest: The scientist is in charge of evaluating the hypothesis, but the scientist also desperately wants the hypothesis to be true."

The consequences are staggering. An estimated $200 billion — or the equivalent of 85 percent of global spending on research — is routinely wasted on poorly designed and redundant studies, according to meta-researchers who have analyzed inefficiencies in research. We know that as much as 30 percent of the most influential original medical research papers later turn out to be wrong or exaggerated.

Fixes for poor study design

Our respondents suggested that the two key ways to encourage stronger study design — and discourage positive results chasing — would involve rethinking the rewards system and building more transparency into the research process.

"I would make rewards based on the rigor of the research methods, rather than the outcome of the research," writes Simine Vazire, a journal editor and a social psychology professor at UC Davis. "Grants, publications, jobs, awards, and even media coverage should be based more on how good the study design and methods were, rather than whether the result was significant or surprising."

Likewise, Cambridge mathematician Tim Gowers argues that researchers should get recognition for advancing science broadly through informal idea sharing — rather than only getting credit for what they publish.

"We’ve gotten used to working away in private and then producing a sort of polished document in the form of a journal article," Gowers said. "This tends to hide a lot of the thought process that went into making the discoveries. I'd like attitudes to change so people focus less on the race to be first to prove a particular theorem, or in science to make a particular discovery, and more on other ways of contributing to the furthering of the subject."

When it comes to published results, meanwhile, many of our respondents wanted to see more journals put a greater emphasis on rigorous methods and processes rather than splashy results.

"I think the one thing that would have the biggest impact is removing publication bias: judging papers by the quality of questions, quality of method, and soundness of analyses, but not on the results themselves," writes Michael Inzlicht, a University of Toronto psychology and neuroscience professor.

Some journals are already embracing this sort of research. PLOS One, for example, makes a point of accepting negative studies (in which a scientist conducts a careful experiment and finds nothing) for publication, as does the aptly named Journal of Negative Results in Biomedicine.

More transparency would also help, writes Daniel Simons, a professor of psychology at the University of Illinois. Here’s one example: ClinicalTrials.gov, a site run by the NIH, allows researchers to register their study design and methods ahead of time and then publicly record their progress. That makes it more difficult for scientists to hide experiments that didn’t produce the results they wanted. (The site now holds information for more than 180,000 studies in 180 countries.)

Similarly, the AllTrials campaign is pushing for every clinical trial (past, present, and future) around the world to be registered, with the full methods and results reported. Some drug companies and universities have created portals that allow researchers to access raw data from their trials.

The key is for this sort of transparency to become the norm rather than a laudable outlier.

(3) Replicating results is crucial. But scientists rarely do it.

Replication is another foundational concept in science. Researchers take an older study that they want to test and then try to reproduce it to see if the findings hold up.

Testing, validating, retesting: it's all part of a slow and grinding process to arrive at some semblance of scientific truth. But this doesn't happen as often as it should, our respondents said. Scientists face few incentives to engage in the slog of replication. And even when they attempt to replicate a study, they often find they can’t do so. Increasingly it’s being called a "crisis of irreproducibility."

The stats bear this out: A 2015 study looked at 83 highly cited studies that claimed to feature effective psychiatric treatments. Only 16 had ever been successfully replicated. Another 16 were contradicted by follow-up attempts, and 11 were found to have substantially smaller effects the second time around. Meanwhile, nearly half of the studies (40) had never been subject to replication at all.

More recently, a landmark study published in the journal Science demonstrated that only a fraction of recent findings in top psychology journals could be replicated. This is happening in other fields too, says Ivan Oransky, one of the founders of the blog Retraction Watch, which tracks scientific retractions.

As for the underlying causes, our survey respondents pointed to a couple of problems. First, scientists have very few incentives to even try replication. Jon-Patrick Allem, a social scientist at the Keck School of Medicine of USC, noted that funding agencies prefer to support projects that find new information instead of confirming old results.

Journals are also reluctant to publish replication studies unless "they contradict earlier findings or conclusions," Allem writes. The result is to discourage scientists from checking each other's work. "Novel information trumps stronger evidence, which sets the parameters for working scientists."

The second problem is that many studies can be difficult to replicate. Sometimes their methods are too opaque. Sometimes the original studies had too few participants to produce a replicable answer. And sometimes, as we saw in the previous section, the study is simply poorly designed or outright wrong.

Again, this goes back to incentives: When researchers have to publish frequently and chase positive results, there’s less time to conduct high-quality studies with well-articulated methods.

Fixes for underreplication

Scientists need more carrots to entice them to pursue replication in the first place. As it stands, researchers are encouraged to publish new and positive results and to allow negative results to linger in their laptops or file drawers.

This has plagued science with a problem called "publication bias" — not all studies that are conducted actually get published in journals, and the ones that do tend to have positive and dramatic conclusions.

If institutions started to reward tenure positions or make hires based on the quality of a researcher’s body of work, instead of quantity, this might encourage more replication and discourage positive results chasing.

"The key that needs to change is performance review," writes Christopher Wynder, a former assistant professor at McMaster University. "It affects reproducibility because there is little value in confirming another lab's results and trying to publish the findings."

The next step would be to make replication of studies easier. This could include more robust sharing of methods in published research papers. "It would be great to have stronger norms about being more detailed with the methods," says University of Virginia’s Brian Nosek.

He also suggested more regularly adding supplements at the end of papers that get into the procedural nitty-gritty, to help anyone wanting to repeat an experiment. "If I can rapidly get up to speed, I have a much better chance of approximating the results," he said.

Nosek has detailed other potential fixes that might help with replication, all part of his work at the Center for Open Science.

A greater degree of transparency and data sharing would enable replications, said Stanford’s John Ioannidis. Too often, anyone trying to replicate a study must chase down the original investigators for details about how the experiment was conducted.

"It is better to do this in an organized fashion with buy-in from all leading investigators in a scientific discipline," he explained, "rather than have to try to find the investigator in each case and ask him or her in detective-work fashion about details, data, and methods that are otherwise unavailable."

Researchers could also make use of new tools, such as open source software that tracks every version of a data set, so that they can share their data more easily and have transparency built into their workflow.

Some of our respondents suggested that scientists engage in replication prior to publication. "Before you put an exploratory idea out in the literature and have people take the time to read it, you owe it to the field to try to replicate your own findings," says John Sakaluk, a social psychologist at the University of Victoria.

For example, he has argued, psychologists could conduct small experiments with a handful of participants to form ideas and generate hypotheses. But they would then need to conduct bigger experiments, with more participants, to replicate and confirm those hypotheses before releasing them into the world. "In doing so," Sakaluk says, "the rest of us can have more confidence that this is something we might want to [incorporate] into our own research."


(4) Peer review is broken

Peer review is meant to weed out junk science before it reaches publication. Yet over and over again in our survey, respondents told us this process fails. It was one of the parts of the scientific machinery to elicit the most rage among the researchers we heard from.

Normally, peer review works like this: A researcher submits an article for publication in a journal. If the journal accepts the article for review, it's sent off to peers in the same field for constructive criticism and eventual publication — or rejection. (The level of anonymity varies; some journals have double-blind reviews, while others have moved to triple-blind review, where the authors, editors, and reviewers don’t know who one another are.)

It sounds like a reasonable system. But numerous studies and systematic reviews have shown that peer review doesn’t reliably prevent poor-quality science from being published.

The process frequently fails to detect fraud or other problems with manuscripts, which isn't all that surprising when you consider researchers aren't paid or otherwise rewarded for the time they spend reviewing manuscripts. They do it out of a sense of duty — to contribute to their area of research and help advance science.

But this means it's not always easy to find the best people to peer-review manuscripts in their field, that harried researchers delay doing the work (leading to publication delays of up to two years), and that when they finally do sit down to peer-review an article they might be rushed and miss errors in studies.

"The issue is that most referees simply don't review papers carefully enough, which results in the publishing of incorrect papers, papers with gaps, and simply unreadable papers," says Joel Fish, an assistant professor of mathematics at the University of Massachusetts Boston. "This ends up being a large problem for younger researchers to enter the field, since that means they have to ask around to figure out which papers are solid and which are not."

That's not to mention the problem of peer review bullying. Since the default in the process is that editors and peer reviewers know who the authors are (but authors don’t know who the reviewers are), biases against researchers or institutions can creep in, opening the opportunity for rude, rushed, and otherwise unhelpful comments. (Just check out the popular #SixWordPeerReview hashtag on Twitter.)

These issues were not lost on our survey respondents, who said peer review amounts to a broken system, which punishes scientists and diminishes the quality of publications. They want to not only overhaul the peer review process but also change how it's conceptualized.

Fixes for peer review

On the question of editorial bias and transparency, our respondents were surprisingly divided. Several suggested that all journals should move toward double-blinded peer review, whereby reviewers can't see the names or affiliations of the person they're reviewing and publication authors don't know who reviewed them. The main goal here was to reduce bias.

"We know that scientists make biased decisions based on unconscious stereotyping," writes Pacific Northwest National Lab postdoc Timothy Duignan. "So rather than judging a paper by the gender, ethnicity, country, or institutional status of an author — which I believe happens a lot at the moment — it should be judged by its quality independent of those things."

Yet others thought that more transparency, rather than less, was the answer: "While we correctly advocate for the highest level of transparency in publishing, we still have most reviews that are blinded, and I cannot know who is reviewing me," writes Lamberto Manzoli, a professor of epidemiology and public health at the University of Chieti, in Italy. "Too many times we see very low quality reviews, and we cannot understand whether it is a problem of scarce knowledge or conflict of interest."

Perhaps there is a middle ground. For example, eLife, a new open access journal that is rapidly rising in impact factor, runs a collaborative peer review process. Editors and peer reviewers work together on each submission to create a consolidated list of comments about a paper. The author can then reply to what the group saw as the most important issues, rather than facing the biases and whims of individual reviewers. (Oddly, this process is faster — eLife takes less time to accept papers than Nature or Cell.)

Still, those are mostly incremental fixes. Other respondents argued that we might need to radically rethink the entire process of peer review from the ground up.

"The current peer review process embraces a concept that a paper is final," says Nosek. "The review process is [a form of] certification, and that a paper is done." But science doesn't work that way. Science is an evolving process, and truth is provisional. So, Nosek said, science must "move away from the embrace of definitiveness of publication."

Some respondents wanted to think of peer review as more of a continuous process, in which studies are repeatedly and transparently updated and republished as new feedback changes them — much like Wikipedia entries. This would require some sort of expert crowdsourcing.

"The scientific publishing field — particularly in the biological sciences — acts like there is no internet," says Lakshmi Jayashankar, a senior scientific reviewer with the federal government. "The paper peer review takes forever, and this hurts the scientists who are trying to put their results quickly into the public domain."

One possible model already exists in mathematics and physics, where there is a long tradition of "pre-printing" articles. Studies are posted on an open website called arXiv.org, often before being peer-reviewed and published in journals. There, the articles are sorted and commented on by a community of moderators, providing another chance to filter problems before they make it to peer review.

"Posting preprints would allow scientific crowdsourcing to increase the number of errors that are caught, since traditional peer-reviewers cannot be expected to be experts in every sub-discipline," writes Scott Hartman, a paleobiology PhD student at the University of Wisconsin.

And even after an article is published, researchers think the peer review process shouldn't stop. They want to see more "post-publication" peer review on the web, so that academics can critique and comment on articles after they've been published. Sites like PubPeer and F1000Research have already popped up to facilitate that kind of post-publication feedback.

"We do this a couple of times a year at conferences," writes Becky Clarkson, a geriatric medicine researcher at the University of Pittsburgh. "We could do this every day on the internet."

The bottom line is that traditional peer review has never worked as well as we imagine it to — and it’s ripe for serious disruption.


(5) Too much science is locked behind paywalls

After a study has been funded, conducted, and peer-reviewed, there's still the question of getting it out so that others can read and understand its results.

Over and over, our respondents expressed dissatisfaction with how scientific research gets disseminated. Too much is locked away in paywalled journals, difficult and costly to access, they said. Some respondents also criticized the publication process itself for being too slow, bogging down the pace of research.

On the access question, a number of scientists argued that academic research should be free for all to read. They chafed against the current model, in which for-profit publishers put journals behind pricey paywalls.

A single article in Science will set you back $30; a year-long subscription to Cell will cost $279. Elsevier publishes 2,000 journals that can cost up to $10,000 or $20,000 a year for a subscription.

Many US institutions pay those journal fees for their employees, but not all scientists (or other curious readers) are so lucky. In a recent issue of Science, journalist John Bohannon described the plight of a PhD candidate at a top university in Iran. He calculated that the student would have to spend $1,000 a week just to read the papers he needed.

As Michael Eisen, a biologist at UC Berkeley and co-founder of the Public Library of Science (or PLOS), put it, scientific journals are trying to hold on to the profits of the print era in the age of the internet. Subscription prices have continued to climb, as a handful of big publishers (like Elsevier) have bought up more and more journals, creating mini knowledge fiefdoms.

"Large, publicly owned publishing companies make huge profits off of scientists by publishing our science and then selling it back to the university libraries at a massive profit (which primarily benefits stockholders)," Corina Logan, an animal behavior researcher at the University of Cambridge, noted. "It is not in the best interest of the society, the scientists, the public, or the research." (In 2014, Elsevier reported a profit margin of nearly 40 percent and revenues close to $3 billion.)

"It seems wrong to me that taxpayers pay for research at government labs and universities but do not usually have access to the results of these studies, since they are behind paywalls of peer-reviewed journals," added Melinda Simon, a postdoc microfluidics researcher at Lawrence Livermore National Lab.

Fixes for closed science

Many of our respondents urged their peers to publish in open access journals (along the lines of PeerJ or PLOS Biology). But there’s an inherent tension here. Career advancement can often depend on publishing in the most prestigious journals, like Science or Nature, which still have paywalls.

There's also the question of how best to finance a wholesale transition to open access. After all, journals can never be entirely free. Someone has to pay for the editorial staff, maintaining the website, and so on. Right now, open access journals typically charge fees to those submitting papers, putting the burden on scientists who are already struggling for funding.

One radical step would be to abolish for-profit publishers altogether and move toward a nonprofit model. "For journals I could imagine that scientific associations run those themselves," suggested Johannes Breuer, a postdoctoral researcher in media psychology at the University of Cologne. "If they go for online only, the costs for web hosting, copy-editing, and advertising (if needed) can be easily paid out of membership fees."

As a model, Cambridge’s Tim Gowers has launched an online mathematics journal called Discrete Analysis. The nonprofit venture is owned and published by a team of scholars, it has no publisher middlemen, and access will be completely free for all.

Until wholesale reform happens, however, many scientists are going a much simpler route: illegally pirating papers.

Bohannon reported that millions of researchers around the world now use Sci-Hub, a site set up by Alexandra Elbakyan, a Russia-based neuroscientist, that illegally hosts more than 50 million academic papers. "As a devout pirate," Elbakyan told us, "I think that copyright should be abolished."

One respondent had an even more radical suggestion: that we abolish the existing peer-reviewed journal system altogether and simply publish everything online as soon as it’s done.

"Research should be made available online immediately, and be judged by peers online rather than having to go through the whole formatting, submitting, reviewing, rewriting, reformatting, resubmitting, etc etc etc that can take years," writes Bruno Dagnino, formerly of the Netherlands Institute for Neuroscience. "One format, one platform. Judge by the whole community, with no delays."

A few scientists have been taking steps in this direction. Rachel Harding, a genetic researcher at the University of Toronto, has set up a website called Lab Scribbles, where she publishes her lab notes on the structure of huntingtin proteins in real time, posting data as well as summaries of her breakthroughs and failures. The idea is to help share information with other researchers working on similar issues, so that labs can avoid needless overlap and learn from each other's mistakes.

Not everyone might agree with approaches this radical; critics worry that too much sharing might encourage scientific free riding. Still, the common theme in our survey was transparency. Science is currently too opaque, research too difficult to share. That needs to change.

(6) Science is poorly communicated to the public

"If I could change one thing about science, I would change the way it is communicated to the public by scientists, by journalists, and by celebrities," writes Clare Malone, a postdoctoral researcher in a cancer genetics lab at Brigham and Women's Hospital.

She wasn't alone. Quite a few respondents in our survey expressed frustration at how science gets relayed to the public. They were distressed by the fact that so many laypeople hold on to completely unscientific ideas or have a crude view of how science works.


They have a point. Science journalism is often full of exaggerated, conflicting, or outright misleading claims. If you ever want to see a perfect example of this, check out "Kill or Cure," a site where Paul Battley meticulously documents all the times the Daily Mail reported that various items — from antacids to yogurt — either cause cancer, prevent cancer, or sometimes do both.

Sometimes bad stories are peddled by university press shops. In 2015, the University of Maryland issued a press release claiming that a single brand of chocolate milk could improve concussion recovery. It was an absurd case of science hype.

Indeed, one review in BMJ found that one-third of university press releases contained either exaggerated claims of causation (when the study itself only suggested correlation), unwarranted implications about animal studies for people, or unfounded health advice.

But not everyone blamed the media and publicists alone. Other respondents pointed out that scientists themselves often oversell their work, even if it's preliminary, because funding is competitive and everyone wants to portray their work as big and important and game-changing.

"You have this toxic dynamic where journalists and scientists enable each other in a way that massively inflates the certainty and generality of how scientific findings are communicated and the promises that are made to the public," writes Daniel Molden, an associate professor of psychology at Northwestern University. "When these findings prove to be less certain and the promises are not realized, this just further erodes the respect that scientists get and further fuels scientists' desire for appreciation."

Fixes for better science communication

Opinions differed on how to improve this sorry state of affairs — some pointed to the media, some to press offices, others to scientists themselves.

Plenty of our respondents wished that more science journalists would move away from hyping single studies. Instead, they said, reporters ought to put new research findings in context, and pay more attention to the rigor of a study's methodology than to the splashiness of the end results.

"On a given subject, there are often dozens of studies that examine the issue," writes Brian Stacy of the US Department of Agriculture. "It is very rare for a single study to conclusively resolve an important research question, but many times the results of a study are reported as if they do."

But it’s not just reporters who will need to shape up. The "toxic dynamic" of journalists, academic press offices, and scientists enabling one another to hype research can be tough to change, and many of our respondents pointed out that there were no easy fixes — though recognition was an important first step.

Some suggested the creation of credible referees that could rigorously distill the strengths and weaknesses of research. (Some variations of this are starting to pop up: The Genetic Expert News Service solicits outside experts to weigh in on big new studies in genetics and biotechnology.) Other respondents suggested that making research free to all might help tamp down media misrepresentations.

Still other respondents noted that scientists themselves should spend more time learning how to communicate with the public — a skill that tends to be under-rewarded in the current system.

"Being able to explain your work to a non-scientific audience is just as important as publishing in a peer-reviewed journal, in my opinion, but currently the incentive structure has no place for engaging the public," writes Crystal Steltenpohl, a graduate assistant at DePaul University.

Reducing the perverse incentives around scientific research itself could also help reduce overhype. "If we reward research based on how noteworthy the results are, this will create pressure to exaggerate the results (through exploiting flexibility in data analysis, misrepresenting results, or outright fraud)," writes UC Davis's Simine Vazire. "We should reward research based on how rigorous the methods and design are."

Or perhaps we should focus on improving science literacy. Jeremy Johnson, a project coordinator at the Broad Institute, argued that bolstering science education could help ameliorate a lot of these problems. "Science literacy should be a top priority for our educational policy," he said, "not an elective."

(7) Life as a young academic is incredibly stressful

When we asked researchers what they’d fix about science, many talked about the scientific process itself, about study design or peer review. These responses often came from tenured scientists who loved their jobs but wanted to make the broader scientific project even better.

But on the flip side, we heard from a number of researchers — many of them graduate students or postdocs — who were genuinely passionate about research but found the day-to-day experience of being a scientist grueling and unrewarding. Their comments deserve a section of their own.

Today, many tenured scientists and research labs depend on small armies of graduate students and postdoctoral researchers to perform their experiments and conduct data analysis.

These grad students and postdocs are often the primary authors on many studies. In a number of fields, such as the biomedical sciences, a postdoc position is a prerequisite before a researcher can get a faculty-level position at a university.

This entire system sits at the heart of modern-day science. (A new card game called Lab Wars pokes fun at these dynamics.)

But these low-level research jobs can be a grind. Postdocs typically work long hours and are relatively low-paid for their level of education — salaries are frequently pegged to stipends set by NIH National Research Service Award grants, which start at $43,692 and rise to $47,268 in year three.

Postdocs tend to be hired on for one to three years at a time, and in many institutions they are considered contractors, limiting their workplace protections. We heard repeatedly about extremely long hours and limited family leave benefits.

"Oftentimes this is problematic for individuals in their late 20s and early to mid-30s who have PhDs and who may be starting families while also balancing a demanding job that pays poorly," wrote one postdoc, who asked for anonymity.

This lack of flexibility tends to disproportionately affect women — especially women planning to have families — which helps contribute to gender inequalities in research. (A 2012 paper found that female job applicants in academia are judged more harshly and are offered less money than males.) "There is very little support for female scientists and early-career scientists," noted another postdoc.

"There is very little long-term financial security in today's climate, very little assurance where the next paycheck will come from," wrote William Kenkel, a postdoctoral researcher in neuroendocrinology at Indiana University. "Since receiving my PhD in 2012, I left Chicago and moved to Boston for a post-doc, then in 2015 I left Boston for a second post-doc in Indiana. In a year or two, I will move again for a faculty job, and that's if I'm lucky. Imagine trying to build a life like that."

This strain can also adversely affect the research that young scientists do. "Contracts are too short term," noted another researcher. "It discourages rigorous research as it is difficult to obtain enough results for a paper (and hence progress) in two to three years. The constant stress drives otherwise talented and intelligent people out of science also."

Because universities produce so many PhDs but have way fewer faculty jobs available, many of these postdoc researchers have limited career prospects. Some of them end up staying stuck in postdoc positions for five or 10 years or more.

"In the biomedical sciences," wrote the first postdoc quoted above, "each available faculty position receives applications from hundreds or thousands of applicants, putting immense pressure on postdocs to publish frequently and in high impact journals to be competitive enough to attain those positions."

Many young researchers pointed out that PhD programs do fairly little to train people for careers outside of academia. "Too many [PhD] students are graduating for a limited number of professor positions with minimal training for careers outside of academic research," noted Don Gibson, a PhD candidate studying plant genetics at UC Davis.

Laura Weingartner, a graduate researcher in evolutionary ecology at Indiana University, agreed: "Few universities (specifically the faculty advisors) know how to train students for anything other than academia, which leaves many students hopeless when, inevitably, there are no jobs in academia for them."

Add it up and it's not surprising that we heard plenty of comments about anxiety and depression among both graduate students and postdocs. "There is a high level of depression among PhD students," writes Gibson. "Long hours, limited career prospects, and low wages contribute to this emotion."

A 2015 study at the University of California Berkeley found that 47 percent of PhD students surveyed could be considered depressed. The reasons for this are complex and can't be solved overnight. Pursuing academic research is already an arduous, anxiety-ridden task that's bound to take a toll on mental health.

But as Jennifer Walker explored recently at Quartz, many PhD students also feel isolated and unsupported, exacerbating those issues.

Fixes to keep young scientists in science

We heard plenty of concrete suggestions. Graduate schools could offer more generous family leave policies and child care for graduate students. They could also increase the number of female applicants they accept in order to balance out the gender disparity.

But some respondents also noted that workplace issues for grad students and postdocs were inseparable from some of the fundamental issues facing science that we discussed earlier. The fact that university faculty and research labs face immense pressure to publish — but have limited funding — makes it highly attractive to rely on low-paid postdocs.

"There is little incentive for universities to create jobs for their graduates or to cap the number of PhDs that are produced," writes Weingartner. "Young researchers are highly trained but relatively inexpensive sources of labor for faculty."

Some respondents also pointed to the mismatch between the number of PhDs produced each year and the number of academic jobs available.

A recent feature by Julie Gould in Nature explored a number of ideas for revamping the PhD system. One idea is to split the PhD into two programs: one for vocational careers and one for academic careers. The former would better train and equip graduates to find jobs outside academia.

This is hardly an exhaustive list. The core point underlying all these suggestions, however, was that universities and research labs need to do a better job of supporting the next generation of researchers. Indeed, that's arguably just as important as addressing problems with the scientific process itself. Young scientists, after all, are by definition the future of science.

Weingartner concluded with a sentiment we saw all too frequently: "Many creative, hard-working, and/or underrepresented scientists are edged out of science because of these issues. Not every student or university will have all of these unfortunate experiences, but they’re pretty common. There are a lot of young, disillusioned scientists out there now who are expecting to leave research."

Science needs to correct its greatest weaknesses

Science is not doomed.

For better or worse, it still works. Look no further than the novel vaccines to prevent Ebola, the discovery of gravitational waves, or new treatments for stubborn diseases. And it’s getting better in many ways. See the work of meta-researchers who study and evaluate research — a field that has gained prominence over the past 20 years.


But science is conducted by fallible humans, and it hasn’t been human-proofed to protect against all our foibles. The scientific revolution began just 500 years ago. Only over the past 100 has science become professionalized. There is still room to figure out how best to remove biases and align incentives.

To that end, here are some broad suggestions:

One: Science has to acknowledge and address its money problem. Science is enormously valuable and deserves ample funding. But the way incentives are set up can distort research.

Right now, small studies with bold results that can be quickly turned around and published in journals are disproportionately rewarded. By contrast, there are fewer incentives to conduct research that tackles important questions with robustly designed studies over long periods of time. Solving this won’t be easy, but it is at the root of many of the issues discussed above.

Two: Science needs to celebrate and reward failure. Accepting that we can learn more from dead ends in research and studies that failed would alleviate the "publish or perish" cycle. It would make scientists more confident in designing robust tests and not just convenient ones, in sharing their data and explaining their failed tests to peers, and in using those null results to form the basis of a career (instead of chasing those all-too-rare breakthroughs).

Three: Science has to be more transparent. Scientists need to publish their methods and findings more fully, and share their raw data in ways that are easily accessible and digestible for those who may want to reanalyze or replicate their findings.

There will always be waste and mediocre research, but as Stanford’s Ioannidis explains in a recent paper, a lack of transparency creates excess waste and diminishes the usefulness of too much research.

Again and again, we also heard from researchers, particularly in the social sciences, who felt that cognitive biases in their own work, influenced by pressures to publish and advance their careers, caused science to go off the rails. If more human-proofing and de-biasing were built into the process — through stronger peer review, cleaner and more consistent funding, and more transparency and data sharing — some of these biases could be mitigated.

These fixes will take time, grinding along incrementally — much like the scientific process itself. But the gains humans have made so far using even imperfect scientific methods would have been unimaginable 500 years ago. The gains from improving the process could prove just as staggering, if not more so.

Correction: An earlier version of this story misstated Noah Grand's title. At the time of the survey he was a lecturer in sociology at UCLA, not a professor.


Identifying problems and solutions in scientific text

Kevin Heffernan

Department of Computer Science and Technology, University of Cambridge, 15 JJ Thomson Avenue, Cambridge, CB3 0FD UK

Simone Teufel

Research is often described as a problem-solving activity, and as a result, descriptions of problems and solutions are an essential part of the scientific discourse used to describe research activity. We present an automatic classifier that, given a phrase that may or may not be a description of a scientific problem or a solution, makes a binary decision about problemhood and solutionhood of that phrase. We recast the problem as a supervised machine learning problem, define a set of 15 features correlated with the target categories and use several machine learning algorithms on this task. We also create our own corpus of 2000 positive and negative examples of problems and solutions. We find that we can distinguish problems from non-problems with an accuracy of 82.3%, and solutions from non-solutions with an accuracy of 79.7%. Our three most helpful features for the task are syntactic information (POS tags), document and word embeddings.

Introduction

Problem solving is generally regarded as the most important cognitive activity in everyday and professional contexts (Jonassen 2000). Many studies on formalising the cognitive process behind problem-solving exist, for instance (Chandrasekaran 1983). Jordan (1980) argues that we all share knowledge of the thought/action problem-solution process involved in real life, and so our writings will often reflect this order. There is general agreement amongst theorists that the research process can be viewed as a problem-solving activity (Strübing 2007; Van Dijk 1980; Hutchins 1977; Grimes 1975).

One of the best-documented problem-solving patterns was established by Winter (1968). Winter analysed thousands of examples of technical texts and noted that these texts can largely be described in terms of a four-part pattern consisting of Situation, Problem, Solution and Evaluation. This is very similar to the pattern described by Van Dijk (1980), which consists of Introduction-Theory, Problem-Experiment-Comment and Conclusion. The difference is that, in Winter’s view, a solution only becomes a solution after it has been evaluated positively. Hoey (2001) modifies Winter’s pattern by introducing the concept of Response in place of Solution. This seems to describe the situation in science better, where evaluation is mandatory before research solutions are accepted by the community. In Hoey’s pattern, the Situation (which is generally treated as optional) provides background information; the Problem describes an issue that requires attention; the Response provides a way to deal with the issue; and the Evaluation assesses how effective the Response is.

An example of this pattern in the context of the Goldilocks story can be seen in Fig. 1. In this text, a preamble provides the setting of the story (i.e., Goldilocks is lost in the woods), which is called the Situation in Hoey’s system. A Problem is encountered when Goldilocks becomes hungry. Her first Response is to try the porridge in big bear’s bowl, but she gives this a negative Evaluation (“too hot!”), and so the pattern returns to the Problem. This continues in a cyclic fashion until the Problem is finally resolved when Goldilocks gives a positive Evaluation to a particular Response, baby bear’s porridge (“it’s just right”).

Fig. 1 Example of the problem-solving pattern applied to the Goldilocks story.

Reproduced with permission from Hoey (2001).
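The cyclic structure of Hoey’s pattern can be made concrete with a short simulation. The sketch below is illustrative only: the `Attempt` record and `run_pattern` function are hypothetical names, not part of Hoey’s account, and the middle attempt (middle bear’s porridge, “too cold!”) follows the familiar telling of the story. Each negative Evaluation returns the pattern to the Problem, and the first positive Evaluation ends it.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    response: str    # the Response tried for the Problem
    evaluation: str  # the Evaluation given to that Response
    positive: bool   # True if the Evaluation resolves the Problem


def run_pattern(situation, problem, attempts):
    """Trace Hoey's Situation-Problem-Response-Evaluation pattern.

    A negative Evaluation sends the pattern back to the Problem; the first
    positive Evaluation ends the cycle. Returns (element, text) pairs.
    """
    trace = [("Situation", situation)]
    for attempt in attempts:
        trace.append(("Problem", problem))
        trace.append(("Response", attempt.response))
        trace.append(("Evaluation", attempt.evaluation))
        if attempt.positive:
            break  # Problem resolved: no further Responses are tried
    return trace


attempts = [
    Attempt("try big bear's porridge", "too hot!", False),
    Attempt("try middle bear's porridge", "too cold!", False),  # from the familiar telling
    Attempt("try baby bear's porridge", "it's just right", True),
]

trace = run_pattern("Goldilocks is lost in the woods", "Goldilocks is hungry", attempts)
for element, text in trace:
    print(f"{element}: {text}")
```

Modelling the loop with an early `break` mirrors the text: the Problem is revisited once per rejected Response, and nothing follows the positive Evaluation.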

It would be attractive to detect problem and solution statements in text automatically, from both a theoretical and a practical viewpoint. Theoretically, we know that sentiment detection is related to problem-solving activity, because of the perception that “bad” situations are transformed into “better” ones via problem solving. Understanding exactly how this transformation can be detected would advance the state of the art in text understanding. In terms of linguistic realisation, problem and solution statements come in many variants and reformulations, often in the form of positive or negated statements about the conditions, results and causes of problem–solution pairs. Detecting and interpreting these would give us a reasonably objective way to test a system’s capacity for understanding. Practically, being able to detect any mention of a problem is a first step towards detecting a paper’s specific research goal. This has long been a goal of scientific information retrieval, and if achieved, it would greatly improve the effectiveness of scientific search. Detecting the problem and solution statements of papers would also enable us to compare similar papers and might eventually even lead to the automatic generation of review articles in a field.

There has been some computational work on identifying problem-solving patterns in text. However, most of this prior work has not gone beyond keyword analysis and some simple contextual examination of the pattern. Flowerdew (2008) presents a corpus-based analysis of lexico-grammatical patterns for problem and solution clauses using professional and student reports. Problem and solution keywords were used to search the corpora, and each occurrence was analysed to determine the grammatical usage of the keyword. More interestingly, the causal category associated with each keyword in its context was also analysed. For example, Reason–Result and Means–Purpose were common causal categories found to be associated with problem keywords.
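The keyword-search step of such an analysis amounts to building a concordance. The following Python sketch is a hypothetical illustration, not Flowerdew’s procedure: it retrieves each occurrence of a keyword with a few tokens of context on either side, which an analyst would then inspect by hand for grammatical usage and causal category. The example sentences are taken from elsewhere in this paper, and the keyword list is an assumption.

```python
import re


def concordance(texts, keywords, window=4):
    """Return (left context, keyword, right context) for every keyword hit.

    Matching is case-insensitive on whole tokens; `window` is the number of
    tokens of context kept on each side of the keyword.
    """
    keyset = {k.lower() for k in keywords}
    hits = []
    for text in texts:
        # Split into word tokens and single punctuation marks.
        tokens = re.findall(r"\w+|[^\w\s]", text)
        for i, tok in enumerate(tokens):
            if tok.lower() in keyset:
                left = " ".join(tokens[max(0, i - window):i])
                right = " ".join(tokens[i + 1:i + 1 + window])
                hits.append((left, tok, right))
    return hits


sentences = [
    "The major limitation of supervised approaches is that they require annotations for example sentences.",
    "One solution is to use smoothing.",
]
hits = concordance(sentences, ["problem", "limitation", "solution"])
for left, kw, right in hits:
    print(f"{left:>30} [{kw}] {right}")
```

Only the retrieval is automated here; the causal categorisation described above remains a manual, interpretive step.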

The goal of the work by Scott (2001) was to determine words that are semantically similar to problem and solution, and to determine how these words are used to signal problem-solution patterns. However, that corpus-based analysis used articles from the Guardian newspaper. Since the domain of newspaper text is very different from that of scientific text, we decided not to use the keywords it associates with problem-solving patterns in our work.

Instead of a keyword-based approach, Charles (2011) used discourse markers to examine how the problem-solution pattern is signalled in text. In particular, they examined how adverbials associated with a result, such as “thus, therefore, then, hence”, are used to signal a problem-solving pattern.
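A naive version of this signal is easy to sketch. The Python function below, a hypothetical illustration rather than Charles’s method, flags the result adverbials listed above when they occur as whole words in a sentence. It ignores sense ambiguity: “then”, for instance, is frequently temporal rather than resultative, so real use would need context. The example sentence is invented.

```python
import re

# Result adverbials named in the text as signals of a problem-solution pattern.
RESULT_ADVERBIALS = {"thus", "therefore", "then", "hence"}


def find_result_markers(sentence):
    """Return the result adverbials occurring as whole words, in order."""
    words = re.findall(r"[a-z]+", sentence.lower())
    return [w for w in words if w in RESULT_ADVERBIALS]


print(find_result_markers("Annotation is expensive; hence, we use unlabelled data."))
# prints ['hence']
```

Tokenising into whole words (rather than substring search) keeps words such as “Athens” from matching “then”.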

Problem solving has also been studied in the framework of discourse theories such as Rhetorical Structure Theory (RST; Mann and Thompson 1988) and Argumentative Zoning (AZ; Teufel et al. 2000). Problem- and solutionhood constitute two of the original 23 relations in RST (Mann and Thompson 1988). While we concentrate solely on this aspect, RST is a general theory of discourse structure which covers many intentional and informational relations. The relationship to Argumentative Zoning is more complicated. The status of certain statements as problems or solutions is one important dimension in the definitions of AZ categories. AZ additionally models dimensions other than problem-solutionhood (such as who a scientific idea belongs to, or which intention the authors might have had in stating a particular negative or positive statement). When forming categories, AZ combines aspects of these dimensions and “flattens” them out into only 7 categories. In AZ it is crucial who experiences the problems or contributes a solution. For instance, the definition of category “CONTRAST” includes statements that some research runs into problems, but only if that research is previous work (i.e., not if it is the work contributed in the paper itself). Similarly, “BASIS” includes statements of successful problem-solving activities, but only if they are achieved by previous work that the current paper bases itself on. Our definition is simpler in that we are interested only in problem-solution structure, not in the other dimensions covered in AZ. Our definition is also more far-reaching than AZ, in that we are interested in all problems mentioned in the text, no matter whose problems they are. Problem-solution recognition can therefore be seen as one aspect of AZ which can be modelled independently as a “service task”. This means that good problem-solution structure recognition should, in theory, improve AZ recognition.

In this work, we approach the task of identifying problem-solving patterns in scientific text. We use the model of problem solving described by Hoey (2001). This pattern comprises four parts: Situation, Problem, Response and Evaluation. The Situation element is considered optional, so we focus on the core pattern elements.

Goal statement and task

Many surface features in the text offer themselves up as potential signals for detecting problem-solving patterns. However, since Situation is an optional element, we decided to use either Problem or Response and Evaluation as signals of the pattern. Moreover, we decided to look for each type in isolation. Our reasons are as follows. It is quite rare for an author to introduce a problem without resolving it using some sort of response, so this is a good starting point for identifying the pattern. There are exceptions, as authors will sometimes introduce a problem and then leave it to future work, but overall there should be enough signal in the Problem element to make looking for it in isolation worthwhile. The second signal we look for is the use of Response and Evaluation within the same sentence. As with Problem elements, we hypothesise that this formulation is signalled externally well enough to help us detect the pattern. For example, consider the following Response and Evaluation: “One solution is to use smoothing”. In this statement, the author explicitly states that smoothing is a solution to a problem, which must have been mentioned in a prior statement. In scientific text, we often observe that solutions implicitly contain both Response and (positive) Evaluation elements. For these reasons, there should be sufficient external signals for the two pattern elements we concentrate on here.

When attempting to find Problem elements in text, we run into the issue that the word “problem” has at least two word senses that need to be distinguished. One sense of “problem” means something that must be undertaken (i.e., a task), while the other is the core sense of the word: something that is problematic and negative. Only the latter sense is aligned with our notion of problemhood, because the simple description of a task does not imply problemhood, just a wish to perform some act. Consider the following examples, where the undesired word sense is used:

- “Das and Petrov (2011) also consider the problem of unsupervised bilingual POS induction”. (Chen et al. 2011).

- “In this paper, we describe advances on the problem of NER in Arabic Wikipedia”. (Mohit et al. 2012).

Here, the authors explicitly describe the phrases following “the problem of” as problems, but these phrases align with our definition of research tasks and not with what we call here ‘problematic problems’. We now give some examples from our corpus of the desired, core word sense:

- “The major limitation of supervised approaches is that they require annotations for example sentences.” (Poon and Domingos 2009).

- “To solve the problem of high dimensionality we use clustering to group the words present in the corpus into much smaller number of clusters”. (Saha et al. 2008).

When creating our corpus of positive and negative examples, we took care to select only problem strings that satisfy our definition of problemhood; the “Corpus creation” section explains how we did this.

Corpus creation

Our new corpus is a subset of the latest version of the ACL Anthology, released in March 2016,¹ which contains 22,878 articles in the form of PDFs and OCRed text.²

The 2016 version was also parsed using ParsCit (Councill et al. 2008). ParsCit recognises not only document structure but also bibliography lists and references within running text. A random subset of 2500 papers covering the entire ACL timeline was collected. To disregard non-article publications such as introductions to conference proceedings or letters to the editor, only documents containing abstracts were considered. The corpus was preprocessed using tokenisation, lemmatisation and dependency parsing with the RASP parser (Briscoe et al. 2006).
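The sampling step can be sketched as follows. This is a minimal Python illustration under stated assumptions: the `Paper` record and its `has_abstract` flag are hypothetical (in practice the flag might be derived from ParsCit’s document-structure output), papers are filtered before sampling, and the draw is uniform over the whole anthology. The text does not say whether sampling was stratified by year or whether the abstract filter was applied before or after sampling.

```python
import random
from dataclasses import dataclass


@dataclass
class Paper:
    paper_id: str       # hypothetical identifier
    year: int
    has_abstract: bool  # hypothetical flag, e.g. derived from a structure parse


def sample_corpus(papers, k=2500, seed=0):
    """Drop papers without an abstract, then draw k of the rest uniformly."""
    candidates = [p for p in papers if p.has_abstract]
    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    return rng.sample(candidates, min(k, len(candidates)))


# Toy anthology with hypothetical IDs and toy years: every tenth "paper" lacks an abstract.
anthology = [Paper(f"P{i:05d}", 1965 + i % 52, i % 10 != 0) for i in range(22878)]
subset = sample_corpus(anthology)
print(len(subset), min(p.year for p in subset), max(p.year for p in subset))
```

`random.sample` draws without replacement, so no paper can appear twice in the subset; capping `k` at the number of candidates avoids a `ValueError` on small collections.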

Definition of ground truth

Our goal was to define a ground truth for problem and solution strings while covering as wide a range as possible of the syntactic variations in which such strings naturally occur. We also wanted this ground truth to capture problem and solution status regardless of whether that status is explicitly mentioned in the text.

To simplify the task, we only consider here problem and solution descriptions that are at most one sentence long. In reality, of course, many problem and solution descriptions go beyond a single sentence and require, for instance, an entire paragraph. However, we also know that short summaries of problems and solutions are very prevalent in science, and that these tend to occur in the most prominent places in a paper. This is because scientists are trained to express their contribution, and the obstacles possibly hindering their success, in an informative, succinct manner. This is why we can afford to look only for short problem and solution descriptions, ignoring those that cross sentence boundaries.

To define our ground truth, we examined the parsed dependencies, looked for a target word (“problem”/“solution”) in subject position, and then chose its syntactic argument as our candidate problem or solution phrase. To increase the variation, i.e., to find as many differently worded problem and solution descriptions as possible, we additionally searched for semantically similar words (near-synonyms) of the target words “problem” and “solution”. Semantic similarity was defined as cosine similarity in a neural distributional vector space trained using Word2Vec (Mikolov et al. 2013) on 18,753,472 sentences from a biomedical corpus based on all full-text PubMed articles (McKeown et al. 2016). From the 200 words semantically closest to “problem”, we manually selected 28 clear synonyms; these are listed in Table 1. From the 200 words semantically closest to “solution”, we similarly chose 19 (Table 2). From the sentences matching our dependency search, a subset of problem and solution candidate sentences was randomly selected.
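The dependency search can be illustrated with a toy parse. The Python sketch below is hypothetical: the `Token` record, the labels loosely modelled on Universal Dependencies and the `ARG_RELS` set stand in for the RASP output actually used, and `limitation` is included only as an example near-synonym, not as a claim about the contents of Table 1. It finds a target lemma in subject position and returns the full subtree of the predicate’s clausal or complement argument as the candidate phrase.

```python
from dataclasses import dataclass


@dataclass
class Token:
    word: str
    lemma: str
    head: int  # 1-based index of the governing token (0 = root)
    rel: str   # dependency label (hypothetical, UD-style)


TARGETS = {"problem", "limitation"}    # illustrative target lemmas
ARG_RELS = {"ccomp", "xcomp", "attr"}  # hypothetical labels for the predicate's argument


def subtree(tokens, root):
    """Return the 1-based indices of `root` and all its descendants, in order."""
    keep = {root}
    changed = True
    while changed:
        changed = False
        for i, t in enumerate(tokens, start=1):
            if t.head in keep and i not in keep:
                keep.add(i)
                changed = True
    return sorted(keep)


def candidate_phrases(tokens):
    """Target lemma as subject -> its predicate -> that predicate's argument subtree."""
    phrases = []
    for t in tokens:
        if t.lemma in TARGETS and t.rel == "nsubj":
            for j, a in enumerate(tokens, start=1):
                if a.head == t.head and a.rel in ARG_RELS:
                    phrases.append(" ".join(tokens[k - 1].word for k in subtree(tokens, j)))
    return phrases


# Hand-built parse of "The major limitation is that they require annotations ."
parse = [
    Token("The", "the", 3, "det"), Token("major", "major", 3, "amod"),
    Token("limitation", "limitation", 4, "nsubj"), Token("is", "be", 0, "root"),
    Token("that", "that", 7, "mark"), Token("they", "they", 7, "nsubj"),
    Token("require", "require", 4, "ccomp"), Token("annotations", "annotation", 7, "obj"),
    Token(".", ".", 4, "punct"),
]
print(candidate_phrases(parse))
```

Taking the whole subtree rather than the single argument token is what turns a head verb such as “require” into a usable phrase.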

Table 1 Selected words for use in problem candidate phrase extraction

Table 2 Selected words for use in solution candidate phrase extraction
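The near-synonym search behind these lists can be sketched as a nearest-neighbour ranking by cosine similarity. In the Python illustration below, the three-dimensional vectors are invented toy values standing in for the Word2Vec space, and `nearest` is a hypothetical helper; with a trained model, gensim’s `KeyedVectors.most_similar` (with `topn=200`) performs the same ranking. The manual filtering of the 200 neighbours down to clear synonyms is not modelled.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest(vectors, target, k=200):
    """Rank all other words by cosine similarity to `target`; keep the top k."""
    t = vectors[target]
    scored = [(w, cosine(t, v)) for w, v in vectors.items() if w != target]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Invented toy vectors; a real run would use the trained Word2Vec space.
vectors = {
    "problem": [1.0, 0.1, 0.0],
    "difficulty": [0.9, 0.2, 0.0],
    "issue": [0.8, 0.3, 0.1],
    "solution": [0.0, 1.0, 0.2],
    "approach": [0.1, 0.9, 0.3],
}
for word, score in nearest(vectors, "problem", k=2):
    print(f"{word}\t{score:.3f}")
```

Because cosine similarity ignores vector length, frequent and rare words with similar contexts can still rank as close neighbours.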