"where P(A) is the proportion of time that the coders agree and P(E) is the proportion of times that we would expect them to agree by chance." (Carletta 1996: 4).
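The statistic Carletta describes is kappa, K = (P(A) - P(E)) / (1 - P(E)). The short Python function below is a minimal sketch of it for two coders who have labelled the same items. It assumes that P(E) is estimated from the coders' pooled category proportions; other variants, such as Cohen's kappa, estimate P(E) from each coder's own proportions and can give slightly different values. The function name `kappa` is illustrative.

```python
def kappa(coder_a, coder_b):
    """Kappa for two coders labelling the same items:
    K = (P(A) - P(E)) / (1 - P(E)).
    Assumption: P(E) is estimated from the coders' pooled category
    proportions (Cohen's kappa would use each coder's own proportions)."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("coders must label the same, non-empty set of items")
    n = len(coder_a)

    # P(A): proportion of items on which the two coders agree
    p_agree = sum(a == b for a, b in zip(coder_a, coder_b)) / n

    # P(E): chance agreement, from the pooled proportion of each category
    pooled = {}
    for label in list(coder_a) + list(coder_b):
        pooled[label] = pooled.get(label, 0) + 1
    p_chance = sum((count / (2 * n)) ** 2 for count in pooled.values())

    if p_chance == 1:
        raise ValueError("kappa is undefined when only one category is used")
    return (p_agree - p_chance) / (1 - p_chance)
```

For example, if two coders tag four words as N V N N and N V V N, they agree on three (P(A) = 0.75), but chance agreement is high (P(E) = 34/64, about 0.53), so K = 7/15, about 0.47.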

There is no doubt that annotation tends to be highly labour-intensive and time-consuming to carry out well. This is why it is appropriate to admit, as a final observation, that 'best practice' in corpus annotation is something we should all strive for — but which perhaps few of us will achieve.

9. Getting down to the practical task of annotation

To conclude, it is useful to say something about the practicalities of corpus annotation. Suppose that you have a text or a corpus you want to work on, and you want to 'get the tags into the text'.

- It is not necessary to have special software. You can annotate the text using a general-purpose text editor or word processor. But this means the job has to be done by hand, which risks being slow and prone to error.

- For some purposes, particularly if the corpus is large and is to be made available for general use, it is important to have the annotation validated. That is, the vocabulary of annotation is controlled and is allowed to occur only in syntactically valid ways. A validating tool can be written from scratch, or can use macros for word processors or editors.

- If you decide to use XML-compliant annotation, this means that you have the option to make use of the increasingly available XML editors. An XML editor, in conjunction with a DTD or schema, can do the job of enforcing well-formedness or validity without any programming of the software, although a high degree of expertise with XML will come in useful.

- Special tagging software has been developed for large projects: for example, the CLAWS tagger and Template Tagger used for the Brown family of corpora and the BNC. Such programs or packages can be licensed for your own annotation work. (For CLAWS, see the UCREL website, http://www.comp.lancs.ac.uk/ucrel/.)

- There are tagsets which come with specific software — e.g. the C5, C7 and C8 tagsets for CLAWS, and CHAT for the CHILDES system, which is the de facto standard for language acquisition data.

- There are also more general architectures for handling texts, language data and software systems for building and annotating corpora. The most prominent example is GATE (General Architecture for Text Engineering, http://gate.ac.uk), developed at the University of Sheffield.
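As a minimal sketch of a validating tool written from scratch, the Python function below checks two things: that a fragment of XML annotation is well-formed, and that every word's tag comes from a controlled vocabulary. The `<w c5="...">` markup and the short tag list (a small subset of CLAWS C5 tags) are assumptions for illustration only; a real validator would load the full documented tagset, and in an XML editor a DTD or schema would normally do this job instead.

```python
import xml.etree.ElementTree as ET

# Illustrative subset of CLAWS C5 tags (an assumption: a real validator
# would load the complete, documented tagset).
ALLOWED_TAGS = {"AT0", "NN1", "NN2", "NP0", "PNP", "VVD", "AJ0", "PUN"}


def validate(xml_text):
    """Return a list of problems; an empty list means the text passed.

    Assumes hypothetical markup in which each word is a <w> element
    whose tag is stored in a c5 attribute."""
    try:
        root = ET.fromstring(xml_text)  # fails if not well-formed
    except ET.ParseError as err:
        return [f"not well-formed: {err}"]

    problems = []
    for n, w in enumerate(root.iter("w"), start=1):
        tag = w.get("c5")
        if tag is None:
            problems.append(f"word {n} ({w.text!r}): missing c5 attribute")
        elif tag not in ALLOWED_TAGS:
            problems.append(f"word {n} ({w.text!r}): unknown tag {tag!r}")
    return problems


print(validate('<s><w c5="AT0">The</w> <w c5="NN1">cat</w></s>'))
print(validate('<s><w c5="NNX">cat</w></s>'))
print(validate('<s><w c5="NN1">cat</s>'))
```

The first call passes, the second reports a tag outside the controlled vocabulary, and the third is rejected because the `<w>` element is never closed.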


© Geoffrey Leech 2004. The right of Geoffrey Leech to be identified as the Author of this Work has been asserted by him in accordance with the Copyright, Designs and Patents Act 1988.

All material supplied via the Arts and Humanities Data Service is protected by copyright, and duplication or sale of all or any part of it is not permitted, except that material may be duplicated by you for your personal research use or educational purposes in electronic or print form. Permission for any other use must be obtained from the Arts and Humanities Data Service.

Electronic or print copies may not be offered, whether for sale or otherwise, to any third party.

by Tony McEnery and Andrew Hardie; published by Cambridge University Press, 2012


Annotated versus unannotated corpora

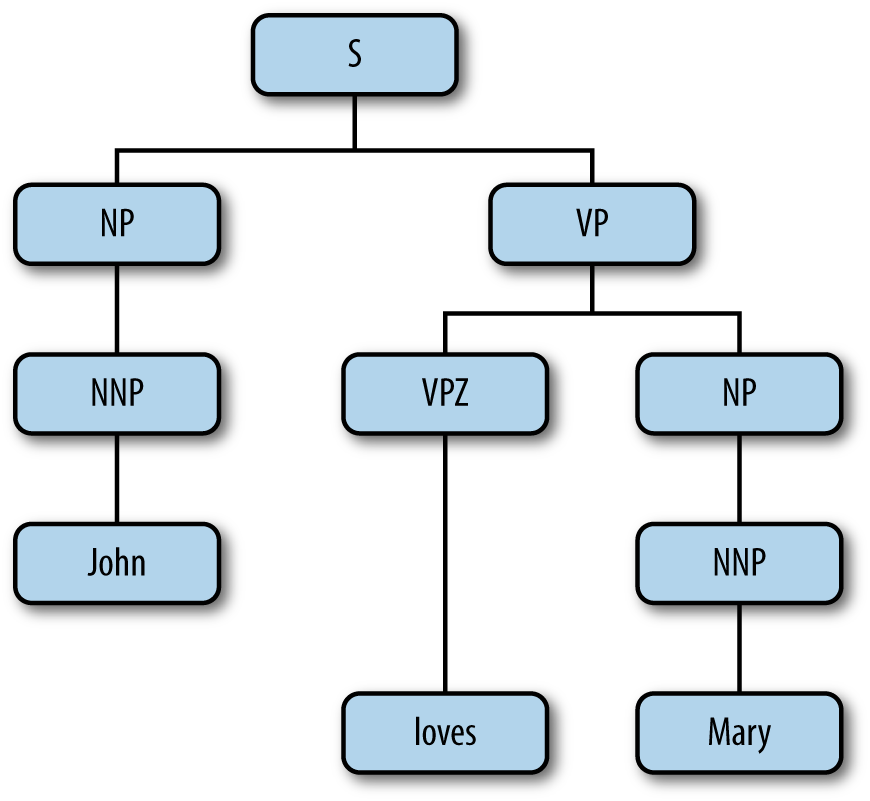

The tree diagram – a commonplace of (corpus) linguistics!

What is corpus annotation?

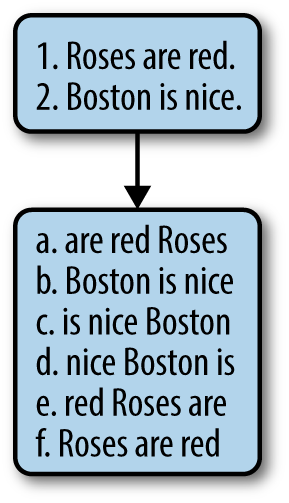

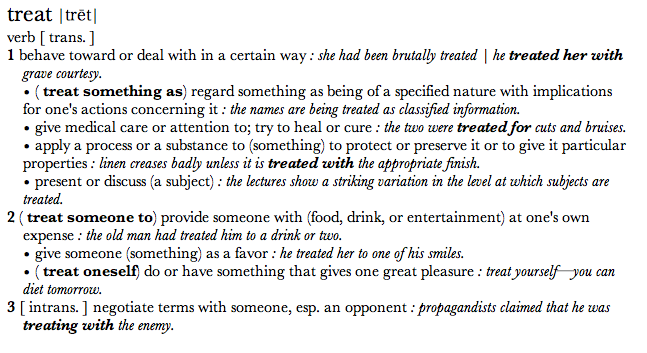

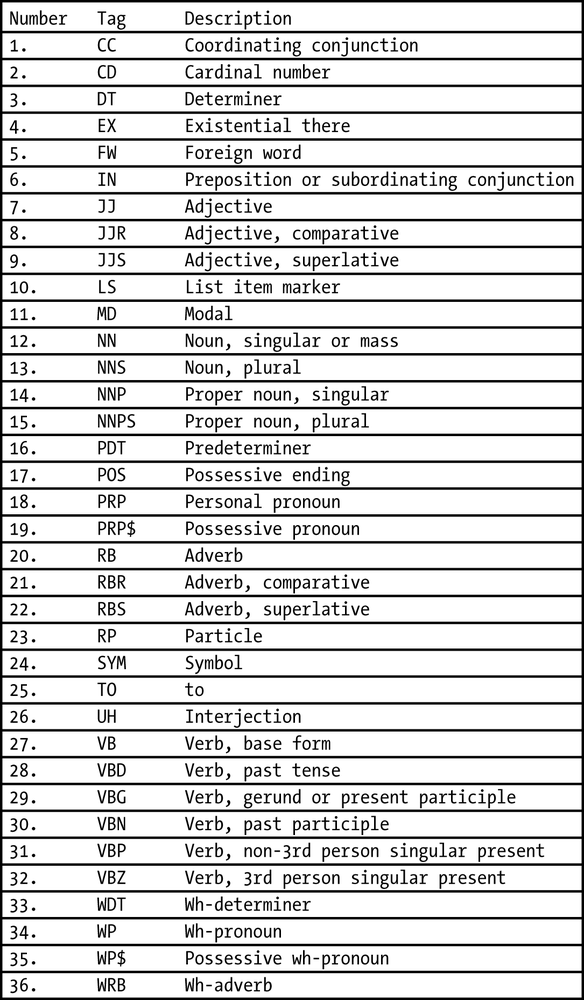

Linguistic analyses encoded in the corpus data itself are usually called *corpus annotation*. For example, we may wish to annotate a corpus to show parts of speech, assigning to each word a grammatical category label. So when we see the word *talk* in the sentence *I heard John's talk and it was the same old thing*, we would assign it the category noun in that context. This would often be done using some mnemonic code or tag such as N.
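As a minimal sketch of what it means to encode an analysis in the data itself, the example sentence can be stored as (word, tag) pairs and rendered in an underscore-joined word_TAG format. The tags are hypothetical mnemonics chosen for illustration, not a real tagset such as C5 or C7, and splitting *John's* into two tokens is likewise an assumption.

```python
# Hypothetical mnemonic tags (illustration only, not a real tagset):
# PRON pronoun, V verb, NP proper noun, POSS possessive marker,
# N noun, CONJ conjunction, DET determiner, ADJ adjective.
sentence = [
    ("I", "PRON"), ("heard", "V"), ("John", "NP"), ("'s", "POSS"),
    ("talk", "N"), ("and", "CONJ"), ("it", "PRON"), ("was", "V"),
    ("the", "DET"), ("same", "ADJ"), ("old", "ADJ"), ("thing", "N"),
]


def to_horizontal(tokens):
    """Render (word, tag) pairs as a single word_TAG line."""
    return " ".join(f"{word}_{tag}" for word, tag in tokens)


def find(tokens, word, tag):
    """Return the token positions where `word` occurs with `tag`."""
    return [i for i, (w, t) in enumerate(tokens) if w == word and t == tag]


print(to_horizontal(sentence))
print(find(sentence, "talk", "N"))  # talk used as a noun
print(find(sentence, "talk", "V"))  # talk used as a verb (none here)
```

Once the tags are part of the data, a search can separate *talk* the noun from *talk* the verb, which a plain word search cannot do.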

While the phrase *corpus annotation* may be unfamiliar, the basic operation it describes is not: it is just like the analyses of data that have been done using hand, eye, and pen for decades. What differs is mainly the scale: in Chomsky (1965), 24 invented sentences are analysed, whereas in the parsed version of LOB, a million words are annotated with parse trees. So corpus annotation is largely the process of recording commonplace analyses in a systematic and accessible form.

Annotating data: how to get started

If you are interested in experimenting with automatic annotation for yourself, there are online systems that will allow you to try this out without having to install any software on your own computer.

You can try out grammatical tagging of a small-to-medium text using the web interface to the CLAWS tagger (below). This tagger, created by UCREL at Lancaster University, is the software that was used to tag the BNC. It can be set to use either of two tagsets, the standard C7 and the less complex C5.

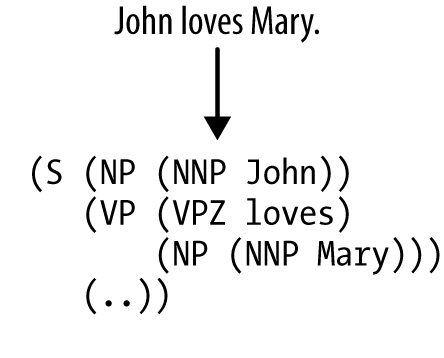

A more complex form of grammatical annotation is parsing. One easy way to try out parsing is to use the online Stanford Parser. This program does two different types of parsing, dependency parsing and constituency parsing, and is also openly available to download and use on your own computer.
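A hand-built sketch may help show how the two kinds of analysis differ, using the invented sentence *John heard the talk*. A constituency analysis groups words into nested, labelled phrases, whereas a dependency analysis links each word to the word it depends on. The data structures and the relation labels (nsubj, obj and det, borrowed from the Universal Dependencies scheme) are assumptions for illustration; they are not the Stanford Parser's own output format.

```python
# Constituency analysis: nested (label, child, child, ...) tuples;
# leaves are plain word strings.
constituency = ("S", ("NP", "John"), ("VP", "heard", ("NP", "the", "talk")))

# Dependency analysis: (head, relation, dependent) triples with
# UD-style labels. Words stand in for token positions here, which is
# only safe because no word repeats in this sentence.
dependencies = [
    ("heard", "nsubj", "John"),
    ("heard", "obj", "talk"),
    ("talk", "det", "the"),
]


def bracket(node):
    """Render a constituency tree in labelled-bracket notation."""
    if isinstance(node, str):
        return node
    label, *children = node
    return "(" + label + " " + " ".join(bracket(c) for c in children) + ")"


def dependents_of(head, triples):
    """Return (relation, dependent) pairs for every word that depends on head."""
    return [(rel, dep) for h, rel, dep in triples if h == head]


print(bracket(constituency))
print(dependents_of("heard", dependencies))
```

The bracketed form records which words make up each phrase, while the triples record only word-to-word relations, with no phrase nodes at all.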

The Constraint Grammar system is a combined tool that not only part-of-speech tags text but also lemmatises and dependency-parses it. You can try out Constraint Grammar-based taggers and parsers for English on the web here or here.

This page was last modified on Monday 31 October 2011 at 5:20 am.


Department of Linguistics and English Language, Lancaster University, United Kingdom


A newer edition of this book is available.


24 Corpus Linguistics

Tony McEnery is Distinguished Professor of English Language and Linguistics at Lancaster University. He is the author of many books and papers on corpus linguistics, including Corpus Linguistics: Method, Theory and Practice (with Andrew Hardie, CUP, 2011). He was founding Director of the ESRC Corpus Approaches to Social Science (CASS) Centre, which was awarded the Queen's Anniversary Prize for its work on corpus linguistics in 2015.

- Published: 18 September 2012


Corpus data have emerged as the raw data and benchmark for several NLP applications. A corpus is a large body of linguistic evidence composed of attested language use. It may be contrasted with sentences constructed through metalinguistic reflection upon language use rather than produced through communication in context. Corpora can be spoken or written. They can be categorized as monolingual, representing one language; comparable, using multiple monolingual corpora to create a comparative framework; or parallel, in which a corpus in one language is collected together with translations of its data into other languages. The choice of corpus depends on the research question or the chosen application. A corpus can be enhanced by adding linguistic information, known as annotation, which may be carried out by human analysts, by machine, or by a combination of the two. The modern computerized corpus has been in vogue only since the 1940s. Since then, the volume of corpus data has risen steadily, and corpora have become increasingly multilingual.

This chapter gives an overview of the use of corpora in natural language processing (NLP). After defining what a corpus is and briefly reviewing the history of corpus linguistics, the chapter focuses on corpus annotation. This is followed by a brief survey of existing corpora, taking into account the types of annotation present in each. The chapter concludes by considering the use of corpora, both annotated and unannotated, in a range of NLP systems.

24.1 Introduction

Corpus data are, for many applications, the raw fuel of NLP, and/or the testbed on which an NLP application is evaluated. In this chapter the history of corpus linguistics is briefly considered. Following on from this, corpus annotation is introduced as a prelude to a discussion of some of the uses of corpus data in NLP. But before any of this can be done, we need to ask: what is a corpus?

24.2 What is a Corpus?

A corpus (pl. corpora, though corpuses is perfectly acceptable) is simply described as a large body of linguistic evidence typically composed of attested language use. One may contrast this form of linguistic evidence with sentences created not as a result of communication in context, but rather on the basis of metalinguistic reflection upon language use, a type of data common in the generative approach to linguistics. Corpus data are not composed of the ruminations of theorists. They are composed of such varied material as everyday conversations (e.g. the spoken section of the British National Corpus¹), radio news broadcasts (e.g. the IBM/Lancaster Spoken English Corpus), published writing (e.g. the majority of the written section of the British National Corpus) and the writing of young children (e.g. the Leverhulme Corpus of Children's Writing). Such data are collected together into corpora which may be used for a range of research purposes. Typically these corpora are machine readable, since trying to exploit a paper-based linguistic resource or audio recording running into millions of words is impractical. So while corpora could be paper based, or even simply sound recordings, the view taken here is that corpora are machine readable.

In this chapter the focus will be upon the use of corpora in NLP. But it is worth noting that one of the immense benefits of corpus data is that they may be used for a wide range of purposes in a number of disciplines. Corpora have uses in both linguistics and NLP, and are of interest to researchers from other disciplines, such as literary stylistics (Short, Culpeper, and Semino 1996). Corpora are multifunctional resources.

With this stated, a slightly more refined definition of a corpus is needed than that which has been introduced so far. It has been established that a corpus is a collection of naturally occurring language data. But is any collection of language data, from three sentences to three million words of data, a corpus? The term corpus should properly only be applied to a well-organized collection of data, collected within the boundaries of a sampling frame designed to allow the exploration of a certain linguistic feature (or set of features) via the data collected. A sampling frame is of crucial importance in corpus design. Sampling is inescapable. Unless the object of study is a highly restricted sublanguage or a dead language, it is quite impossible to collect all of the utterances of a natural language together within one corpus. As a consequence, the corpus should aim for balance and representativeness within a specific sampling frame, in order to allow a particular variety of language to be studied or modelled. The best way to explain these terms is via an example. Imagine that a researcher has the task of developing a dialogue manager for a planned telephone ticket selling system and decides to construct a corpus to assist in this task. The sampling frame here is clear—the relevant data for the planned corpus would have to be drawn from telephone ticket sales. It would be quite inappropriate to sample the novels of Jane Austen or face-to-face spontaneous conversation in order to undertake the task of modelling telephone-based transactional dialogues. Within the domain of telephone ticket sales there may be a number of different types of tickets sold, each of which requires distinct questions to be asked. Consequently, we can argue that there are various linguistically distinct categories of ticket sales. 
So the corpus is balanced by including a wide range of types of telephone ticket sales conversations within it, with the types organized into coherent subparts (for example, train ticket sales, plane ticket sales, and theatre ticket sales). Finally, within each of these categories there may be little point in recording only one conversation, or even the conversations of only one operator taking calls. If one records only one conversation, it may be highly idiosyncratic. If one records only the calls taken by one operator, one cannot be sure that they are typical of all operators. Consequently, the corpus aims for representativeness by including a range of speakers, so that idiosyncrasies may be averaged out.
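
The design in this example can be sketched as a stratified sample: each category of ticket sale is a stratum, and a cap on calls per operator stops any one operator dominating a stratum. This is a minimal sketch under stated assumptions; the call records, category names and quota values below are hypothetical, and a real design would also have to decide how large each stratum should be relative to the others.

```python
import random
from collections import defaultdict


def stratified_sample(calls, per_category, max_per_operator, seed=0):
    """Select up to `per_category` calls from each category, taking at
    most `max_per_operator` calls from any single operator."""
    rng = random.Random(seed)
    by_category = defaultdict(list)
    for call in calls:
        by_category[call["category"]].append(call)

    sample = {}
    for category, pool in by_category.items():
        pool = list(pool)              # shuffle a copy, not the original list
        rng.shuffle(pool)
        chosen = []
        per_operator = defaultdict(int)
        for call in pool:
            if len(chosen) == per_category:
                break
            if per_operator[call["operator"]] < max_per_operator:
                chosen.append(call)
                per_operator[call["operator"]] += 1
        sample[category] = chosen
    return sample


# Hypothetical pool: 60 recorded calls, three categories, four operators.
calls = [
    {"id": i, "category": category, "operator": f"op{i % 4}"}
    for i, category in enumerate(["train", "plane", "theatre"] * 20)
]
sample = stratified_sample(calls, per_category=6, max_per_operator=2)
```

Capping calls per operator is one simple way of guarding against the idiosyncrasies described above; a fuller design might also stratify by speaker characteristics.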

24.2.1 Monolingual, comparable, and parallel corpora

So, a corpus is a body of machine-readable linguistic evidence, which is collected with reference to a sampling frame. There are important variations on this theme, however. So far the focus has been upon monolingual corpora—corpora representing one language. Comparable corpora are corpora where a series of monolingual corpora are collected for a range of languages, preferably using the same sampling frame and with similar balance and representativeness, to enable the study of those languages in contrast. Parallel corpora take a slightly different approach to the study of languages in contrast, gathering a corpus in one language and then translations of that corpus data into one or more languages. Parallel and comparable corpora may appear rather similar when first encountered, but the data they are composed of are significantly different. If the main focus of a study is on contrastive linguistics, comparable corpora are preferable, as, for example, the process of translation may influence the forms of a translation, with features of the source language carried over into the target language (Schmied and Fink 2000). If the interest in using the corpus is to gain translation examples for an application such as example-based machine translation (see Chapter 28), then the parallel corpus, used in conjunction with a range of alignment techniques (Botley, McEnery, and Wilson 2000; Véronis 2000), offers just such data.
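
Alignment techniques differ, but one well-known family aligns sentences by length alone, on the assumption that a long sentence tends to be translated by a long sentence. The sketch below is a simplified aligner in that spirit, not any published cost model: it uses dynamic programming over 1-1, 1-0, 0-1, 2-1 and 1-2 groupings of sentences, scores a pairing by the relative difference in character length, and charges fixed, arbitrarily chosen penalties for unmatched sentences and for merges. The example sentences are invented.

```python
def align(src, tgt, skip_penalty=1.0, merge_penalty=0.3):
    """Align two lists of sentences by length using dynamic programming.
    Returns a list of (source_indices, target_indices) tuples."""
    def length_cost(s_len, t_len):
        # relative length mismatch: 0.0 when the lengths are equal
        return abs(s_len - t_len) / max(s_len, t_len, 1)

    n, m = len(src), len(tgt)
    inf = float("inf")
    best = [[inf] * (m + 1) for _ in range(n + 1)]
    back = [[None] * (m + 1) for _ in range(n + 1)]
    best[0][0] = 0.0
    moves = [(1, 1), (1, 0), (0, 1), (2, 1), (1, 2)]

    # Every move increases the indices, so row-major order visits each
    # cell only after all of its possible predecessors.
    for i in range(n + 1):
        for j in range(m + 1):
            if best[i][j] == inf:
                continue
            for di, dj in moves:
                ni, nj = i + di, j + dj
                if ni > n or nj > m:
                    continue
                if di == 0 or dj == 0:
                    cost = skip_penalty          # sentence left unmatched
                else:
                    s_len = sum(len(s) for s in src[i:ni])
                    t_len = sum(len(t) for t in tgt[j:nj])
                    cost = length_cost(s_len, t_len)
                    if di + dj > 2:
                        cost += merge_penalty    # 2-1 or 1-2 grouping
                if best[i][j] + cost < best[ni][nj]:
                    best[ni][nj] = best[i][j] + cost
                    back[ni][nj] = (i, j)

    # Trace back from the end to recover the aligned groups.
    beads, i, j = [], n, m
    while (i, j) != (0, 0):
        pi, pj = back[i][j]
        beads.append((tuple(range(pi, i)), tuple(range(pj, j))))
        i, j = pi, pj
    return beads[::-1]


src = ["The cat sat.", "It was happy."]
tgt = ["Le chat était assis.", "Il était content."]
beads = align(src, tgt)
```

Length-based alignment needs no bilingual dictionary, which is why approaches of this kind are often used as a first pass before word-level alignment.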

24.2.2 Spoken corpora

Whether the corpus is monolingual, comparable, or parallel, the corpus may also be composed of written language, spoken language, or both. With spoken language some important variations in corpus design come into play. The spoken corpus could in principle exist as a set of audio recordings only (for example, the Survey of English Dialects existed in this form for many years). At the other extreme, the original sound recordings of the corpus may not be available at all, and an orthographic transcription of the corpus could be the sole source of data (as is the case with the spoken section of the British National Corpus 2). Both of these scenarios have drawbacks. If the corpus exists only as a sound recording, such data are difficult to exploit, even in digital form. It is currently problematic for a machine to search, say, for the word apple in a recording of spontaneous conversation in which a whole range of different speakers is represented. On the other hand, while an orthographic transcription is useful for retrieval purposes—retrieving word forms from a machine-readable corpus is typically a trivial computational task—many important acoustic features of the original data are lost, e.g. prosodic features and variations in pronunciation. 3 As a consequence of both of these problems, spoken corpora have been built which combine a transcription of the corpus data with the original sound recording, so that one is able to retrieve words from the transcription, but then also retrieve the original acoustic context of the production of the word via a process called time alignment (Roach and Arnfield 1995). Such corpora are now becoming increasingly common.
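
Time alignment can be pictured as storing, for every transcribed word, the start and end times of that word in the recording. The sketch below assumes a hypothetical in-memory representation (a list of word/start/end records per recording, with times in seconds); real time-aligned corpora use their own file formats. Searching the transcription then yields the stretches of audio to play back.

```python
from dataclasses import dataclass


@dataclass
class AlignedWord:
    word: str
    start: float  # seconds from the beginning of the recording
    end: float


def find_in_audio(recordings, target):
    """Return (recording_id, start, end) for every token of `target`
    in the transcriptions, matched case-insensitively."""
    hits = []
    for rec_id, words in recordings.items():
        for w in words:
            if w.word.lower() == target.lower():
                hits.append((rec_id, w.start, w.end))
    return hits


# Hypothetical time-aligned transcriptions of two recordings.
recordings = {
    "conv01": [AlignedWord("an", 0.00, 0.12),
               AlignedWord("apple", 0.12, 0.55),
               AlignedWord("please", 0.55, 1.02)],
    "conv02": [AlignedWord("Apple", 3.40, 3.81),
               AlignedWord("pie", 3.81, 4.10)],
}

hits = find_in_audio(recordings, "apple")
```

The text search is trivial; the value lies in the time stamps, which let a user jump straight to the relevant audio.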

24.2.3 Research questions and corpora

The choice of corpus to be used in a study depends upon the research questions being asked of the corpus, or the applications one wishes to base upon the corpus. Yet whether the corpus is monolingual, comparable or parallel, within the sampling frame specified for the corpus, the corpus should be designed to be balanced and representative. 4 With this stated, let us now move to a brief overview of the history of corpus linguistics before introducing a further refinement to our definition of a corpus—the annotated versus the unannotated corpus.

24.3 A History of Corpus Linguistics

Outlining a history of corpus linguistics is difficult. In its modern, computerized form, the corpus has only existed since the late 1940s. The basic idea of using attested language use for the study of language clearly pre-dated this time, but the gathering and use of large volumes of linguistic data in the pre-computer age was so difficult as to be almost impossible. There were examples of it being achieved through the deployment of vast workforces, Kaeding 1897 being a notable one. Yet in reality, corpus linguistics in the form that we know it today, where any PC user can, with relative ease, exploit corpora running into millions of words, is a very recent phenomenon.

The crucial link between computers and the manipulation of large bodies of linguistic evidence was forged by Bussa in the late 1940s (Bussa 1980). During the 1950s the first large project in the construction of comparable corpora was undertaken by Juilland (see, for example, Juilland and Chang-Rodriguez 1964), who also articulated clearly the concepts behind the ideas of the sampling frame, balance, and representativeness. English corpus linguistics took off in the late 1950s, with work in America on the Brown corpus (Francis 1979) and work in Britain on the Survey of English Usage (Quirk 1960). Work in English corpus linguistics in particular grew throughout the 1960s, 1970s, and 1980s, with significant milestones such as a corpus of transcribed spoken language (Svartvik and Quirk 1980), a corpus with manual encodings of part-of-speech information (Francis 1979), and a corpus with reliable automated encodings of parts of speech (Garside, Leech, and Sampson 1987) being reached in this period. During the 1980s, the number of corpora available steadily grew, as did the size of those corpora. This trend became clear in the 1990s, with corpora such as the British National Corpus and the Bank of English reaching vast sizes (100,000,000 words and 300,000,000 words of modern British English respectively) which would have been for all practical purposes impossible in the pre-electronic age. The other trend that became noticeable during the 1990s was the increasingly multilingual nature of corpus linguistics, with monolingual corpora becoming available for a range of languages, and parallel corpora coming into widespread use (McEnery and Oakes 1996; Botley, McEnery, and Wilson 2000; Véronis 2000).

In conjunction with this growth in corpus data, fuelled in part by expanding computing power, came a range of technical innovations. For example, schemes for systematically encoding corpus data came into being (Sperberg-McQueen and Burnard 1994), programs were written to allow the manipulation of ever larger data sets (e.g. Sara), and work began in earnest to represent the audio recording of a transcribed spoken corpus text in tandem with its transcription. Possible future developments in corpus linguistics are too numerous to mention in detail here (see McEnery and Wilson 2001 for a fuller discussion). What can be said, however, is that as personal computing technology develops yet further, we can expect research questions that cannot be addressed with corpus data at present to become tractable, as new types of corpora are developed and new programs to exploit these new corpora are written.

One area which has only been touched upon here, but which has been a major area of innovation in corpus linguistics in the past and which will undoubtedly remain so in the future, is corpus annotation. In the next section corpus annotation will be discussed in some depth, as it is an area where corpus linguistics and NLP often interact, as will be shown in section 24.6.

24.4 Corpus Annotation

24.4.1 What is corpus annotation?

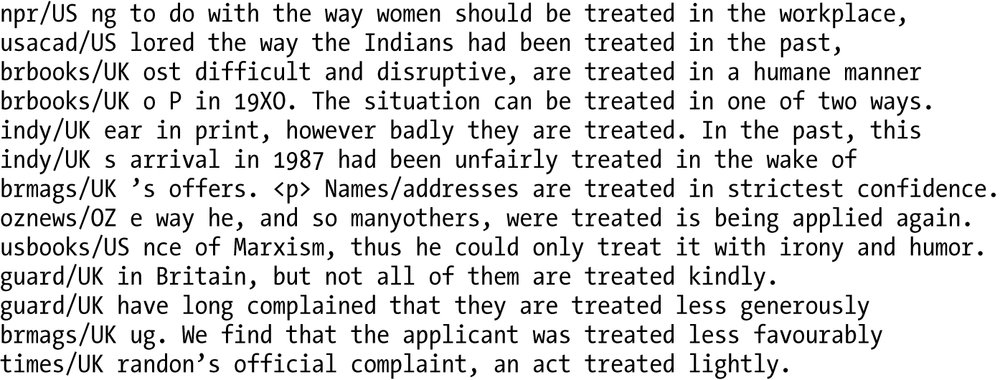

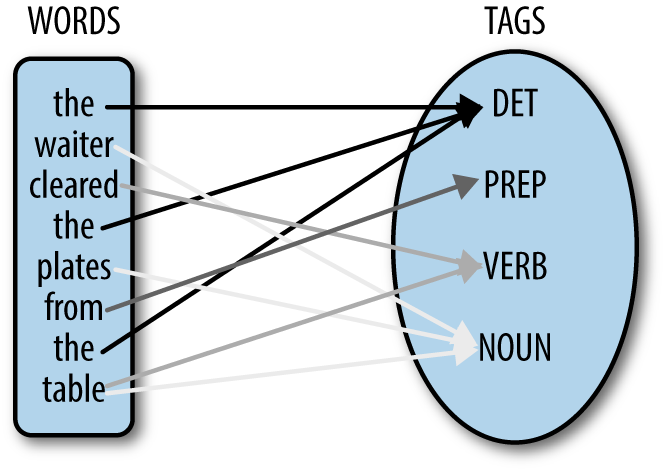

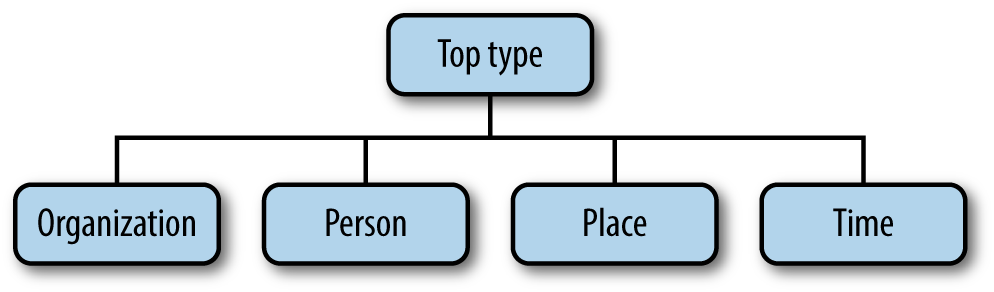

McEnery and Wilson (1996: 24) describe annotated corpora as being ‘enhanced with various types of linguistic information’. This enhancement is achieved by analysts, whether they be humans, computers, or a mixture of both, imposing a linguistic interpretation upon a corpus. Typically this analysis is encoded by reference to a specified range of features represented by textual mnemonics which are introduced into the corpus. These mnemonics seek to link sections of the text to units of linguistic analysis. So, for example, in the case of introducing a part-of-speech analysis to a text, textual mnemonics are generally placed in a one-to-one relationship with the words in the text. 5
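
One common way of placing part-of-speech mnemonics in a one-to-one relationship with words is to attach a tag to each word with an underscore, a convention assumed here of the kind found in CLAWS-style horizontal output. The sketch below uses C5-style tags on a made-up sentence; it splits each token at its last underscore so that a word containing an underscore is not broken, and then retrieves the nouns by tag alone.

```python
def parse_tagged(text):
    """Split 'word_TAG word_TAG ...' into (word, tag) pairs."""
    pairs = []
    for token in text.split():
        word, sep, tag = token.rpartition("_")
        if not sep:  # no underscore: the token carries no tag
            raise ValueError(f"untagged token: {token!r}")
        pairs.append((word, tag))
    return pairs


tagged = "The_AT0 cat_NN1 sat_VVD on_PRP the_AT0 mat_NN1 ._PUN"
pairs = parse_tagged(tagged)

# Retrieval by annotation alone: every word whose tag marks a noun.
nouns = [word for word, tag in pairs if tag.startswith("NN")]
```

Because each tag is tied to exactly one word, retrieving all nouns reduces to filtering on the tag, with no linguistic analysis performed by the program itself.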

24.4.1.1 Enrichment, interpretation, and imposition

In essence corpus annotation is the enrichment of a corpus in order to aid the process of corpus exploitation. Note that the corpus is not necessarily enriched from the viewpoint of the expert human analyst—corpus annotation only makes explicit what is implicit; it does not introduce new information. For any level of linguistic information encoded explicitly in a corpus, the information that a linguist can extract from the corpus by means of a hand and eye analysis will hopefully differ little, except in terms of the speed of analysis, from that contained in the annotation. The enrichment benefits users who need linguistic analyses but are not in a position to provide them. This covers both humans who lack the metalinguistic ability to impose a meaningful linguistic analysis upon a text and computers, which may also lack the ability to impose such analyses.

Key words in describing the process of corpus annotation are imposition and interpretation. Given any text, there is bound to be a plurality of analyses for any given level of interpretation that one may wish to undertake. This plurality arises from the fact that there is often an allowable degree of variation in linguistic analyses, arising at least in part from ambiguities in the data and fuzzy boundaries between categories of analysis in any given analytical scheme. Corpus annotation typically represents one of a variety of possible analyses, and imposes that analysis consistently upon the corpus text.

24.4.2 What are the advantages of corpus annotation?

In the preceding sections, some idea of why we may wish to annotate a corpus has already emerged. In this section I want to detail four specific advantages of corpus annotation as a prelude to discussing the process of corpus annotation in the context of criticisms put forward against it to date. Key advantages of corpus annotation are ease of exploitation, reusability, multi-functionality, and explicit analyses.

24.4.2.1 Ease of exploitation

This is a point which we have considered briefly already. With an annotated corpus, the range and speed of corpus exploitation increase. Considering the range of exploitation, an annotated corpus can be used by a wider range of users than an unannotated corpus. For example, even if I cannot speak French, given an appropriately annotated corpus of French I can retrieve all of the nouns in it. Similarly, even if a computer is not capable of parsing a sentence, given a parsed treebank and appropriate retrieval software, it can retrieve noun phrases from that corpus. Corpus annotation enables humans and machines to exploit and retrieve analyses of which they are not themselves capable.
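
The treebank case can be made concrete. Assuming Penn Treebank-style labelled bracketing, such as `(NP (DT the) (NN dog))`, a short program can list every noun phrase without being able to parse anything itself: it only reads brackets an analyst has already supplied. The tree is a made-up example, and the reader is a minimal sketch rather than a full treebank reader (it does not, for instance, handle an unlabelled outermost bracket).

```python
import re


def parse_tree(s):
    """Read a bracketed tree into nested (label, children) tuples;
    leaves (the words) are plain strings."""
    tokens = re.findall(r"\(|\)|[^\s()]+", s)
    pos = 0

    def node():
        nonlocal pos
        if tokens[pos] != "(":
            raise ValueError("expected '('")
        label = tokens[pos + 1]
        pos += 2
        children = []
        while tokens[pos] != ")":
            if tokens[pos] == "(":
                children.append(node())
            else:
                children.append(tokens[pos])
                pos += 1
        pos += 1  # consume the closing ")"
        return (label, children)

    return node()


def leaves(tree):
    if isinstance(tree, str):
        return [tree]
    return [word for child in tree[1] for word in leaves(child)]


def find_phrases(tree, label):
    """Yield the words of every constituent carrying `label`."""
    if isinstance(tree, str):
        return
    if tree[0] == label:
        yield " ".join(leaves(tree))
    for child in tree[1]:
        yield from find_phrases(child, label)


tree = parse_tree("(S (NP (DT the) (NN dog)) (VP (VBD chased) (NP (DT a) (NN cat))))")
nps = list(find_phrases(tree, "NP"))
```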

Moving to the speed of corpus exploitation, even where users are capable of undertaking the range of analyses encoded within an annotated corpus, they are able to exploit an analysis encoded within a corpus 6 swiftly and reliably, without having to repeat it themselves.

24.4.2.2 Reusability

Annotated corpora also have the merit of allowing analyses to be exploited over and over again, as noted by Leech (1997: 5). Rather than an analysis being performed for a specific purpose and then discarded, corpus annotation records the analysis, which is then available for reuse.

24.4.2.3 Multi-functionality

An analysis originally annotated within a corpus may have been undertaken with one specific purpose in mind. When reused, however, the purpose of the corpus exploitation may be quite different from that originally envisaged. So as well as being reusable, corpus analyses can also be put to a wide range of uses.

24.4.2.4 Explicit analyses

A final advantage I would outline for corpus annotation is that it is an excellent means by which to make an analysis explicit. As well as promoting reuse, a corpus analysis stands as a clear objective record of the analysis imposed upon the corpus by the analyst/analysts responsible for the annotation. As we will see shortly, this clear benefit has actually been miscast as a drawback of corpus annotation in the past.

24.4.3 How corpus annotation is achieved

Corpus annotation may be achieved entirely automatically, by a semi-automated process, or entirely manually. To cover each in turn, some NLP tools, such as lemmatizers and part-of-speech taggers, are now so reliable for languages such as English, French, and Spanish 7 that we may consider a wholly automated approach to their annotation (see Chapter 11 for a more detailed review of part-of-speech tagging). While using wholly automated procedures does inevitably mean a rump of errors in a corpus, the error rates associated with taggers such as CLAWS (Garside, Leech, and Sampson 1987) are low, typically being reported at around 3 per cent. Where such a rate of error in analysis is acceptable, corpus annotation may proceed without human intervention.
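
What such a rump of errors amounts to can be estimated with a back-of-the-envelope calculation. Assuming, for illustration only, a uniform 3 per cent error rate over a corpus the size of the BNC (100,000,000 words) and, more crudely, that errors fall independently on each word, the sketch below estimates how many mis-tagged words would remain and how often a 20-word sentence would come through with no error at all.

```python
def expected_errors(n_words, error_rate):
    """Expected number of mis-tagged words, assuming a uniform error rate."""
    return round(n_words * error_rate)


def error_free_probability(sentence_length, error_rate):
    """Chance that a sentence contains no tagging error, assuming errors
    fall independently on each word (a simplifying assumption)."""
    return (1 - error_rate) ** sentence_length


errors_in_bnc_sized_corpus = expected_errors(100_000_000, 0.03)  # 3000000
p_clean_20_word_sentence = error_free_probability(20, 0.03)      # about 0.54
```

On these assumptions some three million words would carry a wrong tag, and only a little over half of all 20-word sentences would be entirely error-free. Real errors cluster around genuinely ambiguous words, so the second figure is only indicative.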

More typically, however, NLP tools are not accurate enough to allow fully automated annotation. Yet they may be sufficiently accurate that correcting the annotations introduced by them is faster than undertaking the annotation entirely by hand. This was the case in the construction of the Penn Treebank (Marcus, Santorini, and Marcinkiewicz 1993), where the constituent structure of the corpus was first annotated by a computer and then corrected by human analysts. Another scenario where a mixture of machine and human effort occurs is where NLP tools which are usually sufficiently accurate, such as part-of-speech taggers, are not accurate enough because highly accurate annotation is required. This is the case, for example, in the core corpus of the British National Corpus. Here the core corpus (one million words of written English and one million words of spoken English) was first automatically part-of-speech annotated, and then hand corrected by expert human analysts.
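
The semi-automatic procedure described above can be sketched as a merge of a tagger's output with a human corrector's decisions. This is a minimal sketch: the tags are C5-style, and representing corrections as a mapping from token positions to tags is an assumption rather than the format of any real post-editing tool. Logging each change also gives a rough measure of how much of the automatic output the human had to alter.

```python
def apply_corrections(auto_tags, corrections):
    """Merge a human corrector's changes into automatically assigned tags.

    `corrections` maps a token position to the tag the corrector chose.
    Returns the corrected tag list and a log of (position, old, new)."""
    corrected = list(auto_tags)
    log = []
    for position, new_tag in sorted(corrections.items()):
        old_tag = corrected[position]
        if old_tag != new_tag:
            corrected[position] = new_tag
            log.append((position, old_tag, new_tag))
    return corrected, log


# Hypothetical tagger output for "Time flies like an arrow".
auto = ["NN1", "NN2", "VVB", "AT0", "NN1"]
fixes = {1: "VVZ", 2: "PRP"}   # the corrector's decisions
corrected, log = apply_corrections(auto, fixes)
correction_rate = len(log) / len(auto)   # share of tags the human changed
```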

Pure manual annotation occurs where no NLP application is available to a user, or where the accuracy of available systems is not high enough to make the time invested in manual correction less than that required for pure manual annotation. An example of purely manual annotation is the construction of corpora encoding anaphoric and cataphoric references (Botley and McEnery 2000; Mitkov 2002). It should be noted that in real terms, considering the range of possible annotations we may want to introduce into corpus texts, most would have to be introduced manually or at best semi-manually.

24.4.4 Criticisms of corpus annotation

Two main criticisms of corpus annotation have surfaced over the past decade or so. I believe it is quite safe to dismiss both, but for purposes of clarity let us spell out the criticisms and counter them here.

24.4.4.1 Corpus annotations produce impure corpora

The first criticism to be levelled at corpus annotation was that it somehow sullied the unannotated corpus by imposing an interpretation on the data. The points to be made against this are simple. First, in imposing one analysis, there is no constraint upon the user of the corpus to use that analysis—they may impose one of their own. The plurality of interpretations of a text is something that must be accepted from the outset. Second, choosing not to record an interpretation via annotation does not change the fact that interpretations still occur when raw corpora are used. The interpretations imposed by corpus annotations have the advantage that they are objectively recorded and open to scrutiny. The interpretations of those who choose not to annotate corpus data remain fundamentally more obscure than those recorded clearly in a corpus. Bearing these two points in mind, the fundamental weakness of this criticism of corpus annotation is plain to see.

24.4.4.2 Consistency versus accuracy

The second criticism, presented by Sinclair (1992), is not a criticism of corpus annotation as such. Rather, it is a criticism of two of the practices of corpus annotation we have just examined—manual and semi-automatic corpus annotation. The argument is subtle, and worth considering seriously. It is centred upon two related notions: accuracy and consistency. When a part-of-speech tagger annotates a text and is 97 per cent accurate, its analysis should be 100 per cent consistent, i.e. given the same set of decision-making conditions in two different parts of the corpus, the answer given is the same. This consistency derives from the machine's impartial and unswerving application of a program. Can we expect the same consistency of analysis from human annotators? As we have discussed already, there is a plurality of analyses possible for any given annotation. Consequently, when human beings are imposing an interpretation upon a text, can we assume that their analysis is 100 per cent consistent? May it not be the case that they produce analyses which, when viewed from several points of view, are highly accurate, but which, when viewed from one analytical viewpoint, are not as accurate? The annotation of a corpus may thus be deemed accurate while simultaneously being highly inconsistent.

This argument is potentially quite damaging to the practice of corpus annotation, especially when we consider that most hand analyses are carried out by teams of annotators, which amplifies the possibility of inconsistency. As a result of such arguments, annotation teams around the world have carried out experiments (Marcus, Santorini, and Marcinkiewicz 1993; Voutilainen and Järvinen 1995; Baker 1997) to examine the validity of this criticism. No study to date has supported Sinclair's argument. Indeed, every study has shown that while the introduction of a human element to corpus annotation does mean a modest decline in the consistency of annotation within the corpus, this decline is more than offset by a related rise in the accuracy of the annotation. There is one important rider to add to this observation, however. All of the studies above, especially the studies of Baker 1997, have used teams of trained annotators—annotators who were well versed in the use of a particular annotation scheme, and who had long experience in working with lists of guidelines which helped their analyses to converge. It is almost certainly true, though as yet not validated experimentally, that given a set of analysts with no guidelines to inform their annotation decisions and no experience of teamwork, Sinclair's criticism would be more relevant. As it is, there is no reason to assume that Sinclair's criticisms of human-aided annotation should colour one's view of corpora produced with the aid of human grammarians, such as the French, English, and Spanish CRATER corpora (McEnery et al. 1997).
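
How accuracy and consistency can diverge may be illustrated with invented data. In the sketch below, accuracy is agreement with a reference analysis, and consistency uses a deliberately narrow, hypothetical stand-in for "the same set of decision-making conditions": the share of distinct (previous word, word) contexts that always receive the same tag. A program that maps each such context to one tag is consistent by construction; an annotator who draws on wider context can be more accurate yet score lower on this narrow measure. The tags are C5-style; none of this reproduces the measures used in the studies cited above.

```python
from collections import defaultdict


def accuracy(tags, reference):
    """Proportion of tokens whose tag matches the reference analysis."""
    return sum(t == r for t, r in zip(tags, reference)) / len(reference)


def consistency(contexts, tags):
    """Proportion of distinct contexts that always received one tag."""
    tags_by_context = defaultdict(set)
    for context, tag in zip(contexts, tags):
        tags_by_context[context].add(tag)
    return sum(len(s) == 1 for s in tags_by_context.values()) / len(tags_by_context)


# Invented data: "that" after "said" is usually a conjunction (CJT), but
# once it is a determiner (DT0), as in "she said that word twice".
contexts = [("said", "that")] * 3 + [("the", "book")] * 2
reference = ["CJT", "CJT", "DT0", "NN1", "NN1"]
machine = ["CJT", "CJT", "CJT", "NN1", "NN1"]   # one tag per context
human = ["CJT", "CJT", "DT0", "NN1", "NN1"]     # uses wider context

scores = {
    "machine": (accuracy(machine, reference), consistency(contexts, machine)),
    "human": (accuracy(human, reference), consistency(contexts, human)),
}
```

Here the machine scores 0.8 for accuracy and 1.0 for consistency, the human 1.0 and 0.5: the trade-off the studies above examined, though on real data and with better-founded measures.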

24.5 What Corpora are in Existence?

An increasing variety of annotated corpora are currently in existence. It should come as no surprise to discover that the majority of annotated corpora involve part-of-speech annotation and lemmatization, as these are procedures which can be undertaken largely automatically. Nonetheless, a growing number of hand-annotated corpora are becoming available. Table 24.1 seeks to show something of the range of annotated corpora of written language in existence. For more detail on the range and use of corpus annotation, see McEnery and Wilson (1996), 8 and Garside, Leech, and McEnery (1997).

Having now established the philosophical and practical basis for corpus annotation, and having reviewed the range of annotations related to written corpora, I would like to conclude this chapter by reviewing the practical benefits related to the use of annotated corpora in one field, NLP.

24.6 The Exploitation of Corpora in NLP

NLP is a rapidly developing area of study, which is producing working solutions to specified natural-language processing problems. The application of annotated corpora within NLP to date has resulted in advances in language processing—part-of-speech taggers such as CLAWS are an early example of how annotated corpora enabled the development of better language processing systems (see Garside, Leech, and Sampson 1987). Annotated corpora have allowed such developments to occur because they are unparalleled sources of quantitative data. To return to CLAWS, because the tagged Brown corpus was available, accurate transition probabilities could be extracted for use in the development of CLAWS. The benefits of these data are apparent when we compare the accuracy rate of CLAWS—around 97 per cent—to that of TAGGIT, used to develop the Brown corpus—around 77 per cent. This massive improvement can be attributed to the existence of annotated corpus data, which enabled CLAWS to disambiguate between multiple potential part-of-speech tag assignments in context.
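
Extracting transition probabilities from a tagged corpus amounts to counting how often each tag follows each other tag. The sketch below computes maximum-likelihood estimates of the probability of a tag given the previous tag from a toy C5-tagged corpus. It illustrates the kind of data a probabilistic tagger draws on and is not a description of how CLAWS itself estimates or uses its probabilities.

```python
from collections import Counter


def transition_probs(tagged_sentences):
    """Estimate P(tag | previous tag) by relative frequency.
    '<s>' marks the start of a sentence; a tag is counted as a
    'previous tag' only when another tag follows it."""
    bigrams, previous = Counter(), Counter()
    for sentence in tagged_sentences:
        prev = "<s>"
        for _, tag in sentence:
            bigrams[(prev, tag)] += 1
            previous[prev] += 1
            prev = tag
    return {(p, t): c / previous[p] for (p, t), c in bigrams.items()}


corpus = [
    [("the", "AT0"), ("dog", "NN1"), ("barks", "VVZ")],
    [("the", "AT0"), ("old", "AJ0"), ("dog", "NN1")],
    [("dogs", "NN2"), ("bark", "VVB")],
]
probs = transition_probs(corpus)
```

With only three sentences the estimates are crude (an article is followed by a noun or an adjective with probability 0.5 each); the point is that a large tagged corpus turns these counts into usable evidence for disambiguation.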

It is not simply part-of-speech tagging where quantitative data are of prime importance to disambiguation. Disambiguation is a key problem in a variety of areas such as anaphor resolution, parsing, and machine translation. It is beyond doubt that annotated corpora will have an important role to play in the development of NLP systems in the future, as can be seen from the burgeoning corpus-based NLP literature (LREC 2000).

Beyond the use of quantitative data derived from annotations as the basis of disambiguation in NLP systems, annotated corpora may also provide the raw fuel for various terminology extraction programs. Work has been developed in the area of automated terminology extraction which relies upon annotated corpora for its results (Daille 1995; Gausier 1995). So although disambiguation is an area where annotated corpora are having a key impact, there is ample scope for believing that they may be used in a wider variety of applications.
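
A linguistic filter of the kind used in terminology extraction can be run directly over part-of-speech annotation. The sketch below keeps adjacent adjective+noun and noun+noun pairs and ranks them by raw frequency. The two patterns, the frequency threshold and the toy C5-tagged data are assumptions for illustration; published approaches combine such filters with more sophisticated lexical statistics than a simple count.

```python
from collections import Counter

NOUN = {"NN0", "NN1", "NN2"}
MODIFIER = NOUN | {"AJ0"}


def candidate_terms(tagged_sentences, min_freq=2):
    """Count adjacent (adjective|noun) + noun pairs as candidate terms."""
    counts = Counter()
    for sentence in tagged_sentences:
        for (w1, t1), (w2, t2) in zip(sentence, sentence[1:]):
            if t1 in MODIFIER and t2 in NOUN:
                counts[(w1.lower(), w2.lower())] += 1
    return {" ".join(pair): c for pair, c in counts.items() if c >= min_freq}


corpus = [
    [("The", "AT0"), ("neural", "AJ0"), ("network", "NN1"), ("learns", "VVZ")],
    [("A", "AT0"), ("neural", "AJ0"), ("network", "NN1"), ("needs", "VVZ"),
     ("training", "NN1"), ("data", "NN0")],
    [("More", "DT0"), ("training", "NN1"), ("data", "NN0"), ("helps", "VVZ")],
]
terms = candidate_terms(corpus)
```

Without the part-of-speech annotation, the filter would have to guess which words are nouns; with it, the pattern match is a simple lookup.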

A further example of such an application may be called evidence-based learning. Until recently, language analysis programs almost exclusively relied on human intuition in the construction of their knowledge/rule base. Annotated corpora corrected or produced by humans, while still encoding human intuitions, situate those intuitions within a context where the computer can recover intuitions from use, and where humans can moderate their intuitions by application to real examples. Rather than having to rely on decontextualized intuitions, then, the computer learns from practice. The difference between human experts producing opinions about what they do out of context and their practice in context has long been understood in artificial intelligence—humans tend to be better at showing what they know than at explaining it, so to speak. The construction of an annotated corpus, therefore, allows us to overcome this known problem in communicating expert knowledge to machines, while simultaneously providing testbeds against which intuitions about language may be tested. Where machine learning algorithms are the basis for an NLP application, it is fair to say that corpus data are essential. Without them, machine learning-based approaches to NLP simply will not work.
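
In its crudest form, recovering analyses from practice means letting a program count what annotators actually did. The sketch below learns, for each word, the tag it most often received in a small invented C5-tagged corpus, and falls back to a default tag for unseen words. It is a deliberately simple baseline assumed here for illustration; practical learners also take context into account.

```python
from collections import Counter, defaultdict


def train_most_frequent_tag(tagged_sentences):
    """Learn, for each word, the tag annotators gave it most often."""
    counts = defaultdict(Counter)
    for sentence in tagged_sentences:
        for word, tag in sentence:
            counts[word.lower()][tag] += 1
    return {word: c.most_common(1)[0][0] for word, c in counts.items()}


def apply_tagger(model, words, default="NN1"):
    """Tag each word with its learned tag, or `default` if unseen."""
    return [(w, model.get(w.lower(), default)) for w in words]


training = [
    [("I", "PNP"), ("can", "VM0"), ("swim", "VVI")],
    [("You", "PNP"), ("can", "VM0"), ("go", "VVI")],
    [("a", "AT0"), ("tin", "NN1"), ("can", "NN1")],
]
model = train_most_frequent_tag(training)
tagged = apply_tagger(model, ["You", "can", "dance"])
```

The unseen word "dance" falls back to NN1, a reminder that such a learner is only as good as the coverage of the corpus it learns from.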

Another role which is emerging for the annotated corpus is as an evaluation testbed for NLP programs. Evaluation of language processing systems can be problematic when people are training systems with different analytical schemes and texts, and have different target analyses by which the system is to be judged. Using one annotated corpus as an agreed testbed for evaluation can greatly ease such problems, as it specifies the text type or types, the analytical scheme, and the results upon which the performance of a program is to be judged. This approach to the evaluation of systems has been adopted in the past, as reported by Black, Garside, and Leech (1993), for instance, and in the Message Understanding Conferences in the United States (Aone and Bennett 1994). The benefits of the approach are so evident that the establishment of such testbed corpora is bound to become increasingly common in the very near future.
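
Once a testbed fixes the texts and the target analysis, scoring reduces to comparing a system's output with the agreed annotation. The sketch below assumes constituents are represented as (label, start, end) spans over word positions and reports labelled-bracket precision, recall and F1, measures of the kind commonly used in parser evaluation. It is not a reimplementation of any particular evaluation campaign's scorer, and the spans are invented.

```python
def bracket_scores(gold, predicted):
    """Labelled-bracket precision, recall and F1 over constituent sets."""
    matched = len(gold & predicted)
    precision = matched / len(predicted) if predicted else 0.0
    recall = matched / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if matched else 0.0
    return precision, recall, f1


# Agreed testbed annotation for a five-word sentence (invented spans).
gold = {("S", 0, 5), ("NP", 0, 2), ("VP", 2, 5), ("NP", 3, 5)}
# One system's output for the same sentence.
predicted = {("S", 0, 5), ("NP", 0, 2), ("VP", 1, 5)}

precision, recall, f1 = bracket_scores(gold, predicted)
```

Because every system is scored against the same spans, the resulting figures are directly comparable, which is the practical benefit of an agreed testbed.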

One final activity which annotated corpora allow is worthy of some coverage here. It is true that, at the moment, the range of annotations available is wider than the range of annotations which a computer can introduce with a high degree of accuracy. Yet by using the annotations present in a hand-annotated corpus, we obtain a resource that permits a computer, over the scope of the annotated corpus only, to act as if it could perform the analysis in question. In short, if we have a manually produced treebank, a computer can read the treebank and discover where the marked constituents are, rather than having to work them out for itself. The advantages of this are limited yet clear. Such a use of an annotated corpus may provide an economical means of evaluating whether the development of a certain NLP application is worthwhile—if somebody posits that a parser of newspaper stories would be of use in some application, then by using a treebank of newspaper stories they can test the worth of their claim without actually producing a parser.
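
The idea of a computer acting as if it could parse, but only within the scope of a treebank, can be sketched as a lookup that stands in for a parser. The class name, the whitespace tokenization and the two newspaper-style sentences are hypothetical; the point is that a downstream experiment can call `parse` as though a parser existed, and `coverage` shows how far that illusion extends over a given set of sentences.

```python
class TreebankOracle:
    """Stands in for a parser by looking sentences up in a treebank.
    It can only 'parse' sentences that the treebank already contains."""

    def __init__(self, treebank):
        # treebank: iterable of (sentence, bracketed tree) pairs
        self._trees = {tuple(sentence.split()): tree for sentence, tree in treebank}

    def parse(self, sentence):
        """Return the stored tree, or None outside the treebank's scope."""
        return self._trees.get(tuple(sentence.split()))

    def coverage(self, sentences):
        """Share of the given sentences that the oracle can 'parse'."""
        return sum(self.parse(s) is not None for s in sentences) / len(sentences)


treebank = [
    ("Shares fell sharply",
     "(S (NP (NNS Shares)) (VP (VBD fell) (ADVP (RB sharply))))"),
    ("The minister resigned",
     "(S (NP (DT The) (NN minister)) (VP (VBD resigned)))"),
]
oracle = TreebankOracle(treebank)
```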

There are further uses of annotated corpora in NLP beyond those covered here. The range of uses covered, however, is more than sufficient to illustrate that annotated corpora, while justifiable on philosophical grounds, are more than justified on practical grounds.

24.7 Conclusion

Corpora have played a useful role in the development of human language technology to date. In return, corpus linguistics has gained access to ever more sophisticated language processing systems. There is no reason to believe that this happy symbiosis will not continue—to the benefit of language engineers and corpus linguists alike—in the future.

Further Reading and Relevant Resources

There are now a number of introductions to corpus linguistics, each of which takes a slightly different view of the topic. McEnery and Wilson (2001) take the view closest to that presented in this chapter. Kennedy (1999) is concerned largely with English corpus linguistics and the use of corpora in language pedagogy. Stubbs (1997) is written entirely from the viewpoint of neo-Firthian approaches to corpus linguistics, while Biber, Conrad, and Reppen (1998) is concerned mainly with the multi-feature multi-dimension approach to analysing corpus data established in Biber (1988).

For those readers interested in corpus annotation, Garside, Leech, and McEnery (1997) provides a comprehensive overview of corpus annotation practices to date.

Many references in this chapter will lead to papers where specific corpora are discussed. The corpora listed here are simply those explicitly referenced in this chapter. For each corpus, a URL is given where further information can be found.

This list by no means represents the full range of corpora available. For a better idea of the range of corpora available visit the website of the European Language Resources Association ( http://www.icp.grenet.fr/ELRA/home.html ) or the Linguistic Data Consortium ( http://www.ldc.upenn.edu ).

British National Corpus: http://www.comp.lancs.ac.uk/computing/research/ucrel/bnc.html ; IBM/Lancaster Spoken English Corpus: http://midwich.reading.ac.uk/research/speechlab/marsec/marsec.html ; Leverhulme Corpus of Children's Writing: http://www.ling.lancs.ac.uk/monkey/lever/intro.htm ; Survey of English Dialects: http://www.xrefer.com/entry/444074 .

Aone, C. and S. W. Bennett. 1994. ‘Discourse tagging and discourse tagged multilingual corpora’. Proceedings of the International Workshop on Sharable Natural Language Resources (Nara), 71–7.

Baker, J. P. 1995. The Evaluation of Multiple Posteditors: Inter Rater Consistency in Correcting Automatically Tagged Data. Unit for Computer Research on the English Language Technical Papers 7, Lancaster University.

—— 1997. ‘Consistency and accuracy in correcting automatically-tagged corpora’. In Garside, Leech, and McEnery (1997), 243–50.

Biber, D. 1988. Variation across Speech and Writing. Cambridge: Cambridge University Press.

—— S. Conrad, and R. Reppen. 1998. Corpus Linguistics: Investigating Language Structure and Use. Cambridge: Cambridge University Press.

Black, E., R. Garside, and G. Leech. 1993. Statistically Driven Computer Grammars of English: The IBM/Lancaster Approach. Amsterdam: Rodopi.

Botley, S. and A. M. McEnery (eds.). 2000. Discourse Anaphora and Resolution. Studies in Corpus Linguistics. Amsterdam: John Benjamins.

Botley, S., A. M. McEnery, and A. Wilson (eds.). 2000. Multilingual Corpora in Teaching and Research. Amsterdam: Rodopi.

Bussa, R. 1980. ‘The annals of humanities computing: the Index Thomisticus’. Computers and the Humanities, 14, 83–90.

Church, K. 1988. ‘A stochastic parts program and noun phrase parser for unrestricted texts’. Proceedings of the 2nd Annual Conference on Applied Natural Language Processing (Austin, Tex.), 136–48.

Daille, B. 1995. Combined Approach for Terminology Extraction: Lexical Statistics and Linguistic Filtering. Unit for Computer Research on the English Language Technical Papers 5, Lancaster University.

Francis, W. 1979. ‘Problems of assembling, describing and computerizing large corpora’. In H. Bergenholtz and B. Schader (eds.), Empirische Textwissenschaft: Aufbau und Auswertung von Text Corpora. Königstein: Scripter Verlag, 110–23.

Gaizauskas, R., T. Wakao, K. Humphreys, H. Cunningham, and Y. Wilks. 1995. ‘Description of the LaSIE System as used for MUC-6’. Proceedings of the 6th Message Understanding Conference (MUC-6) (San Jose, Calif.), 207–20.

Garside, R., G. Leech, and A. M. McEnery. 1997. Corpus Annotation. London: Longman.

—— and G. Sampson. 1987. The Computational Analysis of English. London: Longman.

Gausier, E. 1995. Modèles statistiques et patrons morphosyntaxiques pour l'extraction de lexiques bilingues. Ph.D. thesis, University of Paris VII.

Juilland, A. and E. Chang-Rodriguez. 1964. Frequency Dictionary of Spanish Words. The Hague: Mouton.

Kaeding, J. 1897. Häufigkeitswörterbuch der deutschen Sprache. Steglitz: published by the author.

Kennedy, G. 1999. Corpus Linguistics. London: Longman.

Leech, G. 1997. ‘Introducing corpus annotation’. In Garside, Leech, and McEnery (1997), 1–18.

LREC 2000. Proceedings of the 2nd International Conference on Language Resources and Evaluation (Athens).

McEnery, A. M. and M. P. Oakes. 1996. ‘Sentence and word alignment in the CRATER project: methods and assessment’. In J. Thomas and M. Short (eds.), Using Corpora for Language Research. London: Longman, 211–31.

—— and A. Wilson. 1996. Corpus Linguistics. Edinburgh: Edinburgh University Press.

—— 2001. Corpus Linguistics, 2nd edn. Edinburgh: Edinburgh University Press.

——, F. Sanchez-Leon, and A. Nieto-Serano. 1997. ‘Multilingual resources for European languages: contributions of the CRATER project’. Literary and Linguistic Computing, 12(4), 219–26.

Marcus, M., B. Santorini, and M. Marcinkiewicz. 1993. ‘Building a large annotated corpus of English: the Penn Treebank’. Computational Linguistics, 19(2), 313–30.

Mitkov, R. 2002. Anaphora Resolution. London: Longman.

Nagao, M. 1984. ‘A framework of a mechanical translation between Japanese and English by analogy principle’. In A. Elithorn and R. Banerji (eds.), Artificial and Human Intelligence. Brussels: NATO Publications, 173–80.

Ng, H. T. and H. B. Lee. 1996. ‘Integrating multiple knowledge sources to disambiguate word sense: an exemplar-based approach’. Proceedings of the 34th Annual Meeting of the Association for Computational Linguistics (Santa Cruz, Calif.), 40–7.

Quirk, R. 1960. ‘Towards a description of English usage’. Transactions of the Philological Society, 40–61.

Roach, P. and S. Arnfield. 1995. ‘Linking prosodic transcription to the time dimension’. In G. Leech, G. Myers, and J. Thomas (eds.), Spoken English on Computer: Transcription, Mark-up and Applications. London: Longman, 149–60.

Sampson, G. 1995. English for the Computer: The SUSANNE Corpus and Analytic Scheme. Oxford: Clarendon Press.

Schmied, J. and B. Fink. 2000. ‘Corpus-based contrastive lexicology: the case of English with and its German translation equivalents’. In Botley, McEnery, and Wilson (eds.), 157–76.

Short, M., J. Culpeper, and E. Semino. 1996. ‘Using a corpus for stylistics research: speech presentation’. In J. Thomas and M. Short (eds.), Using Corpora for Language Research. London: Longman.

Sinclair, J. 1991. Corpus, Concordance, Collocation. Oxford: Oxford University Press.

—— 1992. ‘The automatic analysis of text corpora’. In J. Svartvik (ed.), Directions in Corpus Linguistics: Proceedings of the Nobel Symposium 82, Stockholm. The Hague: Mouton, 379–97.

Sperberg-McQueen, C. M. and L. Burnard. 1993. Guidelines for Electronic Text Encoding and Interchange. Chicago: Text Encoding Initiative.

Stiles, W. B. 1992. Describing Talk. New York: Sage.

Stubbs, M. 1997. Texts and Corpus Analysis. Oxford: Blackwell.

Svartvik, J. and R. Quirk. 1980. The London-Lund Corpus of Spoken English. Lund: Lund University Press.

Véronis, J. 2000. Parallel Text Processing. Dordrecht: Kluwer.

Voutilainen, A. and T. Järvinen. 1995. ‘Specifying a shallow grammatical representation for parsing purposes’. Proceedings of the 7th Conference of the European Chapter of the Association for Computational Linguistics (EACL'95) (Dublin), 210–14.

Wilson, A. and J. Thomas. 1997. ‘Semantic annotation’. In Garside, Leech, and McEnery (1997), 53–65.

Details of all corpora mentioned in this chapter are given in ‘Further Reading and Relevant Resources’ below.

Some audio material for the BNC spoken corpus is available. Indeed, the entire set of recordings is lodged in the National Sound Archive in the UK. However, the recordings are not available for general use beyond the archive, and the sound files have not been time-aligned against their transcriptions.

One can, as will be seen later, transcribe speech using a phonemic transcription and annotate the transcription to show features such as stress, pitch, and intonation. Nonetheless, the original recording will almost certainly contain information that is lost in transcription. Crucially, transcription and annotation also impose an analysis on the data. For both reasons, there would still be a need to consult the sound recording.

There is another organizing principle upon which some corpora have been constructed, which emphasizes continued text collection through time with less of a focus on the features of corpus design outlined here. These corpora, called monitor corpora, are not numerous, but have been influential and are useful for diachronic studies of linguistic features which may change rapidly, such as lexis. Some, such as the Bank of English, are very large and used for a range of purposes. Readers interested in exploring the monitor corpus further are referred to Sinclair (1991).

Note that there are exceptions to this general description. Multi-word units may be placed in a many-to-one relationship with a morphosyntactic tag. Similarly, enclitics in a text may force certain words to be placed in a one-to-many relationship with morphosyntactic annotations.
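The word-to-tag relationships described in this note can be made concrete with a minimal Python sketch. The sentence, the TaggedUnit class and the relation helper are hypothetical. The tag strings are CLAWS-style labels chosen only for illustration, not output from an actual tagger. A multi-word unit such as 'in spite of' carries a single tag (many-to-one). The enclitic in 'don't' makes one orthographic word carry two tags (one-to-many).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TaggedUnit:
    words: List[str]  # orthographic words covered by this unit
    tags: List[str]   # morphosyntactic tags assigned to those words


def relation(unit: TaggedUnit) -> str:
    """Classify how the unit's orthographic words map onto its tags."""
    n_words, n_tags = len(unit.words), len(unit.tags)
    if n_words == 1 and n_tags == 1:
        return "one-to-one"
    if n_words > 1 and n_tags == 1:
        return "many-to-one"
    if n_words == 1 and n_tags > 1:
        return "one-to-many"
    return "many-to-many"


# Hypothetical annotated sentence; CLAWS-style tag strings for illustration only.
sentence = [
    TaggedUnit(["We"], ["PPIS2"]),
    TaggedUnit(["don't"], ["VD0", "XX"]),       # enclitic n't receives its own tag
    TaggedUnit(["stop"], ["VV0"]),
    TaggedUnit(["in", "spite", "of"], ["II"]),  # multi-word unit shares one tag
    TaggedUnit(["the"], ["AT"]),
    TaggedUnit(["rain"], ["NN1"]),
]

for unit in sentence:
    print(f"{' '.join(unit.words):<12} {'+'.join(unit.tags):<8} {relation(unit)}")
```

In this sketch the sentence has eight orthographic words but only seven tags, because the exceptions pull the counts apart in opposite directions. A real tagger's output format would differ.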

This assumes that suitable, preferably annotation-aware, retrieval software is available.

See McEnery et al. (1997) for an account of a project which produced reliable English, French, and Spanish lemmatization and part-of-speech tagging.

Also see http://www.ling.lancs.ac.uk/monkey/ihe/linguistics/corpus2/2fral.htm for some on-line examples of corpus annotation.


Natural Language Annotation for Machine Learning by James Pustejovsky, Amber Stubbs


Chapter 1. The Basics

It seems as though every day there are new and exciting problems that people have taught computers to solve, from winning at chess or Jeopardy to finding shortest-path driving directions. But there are still many tasks that computers cannot perform, particularly in the realm of understanding human language. Statistical methods have proven to be an effective way to approach these problems. However, machine learning (ML) techniques often work better when the algorithms are given pointers to what is relevant about a dataset, rather than just massive amounts of data. When discussing natural language, these pointers often come in the form of annotations: metadata that provides additional information about the text. To teach a computer effectively, it's important to give it the right data, and enough of it to learn from. The purpose of this book is to provide you with the tools to create good data for your own ML task. In this chapter we will cover:

Why annotation is an important tool for linguists and computer scientists alike

How corpus linguistics became the field that it is today

The different areas of linguistics and how they relate to annotation and ML tasks

What a corpus is, and what makes a corpus balanced

How some classic ML problems are represented with annotations

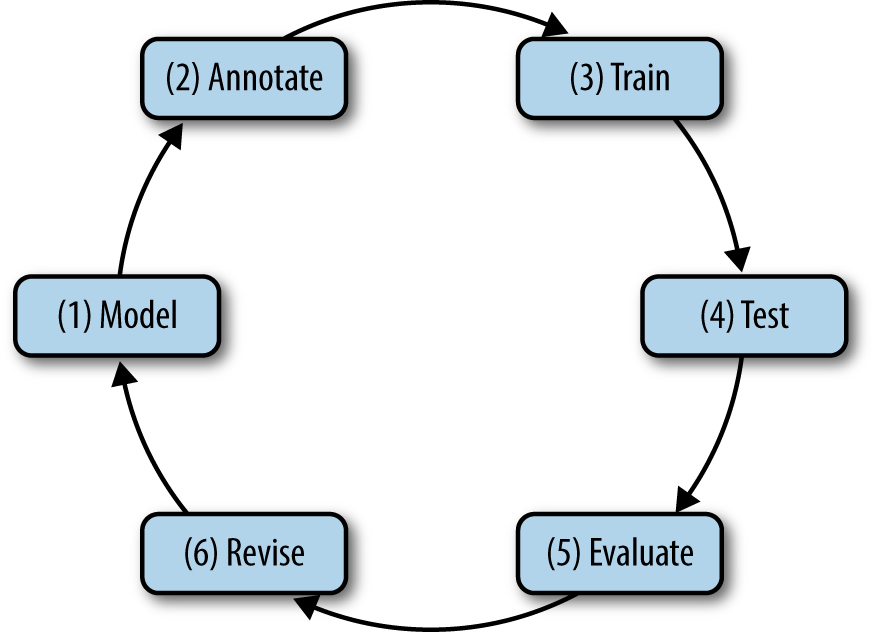

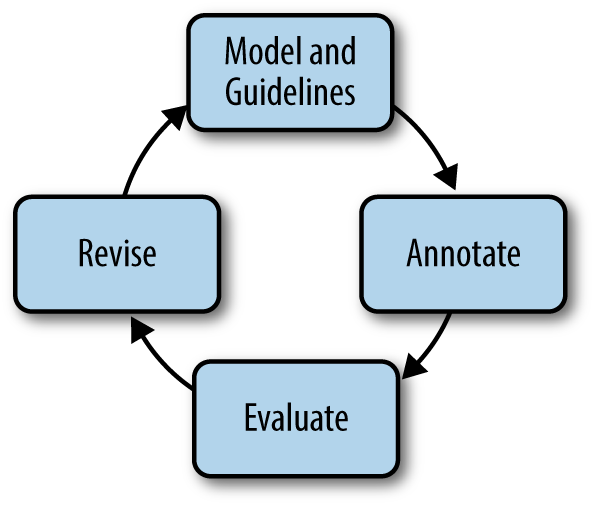

The basics of the annotation development cycle

The Importance of Language Annotation

Everyone knows that the Internet is an amazing resource for all sorts of information that can teach you just about anything: juggling, programming, playing an instrument, and so on. However, there is another layer of information that the Internet contains, and that is how all those lessons (and blogs, forums, tweets, etc.) are being communicated. The Web contains information in all forms of media—including texts, images, movies, and sounds—and language is the communication medium that allows people to understand the content, and to link the content to other media. However, while computers are excellent at delivering this information to interested users, they are much less adept at understanding language itself.

Theoretical and computational linguistics are focused on unraveling the deeper nature of language and capturing the computational properties of linguistic structures. Human language technologies (HLTs) attempt to adopt these insights and algorithms and turn them into functioning, high-performance programs that can change the ways we interact with computers using language. With more and more people using the Internet every day, the amount of linguistic data available to researchers has increased significantly. This allows linguistic modeling problems to be treated as ML tasks, rather than being limited to the relatively small amounts of data that humans can process on their own.

However, it is not enough to simply provide a computer with a large amount of data and expect it to learn to speak. The data has to be prepared so that the computer can more easily find patterns and draw inferences. This is usually done by adding relevant metadata to a dataset. Any metadata tag used to mark up elements of the dataset is called an annotation over the input. For the algorithms to learn efficiently and effectively, the annotation must be accurate and relevant to the task the machine is being asked to perform. For this reason, the discipline of language annotation is a critical link in developing intelligent human language technologies.
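One common way to keep annotations separate from the text they describe is standoff annotation. In this approach each tag records character offsets into a source string that is never modified. The minimal Python sketch below works under that assumption. The Annotation class, the annotate helper, the example sentence and the PERSON/LOCATION/DATE labels are all hypothetical. None of them is a format prescribed in this chapter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Annotation:
    start: int  # character offset where the annotated span begins
    end: int    # character offset just past the end of the span
    label: str  # metadata tag attached to the span


def annotate(text: str, phrase: str, label: str) -> Annotation:
    """Tag the first occurrence of phrase in text; raises ValueError if absent."""
    start = text.index(phrase)
    return Annotation(start, start + len(phrase), label)


text = "Ana taught Ben to juggle in Madrid last May."

# Hypothetical label set; the source text itself is never modified.
anns = [
    annotate(text, "Ana", "PERSON"),
    annotate(text, "Ben", "PERSON"),
    annotate(text, "Madrid", "LOCATION"),
    annotate(text, "last May", "DATE"),
]

for a in anns:
    print(a.label, a.start, a.end, repr(text[a.start:a.end]))
```

The annotations sit alongside the text rather than inside it. As a result, several independent layers, such as part-of-speech tags and named entities, could be stored over the same string. Inline markup such as XML tags is the usual alternative.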

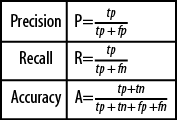

Giving an ML algorithm too much information can slow it down and lead to inaccurate results. It can also leave the algorithm so closely molded to the training data that it becomes “overfit” and performs less accurately on new data than it otherwise would. It's important to think carefully about what you are trying to accomplish, and what information is most relevant to that goal. Later in the book we will give examples of how to find that information, and how to determine how well your algorithm is performing at the task you've set for it.

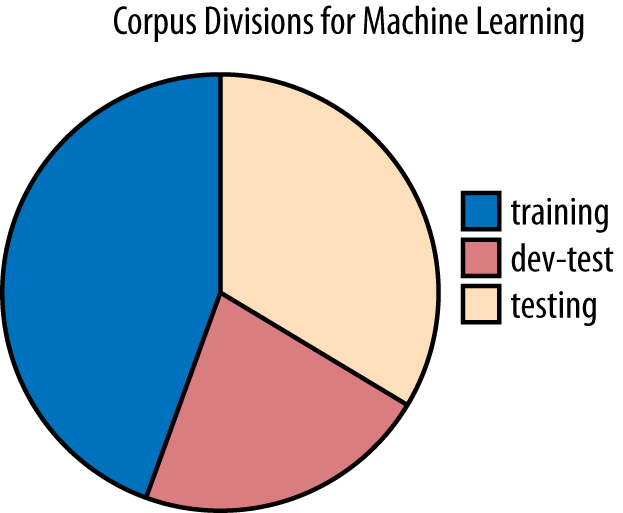

Datasets of natural language are referred to as corpora, and a single set of data annotated with the same specification is called an annotated corpus. Annotated corpora can be used to train ML algorithms. In this chapter we will define what a corpus is, explain what is meant by an annotation, and describe the methodology used for enriching a linguistic data collection with annotations for machine learning.

The Layers of Linguistic Description